The document discusses the shift toward multi-cloud environments, with growing emphasis on private clouds built on OpenStack and Kubernetes. The slides below walk through running Kubernetes on OpenStack. They cover preparing Octavia (load-balancer network, security group, flavor, certificates, and amphora image) and configuring the external bridge and routes. They then create Nova flavors and Ceph-backed Cinder boot volumes for the cluster VMs and deploy Kubernetes from an Ansible inventory with the external OpenStack cloud provider and Calico networking. Finally, they provision persistent volumes through the Cinder CSI driver and expose workloads through Octavia-backed LoadBalancer Services and an Octavia Ingress.

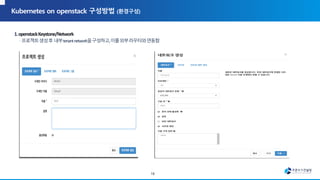

![(virtenv) [root@test5 openstack-deploy-train-test]# openstack network create octavia-network

(virtenv) [root@test5 openstack-deploy-train-test]# openstack subnet create --network octavia-network --subnet-range xx.xx.4.0/24 octavia-subnet1

(virtenv) [root@test5 openstack-deploy-train-test]# openstack router add subnet osc-demo-router octavia-subnet1

(virtenv) [root@test5 openstack-deploy-train-test]# openstack security group create octavia-sg

(virtenv) [root@test5 openstack-deploy-train-test]# openstack security group rule create --dst-port 9443 --ingress --protocol tcp octavia-sg

(virtenv) [root@test5 openstack-deploy-train-test]# openstack flavor create --id 100 --vcpus 4 --ram 4096 octavia-flavor

(virtenv) [root@test5 octavia]# source create_single_CA_intermediate_CA.sh

################# Verifying the Octavia files ###########################

etc/octavia/certs/client.cert-and-key.pem: OK

etc/octavia/certs/server_ca.cert.pem: OK

!!!!!!!!!!!!!!!Do not use this script for deployments!!!!!!!!!!!!!

Please use the Octavia Certificate Configuration guide:

https://docs.openstack.org/octavia/latest/admin/guides/certificates.html

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

12](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-12-320.jpg)
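The Octavia preparation above is a fixed sequence of `openstack` calls, so it can easily be scripted. The minimal sketch below only assembles the same commands as argument lists and prints them. Subcommands and options are lowercase because the CLI is case-sensitive. The commands execute only when `execute=True` is passed. The helper names `octavia_prereq_commands` and `run` are hypothetical, and the resource names and flags are copied from the session. The subnet range must be supplied in place of the anonymized `xx.xx.4.0/24`.

```python
import shlex
import subprocess


def octavia_prereq_commands(cidr: str) -> list[list[str]]:
    """Return the Octavia preparation commands from the session, in order (hypothetical helper)."""
    return [
        ["openstack", "network", "create", "octavia-network"],
        ["openstack", "subnet", "create", "--network", "octavia-network",
         "--subnet-range", cidr, "octavia-subnet1"],
        ["openstack", "router", "add", "subnet", "osc-demo-router", "octavia-subnet1"],
        ["openstack", "security", "group", "create", "octavia-sg"],
        ["openstack", "security", "group", "rule", "create",
         "--dst-port", "9443", "--ingress", "--protocol", "tcp", "octavia-sg"],
        ["openstack", "flavor", "create", "--id", "100",
         "--vcpus", "4", "--ram", "4096", "octavia-flavor"],
    ]


def run(commands: list[list[str]], execute: bool = False) -> None:
    """Print each command; run it only when execute=True, stopping at the first failure."""
    for argv in commands:
        print(shlex.join(argv))
        if execute:
            subprocess.run(argv, check=True)
```

For a dry run, call `run(octavia_prereq_commands("<management subnet CIDR>"))`. The 9443/tcp rule corresponds to the default port of the Octavia amphora agent, which the controllers use to manage each amphora. If a deployment changes that port, the rule must change with it.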

![(virtenv) [root@test5 ~]# openstack image create --property hw_scsi_model=virtio-scsi --property hw_disk_bus=scsi --tag amphora --file packages/octavia/amphora-x64-haproxy-ubuntu.raw --disk-format raw amphora-image

(virtenv) [root@test5 openstack-deploy-train-test]# kolla-ansible deploy -i /etc/kolla/multinode

(virtenv) [root@test5 ~]# openstack keypair create --public-key /root/.ssh/id_rsa.pub octavia_ssh_key

/etc/sysconfig/network-scripts/ifcfg-br-ex

DEVICE=br0

ONBOOT=yes

TYPE=OVSBridge

BOOTPROTO=none

NAME=br-ex

DEVICE=br-ex

ONBOOT=yes

IPADDR=<external service network IP>

PREFIX=24

MTU=9000

VLAN=yes

13](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-13-320.jpg)
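As captured, the `ifcfg-br-ex` listing assigns `DEVICE` twice (`br0`, then `br-ex`) and `ONBOOT` twice. This may mean that two files were merged on the slide. The network scripts read these files as shell variable assignments, so a later line silently overrides an earlier one. The following is a minimal sketch that reports the effective values and any repeated keys. It assumes simple `KEY=VALUE` lines that are unquoted or double-quoted, and the function name `parse_ifcfg` is hypothetical.

```python
def parse_ifcfg(text: str) -> tuple[dict[str, str], list[str]]:
    """Parse simple KEY=VALUE lines; return (effective values, keys assigned more than once)."""
    values: dict[str, str] = {}
    duplicates: list[str] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments, and anything that is not an assignment
        key, _, value = line.partition("=")
        key, value = key.strip(), value.strip().strip('"')
        if key in values and key not in duplicates:
            duplicates.append(key)
        values[key] = value  # the later assignment wins, as when the file is sourced by a shell
    return values, duplicates


sample = "DEVICE=br0\nONBOOT=yes\nTYPE=OVSBridge\nNAME=br-ex\nDEVICE=br-ex\nONBOOT=yes\nMTU=9000\n"
values, duplicates = parse_ifcfg(sample)
print(duplicates)  # ['DEVICE', 'ONBOOT']
```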

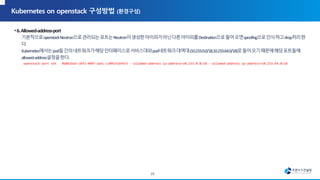

![[nova]

availability_zone= ssss

[root@test8 ~]# cat /etc/sysconfig/network-scripts/route-br-ex

xx.xx.4.0/24 via [external service IP] dev br-ex

14](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-14-320.jpg)
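The `route-br-ex` entry sends traffic for the Octavia management subnet created earlier (`xx.xx.4.0/24`, `octavia-subnet1`) out of `br-ex` toward the external service IP. The likely purpose is to let the controller host reach the amphorae. The sketch below uses Python's standard `ipaddress` module to build a line in the same `<destination> via <gateway> dev <device>` form and to reject obviously wrong input. `route_line` is a hypothetical helper, and RFC 5737 documentation addresses stand in for the anonymized values.

```python
import ipaddress


def route_line(destination: str, gateway: str, device: str = "br-ex") -> str:
    """Build one route-<device> line in the '<net> via <gw> dev <device>' form shown above."""
    net = ipaddress.ip_network(destination)  # ValueError on a malformed CIDR or host bits set
    gw = ipaddress.ip_address(gateway)       # ValueError on a malformed address
    if gw in net:
        raise ValueError(f"gateway {gw} lies inside the destination {net}")
    return f"{net} via {gw} dev {device}"


print(route_line("192.0.2.0/24", "198.51.100.10"))  # 192.0.2.0/24 via 198.51.100.10 dev br-ex
```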

![(virtenv) [root@test5 data]# openstack flavor create --disk 200 --ram 117760 --vcpus 18 worker-flavor

| Field | Value |
|---|---|
| OS-FLV-DISABLED:disabled | False |
| OS-FLV-EXT-DATA:ephemeral | 0 |
| disk | 200 |
| id | 8ffead97-9ff7-4627-83e9-8dd59d4db698 |
| name | worker-flavor |
| os-flavor-access:is_public | True |
| properties | |
| ram | 117760 |
| rxtx_factor | 1.0 |
| swap | |
| vcpus | 18 |

(virtenv) [root@test5 data]# openstack flavor create --disk 200 --ram 32768 --vcpus 8 master-flavor

| Field | Value |
|---|---|
| OS-FLV-DISABLED:disabled | False |
| OS-FLV-EXT-DATA:ephemeral | 0 |
| disk | 200 |
| id | 93ed4569-244d-4121-981d-b5f97d7f46ad |
| name | master-flavor |
| os-flavor-access:is_public | True |
| properties | |
| ram | 32768 |
| rxtx_factor | 1.0 |
| swap | |
| vcpus | 8 |

19](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-19-320.jpg)
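Nova takes `--ram` in MB. Dividing by 1024 gives the more familiar GiB figures for the two flavors above, and the sketch also expresses them per vCPU. `flavor_summary` is a hypothetical helper.

```python
def flavor_summary(ram_mb: int, vcpus: int, disk_gb: int) -> dict[str, float]:
    """Summarize a flavor: RAM in GiB (MB / 1024), RAM per vCPU, and root disk in GB."""
    ram_gib = ram_mb / 1024
    return {
        "ram_gib": ram_gib,
        "ram_gib_per_vcpu": round(ram_gib / vcpus, 2),
        "disk_gb": float(disk_gb),
    }


worker = flavor_summary(117760, 18, 200)  # worker-flavor
master = flavor_summary(32768, 8, 200)    # master-flavor
print(worker)  # {'ram_gib': 115.0, 'ram_gib_per_vcpu': 6.39, 'disk_gb': 200.0}
print(master)  # {'ram_gib': 32.0, 'ram_gib_per_vcpu': 4.0, 'disk_gb': 200.0}
```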

![(virtenv) [root@test5 data]# openstack volume create --size 200 --image centos7-x86-64-2003 --type ceph-ssd --bootable master01-volume

(virtenv) [root@test5 data]# openstack volume create --size 200 --image centos7-x86-64-2003 --type ceph-hdd --bootable test01-volume

(virtenv) [root@test5 data]# openstack server create --volume test01-volume --security-group sg --flavor worker-flavor --key-name test5-keypair --nic net-id=04f9ad30-b2ab9-b013-d5298de69116,v4-fixed-ip=XX.XX.XX.XX test01

20](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-20-320.jpg)
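Every master and worker repeats the same two steps: create a bootable Ceph-backed volume, then create a server that boots from it with a fixed IP. The sketch below builds both commands for one node. `node_commands` is hypothetical. The defaults (`sg`, `test5-keypair`, 200 GB) come from the session, while `NET_ID` and the address are placeholders. In practice, the volume should reach the `available` state before `server create` refers to it.

```python
import shlex


def node_commands(name: str, image: str, volume_type: str, flavor: str,
                  net_id: str, fixed_ip: str, size_gb: int = 200,
                  security_group: str = "sg",
                  key_name: str = "test5-keypair") -> list[list[str]]:
    """Return [volume create, server create] argument lists for one node (hypothetical helper)."""
    volume = f"{name}-volume"  # same naming pattern as master01-volume / test01-volume
    return [
        ["openstack", "volume", "create", "--size", str(size_gb), "--image", image,
         "--type", volume_type, "--bootable", volume],
        ["openstack", "server", "create", "--volume", volume,
         "--security-group", security_group, "--flavor", flavor, "--key-name", key_name,
         "--nic", f"net-id={net_id},v4-fixed-ip={fixed_ip}", name],
    ]


# Example: one master on SSD-backed storage (placeholder network ID and address).
for argv in node_commands("master01", "centos7-x86-64-2003", "ceph-ssd",
                          "master-flavor", "NET_ID", "192.0.2.11"):
    print(shlex.join(argv))
```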

![(virtenv) [root@test5 data]# nova list

| ID | Name | Status | Task State | Power State | Networks |
|---|---|---|---|---|---|
| a436192e-664e-47e3-82b7-93c3055b268f | a-test01 | ACTIVE | - | Running | test--network=xx.xx.10.41 |
| 3beab3b0-95e8-4903-9f11-423bb65ca47c | a-test02 | ACTIVE | - | Running | test--network=xx.xx.10.42 |
| 79fe665a-544c-4cc9-aa8f-35bca9cb06ac | a-test03 | ACTIVE | - | Running | test--network=xx.xx.10.43 |
| 1e86268d-cae1-434d-a26e-ef09ffcdee9b | a-test04 | ACTIVE | - | Running | test--network=xx.xx.10.44 |
| 55e50216-0bd3-479d-b66f-ee235fc8a736 | a-test05 | ACTIVE | - | Running | test--network=xx.xx.10.45 |
| c7c8c10a-d4a2-4a03-857d-c60dec95798c | a-test06 | ACTIVE | - | Running | test--network=xx.xx.10.46 |
| 3790eab3-bb85-49f6-99b4-464db1a13d86 | a-test07 | ACTIVE | - | Running | test--network=xx.xx.10.47 |
| 3f7aa9e1-75aa-4118-b586-79104e4a1302 | a-test08 | ACTIVE | - | Running | test--network=xx.xx.10.48 |
| d8f5333b-46d7-4aea-b708-8e9b1fa30dfd | a-test09 | ACTIVE | - | Running | test--network=xx.xx.10.49 |
| 4e9b7eb5-f175-4f99-89e4-c8059ef13240 | a-test10 | ACTIVE | - | Running | test--network=xx.xx.10.50 |
| d43f4c6f-7493-45a2-b804-070a40adfa41 | test--master01 | ACTIVE | - | Running | test--network=xx.xx.10.11 |
| 347c7726-d9e7-4e06-a5fe-3be1f3beae41 | test--master02 | ACTIVE | - | Running | test--network=xx.xx.10.12 |
| 93b9b9ae-d415-4b9b-8710-37611a89462a | test--master03 | ACTIVE | - | Running | test--network=xx.xx.10.13 |
| fe5fdbd6-7949-4dd4-869c-1187e9ae2aec | test01 | ACTIVE | - | Running | test--network=xx.xx.10.21 |
| 507123ed-7d61-41b7-8deb-b0936a9e8cdd | test02 | ACTIVE | - | Running | test--network=xx.xx.10.22 |
| e8fc684f-d26d-4aa3-a1b0-7cf22f586f14 | test03 | ACTIVE | - | Running | test--network=xx.xx.10.23 |
| 7357d45e-e6c6-41e8-b67e-c6ae4e2d3a68 | test04 | ACTIVE | - | Running | test--network=xx.xx.10.24 |
| 5d2ca83f-1064-41c7-b79c-8820d6f25d09 | test05 | ACTIVE | - | Running | test--network=xx.xx.10.25 |
| 2e6ab299-f3c1-4c57-9d29-974ca2227211 | test06 | ACTIVE | - | Running | test--network=xx.xx.10.26 |
| eee6c54a-4948-4b77-ac43-e2e490fa5d04 | test07 | ACTIVE | - | Running | test--network=xx.xx.10.27 |
| 85ea1490-bf06-42c4-85f3-0c8dc8fd4000 | test08 | ACTIVE | - | Running | test--network=xx.xx.10.28 |
| 81d41852-a73b-4286-8f3b-c843b499a6d5 | test09 | ACTIVE | - | Running | test--network=xx.xx.10.29 |
| 396a7f69-952e-4d0b-9dac-e9680cc8912e | test10 | ACTIVE | - | Running | test--network=xx.xx.10.30 |
| 15648b26-3307-42ad-b9c5-425ca420dc8a | test11 | ACTIVE | - | Running | test--network=xx.xx.10.31 |
| f11161a1-722e-49df-b06f-f4573562f4cb | test12 | ACTIVE | - | Running | test--network=xx.xx.10.32 |

21](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-21-320.jpg)
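The `Networks` column holds each VM's fixed address, which is exactly what the Ansible inventory needs. The sketch below turns the CLI's ASCII table into dictionaries and extracts name-to-address pairs, assuming a single address per server. `parse_cli_table` and `fixed_ips` are hypothetical helpers. For scripting, `openstack server list -f json` is usually a sturdier source than scraping the table.

```python
def parse_cli_table(text: str) -> list[dict[str, str]]:
    """Parse an ASCII table printed by the nova/openstack CLI into one dict per row."""
    def cells(line: str) -> list[str]:
        return [cell.strip() for cell in line.strip().strip("|").split("|")]

    rows = [line for line in text.splitlines() if line.strip().startswith("|")]
    if not rows:
        return []
    header = cells(rows[0])  # the first '|' row is the header; '+---' borders are skipped
    return [dict(zip(header, cells(row))) for row in rows[1:]]


def fixed_ips(rows: list[dict[str, str]]) -> dict[str, str]:
    """Map server name to the address after '=' in 'network=address' (one address assumed)."""
    return {row["Name"]: row["Networks"].split("=", 1)[1] for row in rows}
```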

![inventory/hosts


[master]

test-master01 ansible_host=xx.xx.10.11 api_address=xx.xx.10.11

test-master02 ansible_host=xx.xx.10.12 api_address=xx.xx.10.12

test-master03 ansible_host=xx.xx.10.13 api_address=xx.xx.10.13

[worker]

test0-01 ansible_host=xx.xx.10.21 api_address=xx.xx.10.21

test0-02 ansible_host=xx.xx.10.22 api_address=xx.xx.10.22

test0-03 ansible_host=xx.xx.10.23 api_address=xx.xx.10.23

test0-04 ansible_host=xx.xx.10.24 api_address=xx.xx.10.24

test0-05 ansible_host=xx.xx.10.25 api_address=xx.xx.10.25

---

etcd_kubeadm_enabled: false

bin_dir: /usr/local/bin

loadbalancer_apiserver_port: 6443

loadbalancer_apiserver_healthcheck_port: 8081

upstream_dns_servers:

- xx.xx.xx.76

- xx.xx.xx.76

cloud_provider: external

external_cloud_provider: openstack](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-23-320.jpg)
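In the inventory, each VM from the server list goes into a group, with its fixed address repeated as `ansible_host` and `api_address`. The sketch below renders such groups from name-to-address mappings, for example those read from the server list. `inventory_ini` is hypothetical. It reproduces only the `[master]`/`[worker]` layout visible on the slide, and the full inventory may define further groups. The addresses are documentation placeholders.

```python
def inventory_ini(groups: dict[str, dict[str, str]]) -> str:
    """Render inventory groups as 'name ansible_host=IP api_address=IP' lines."""
    lines: list[str] = []
    for group, hosts in groups.items():
        lines.append(f"[{group}]")
        lines.extend(f"{name} ansible_host={ip} api_address={ip}" for name, ip in hosts.items())
        lines.append("")  # blank line between groups
    return "\n".join(lines)


print(inventory_ini({
    "master": {"test-master01": "192.0.2.11", "test-master02": "192.0.2.12"},
    "worker": {"test0-01": "192.0.2.21"},
}))
```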

![k8s-net-calico.yml

nat_outgoing: true

global_as_num: "64512"

calico_mtu: 8930

calico_datastore: "etcd"

calico_iptables_backend: "Legacy"

typha_enabled: false

typha_secure: false

calico_network_backend: bird

calico_ipip_mode: 'CrossSubnet'

calico_vxlan_mode: 'Never'

calico_ip_auto_method: "interface=eth0"

(virtenv) [root@test-master01 ~]# kubectl get node -o wide

| NAME | STATUS | ROLES | AGE | VERSION | INTERNAL-IP | EXTERNAL-IP | OS-IMAGE | KERNEL-VERSION | CONTAINER-RUNTIME |
|---|---|---|---|---|---|---|---|---|---|
| a-svc01 | Ready | <none> | 61m | v1.18.9 | xx.xx.10.41 | <none> | CentOS Linux 7 (Core) | 3.10.0-1127.el7.x86_64 | docker://19.3.13 |
| test-master01 | Ready | master | 62m | v1.18.9 | xx.xx.10.11 | <none> | CentOS Linux 7 (Core) | 3.10.0-1127.el7.x86_64 | docker://19.3.13 |
| test-master02 | Ready | master | 62m | v1.18.9 | xx.xx.10.12 | <none> | CentOS Linux 7 (Core) | 3.10.0-1127.el7.x86_64 | docker://19.3.13 |
| test-master03 | Ready | master | 62m | v1.18.9 | xx.xx.10.13 | <none> | CentOS Linux 7 (Core) | 3.10.0-1127.el7.x86_64 | docker://19.3.13 |

](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-24-320.jpg)
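The `calico_mtu: 8930` value is consistent with the budget below. This assumes the VMs sit on a Neutron VXLAN tenant network over an IPv4 underlay with a 9000-byte MTU (the value shown for `br-ex`), and that Calico encapsulates with IP-in-IP as `calico_ipip_mode` enables. The slides do not state the tenant network type, so the 50-byte VXLAN figure is an assumption.

```python
# Assumed per-packet overheads on an IPv4 underlay.
VXLAN_OVERHEAD = 20 + 8 + 8 + 14  # outer IPv4 + UDP + VXLAN header + inner Ethernet = 50
IPIP_OVERHEAD = 20                # one extra IPv4 header added by Calico IP-in-IP


def mtu_budget(underlay_mtu: int) -> tuple[int, int]:
    """Return (VM interface MTU, Calico pod MTU) for a given underlay MTU."""
    vm_mtu = underlay_mtu - VXLAN_OVERHEAD
    return vm_mtu, vm_mtu - IPIP_OVERHEAD


print(mtu_budget(9000))  # (8950, 8930)
```

With `CrossSubnet`, Calico only encapsulates traffic between nodes in different subnets. The pod MTU still has to fit the encapsulated case.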

![(virtenv) [root@test-master01 ~]# kubectl get pod -n kube-system

| NAME | READY | STATUS | RESTARTS | AGE |
|---|---|---|---|---|
| calico-kube-controllers-5df9d68dd6-sjgpr | 1/1 | Running | 0 | 59m |
| calico-node-5pqb4 | 1/1 | Running | 1 | 61m |
| coredns-d687dc8df-w84dw | 1/1 | Running | 0 | 59m |
| csi-cinder-controllerplugin-664b4964cf-mnbtg | 5/5 | Running | 0 | 58m |
| csi-cinder-nodeplugin-28d8b | 2/2 | Running | 0 | 8m6s |
| dns-autoscaler-6bb9b476-c5xsj | 1/1 | Running | 0 | 59m |
| kube-apiserver-test-master01 | 1/1 | Running | 0 | 64m |

](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-25-320.jpg)
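Before moving on to storage, it is worth confirming that every kube-system pod, including the Cinder CSI controller and node plugins, is fully ready. `kubectl wait --for=condition=Ready pod --all -n kube-system` checks this directly against the cluster. The sketch below runs the same check on saved `kubectl get pod` output and flags pods whose READY count is short or whose STATUS is not `Running`. `not_ready` is hypothetical, and pods of completed Jobs would also be flagged.

```python
def not_ready(kubectl_output: str) -> list[str]:
    """Return pod names whose READY column is not n/n or whose STATUS is not Running."""
    flagged: list[str] = []
    for line in kubectl_output.strip().splitlines()[1:]:  # skip the header row
        name, ready, status = line.split()[:3]
        up, total = (int(x) for x in ready.split("/"))
        if up != total or status != "Running":
            flagged.append(name)
    return flagged
```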

![(virtenv) [root@test--master01 ~]# kubectl get storageclass

| NAME | PROVISIONER | RECLAIMPOLICY | VOLUMEBINDINGMODE | ALLOWVOLUMEEXPANSION | AGE |
|---|---|---|---|---|---|
| cinder-csi | cinder.csi.openstack.org | Delete | WaitForFirstConsumer | false | 19h |
| cinder-csi-hdd | cinder.csi.openstack.org | Delete | WaitForFirstConsumer | false | 17h |
| cinder-csi-ssd | cinder.csi.openstack.org | Delete | WaitForFirstConsumer | false | 18h |

(virtenv) [root@test--master01 ~]# tee nginx-ssd.yml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: csi-pvc-cinderplugin-ssd
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: cinder-csi-ssd
# excerpt from the nginx-ssd Pod spec, which mounts the claim:
  volumes:
  - name: csi-data-cinderplugin
    persistentVolumeClaim:
      claimName: csi-pvc-cinderplugin-ssd
      readOnly: false

(virtenv) [root@test--master01 ~]# tee nginx-hdd.yml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: csi-pvc-cinderplugin-hdd
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: cinder-csi-hdd
# excerpt from the nginx-hdd Pod spec, which mounts the claim:
  volumes:
  - name: csi-data-cinderplugin
    persistentVolumeClaim:
      claimName: csi-pvc-cinderplugin-hdd
      readOnly: false

27](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-27-320.jpg)
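The SSD and HDD claims differ only in their name and storage class. The slides do not show the StorageClass definitions. Presumably, each class maps to the `ceph-ssd` or `ceph-hdd` Cinder volume type created earlier. Because the classes use `WaitForFirstConsumer`, a claim stays `Pending` until a Pod that uses it is scheduled, and only then is the Cinder volume created. A sketch that builds either claim and prints it as JSON, which `kubectl apply -f -` also accepts. `cinder_pvc` is hypothetical.

```python
import json


def cinder_pvc(tier: str, size: str = "1Gi") -> dict:
    """Build the PersistentVolumeClaim above for tier 'ssd' or 'hdd' (hypothetical helper)."""
    if tier not in ("ssd", "hdd"):
        raise ValueError(f"unknown tier: {tier}")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": f"csi-pvc-cinderplugin-{tier}"},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            "storageClassName": f"cinder-csi-{tier}",
        },
    }


print(json.dumps(cinder_pvc("ssd"), indent=2))
```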

![(virtenv) (virtenv) [root@test--master01 osc]# kubectl get pod

| NAME | READY | STATUS | RESTARTS | AGE |
|---|---|---|---|---|
| echoserver | 1/1 | Running | 0 | 13m |
| nginx-hdd | 1/1 | Running | 0 | 16s |
| nginx-ssd | 1/1 | Running | 0 | 20s |

(virtenv) (virtenv) [root@test--master01 osc]# kubectl get pv

| NAME | CAPACITY | ACCESS MODES | RECLAIM POLICY | STATUS | CLAIM | STORAGECLASS | REASON | AGE |
|---|---|---|---|---|---|---|---|---|
| pvc-6c5d5108-075e-4e7f-9935-eea3cb2a057a | 1Gi | RWO | Delete | Bound | default/csi-pvc-cinderplugin-ssd | cinder-csi-ssd | | 22s |
| pvc-a569676c-96f3-4a77-a92a-f6adf9e646cf | 1Gi | RWO | Delete | Bound | default/csi-pvc-cinderplugin-hdd | cinder-csi-hdd | | 19s |

(virtenv) (virtenv) [root@test--master01 osc]# kubectl get pvc

| NAME | STATUS | VOLUME | CAPACITY | ACCESS MODES | STORAGECLASS | AGE |
|---|---|---|---|---|---|---|
| csi-pvc-cinderplugin-hdd | Bound | pvc-a569676c-96f3-4a77-a92a-f6adf9e646cf | 1Gi | RWO | cinder-csi-hdd | 21s |
| csi-pvc-cinderplugin-ssd | Bound | pvc-6c5d5108-075e-4e7f-9935-eea3cb2a057a | 1Gi | RWO | cinder-csi-ssd | 25s |

28](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-28-320.jpg)
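Each claim above is bound to a dynamically provisioned PV named `pvc-<uid>`. The PV's `CLAIM` and the claim's `VOLUME` should point at each other, and both sides should agree on the storage class. A sketch of that cross-check over values transcribed from the output above. `check_bindings` is hypothetical.

```python
def check_bindings(pvcs: list[dict[str, str]], pvs: list[dict[str, str]]) -> list[str]:
    """Report claims that are not Bound, or whose PV points elsewhere or uses another class."""
    pv_by_name = {pv["name"]: pv for pv in pvs}
    problems: list[str] = []
    for pvc in pvcs:
        pv = pv_by_name.get(pvc["volume"])
        if pvc["status"] != "Bound" or pv is None:
            problems.append(f"{pvc['name']}: not bound")
        elif (pv["claim"].split("/", 1)[1] != pvc["name"]
              or pv["storageclass"] != pvc["storageclass"]):
            problems.append(f"{pvc['name']}: inconsistent with {pv['name']}")
    return problems


PVS = [
    {"name": "pvc-6c5d5108-075e-4e7f-9935-eea3cb2a057a",
     "claim": "default/csi-pvc-cinderplugin-ssd", "storageclass": "cinder-csi-ssd"},
    {"name": "pvc-a569676c-96f3-4a77-a92a-f6adf9e646cf",
     "claim": "default/csi-pvc-cinderplugin-hdd", "storageclass": "cinder-csi-hdd"},
]
PVCS = [
    {"name": "csi-pvc-cinderplugin-hdd", "status": "Bound",
     "volume": "pvc-a569676c-96f3-4a77-a92a-f6adf9e646cf", "storageclass": "cinder-csi-hdd"},
    {"name": "csi-pvc-cinderplugin-ssd", "status": "Bound",
     "volume": "pvc-6c5d5108-075e-4e7f-9935-eea3cb2a057a", "storageclass": "cinder-csi-ssd"},
]
print(check_bindings(PVCS, PVS))  # []
```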

![# kubectl run echoserver --image=test--master01:5000/google-containers/echoserver:1.10 --port=8080

(virtenv) [root@test--master01 ~]#

kind: Service
apiVersion: v1
metadata:
  name: loadbalanced-service
spec:
  selector:
    run: echoserver
  type: LoadBalancer
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP

30](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-30-320.jpg)
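In Kubernetes v1.18, `kubectl run` creates a bare Pod labeled `run=echoserver`, and this is the label the Service selector matches. With `type: LoadBalancer`, the external OpenStack cloud provider requests an Octavia load balancer. That load balancer listens on port 80 and forwards to container port 8080. The same manifest can be built programmatically and emitted as JSON, which `kubectl apply -f` also accepts. `lb_service` is a hypothetical helper.

```python
import json


def lb_service(name: str, selector: dict[str, str], port: int, target_port: int) -> dict:
    """Build a TCP Service of type LoadBalancer (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": selector,
            "ports": [{"port": port, "targetPort": target_port, "protocol": "TCP"}],
        },
    }


print(json.dumps(lb_service("loadbalanced-service", {"run": "echoserver"}, 80, 8080), indent=2))
```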

![(virtenv) [root@test--master01 ~]# kubectl get svc

| NAME | TYPE | CLUSTER-IP | EXTERNAL-IP | PORT(S) | AGE |
|---|---|---|---|---|---|
| kubernetes | ClusterIP | 10.233.0.1 | <none> | 443/TCP | 116m |
| loadbalanced-service | LoadBalancer | 10.233.59.140 | xx.xx.10.239 | 80:30534/TCP | 3m5s |

(virtenv) [root@test--master01 ~]# curl xx.xx.10.239

Hostname: echoserver

Pod Information:

-no pod information available-

Server values:

server_version=nginx: 1.13.3 - lua: 10008

Request Information:

client_address=xx.xx.10.11

method=GET

real path=/

query=

request_version=1.1

request_scheme=http

request_uri=http://xx.xx.4.153:8080/

Request Headers:

accept=*/*

host=xx.xx.4.153

user-agent=curl/7.29.0

Request Body:

-no body in request-

(virtenv) [root@test--master01 ~]# kubectl delete svc loadbalanced-service

service "loadbalanced-service" deleted

31](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-31-320.jpg)
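Creating the Octavia load balancer takes a while, and `EXTERNAL-IP` shows `<pending>` until the VIP is assigned. A script therefore has to poll before it can `curl` the address. In the sketch below, `kubectl_lb_ip` reads the address with kubectl's jsonpath output. This relies on the assumption that kubectl prints an empty string while the field is still missing, which is worth checking on your kubectl version. `wait_for_ip` polls any getter until it returns an address or a timeout expires. Both names are hypothetical.

```python
import subprocess
import time
from typing import Callable, Optional


def kubectl_lb_ip(service: str, namespace: str = "default") -> str:
    """Return the first load-balancer ingress IP of a Service ('' while still pending)."""
    result = subprocess.run(
        ["kubectl", "get", "svc", service, "-n", namespace,
         "-o", "jsonpath={.status.loadBalancer.ingress[0].ip}"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


def wait_for_ip(get_ip: Callable[[], str], timeout: float = 300, interval: float = 5,
                sleep: Callable[[float], None] = time.sleep) -> Optional[str]:
    """Poll get_ip until it returns a non-empty address; return None after the timeout."""
    deadline = time.monotonic() + timeout
    while True:
        ip = get_ip()
        if ip:
            return ip
        if time.monotonic() >= deadline:
            return None
        sleep(interval)


# Usage against a cluster:
# print(wait_for_ip(lambda: kubectl_lb_ip("loadbalanced-service")))
```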

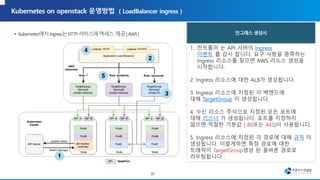

![apiVersion: apps/v1
kind: Deployment
----------
ports:
- containerPort: 8080

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: test-octavia-ingress
  annotations:
    kubernetes.io/ingress.class: "openstack"
    octavia.ingress.kubernetes.io/internal: "false"
spec:
  rules:
  - host: foo.bar.com
    http:
      paths:
      - path: /
        backend:
          serviceName: webserver
          servicePort: 8080

| NAME | CLASS | HOSTS | ADDRESS | PORTS | AGE |
|---|---|---|---|---|---|
| test-octavia-ingress | <none> | foo.bar.com | | 80 | 7s |

(virtenv) [root@test--master01 osc]# kubectl get ing

| NAME | CLASS | HOSTS | ADDRESS | PORTS | AGE |
|---|---|---|---|---|---|
| test-octavia-ingress | <none> | foo.bar.com | xx.xx.83.104 | 80 | 100s |

33](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-33-320.jpg)
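The Ingress is claimed by the Octavia ingress controller through `kubernetes.io/ingress.class: "openstack"`. The annotation `octavia.ingress.kubernetes.io/internal: "false"` asks for an externally reachable address. ADDRESS stays empty until the load balancer is ready, as the 7s and 100s listings show. The manifest uses `networking.k8s.io/v1beta1`, which suits the v1.18 cluster. That API was removed in Kubernetes 1.22. In `networking.k8s.io/v1`, the backend becomes `service.name`/`service.port.number` and `pathType` is required. Below is a sketch of the v1beta1 form as a Python dict. `octavia_ingress` is hypothetical.

```python
import json


def octavia_ingress(name: str, host: str, service: str, port: int,
                    internal: bool = False) -> dict:
    """Build a v1beta1 Ingress for the Octavia ingress controller (hypothetical helper)."""
    return {
        "apiVersion": "networking.k8s.io/v1beta1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                "kubernetes.io/ingress.class": "openstack",
                "octavia.ingress.kubernetes.io/internal": "true" if internal else "false",
            },
        },
        "spec": {"rules": [{
            "host": host,
            "http": {"paths": [{
                "path": "/",
                "backend": {"serviceName": service, "servicePort": port},
            }]},
        }]},
    }


print(json.dumps(octavia_ingress("test-octavia-ingress", "foo.bar.com", "webserver", 8080), indent=2))
```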

![(virtenv) [root@test--master01 osc]# IPADDRESS=xx.xx.83.104

(virtenv) [root@test--master01 osc]# curl -H "Host: foo.bar.com" http://$IPADDRESS/

Hostname: webserver-598ddccb79-gl8mn

Pod Information:

-no pod information available-

Server values:

server_version=nginx: 1.13.3 - lua: 10008

34](https://image.slidesharecdn.com/osck8svsk8sonopenstackkhoj-210310051504/85/on-34-320.jpg)
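The Ingress rule only matches requests for `foo.bar.com`. A request sent straight to the Ingress address therefore needs that name in its `Host` header, which is what `curl -H "Host: foo.bar.com"` supplies. The equivalent request with Python's standard `urllib.request` is sketched below. `host_routed_request` is hypothetical, and 192.0.2.104 is a documentation address standing in for the anonymized `xx.xx.83.104`.

```python
import urllib.request


def host_routed_request(address: str, host: str, path: str = "/") -> urllib.request.Request:
    """Build a GET for http://<address><path> that carries an explicit Host header."""
    # urllib only adds its own Host header when the request does not already have one.
    return urllib.request.Request(f"http://{address}{path}", headers={"Host": host})


req = host_routed_request("192.0.2.104", "foo.bar.com")
# Sending it requires network access to the Ingress VIP:
# with urllib.request.urlopen(req, timeout=10) as resp:
#     print(resp.read().decode())
```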