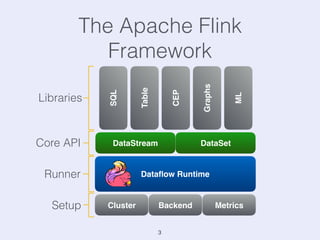

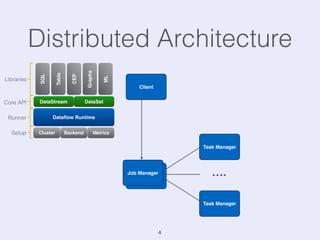

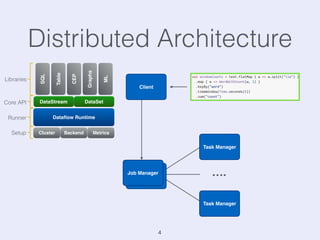

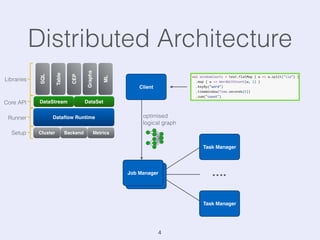

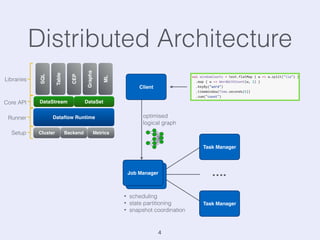

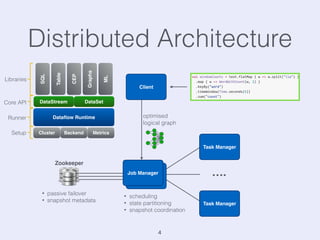

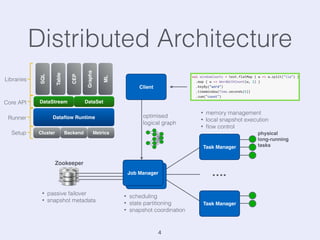

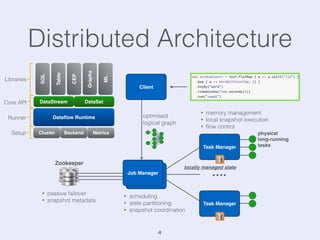

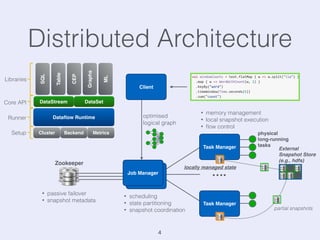

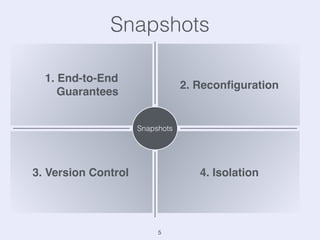

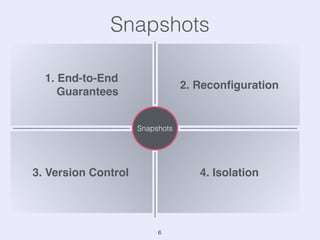

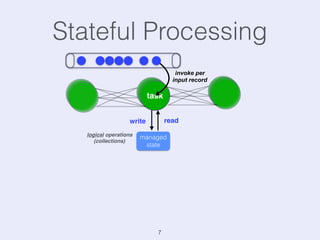

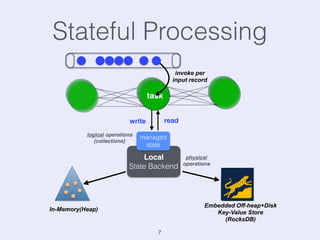

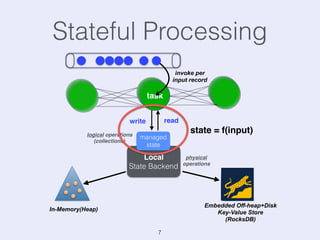

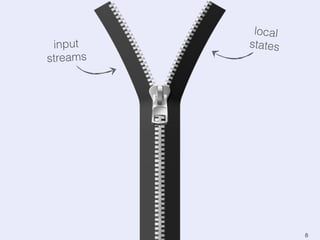

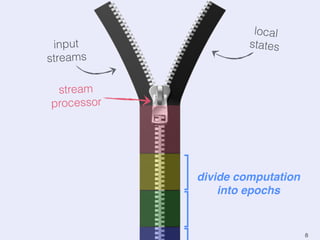

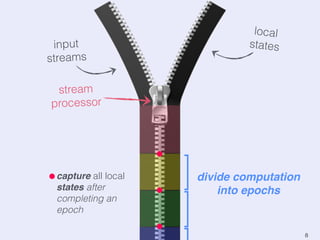

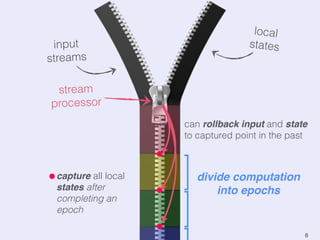

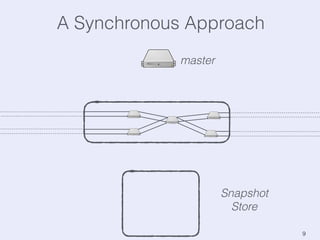

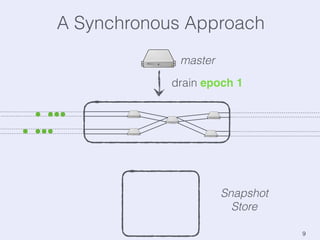

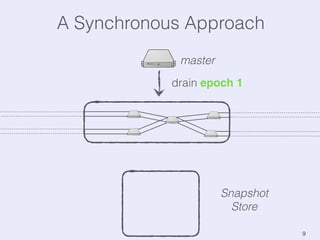

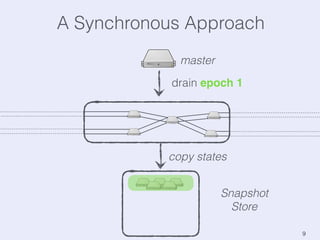

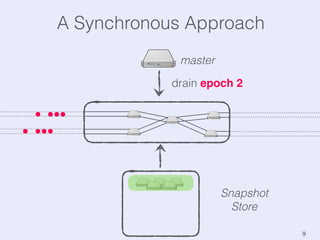

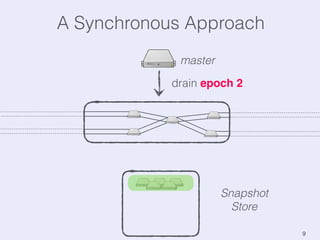

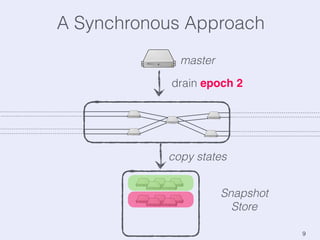

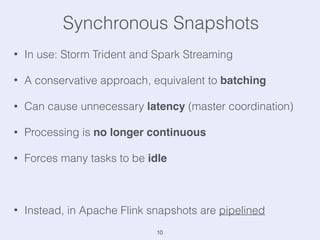

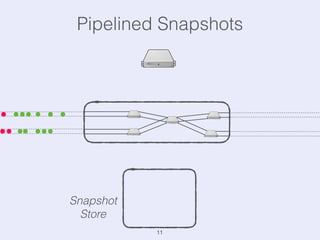

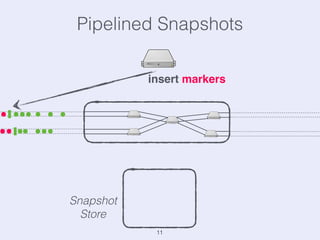

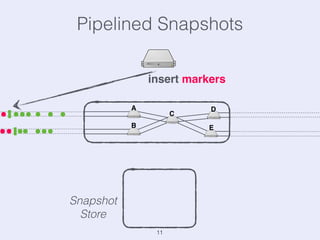

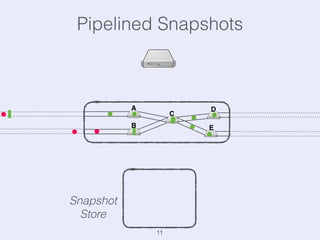

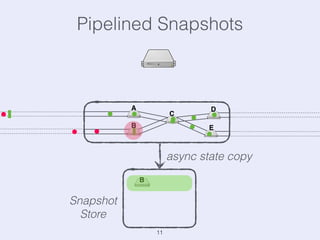

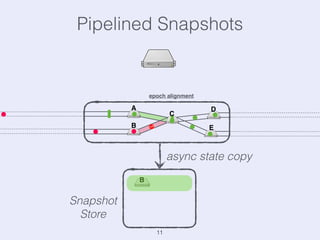

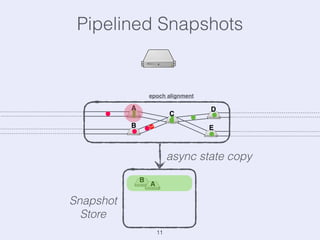

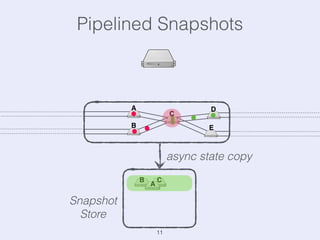

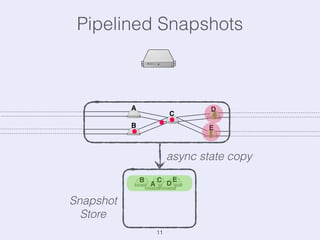

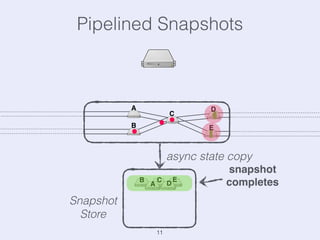

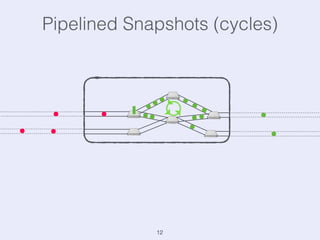

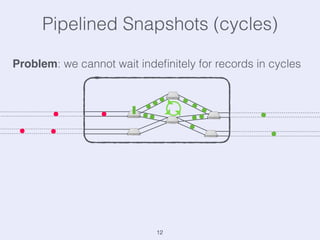

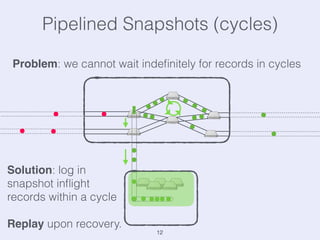

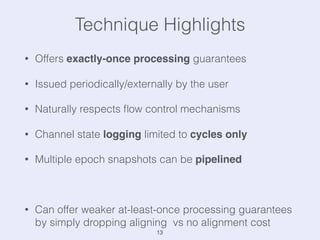

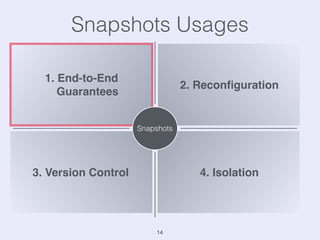

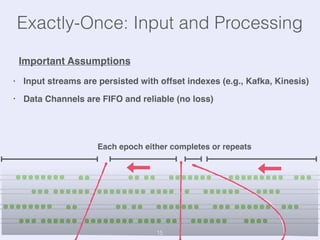

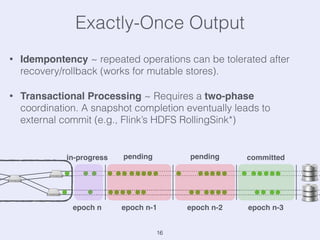

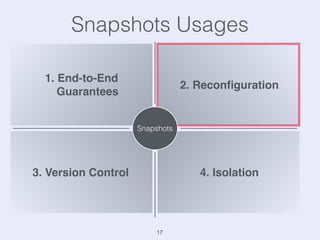

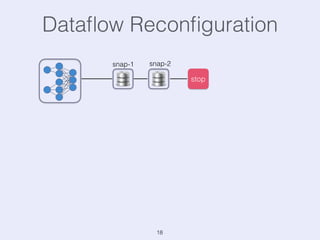

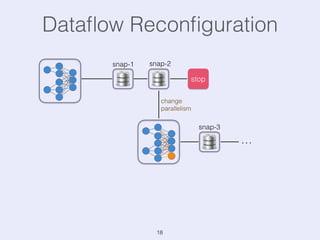

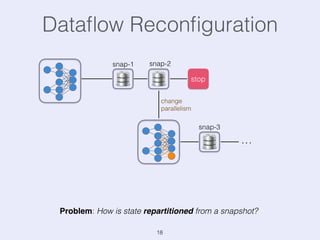

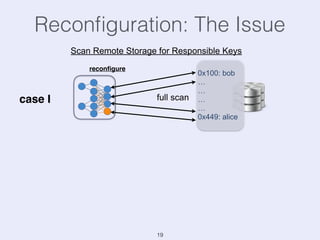

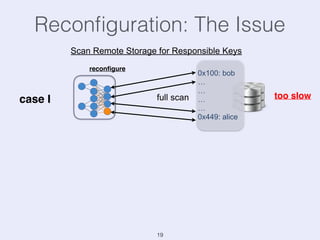

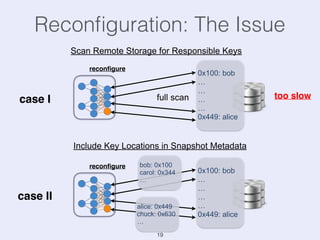

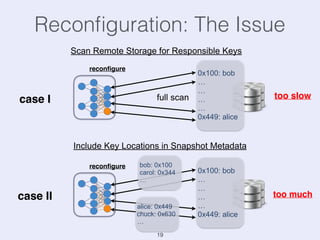

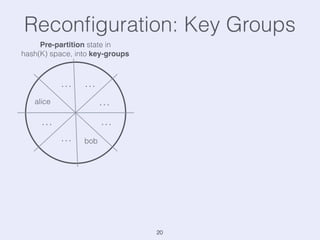

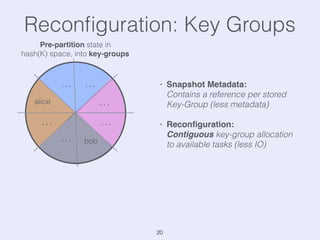

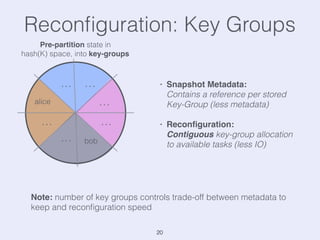

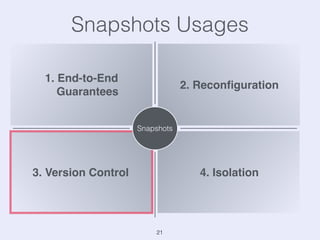

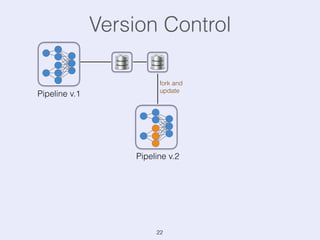

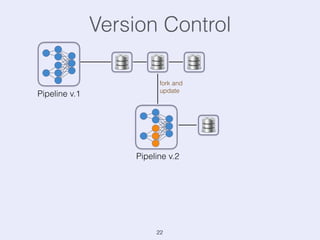

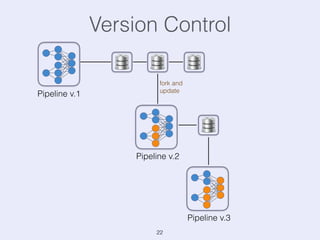

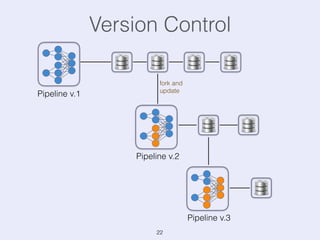

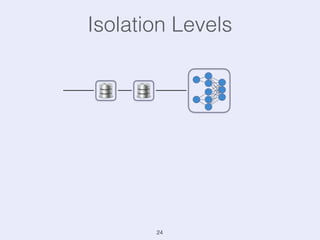

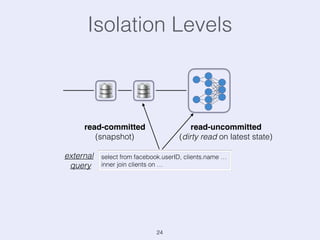

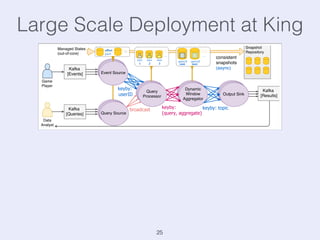

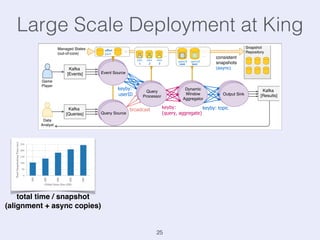

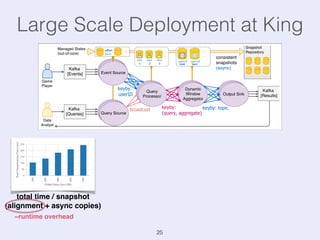

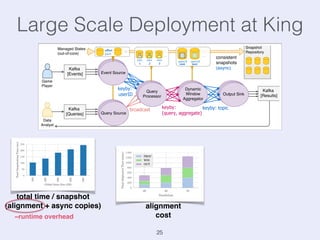

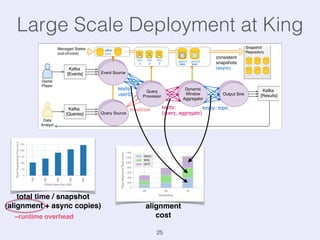

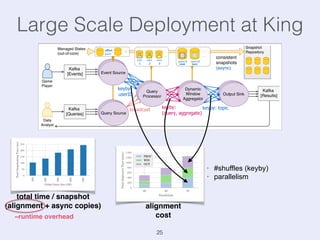

The document gives an overview of state management in Apache Flink, with a focus on consistent, stateful, distributed stream processing. It covers the system architecture, pipelined consistent snapshots, and snapshot-based operations designed for large-scale deployments. It also discusses what state reconfiguration and version control imply for an application, and why end-to-end processing guarantees must be maintained.