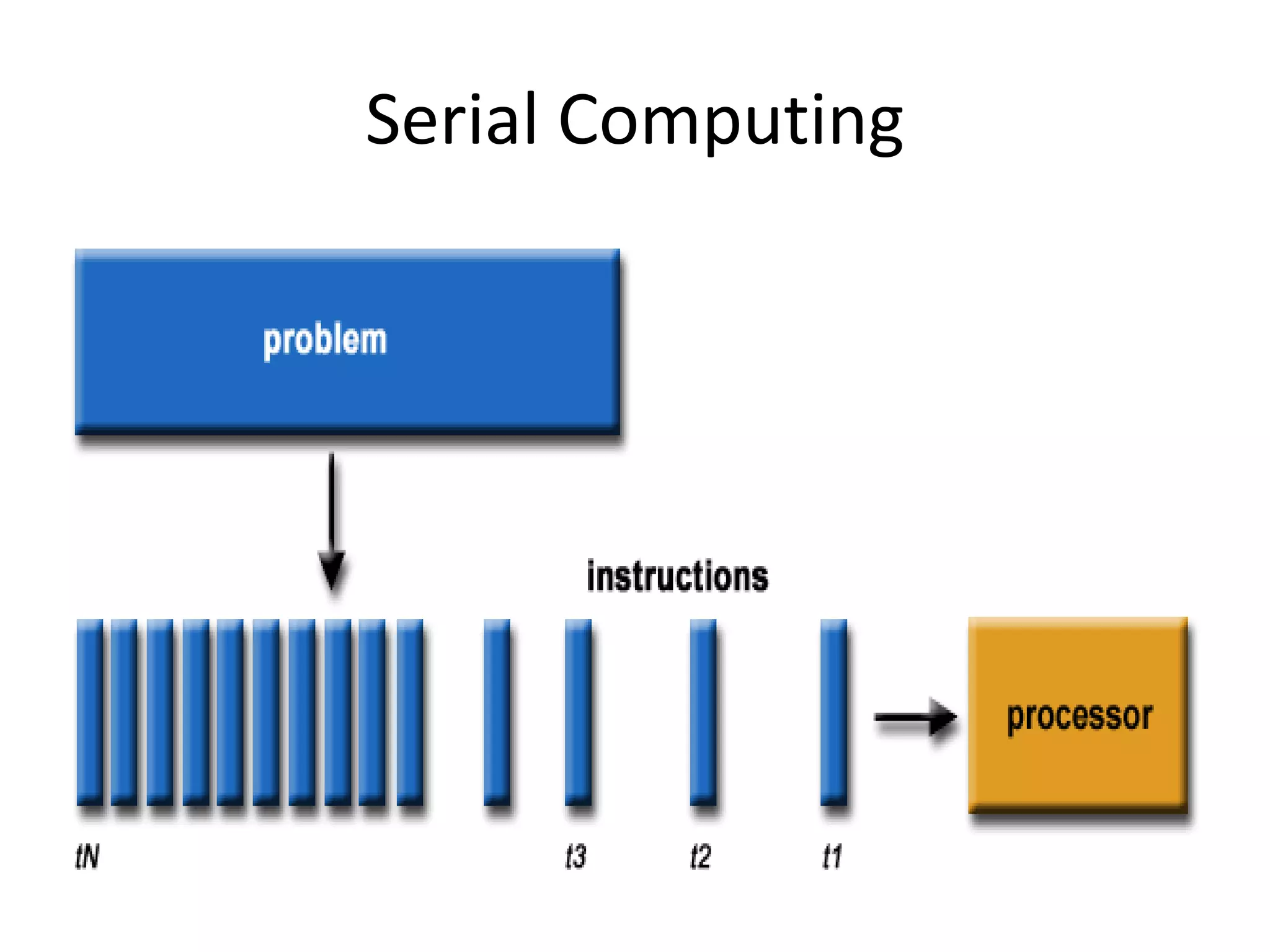

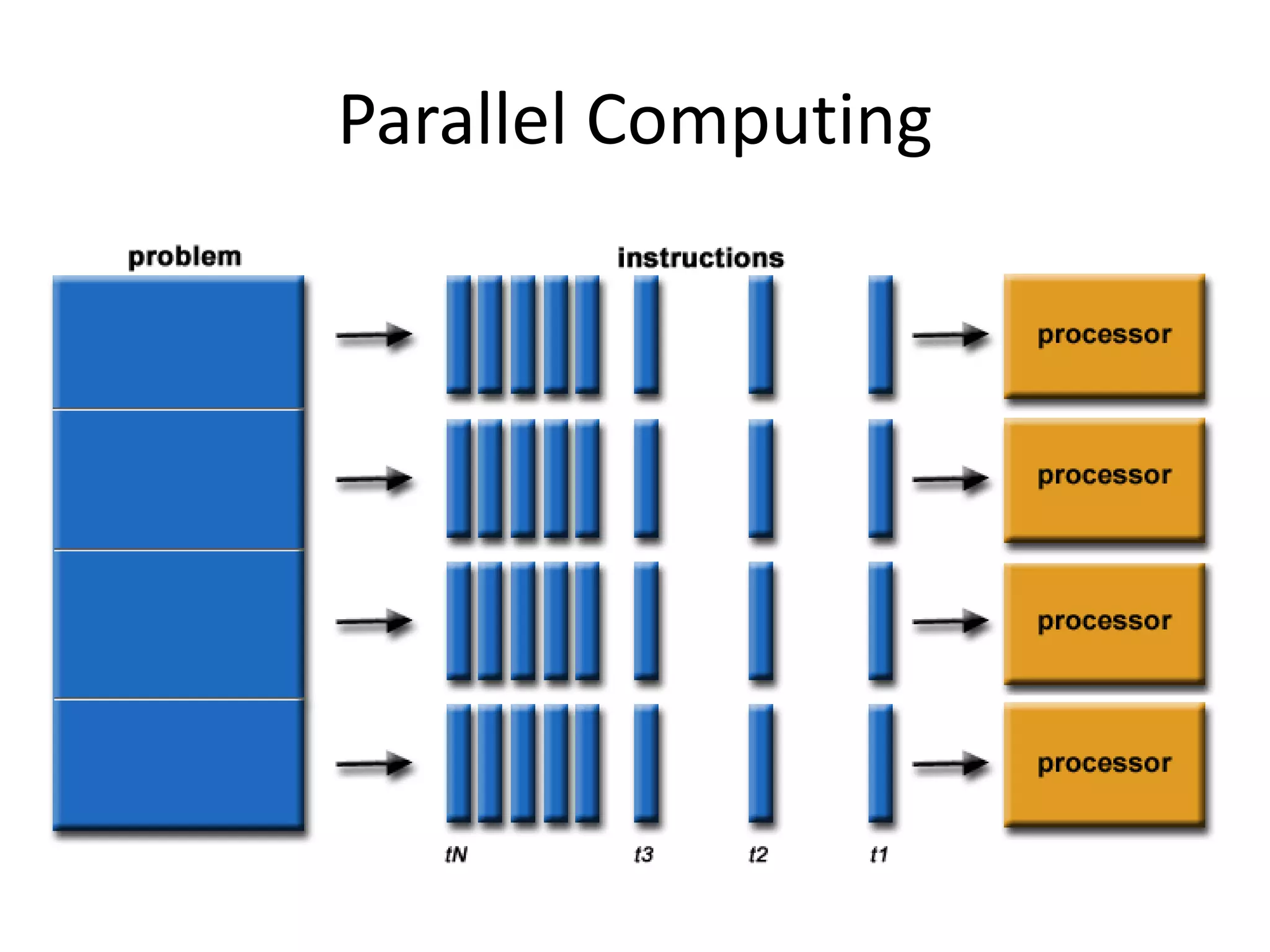

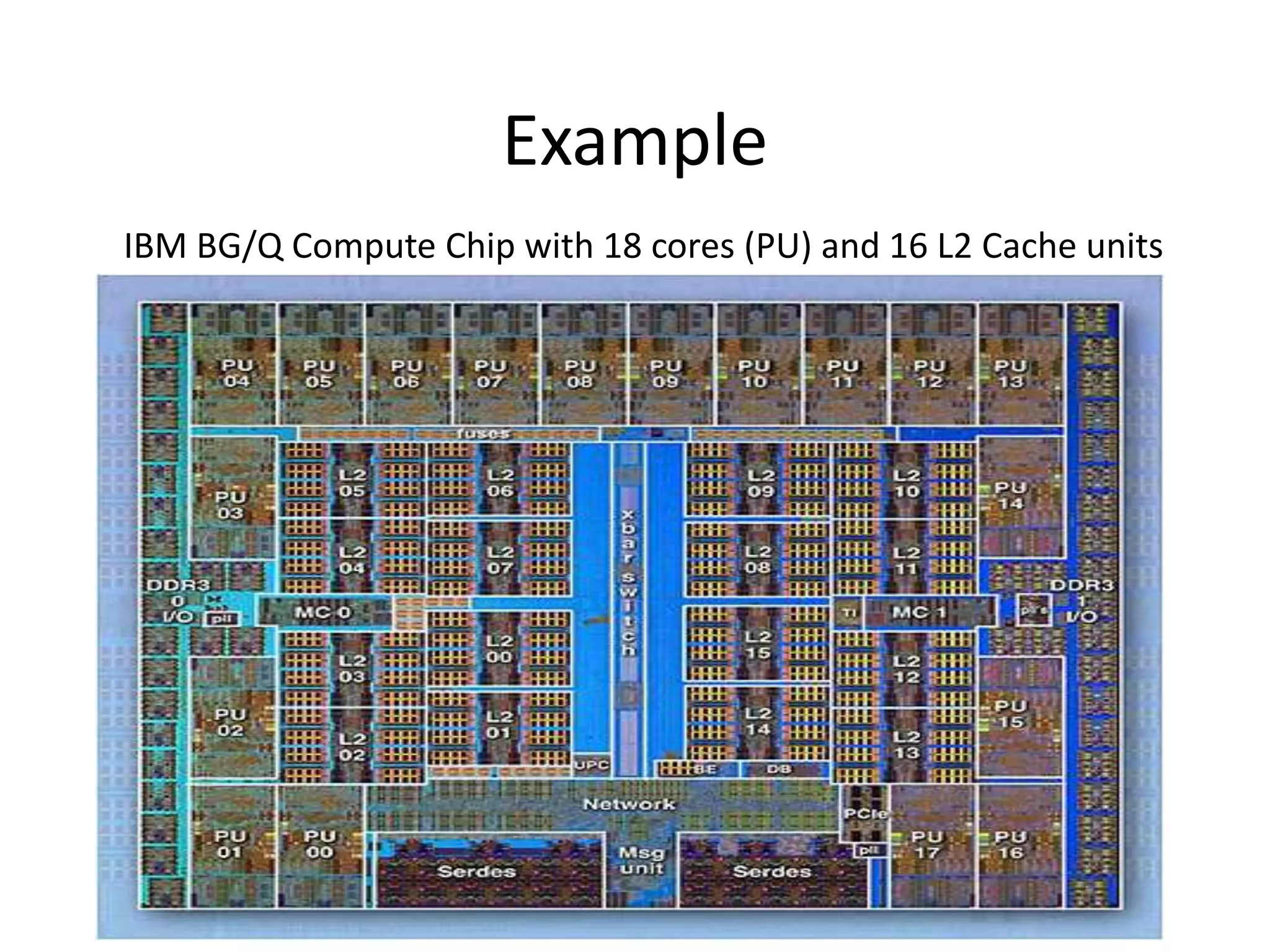

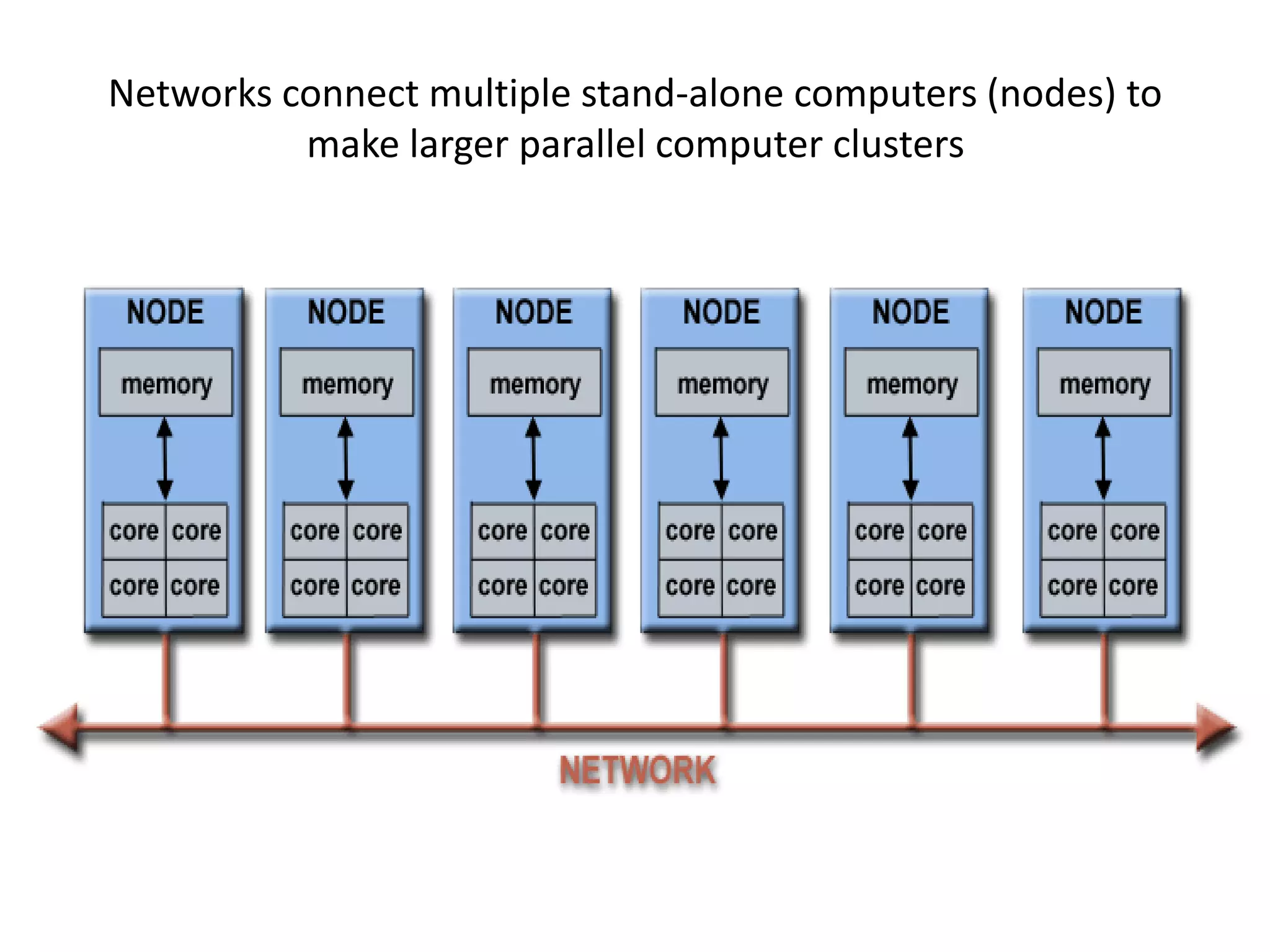

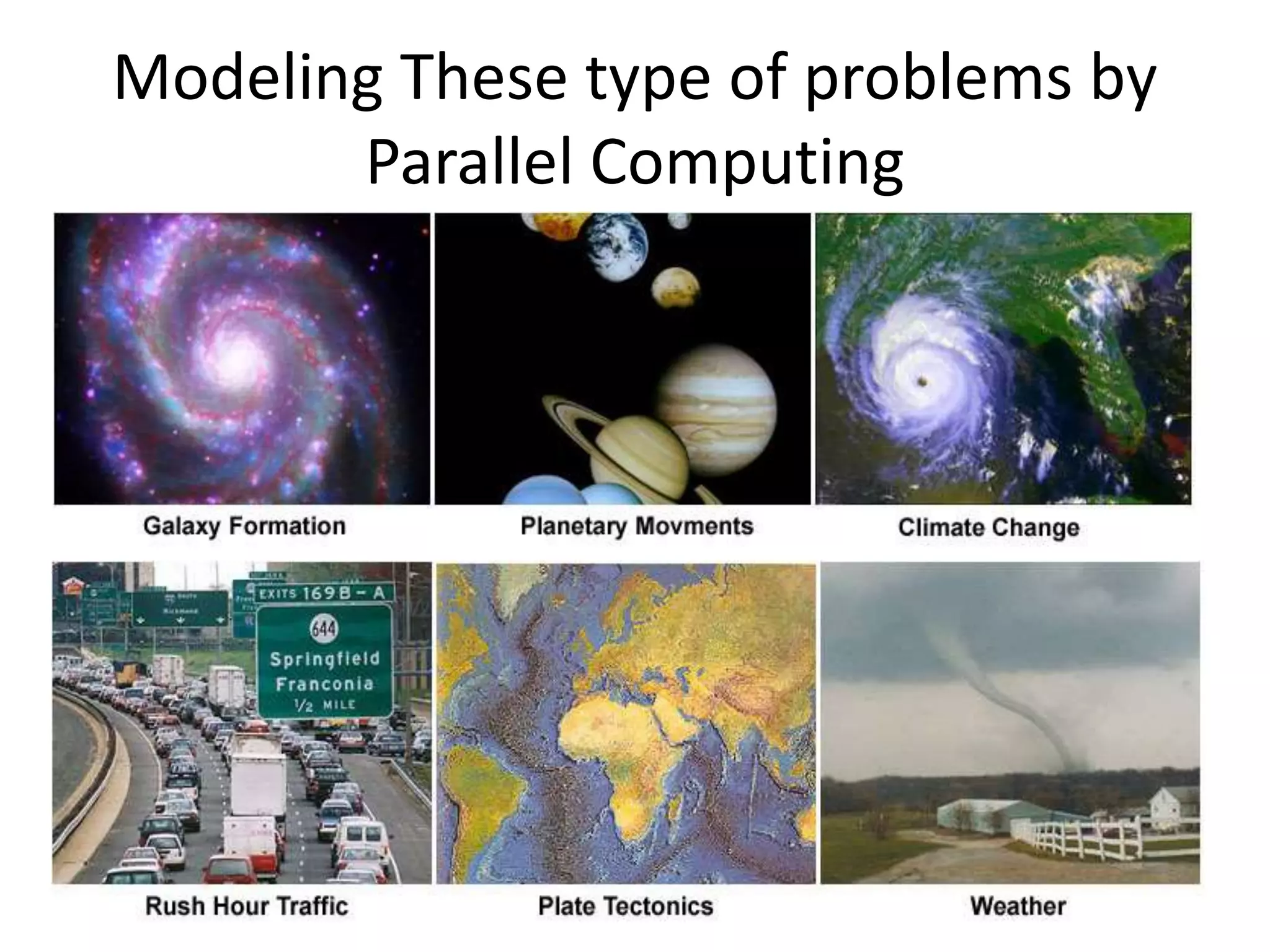

Parallel computing is the simultaneous use of multiple processors to solve a computational problem. The problem is broken into discrete parts that can be solved concurrently rather than one after another. Its benefits include less time and lower cost to solve large problems, and the ability to model complex real-world phenomena. Common forms include bit-level, instruction-level, data, and task parallelism. Parallel resources can be multiple cores or processors in a single computer, or a network of computers.
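As an illustration of data and task parallelism, here is a minimal sketch in Python. It assumes summing a list of integers as the workload, and the helper names (`chunk`, `data_parallel_sum`, `task_parallel_stats`) are hypothetical. In data parallelism, the data is split into parts and every worker applies the same operation to its own part. In task parallelism, different operations run concurrently. One caveat: in standard CPython, threads do not run pure-Python CPU work on separate cores at the same time, so this version shows the decomposition rather than a real speedup. `ProcessPoolExecutor` has the same interface and can be swapped in to spread the chunks across cores.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk(data, parts):
    """Split data into `parts` contiguous slices of nearly equal size (hypothetical helper)."""
    size, extra = divmod(len(data), parts)
    slices, start = [], 0
    for i in range(parts):
        # The first `extra` slices take one additional element each.
        end = start + size + (1 if i < extra else 0)
        slices.append(data[start:end])
        start = end
    return slices


def data_parallel_sum(data, workers=4):
    """Data parallelism: every worker runs the same operation (sum) on its own slice."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = pool.map(sum, chunk(data, workers))
        # Combine the independent partial results into the final answer.
        return sum(partial_sums)


def task_parallel_stats(data):
    """Task parallelism: different operations run concurrently on the same data."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        lo = pool.submit(min, data)
        hi = pool.submit(max, data)
        total = pool.submit(sum, data)
        return lo.result(), hi.result(), total.result()


if __name__ == "__main__":
    numbers = list(range(1, 1_000_001))
    print(data_parallel_sum(numbers))    # 500000500000
    print(task_parallel_stats(numbers))  # (1, 1000000, 500000500000)
```

The split-then-combine pattern in `data_parallel_sum` works because addition is associative, so partial sums can be computed in any order and merged at the end. Operations without that property need more care when their results are combined.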