How to address privacy, ethical and regulatory issues: Examples in cognitive enhancement, depression and ADHD

- 3. How to address privacy, ethical and regulatory issues: Examples in cognitive enhancement, depression and ADHD. Chaired by: Keith Epstein, Healthcare Practice Leader at Blue Heron • Dr. Anna Wexler, Assistant Professor at the Perelman School of Medicine at UPenn • Jacqueline Studer, Senior VP and General Counsel of Akili Interactive Labs • Dr. Karen Rommelfanger, Director of the Neuroethics Program at Emory University

- 4. Rethinking and retooling brain health with ethics. Karen S. Rommelfanger, PhD, Neuroethics Program Director, Center for Ethics; Departments of Neurology and Psychiatry & Behavioral Sciences

- 5. Neuroethics: • The societal, ethical and legal implications of neuroscience. • An exploration of how neuroscience reflects and informs our societal values.

- 9. Brain as Self: Roskies, 2007; Racine et al., 2010; De Jong et al., 2015; Kasulis in Swanson, Brain Science and Kokoro, 2011; *Adam et al., 2015; Yang et al., 2015

- 11. • Societal Implications for Brain as Self: Roskies, 2007; Racine et al., 2010; De Jong et al., 2015; Kasulis in Swanson, Brain Science and Kokoro, 2011; *Adam et al., 2015; Yang et al., 2015

- 12. • Neuroethical concerns have risen to the highest levels of government (GNS delegates, 2018). • Values and culture determine what kinds of science happen and where that science can happen. • Gaps in understanding can lead to missed opportunities for collaboration and advancement to future discoveries. • GNS unites neuroethics components of the IBI.

- 13. Future of healthcare: early detection, early intervention, disease modification.

- 14. Torous, 2016 Kirby et al, 2018

- 16. New opportunities and challenges • Continuous recording • Surveillance: Stigma • Privacy • Treatment • Big Data Analytics • Bias • Ownership and gatekeeping of data (Insel, 2017, JAMA; Rommelfanger et al., in prep)

- 19. Privacy and re-identifiability • Opportunity: continuous (vs. episodic), ubiquitous recording • Risks: re-identifiability

- 20. • Medicalization: Social process where • Reinforcing bias: Groups and group differences are usually defined by criteria that also have important social meanings— i.e., by geographical boundaries, race/ethnicity, SES, or genealogical ties.

- 21. • Opportunity: Surveillance, Risk Assessment and Intervention • Risks: New unanticipated/legacy use • Beyond the clinic might predictive information go to employers, insurance agencies, etc.? • What new kinds of information may be deduced/disclosed in analysis? • No other field has the power to take and grant rights like psychiatry • With big data, might we revisit meaning of privacy anyway?

- 22. • Opportunity: Surveillance, Risk Assessment and Intervention • Risks: New unanticipated/legacy use • Beyond the clinic might predictive information go to employers, insurance agencies, etc.? • What new kinds of information may be deduced/disclosed in analysis? • No other field has the power to take and grant rights like psychiatry • Online Platforms and Networked Harms

- 23. Opportunity: Big Data Analytics. Risks: New unanticipated use. New kinds of data. • The purpose of big data analytics is to create new, unanticipated knowledge from already existing data • Big data science* “changes how we know and likewise we should expect more complicated ethical implications of what we know” • It is difficult to fully understand the risk of such research as the science continues to evolve and legacy use and ownership of data remain unclear *Data is now: 1. Infinitely connectable 2. Indefinitely repurposable 3. Continuously updatable 4. Distanced from the context of collection *Quoted from Metcalf and Crawford, 2016

- 25. Issues unique ? Not entirely Yes • Informed consent

- 26. ? • In US, • In EU • China Japan • It would be incorrect to suggest there are absolute differences; however, relative differences may matter in considering appropriate governance and enforcement structures

- 27. 70% of the world’s population is collectivist (Hofstede, 2014; Wu et al., 2015; Lan, 2015). Would you use enhancers? (Society for Neuroscience, 2005; Cyranoski, Nature, 2005)

- 30. OVERSIGHT OF DIRECT-TO-CONSUMER NEUROTECHNOLOGIES Anna Wexler SharpBrains Virtual Summit May 7, 2019

- 31. Talk outline • What is direct-to-consumer (DTC) neurotechnology? • How is DTC neurotechnology regulated in the United States? • What are the outstanding issues regarding DTC neurotechnology? • What should we do?

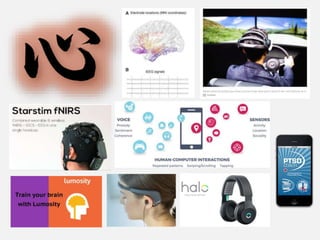

- 32. What is direct-to-consumer neurotechnology? • the set of products (devices, software, applications) that are marketed to modulate or affect brain function • sold directly to consumers (i.e., bypassing the physician) • appeal to the fruits of brain and cognitive science

- 33. What is direct-to-consumer neurotechnology? • Not referring to: • Cognitive enhancement drugs • Neuromarketing • Invasive/implantable technologies (i.e., brain-computer interfaces) • Very futuristic technologies

- 34. Consumer neuroscience technology market is predicted to top $3 billion by 2020 (SharpBrains 2018)

- 35. How is DTC neurotechnology regulated (in the US)?

- 36. How is DTC neurotechnology regulated (in the US)? According to Section 201(h) of the Food, Drug & Cosmetic (FD&C) Act, a medical device is: an instrument, apparatus, implement, machine, contrivance, implant, in vitro reagent, or other similar or related article, including a component part, or accessory which is: • recognized in the official National Formulary, or the United States Pharmacopoeia, or any supplement to them, • intended for use in the diagnosis of disease or other conditions, or in the cure, mitigation, treatment, or prevention of disease, in man or other animals, or • intended to affect the structure or any function of the body of man or other animals, and which does not achieve its primary intended purposes through chemical action within or on the body of man or other animals and which is not dependent upon being metabolized for the achievement of any of its primary intended purposes.

- 37. How is DTC neurotechnology regulated (in the US)? Any app/device/product that claims to diagnose or treat disease is regulated as a medical device by the FDA

- 38. How is DTC neurotechnology regulated (in the US)? 21st Century Cures Act excludes from the definition of a medical device any software related to “maintaining or encouraging a healthy lifestyle”

- 39. How is DTC neurotechnology regulated (in the US)? FDA will exercise enforcement discretion for low-risk devices marketed for general wellness • Examples of general wellness claims are those relating to: - mental acuity - concentration - problem solving - stress and relaxation

- 40. How is DTC neurotechnology regulated (in the US)? FDA will exercise enforcement discretion for low-risk devices marketed for general wellness • Examples of general wellness claims are those relating to: - mental acuity, - concentration - problem solving - stress and relaxation Consumer neurostimulation devices are not considered low-risk, due to “the risks to a user’s safety from electrical stimulation”

- 41. How is DTC neurotechnology regulated (in the US)? Lumos Labs, Jungle Rangers, UltimaEyes (2015-2016)

- 42. How is DTC neurotechnology regulated (in the US)? Lumos Labs, Jungle Rangers, UltimaEyes (2015-2016) tDCS Home Device Kit (2013)

- 43. Is current regulatory oversight sufficient? • DTC neurotech – device version of dietary supplements (?)

- 44. Is current regulatory oversight sufficient? • DTC neurotech – device version of dietary supplements (?) • DSHEA (1994): mostly post-market authority

- 45. 1. Effectiveness • Does DTC neurotechnology work as advertised?

- 46. 1. Effectiveness • Does DTC neurotechnology work as advertised? • Lack of data • Lack of data when translating from lab to consumer space • Lack of scientific consensus (even with data)

- 47. 2. Consumer understanding Consumer challenges in navigating claims

- 48. 2. Consumer understanding Consumer challenges in navigating claims • 21% of respondents in SharpBrains (2018) reported that “navigating claims” was the most important issue facing the brain fitness field

- 49. 2. Consumer understanding Consumer challenges in navigating claims • 21% of respondents in SharpBrains (2018) reported that “navigating claims” was the most important issue facing the brain fitness field • AARP report: over a quarter of adults age 40 and older believe that the best way to maintain/improve brain health is to play brain games, even though there is “little scientific evidence” to support this (Mehegan et al., 2017)

- 50. 3. Potential harms & ethical issues • Safety • 3% of users of neurostimulation devices reported serious skin burns (Wexler 2018) • Unknown effects of chronic use: users stimulate far more frequently than scientists

- 51. 3. Potential harms & ethical issues • Safety • 3% of users of neurostimulation devices reported serious skin burns (Wexler 2018) • Unknown effects of chronic use: users stimulate far more frequently than scientists • Psychological Harm • Unreliable (potentially false) information may cause individuals undue stress (Wexler & Thibault, 2018)

- 52. 3. Potential harms & ethical issues • Safety • 3% of users of neurostimulation devices reported serious skin burns (Wexler 2018) • Unknown effects of chronic use: users stimulate far more frequently than scientists • Psychological Harm • Unreliable (potentially false) information may cause individuals undue stress (Wexler & Thibault, 2018) • Additional considerations • Opportunity costs • May hold particular appeal for vulnerable populations • Clear terms of service + data privacy policies

- 53. THE CHALLENGE How do we foster innovation while ensuring adequate oversight?

- 54. What should we do? • Independent working group • Evaluate the main domains of neurotechnology & provide appraisals of potential harm and probable efficacy • Disseminate information to key consumer groups (e.g., AARP), media outlets, etc. • Identify areas for future research • Serve as a clearinghouse for regulatory agencies, third-party organizations that monitor advertising claims, industry, funding agencies Wexler & Reiner 2019

- 55. THANK YOU! awex@pennmedicine.upenn.edu Mehegan, L., Rainville, C., Skufca, L. “2017 AARP Cognitive Activity and Brain Health Survey” (AARP Research, Washington, DC, 2017), doi:10.26419/res.00044.001. SharpBrains, Market Report on Pervasive Neurotechnology: A Groundbreaking Analysis of 10,000+ Patent Filings Transforming Medicine, Health, Entertainment and Business (2018) (available at https://sharpbrains.com/pervasive-neurotechnology/). Wexler, A. & Thibault, R. (2018). “Mind-reading or misleading? Assessing direct-to-consumer electroencephalography (EEG) devices marketed for wellness and their ethical and regulatory implications.” Journal of Cognitive Enhancement [published online first, Sep 2018]. Wexler, A. (2018). “Who uses direct-to-consumer brain stimulation products, and why? A study of home users of tDCS devices.” Journal of Cognitive Enhancement, 2(1): 114-134. Wexler, A. & Reiner, P.B. (2019). “Oversight of direct-to-consumer neurotechnologies.” Science, 363(6424): 234-235.

- 56. May 2019

- 57. Akili is a leader in prescription digital therapeutics, creating video game treatments and supportive technology applications for cognitive dysfunction. Building a new treatment model rooted in unique delivery, accessibility and user experience, one that traditional medicine and digital models have yet to realize. It’s time to play your medicine.

- 59. Evolving the definition of Digital Therapeutics • Beyond behavioral therapy to physiologically-active digital treatments • Products and platform validated with rigorous prospective pharma-style clinical trials • Deep investment in user experience and entertainment value • First internally-operated, fully-integrated business model ‘Digital therapeutics represent a potential paradigm shift in the way disease is treated, as software itself starts to become the therapeutic…’ (Cowen Research, 2018)

- 60. Diversity of perspective is at the core of Akili’s capabilities. TECHNOLOGY & GAMING: Video game developers, artists and influencers (LucasArts - Google - Microsoft) • PHARMA & HEALTHCARE: Pharmaceutical leaders with deep experience in novel commercial launches (Shire - Sanofi - Pfizer) • CLINICAL & SCIENCE: Best-in-class teams committed to R&D and clinical trial development (MIT - Yale - Duke) • BOSTON, SAN FRANCISCO 99

- 61. Unmet needs in treating cognitive dysfunction have remained the same for decades…

- 62. ADHD • DEPRESSION • MULTIPLE SCLEROSIS. Experience adverse effects from drugs: 67% • Show no response to first drug therapy: 50% • Have untreated cognitive impairments that continue to impact quality of life after drug treatment: 50% • 6.5 million children and adolescents in U.S. • 12 million Americans treated every year • 200 new cases diagnosed per week. Sources: Centers for Disease Control and Prevention; Anxiety and Depression Association of America; Multiple Sclerosis Discovery Forum; Data on File

- 63. Akili’s Unique Approach to Treatment. TRADITIONAL MEDICINE (DRUGS & BEHAVIORAL THERAPY): • In-person therapy or Rx pickup • Treating symptoms through non-specific pharmacology • SEs as a necessary risk • One size/type fits all • Little data to understand disease progress. DIGITAL TREATMENT: • Digital prescription delivery • Targeting specific physiological networks to treat specific functions • Safe risk profile with no AEs • Adaptive, personalized technology • Rich, continuous data to visualize benefits & gain insight

- 64. We believe medicine can be beautiful, wonderful, captivating.

- 66. Privacy, Ethics and Regulatory Issues Overcoming patient and societal fears • Privacy • Patient fears regarding privacy and security of sensitive diagnostic & treatment information • Loss of public trust in company transparency regarding use of data • Informed consent • Patient authentication and data integrity • Patchwork of national/state/federal privacy laws • Safety & Efficacy • Suspicion regarding marketing claims versus approved medical claims backed up by clinical trials We’re interacting with patients in a new way. Building trust is critical in everything we do.

- 68. Access recorded talks, Q&A, and more at: SharpBrains.com

Editor's Notes

- Where neuroscientists find themselves pondering questions that have perennially plagued philosophers. Conceptual framework for self: neuro-essentialism. Mapping the brain’s networks = a feat no less equated to mapping human identity: “the wiring that makes us who we are” (Seung, 2012).

- “pervasive neurotechnology applications include brain-computer interfaces (BCIs) for device control or real-time neuromonitoring, neurosensor-based vehicle operator systems, cognitive training tools, electrical and magnetic brain stimulation, wearables for mental wellbeing, and virtual reality systems.” Ienca and Andorno, 2019

- Catastrophic mistakes could include impairing agency.

- “Research shows that the combination of neuroimaging technology and artificial intelligence allows to “read” correlates of mental states including hidden intentions, visual experiences or even dreams with an increasing degree of accuracy and resolution.”—Ienca 2019; Forbes, 2009 Wolpe

- “A recent experiment recorded functional MRI (fMRI) data from volunteers while they were watching video clips. From their brain activity, computers were able to partially reconstruct some of the images that the volunteers saw. That is, from the information gathered by the brain scans it was possible, to a certain extent, to watch what they were watching. This is not the same as watching what was "in their minds," so to speak, but watching what their minds were watching. Still, quite an amazing achievement.” (NPR, 2011)

- Privacy. Research depends on participant trust, which is a precious commodity. Opportunity: continuous (vs. episodic) recording in the ‘patient’s ecosystem’. As I and others have said, data can easily be collected, passively and actively, throughout the patient’s day. Even with the best intentions, this raises issues of privacy. Privacy risks. As with genomics, wide-scale use of neuroscience data could lead to re-identifiability of participants. “Neuroimaging data coupled with layers of descriptive meta-data may mean that “sulcal and gyral fingerprints” or even BOLD activity patterns could compromise participant confidentiality, even when the data has been “anonymized” in ordinary ways.” (Choudhury et al., 2014, p. 5). But imagine an instance where we can cross-link GPS, mood, purchases, social media feeds, AND brain activity. Even with a GPS stamp and two expensive purchases from a typical store, people can be re-identified, so what will be the potential for maintaining de-identification with brain recording data? Importantly, neural data *may* feel qualitatively different because of the types of information that are inferred. We know from some interviews with participants about BCI and with some researchers that privacy is an issue of concern. From GNS paper: The public has expressed some privacy concerns, collected via outreach seminars in Europe focused on brain-computer interfaces or BCI (Jebari and Hansson, 2013), about the acquisition of private information; similar concerns have been discussed amongst surveyed scientists in Japan, for example (Higashijima et al., 2011), and in the scholarly literature around appropriate informed consent with BCI research (Klein and Ojemann, 2016). Not unlike genomics, in the case of increasingly large “big data” set analysis in neuroscience, it may be the case that maintaining de-identification (i.e., stripping information that will link data back to the individual) is a lost cause (Choudhury et al., 2014). The more likely possibility with large data sets or small numbers of initial participants is re-identification. In such cases, consent for participation in studies may need to acknowledge these realities. “Finally, we show that even data sets that provide coarse information at any or all of the dimensions provide little anonymity and that women are more reidentifiable than men in credit card metadata. These metadata are generated by our use of technology and, hence, may reveal a lot about an individual. Making these data sets broadly available, therefore, requires solid quantitative guarantees on the risk of reidentification. A data set’s lack of names, home addresses, phone numbers, or other obvious identifiers [such as required, for instance, under the U.S. personally identifiable information (PII) “specific-types” approach], does not make it anonymous nor safe to release to the public and to third parties.” (de Montjoye et al., 2015)
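
The re-identification risk described in this note can be made concrete with a toy calculation. What follows is a minimal sketch, assuming each person's "anonymized" record is a set of (day, place) points. The `unicity` function, the `TOY_TRACES` data and every name in them are hypothetical illustrations, not code or data from the talk or from any cited study. It counts what share of k-point samples match exactly one person's record.

```python
from itertools import combinations

# Hypothetical toy data: three "anonymized" people, each a set of (day, place) points.
TOY_TRACES = {
    "p1": {("mon", "cafe"), ("tue", "gym"), ("wed", "clinic")},
    "p2": {("mon", "cafe"), ("tue", "gym"), ("wed", "office")},
    "p3": {("mon", "cafe"), ("tue", "library"), ("wed", "office")},
}


def unicity(traces, k):
    """Return the share of k-point samples that match exactly one trace.

    For every person and every k-subset of that person's points, count how
    many traces in the data set contain all k points. A sample that matches
    a single trace would be enough to single that person out.
    """
    unique = 0
    total = 0
    for points in traces.values():
        for sample in combinations(sorted(points), k):
            sample_set = set(sample)
            # Number of traces consistent with this outside knowledge.
            matches = sum(1 for other in traces.values() if sample_set <= other)
            total += 1
            if matches == 1:
                unique += 1
    return unique / total if total else 0.0


if __name__ == "__main__":
    for k in (1, 2, 3):
        print(k, round(unicity(TOY_TRACES, k), 3))
```

On this toy data the share of uniquely matching samples rises as k grows, which is the pattern the note warns about. Published analyses work with random samples over far larger data sets, so this only illustrates the idea.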

- New unanticipated/legacy use makes predicting risk challenging. Beyond the clinic, might predictive information go to employers, insurance agencies, etc.? What new kinds of information may be deduced/disclosed in analysis? With big data, might we revisit the meaning of privacy anyway? Privacy intrusions and re-identification may become an inevitable norm. Harms extend beyond the participant with big data. One can experience networked harms from afar. Perhaps a better discussion is how we do not want information to be used, rather than guarding the flow of information, such as to insurers or employers? In general, online data collection is happening in many ways unbeknownst to the consumer. For example, apps that ask for access to stored data on devices in order to work not only access private information like your contacts, calendar information, and your device’s unique ID, but can even save content on your device. Data is often shared actively and passively: actively through mechanisms like social media, but also passively through connections of app families, such as logging into apps via Facebook or Gmail. App partners may share data with their partners and so on. Finally, data can be combined for new uses beyond what the user expected. The app’s networks may tap into large entities like Facebook, Twitter, or Apple and can amplify consumer-generated data. Even phone numbers can link to wide sources like users’ social networking sites and users’ credit cards. In the end, re-identification is a strong possibility if not inevitable. We also see harms move beyond an individual. We see “network harms”, where harms can affect non-participants in studies by virtue of being in a network.

- Confucianism is a biological, social, and metaphysical reality. “Family reflects the deep structure of the universe, namely a union of the universe, yin and yang..[and] carries a profound sense of necessity and normativity for human life.” Finally, I’d like to mention that in Confucianism and many of the religions I’ve described, like Buddhism, relational identity is a fundamental belief about the construction of self and one’s movement through the world. Preliminary work in China exploring public values about cognitive enhancement has shown that while Westerners typically cite concerns about violations of authenticity and integrity of the self, Taiwanese and Chinese populations cite concerns about fairness (whether everyone, or who, will have access), reflecting concerns about the relational self. Both equally cite concerns about safety. Of note, the relational self is a feature of many collectivist cultures found in East Asia and much of the world. As an aside, 70% of the world’s population is collectivist. We might ask: how might regulation and development differ across cultural values, particularly as we think about broader uses of the many neurotechnologies being developed now, which will often go beyond the lab and beyond the clinic into commercial and legal domains? Core of American bioethics is protecting the autonomy of the individual and many of the concerns expressed with these technologies. 70% of the world’s population is collectivist. Differing views on moral decision making.

- ALSO INS?

- Why is it important to think about oversight here?