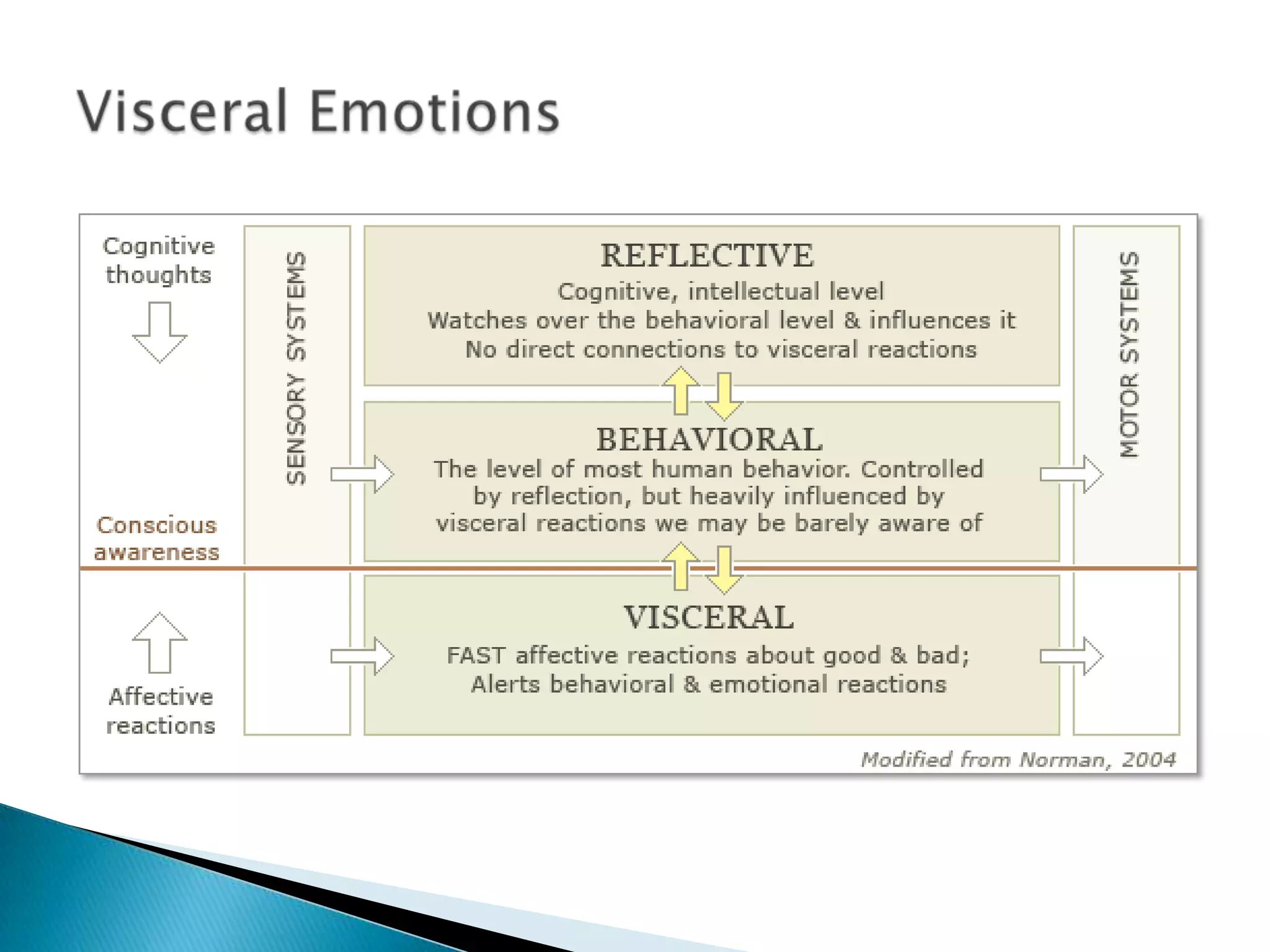

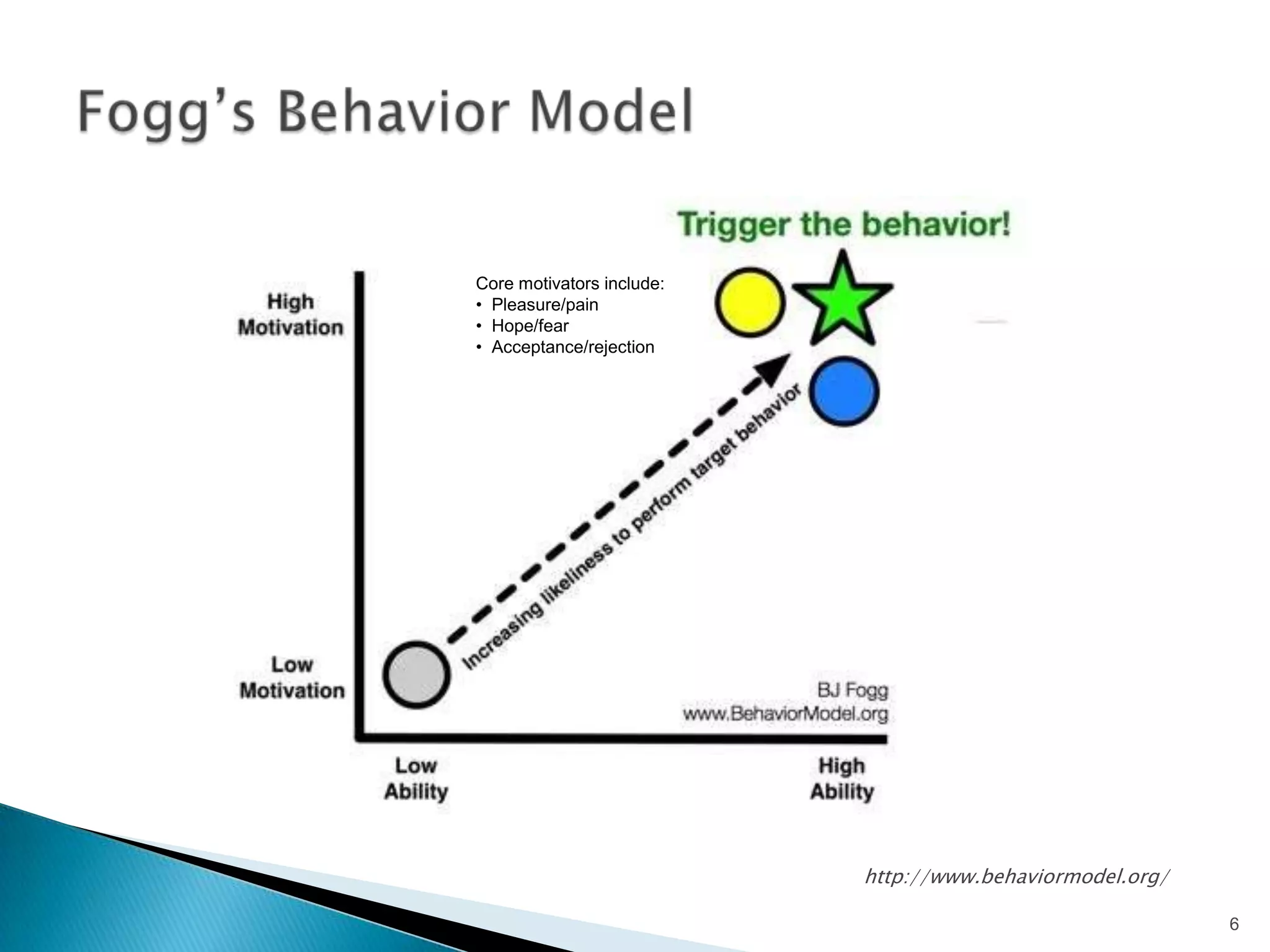

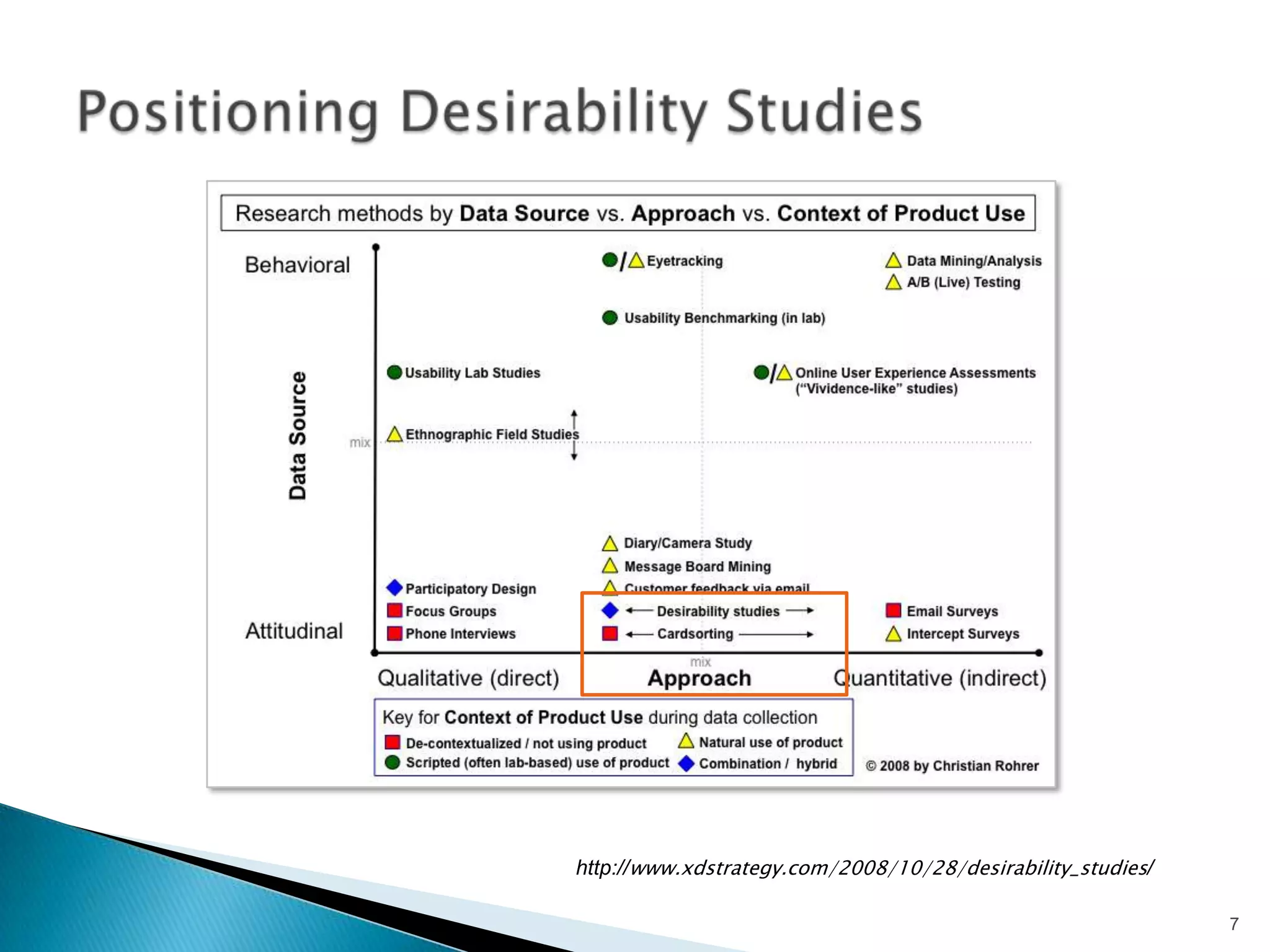

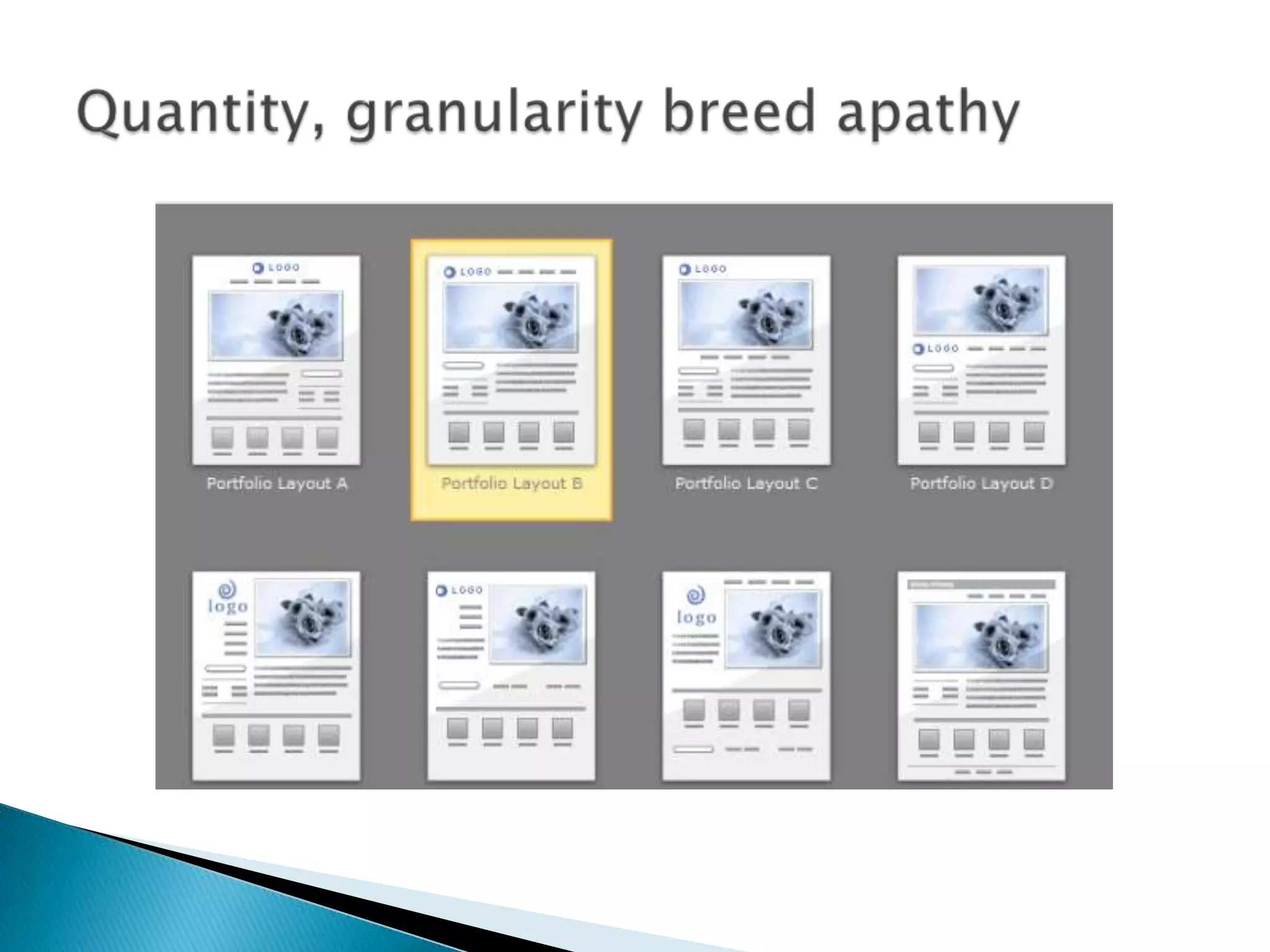

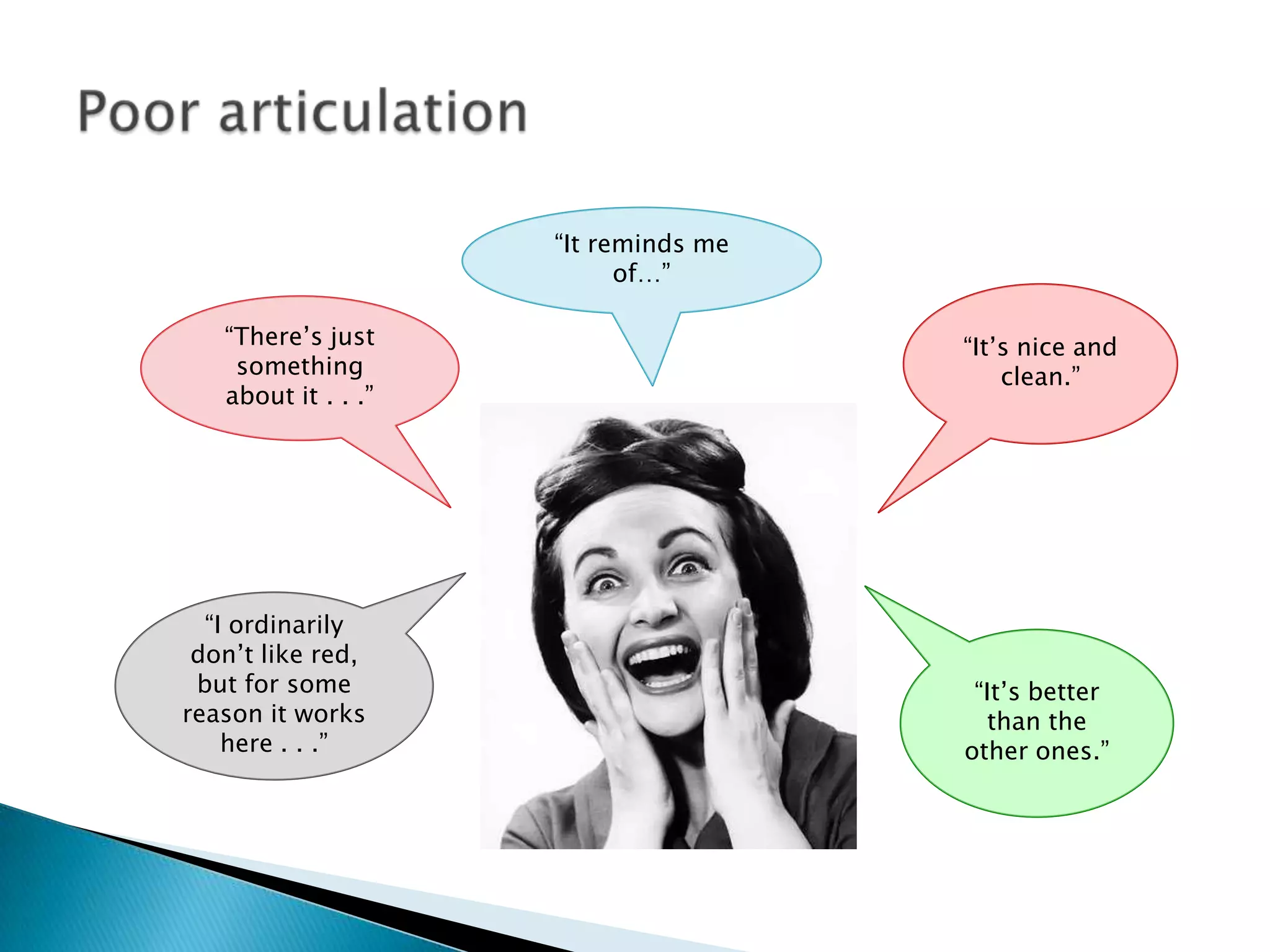

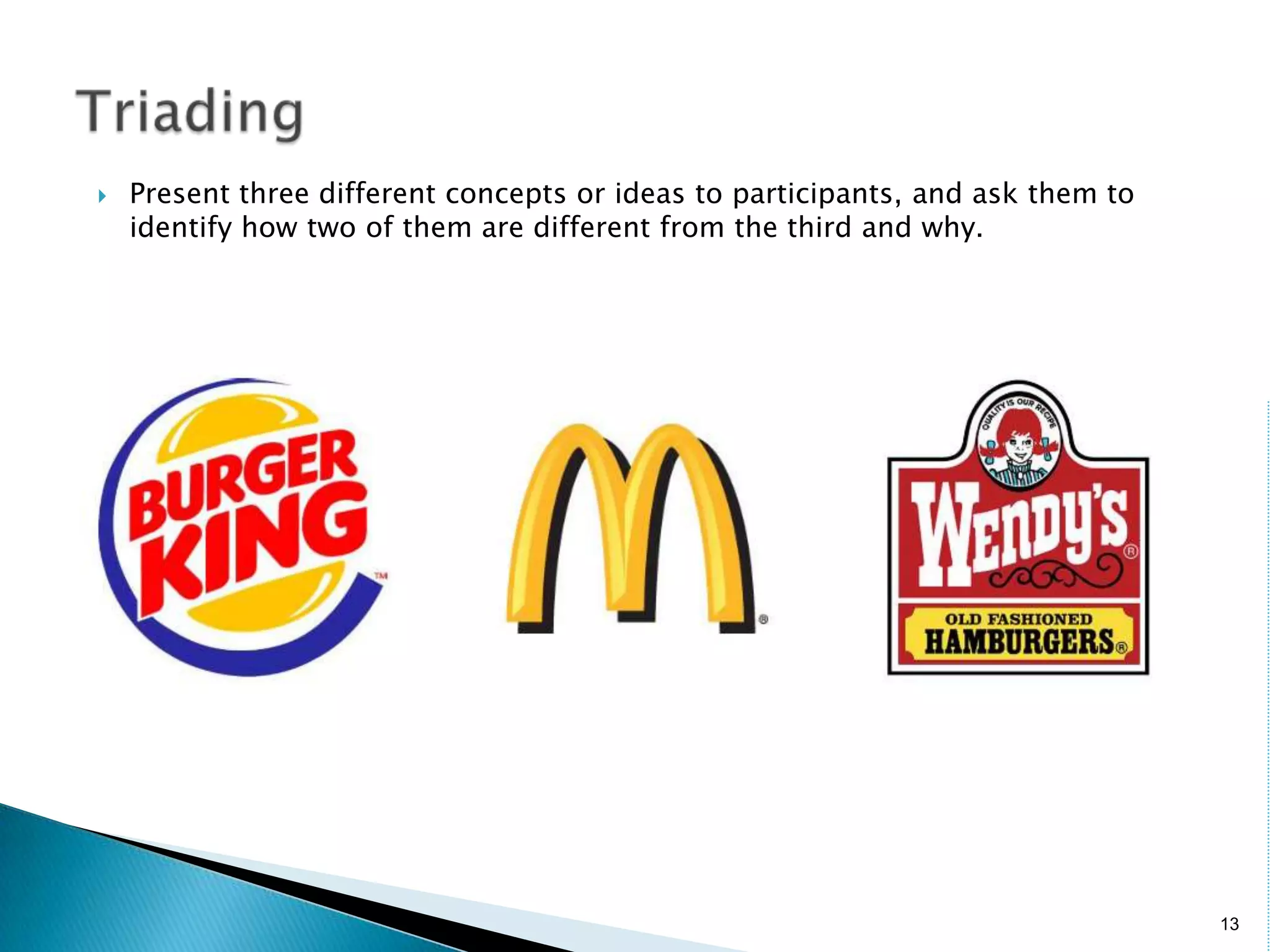

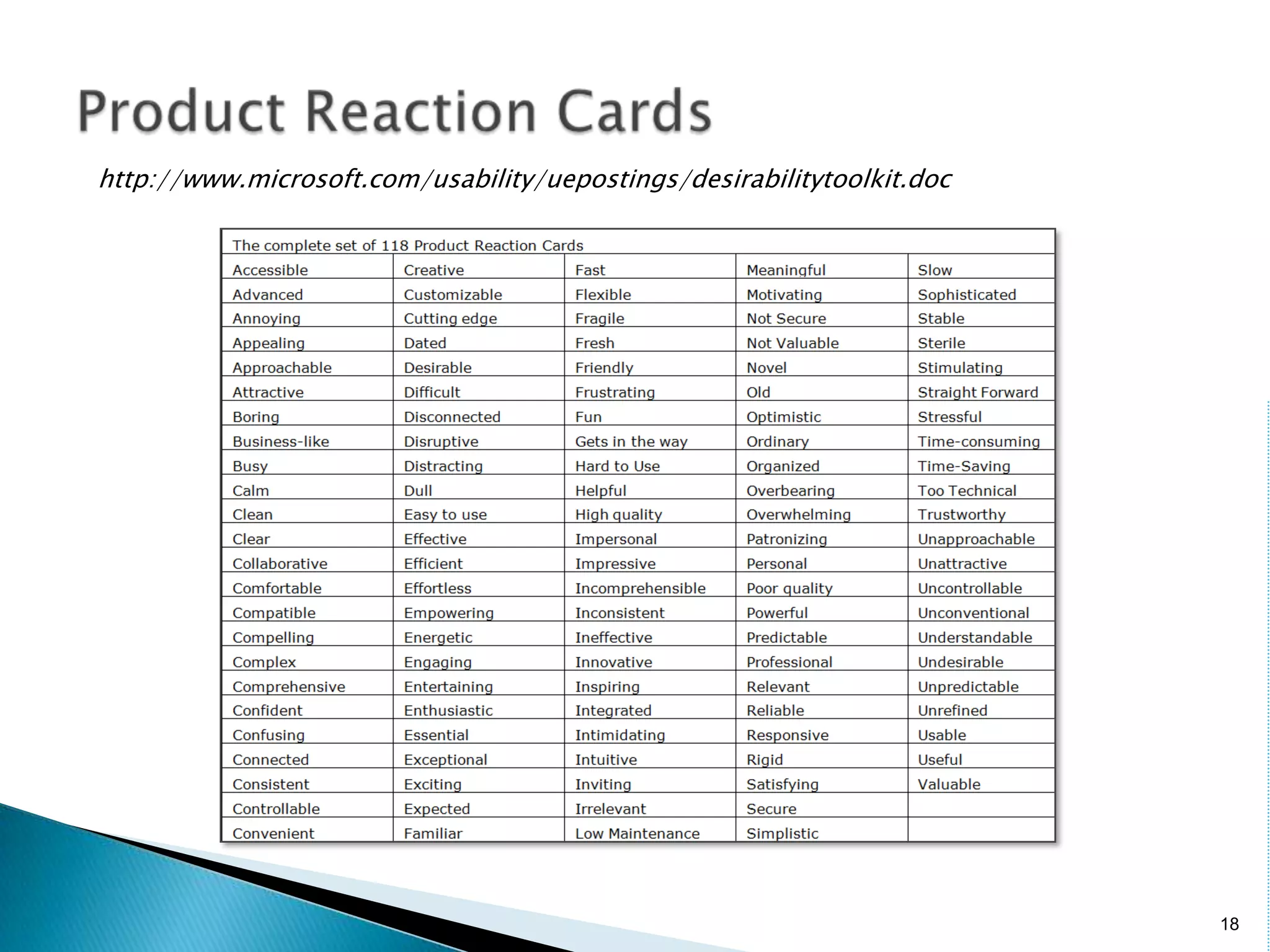

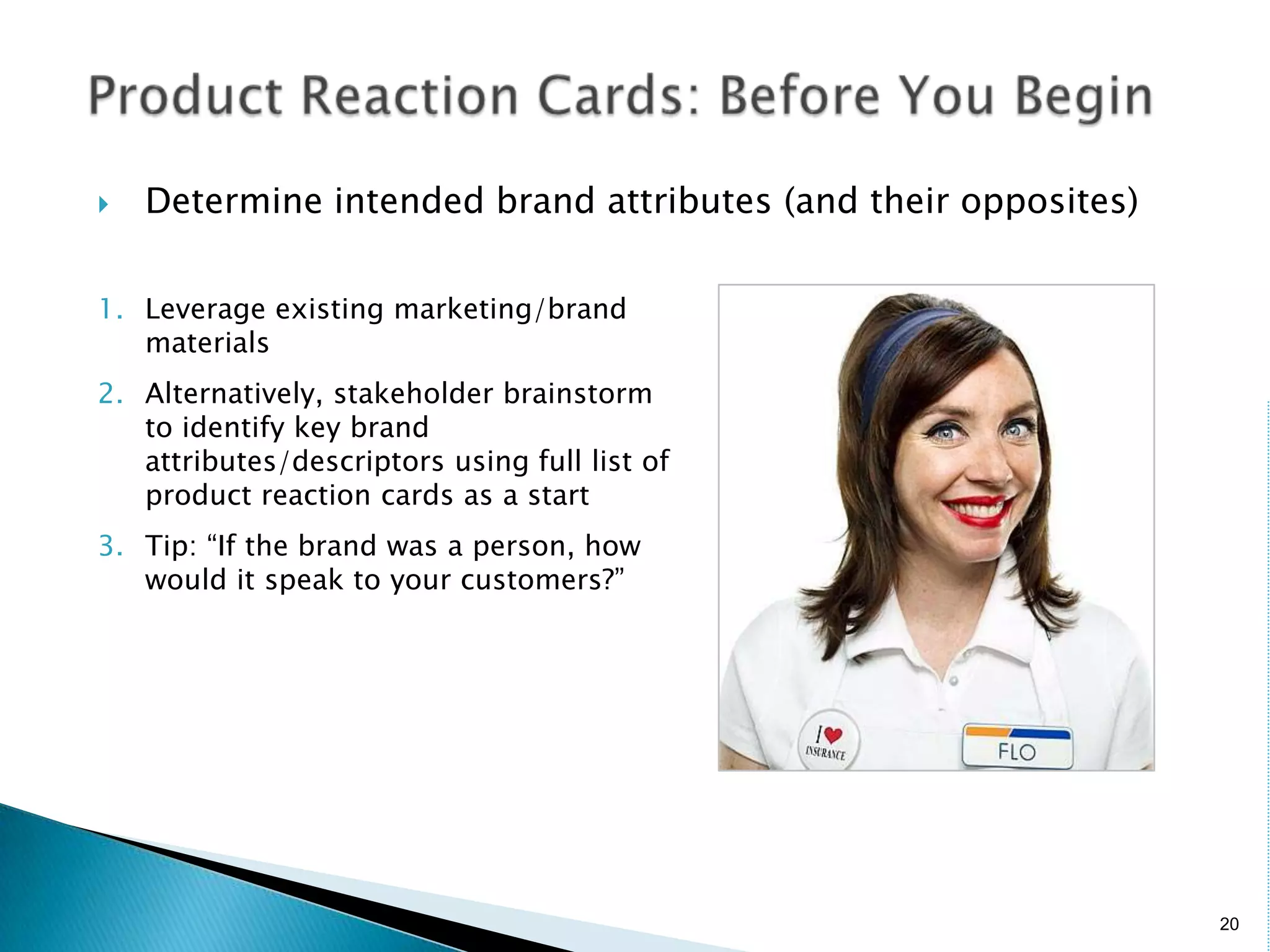

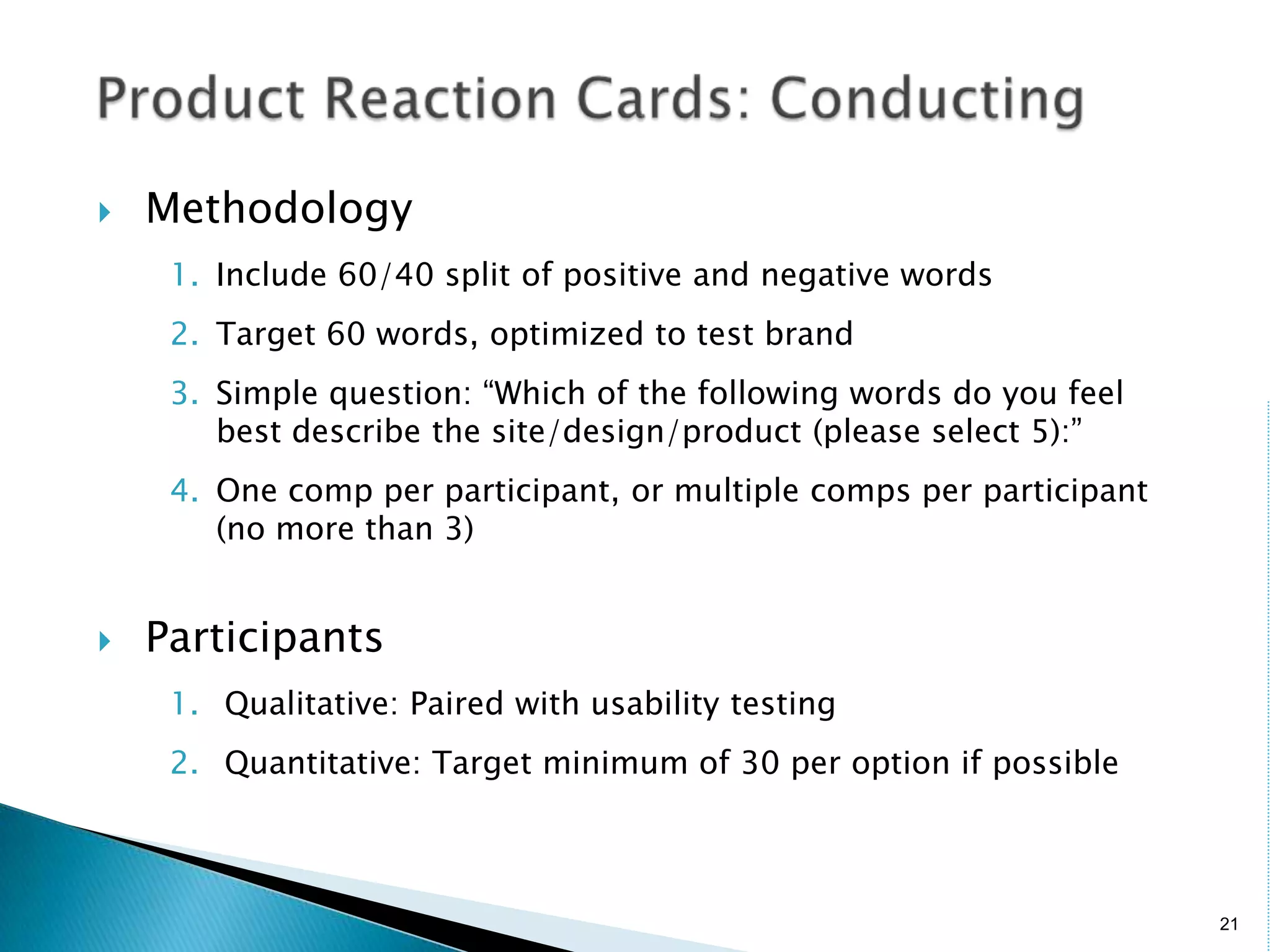

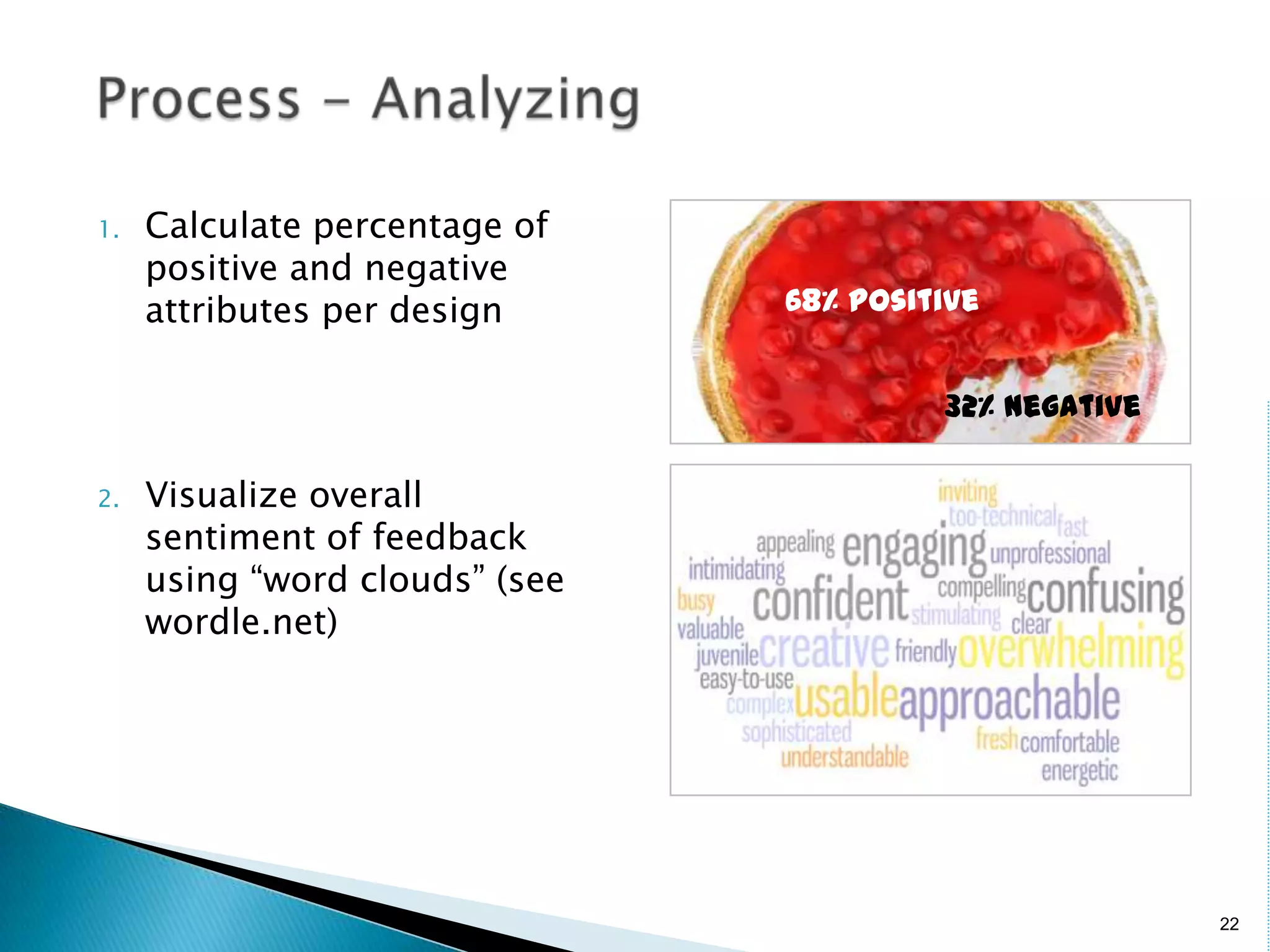

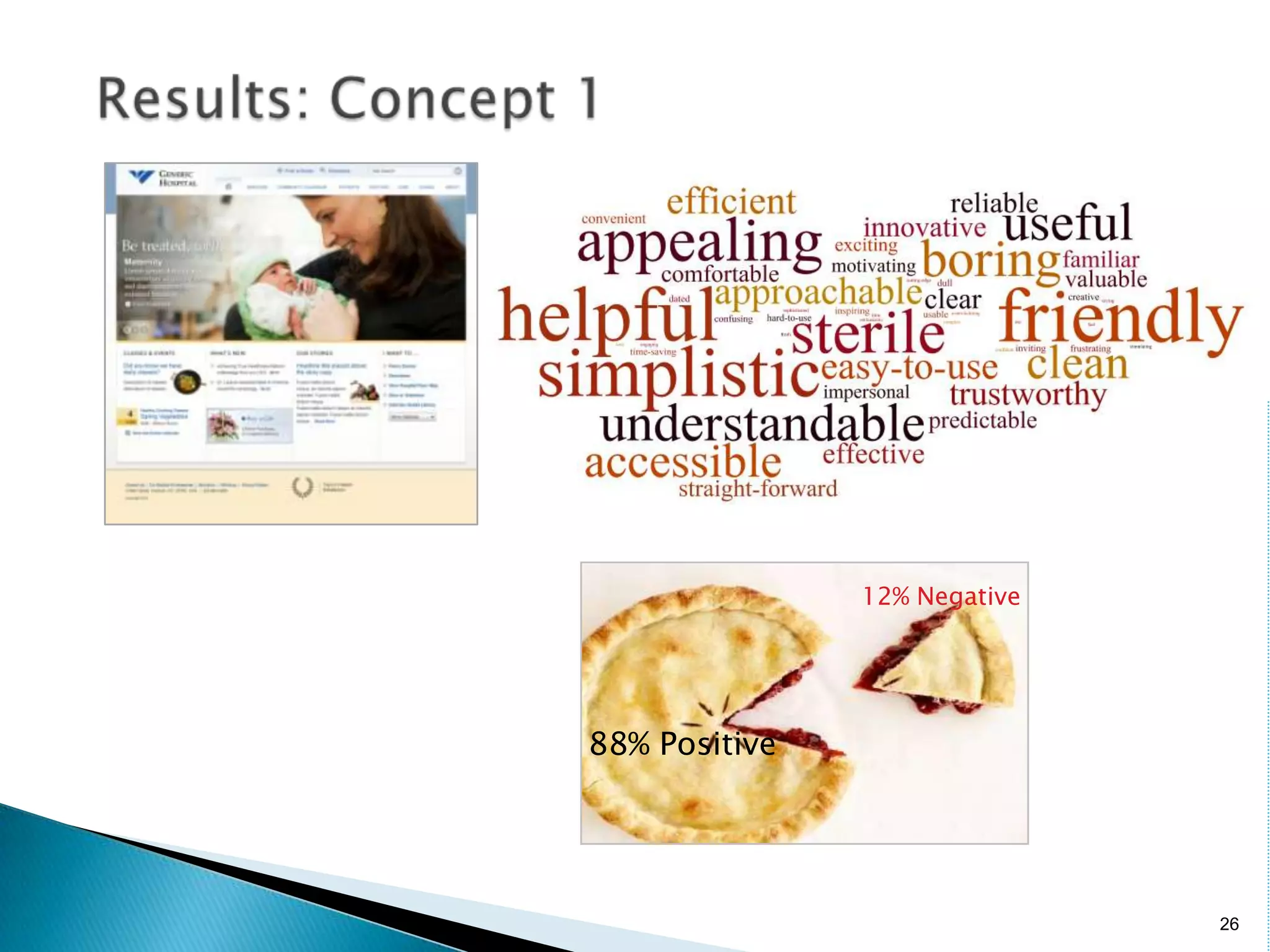

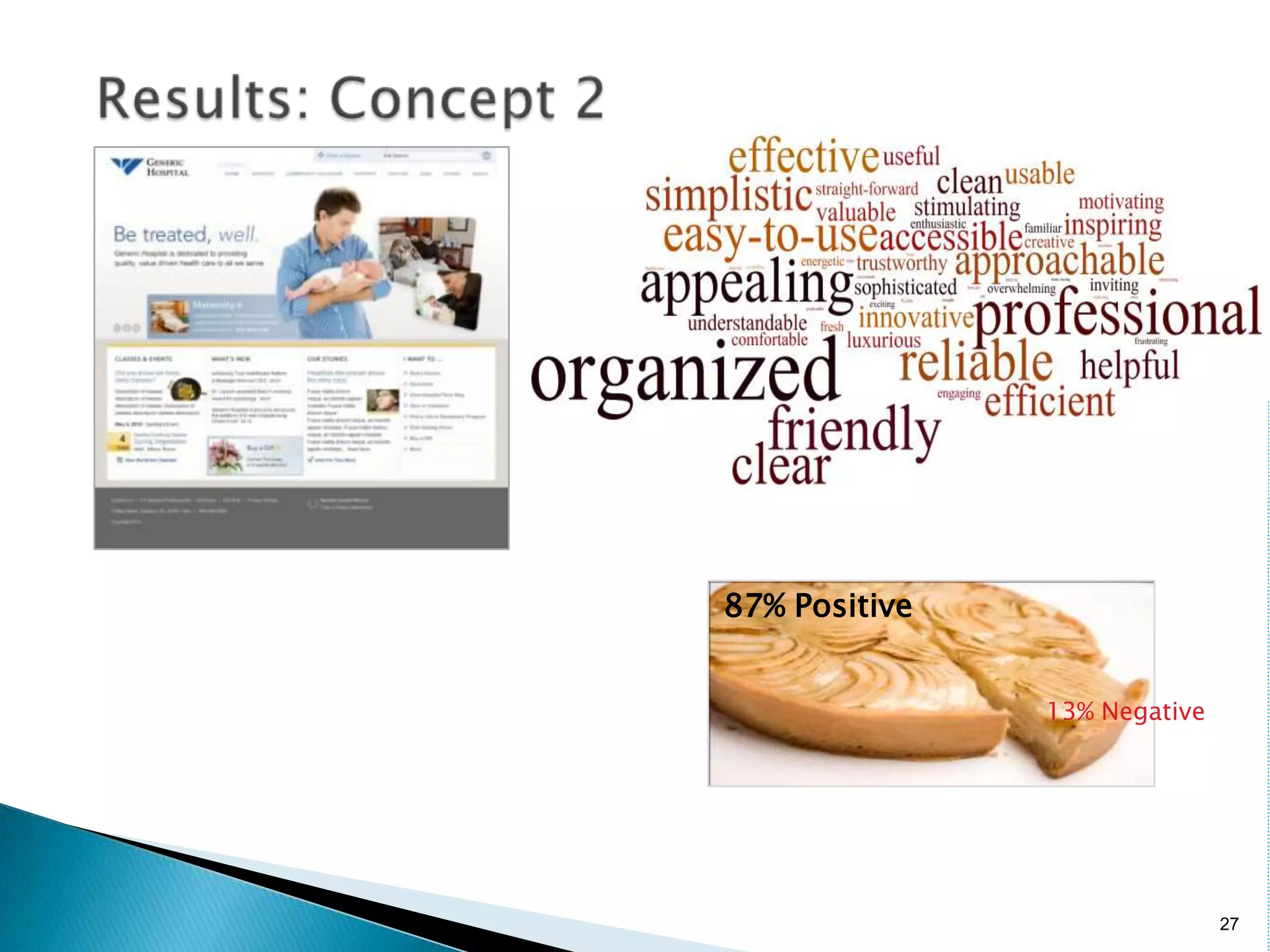

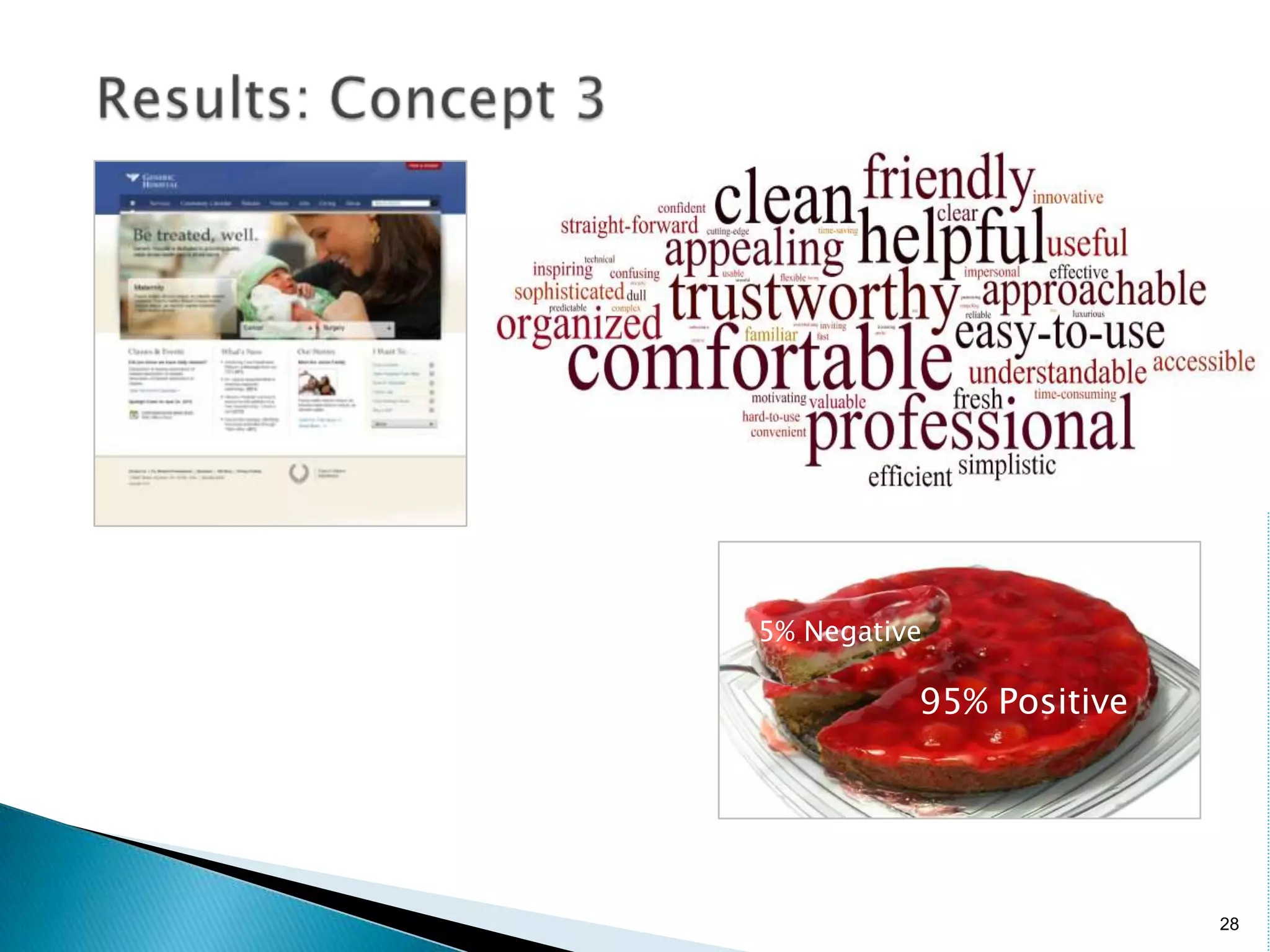

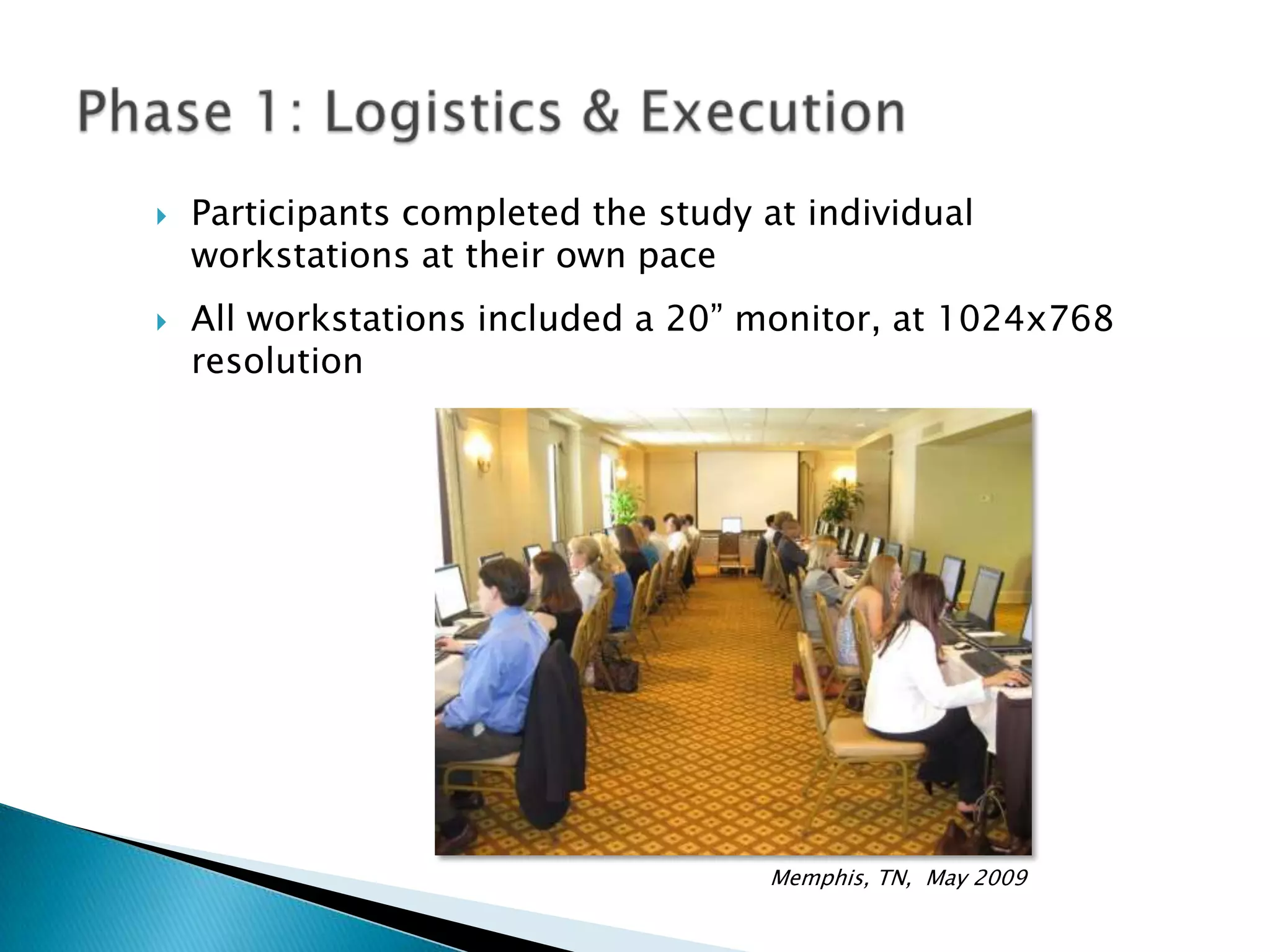

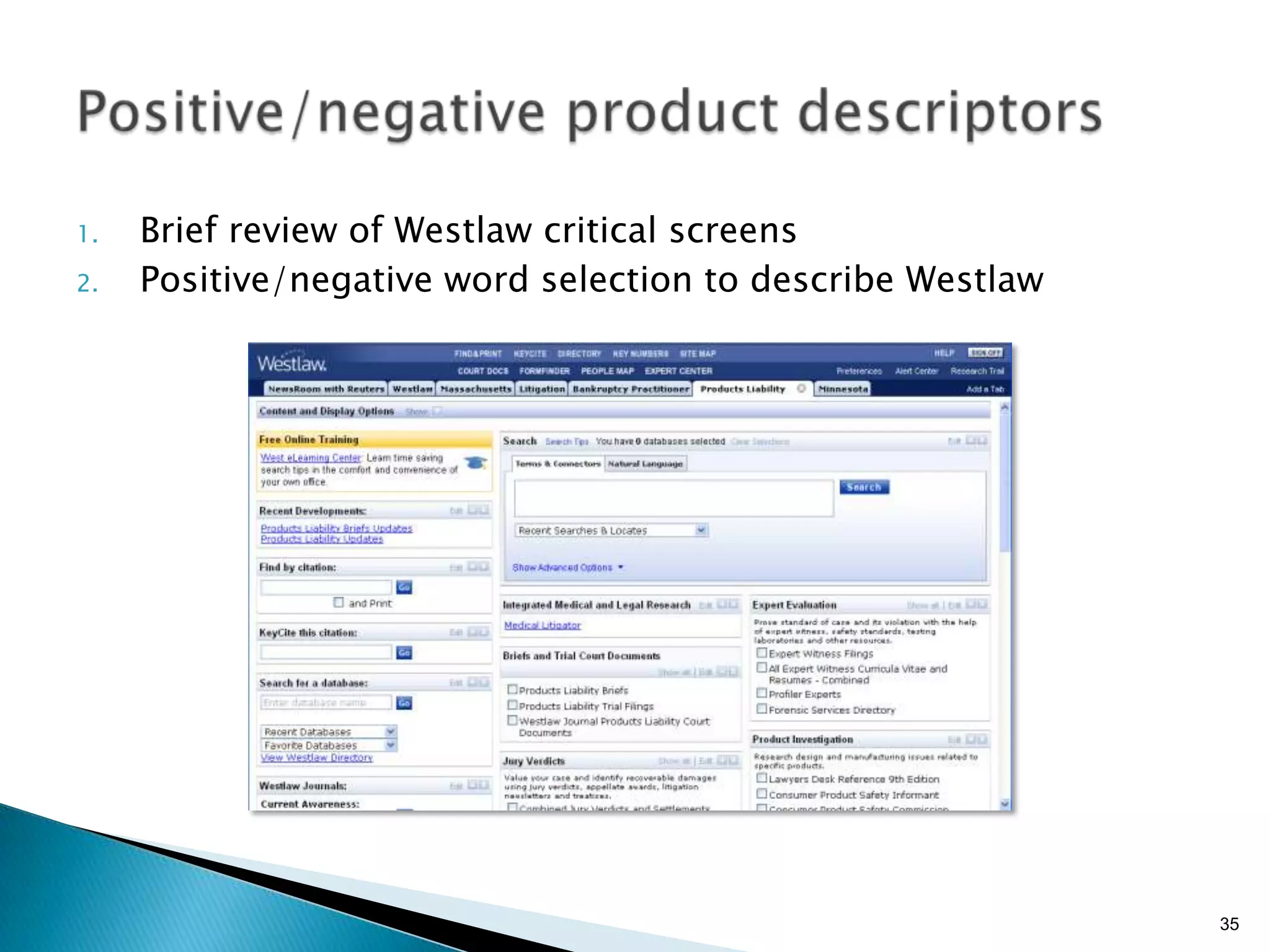

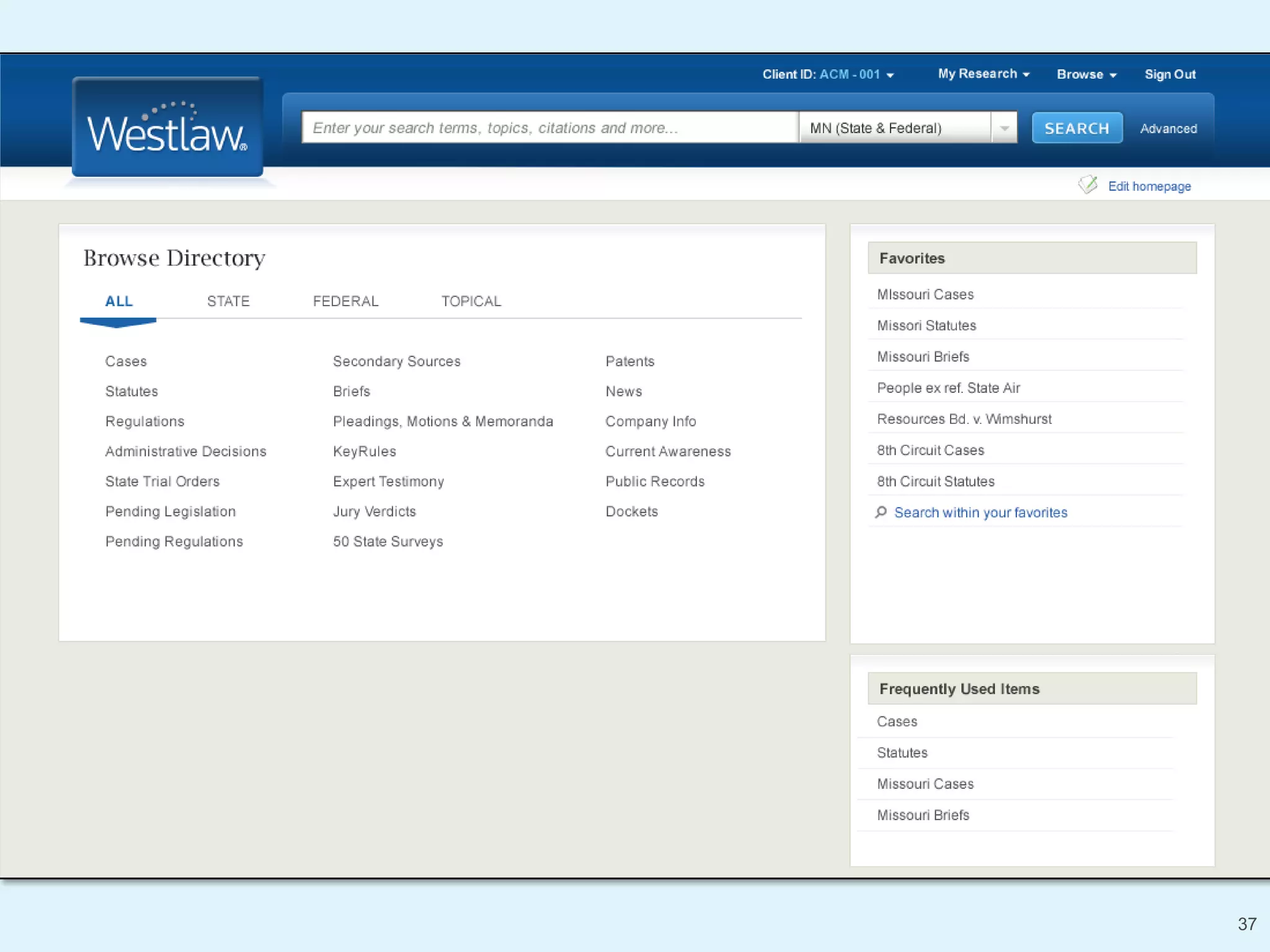

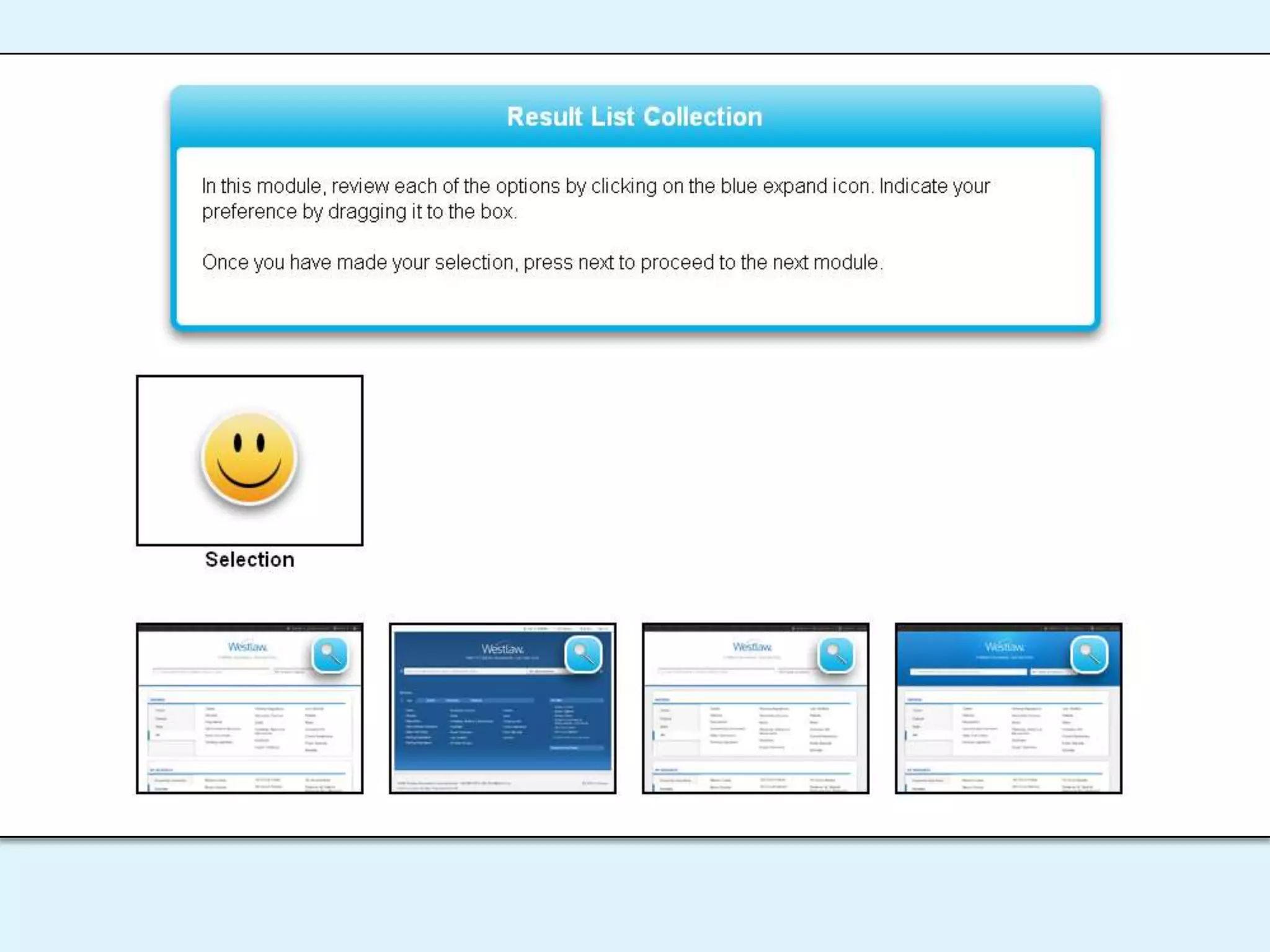

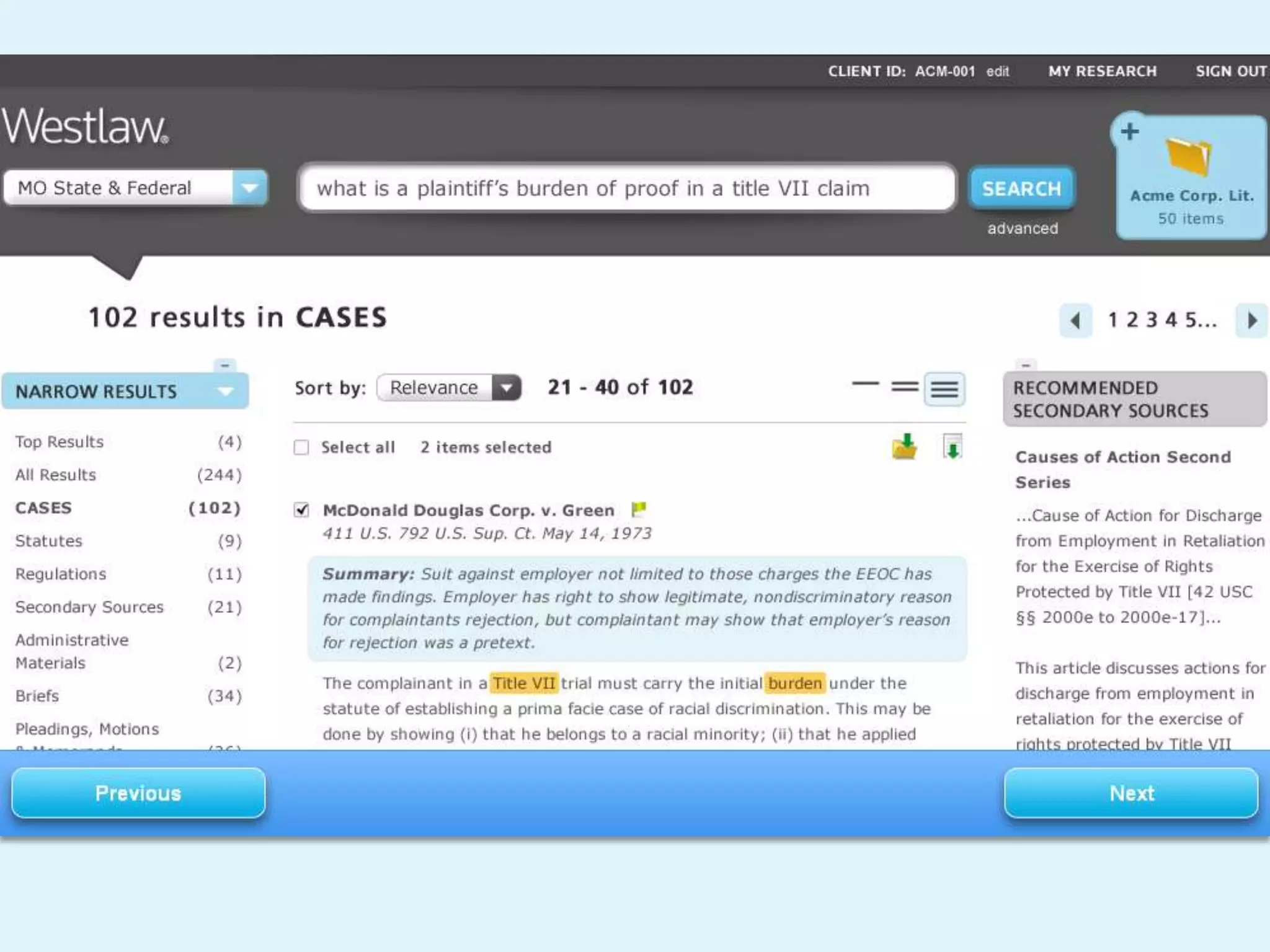

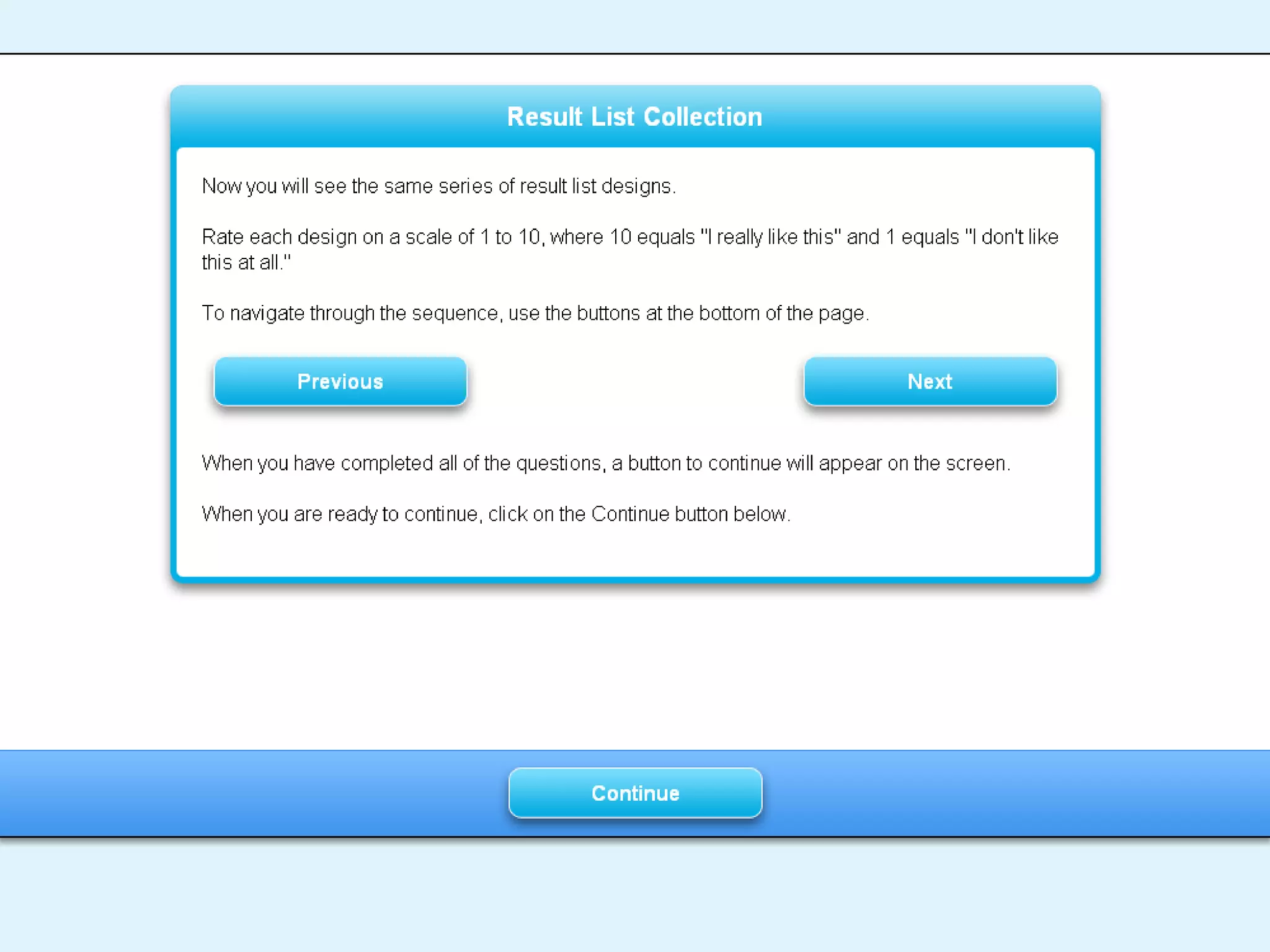

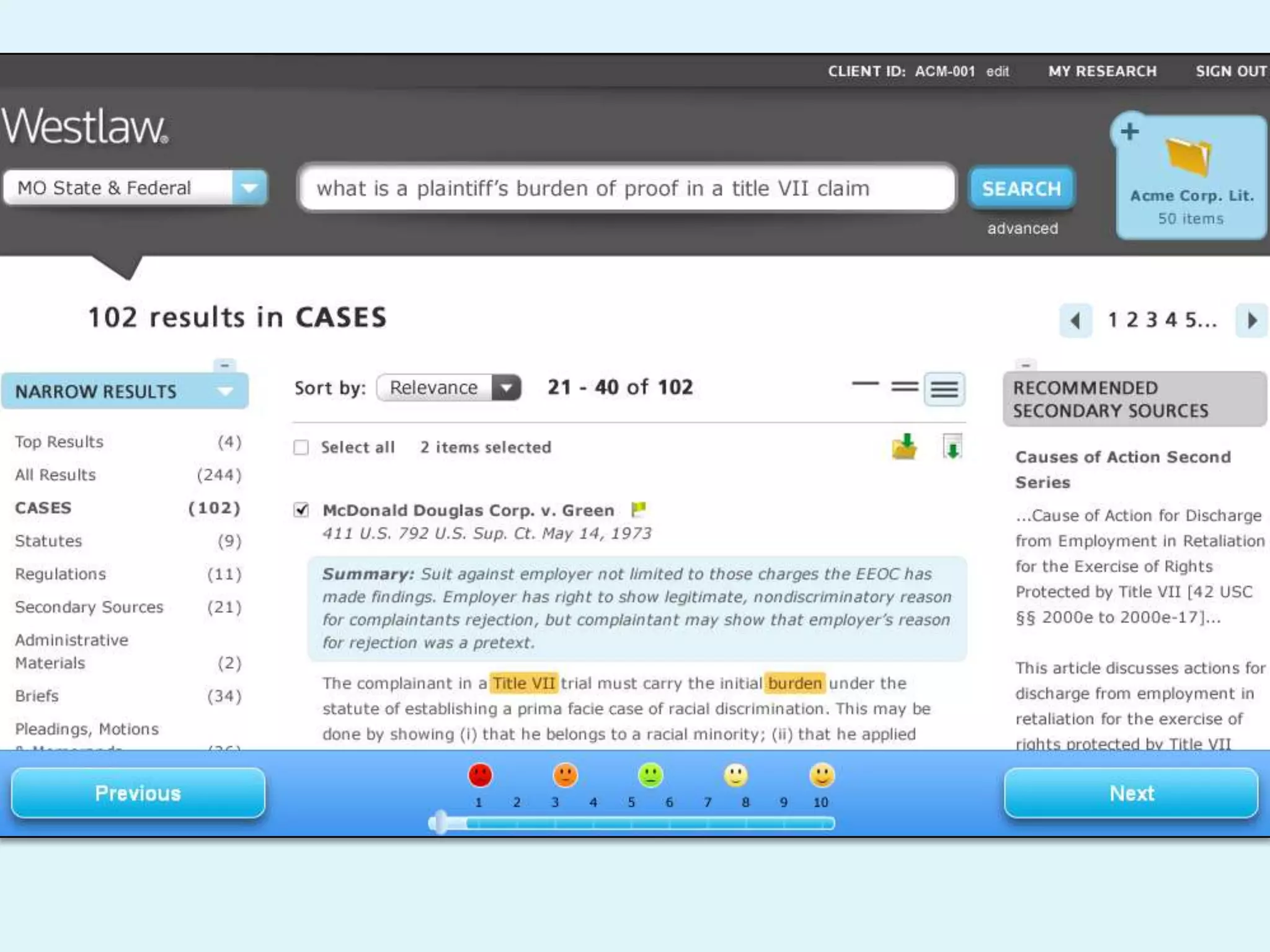

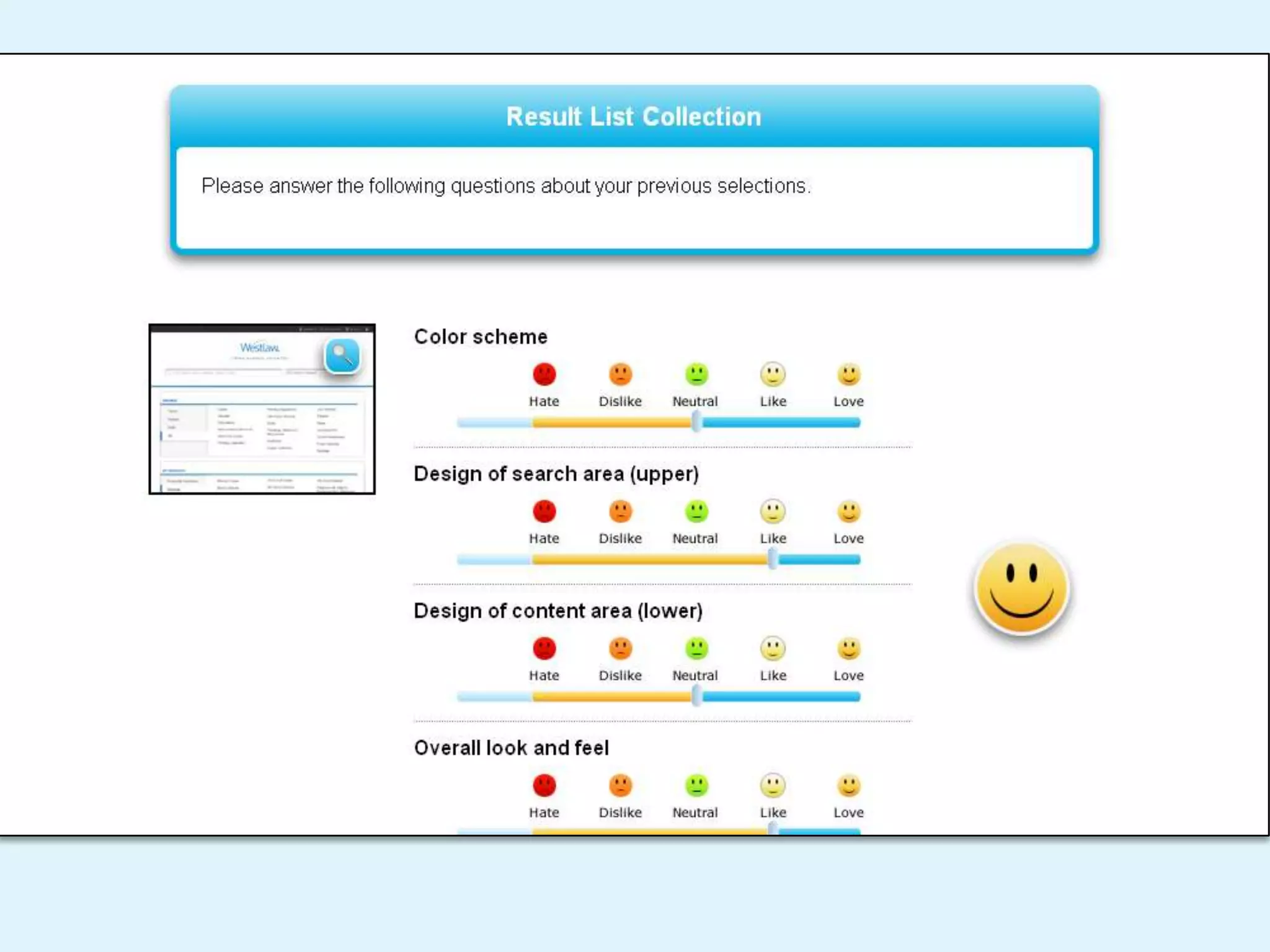

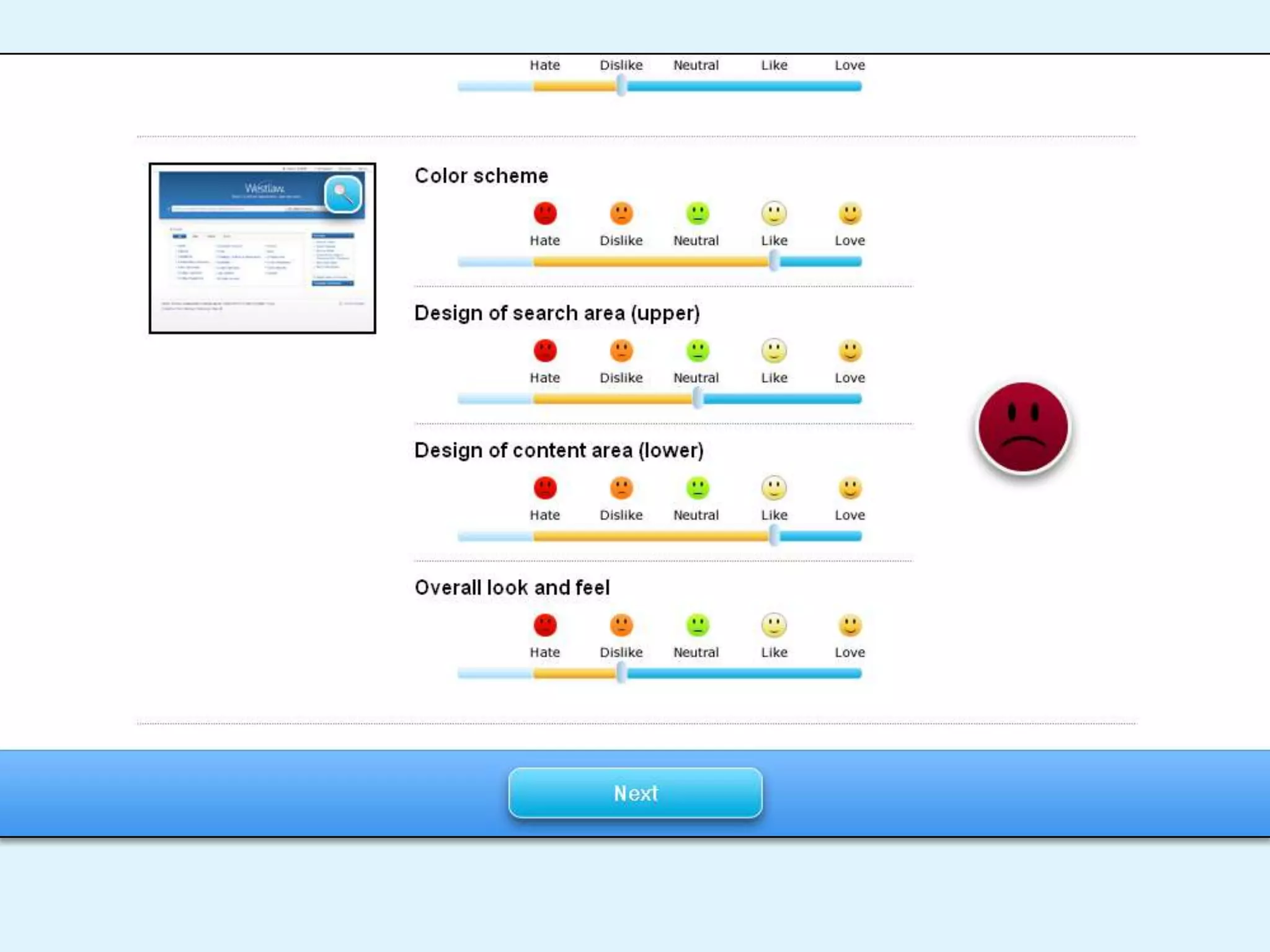

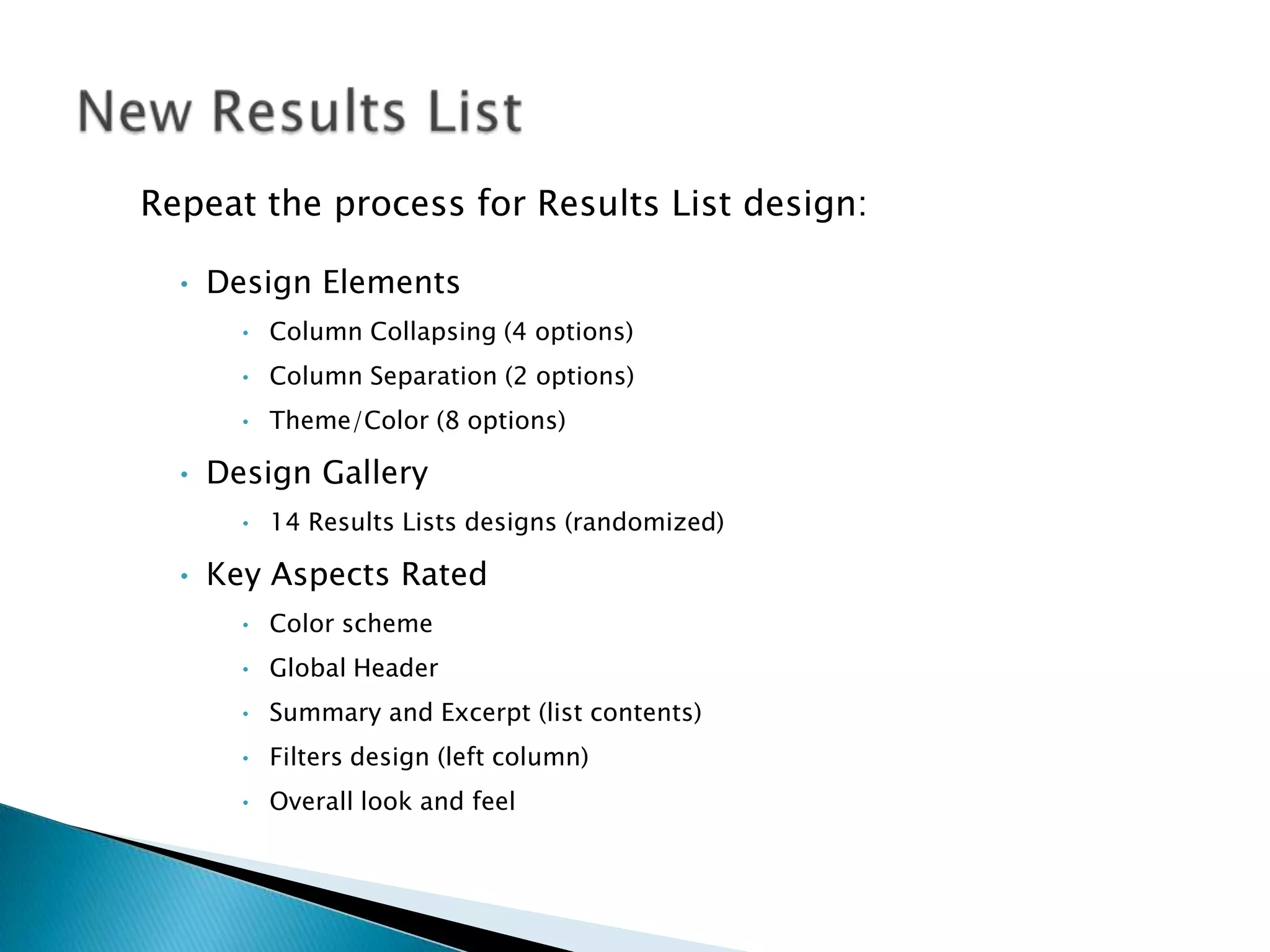

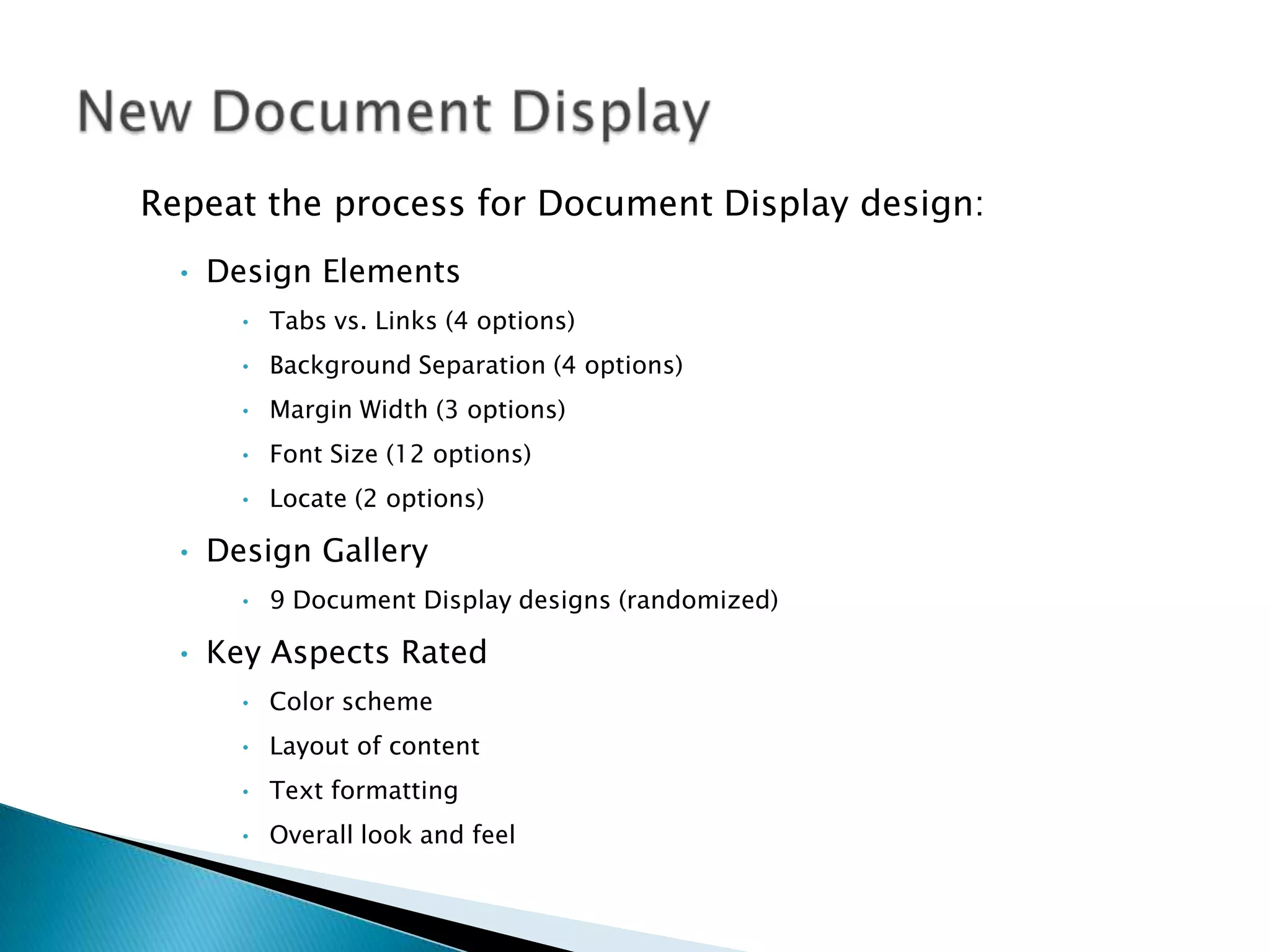

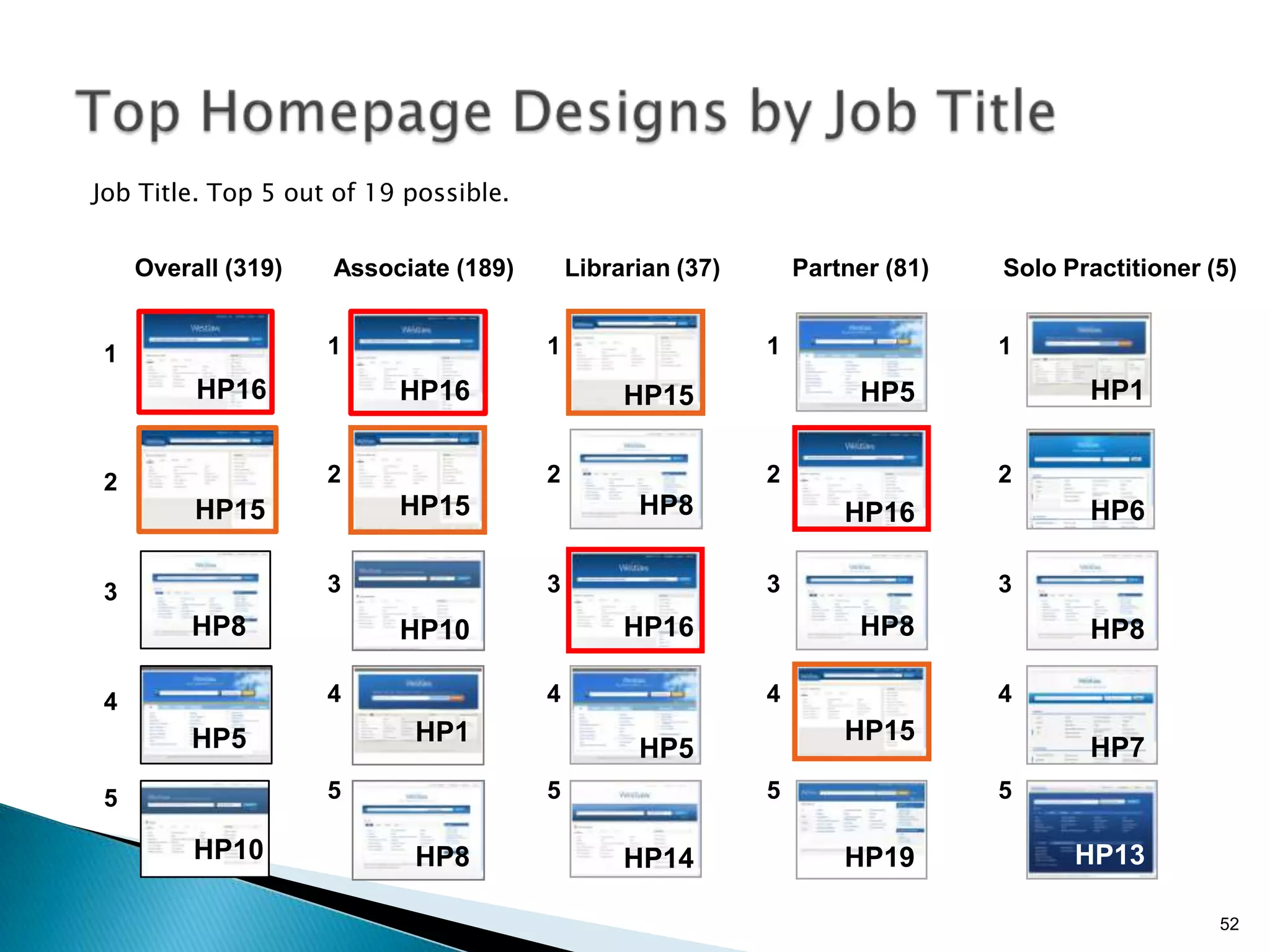

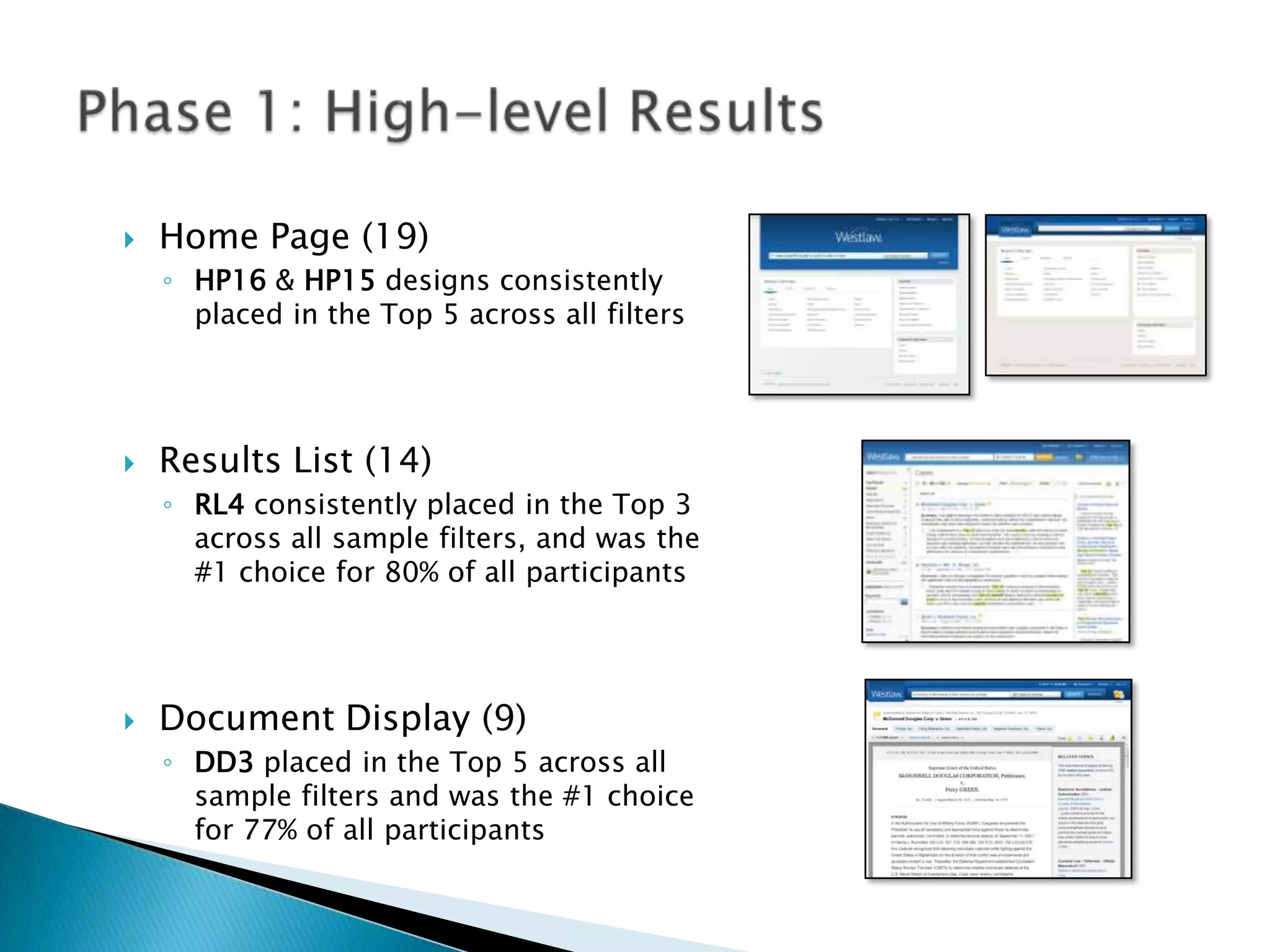

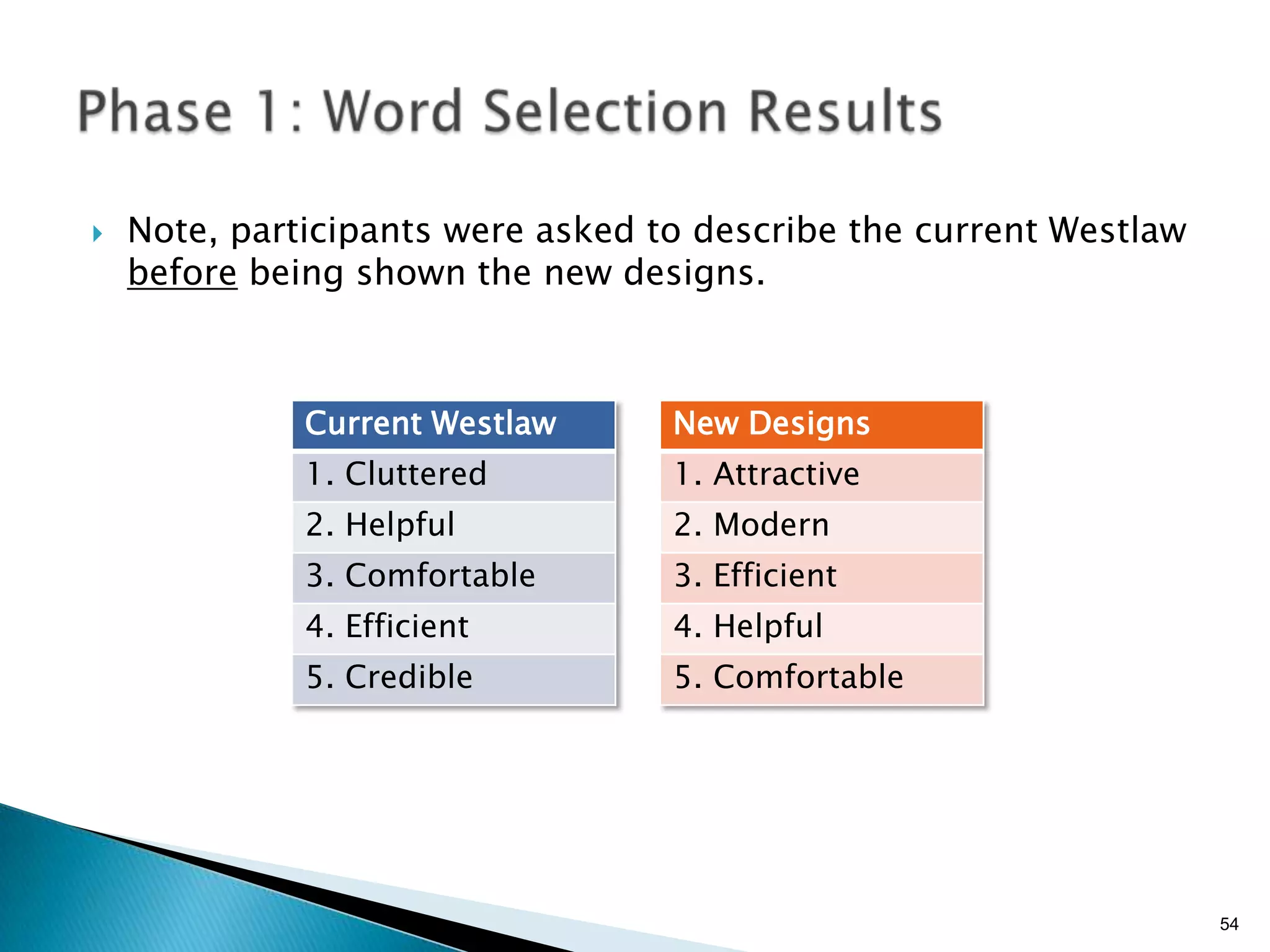

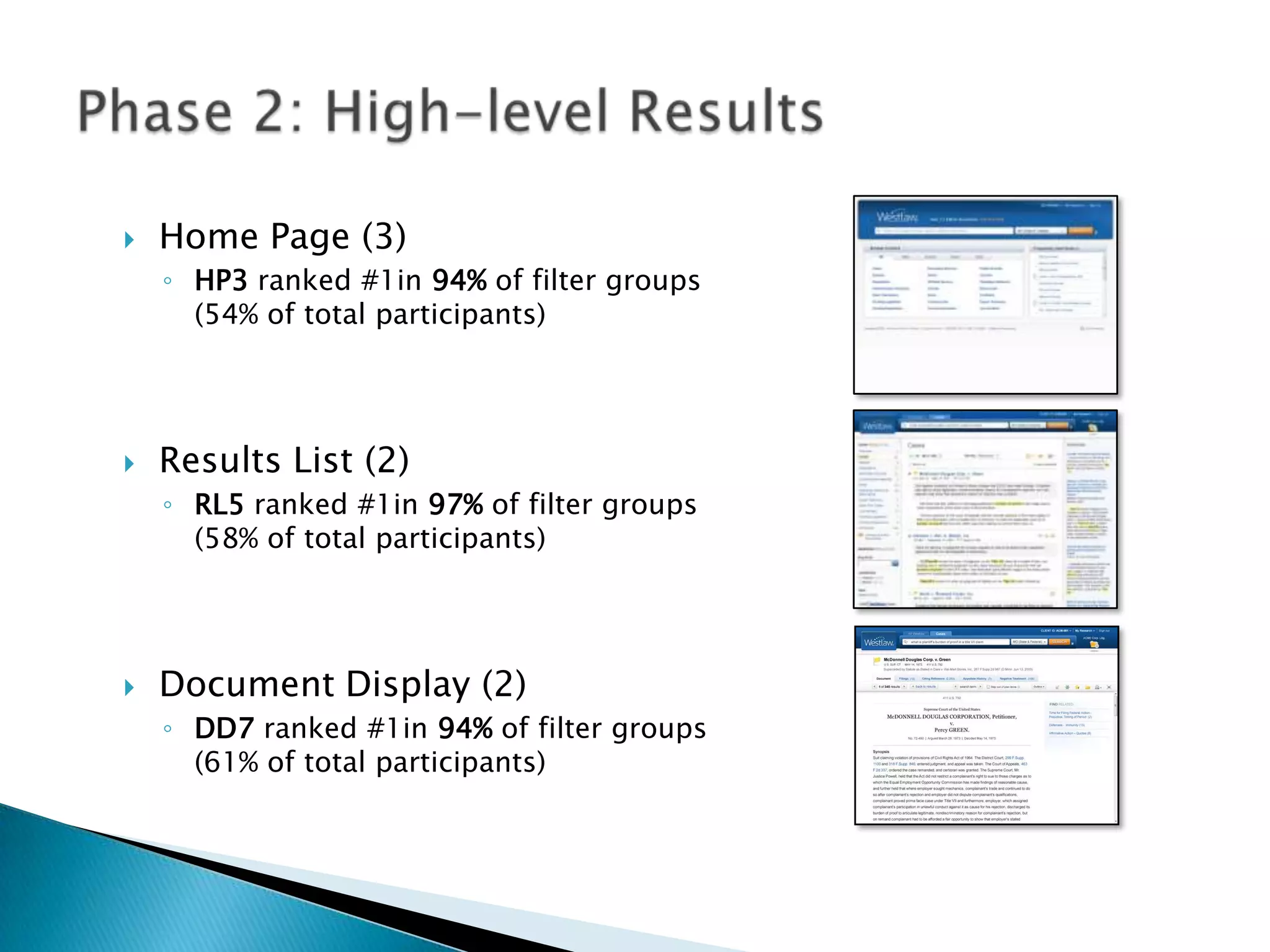

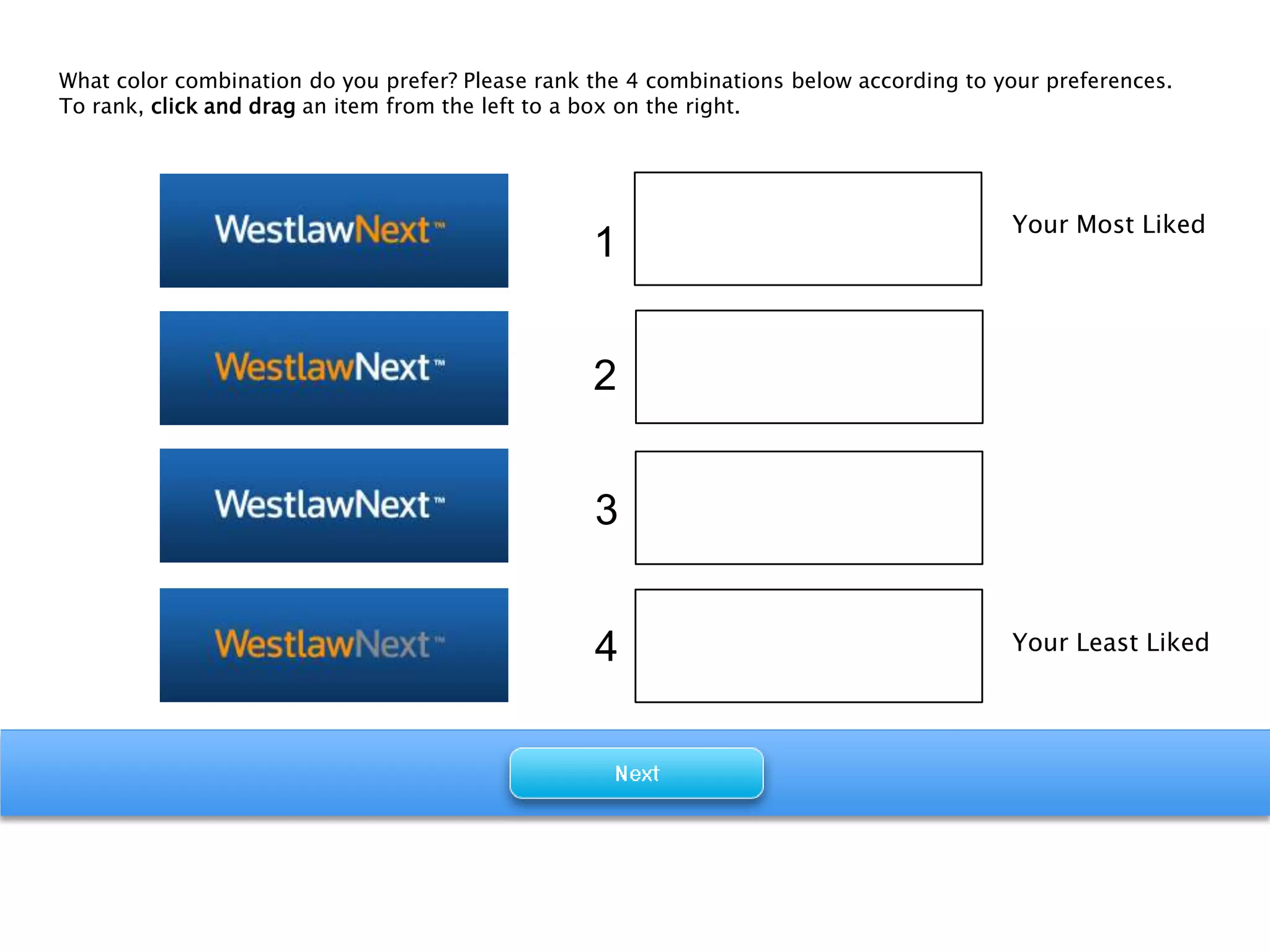

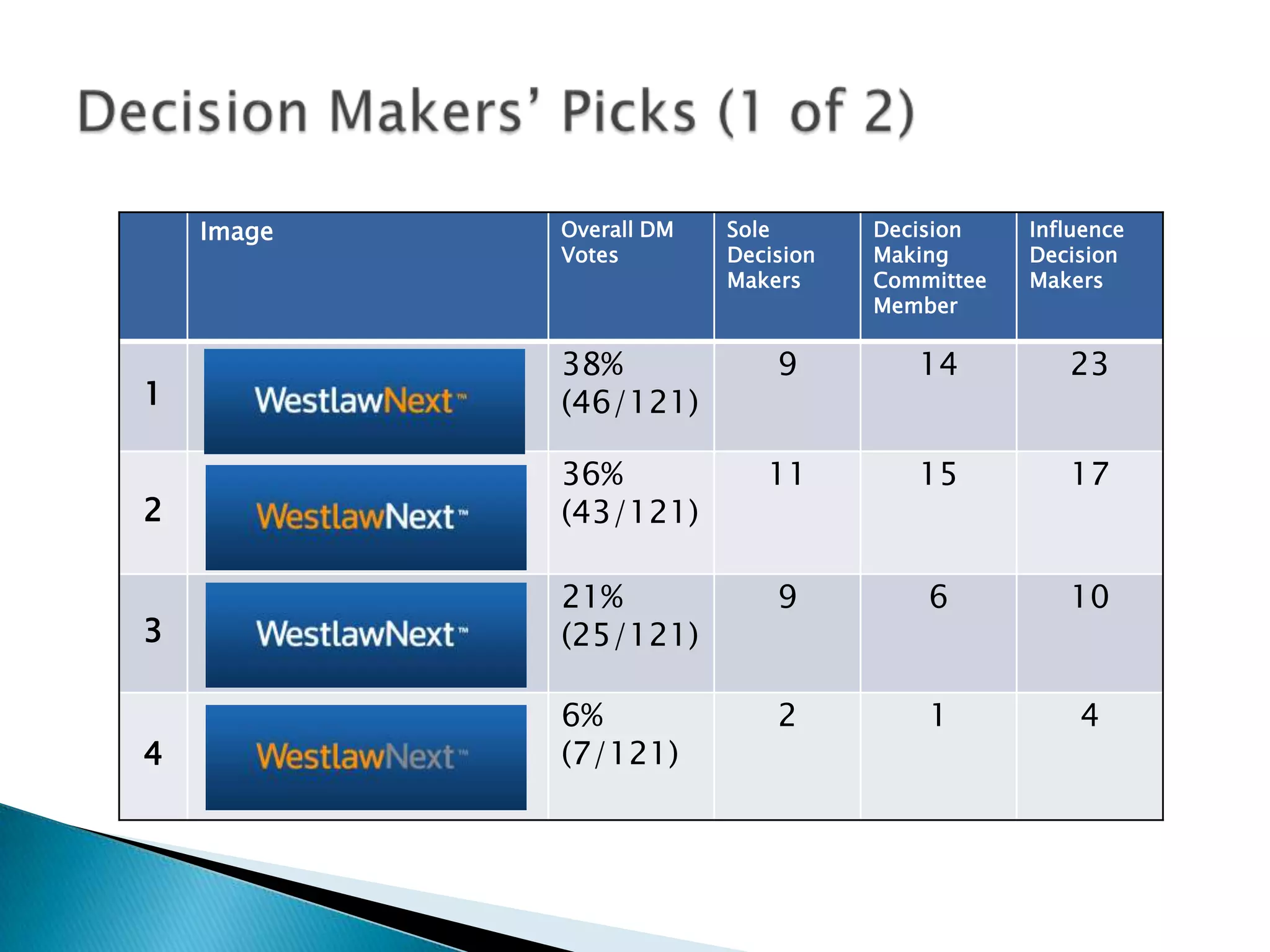

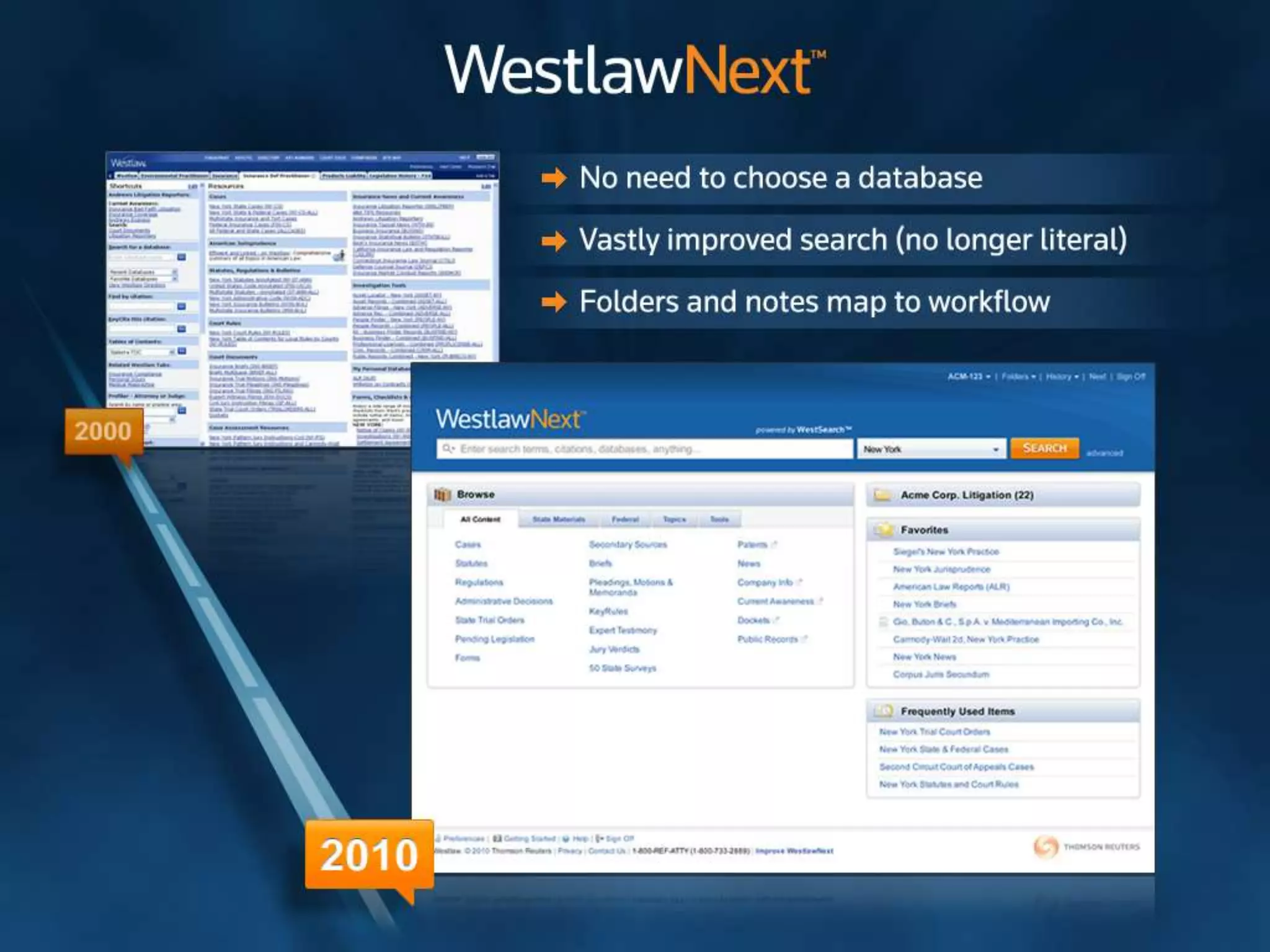

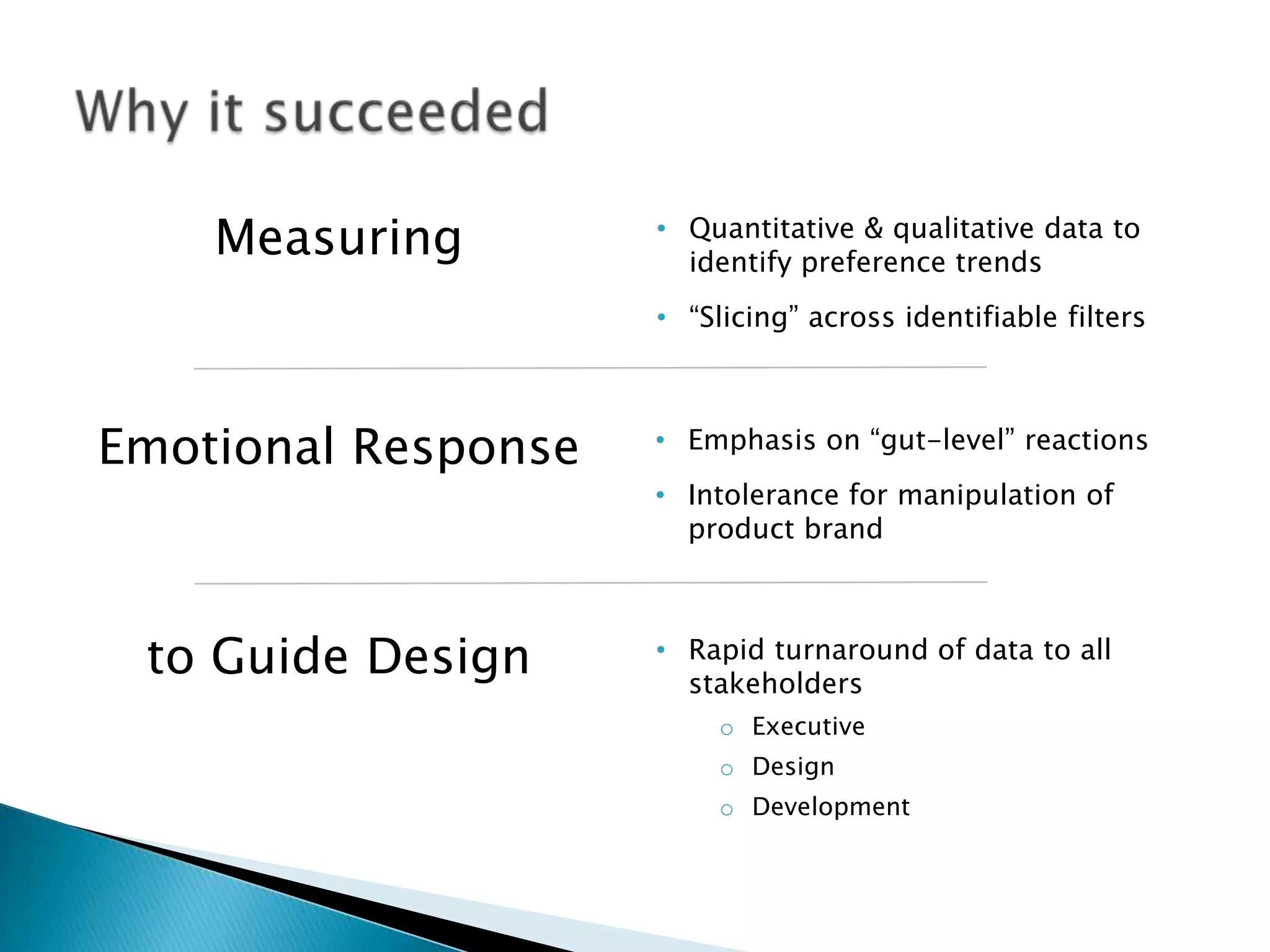

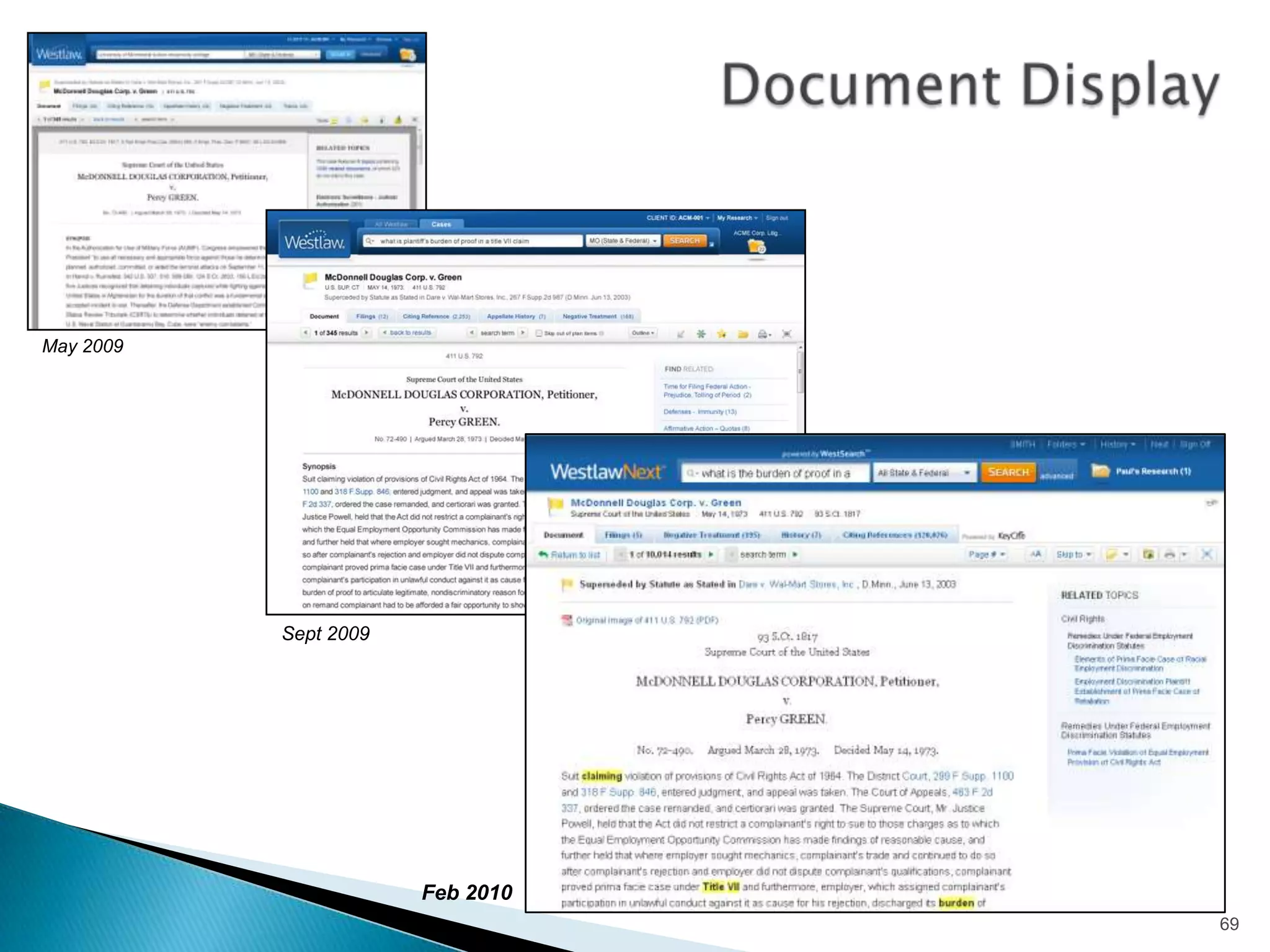

The document presents preference and desirability testing as methods for measuring the emotional responses that guide design decisions. It covers several methodologies and includes case studies on Greenwich Hospital and WestlawNext, which show the value of combining qualitative and quantitative feedback to inform design choices. It also stresses the need to understand visceral emotional reactions and stakeholder preferences during the design process.