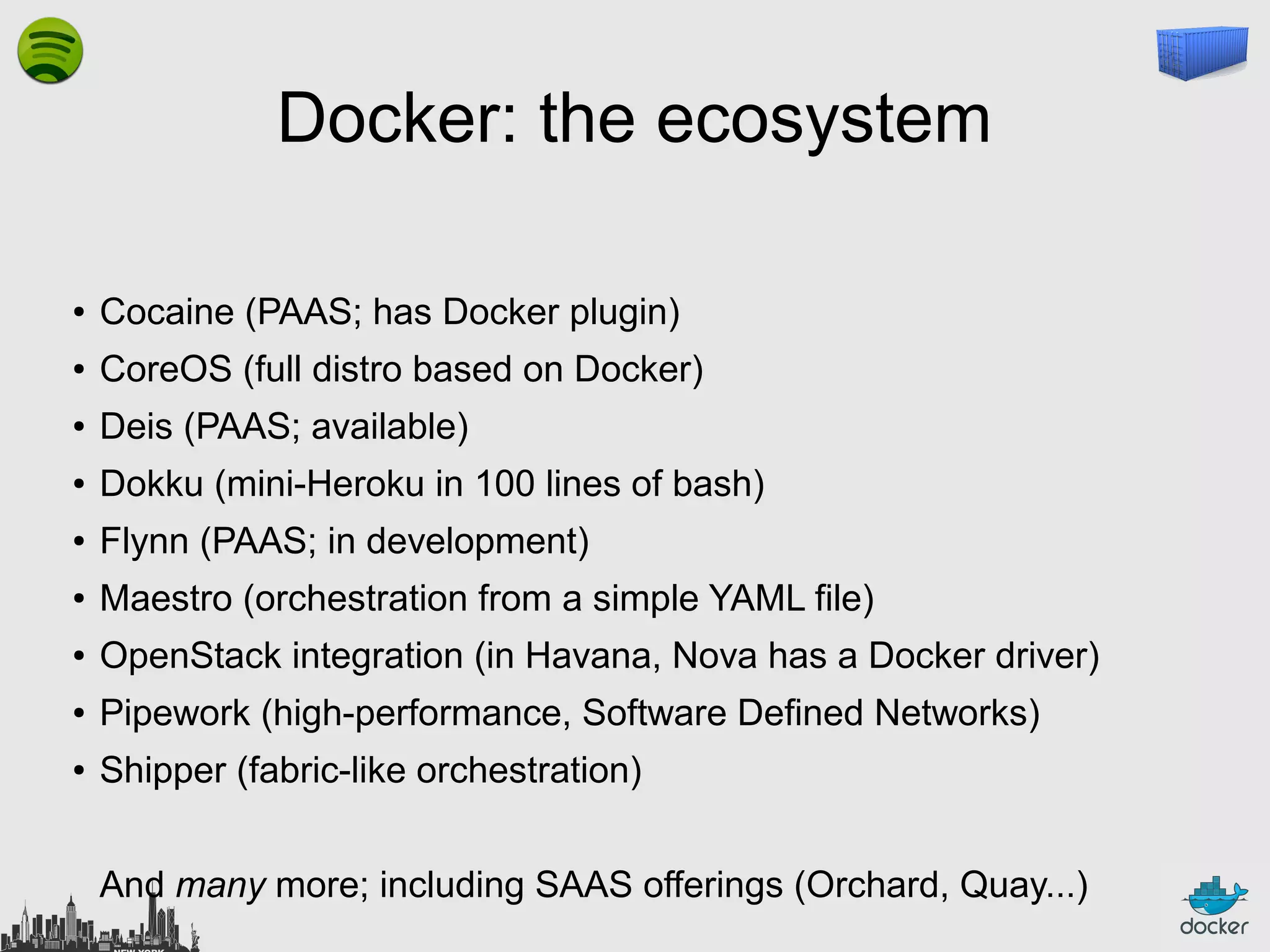

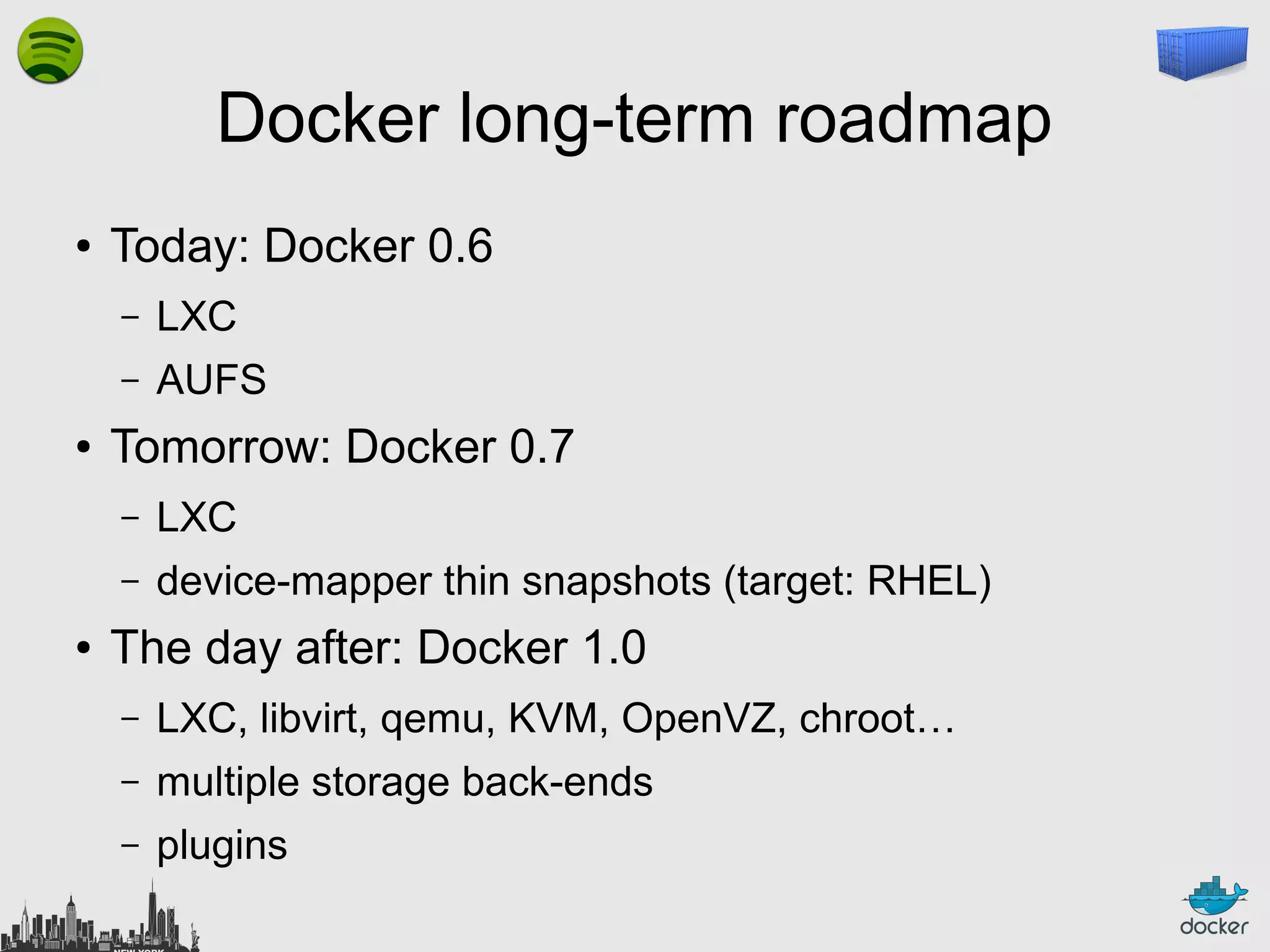

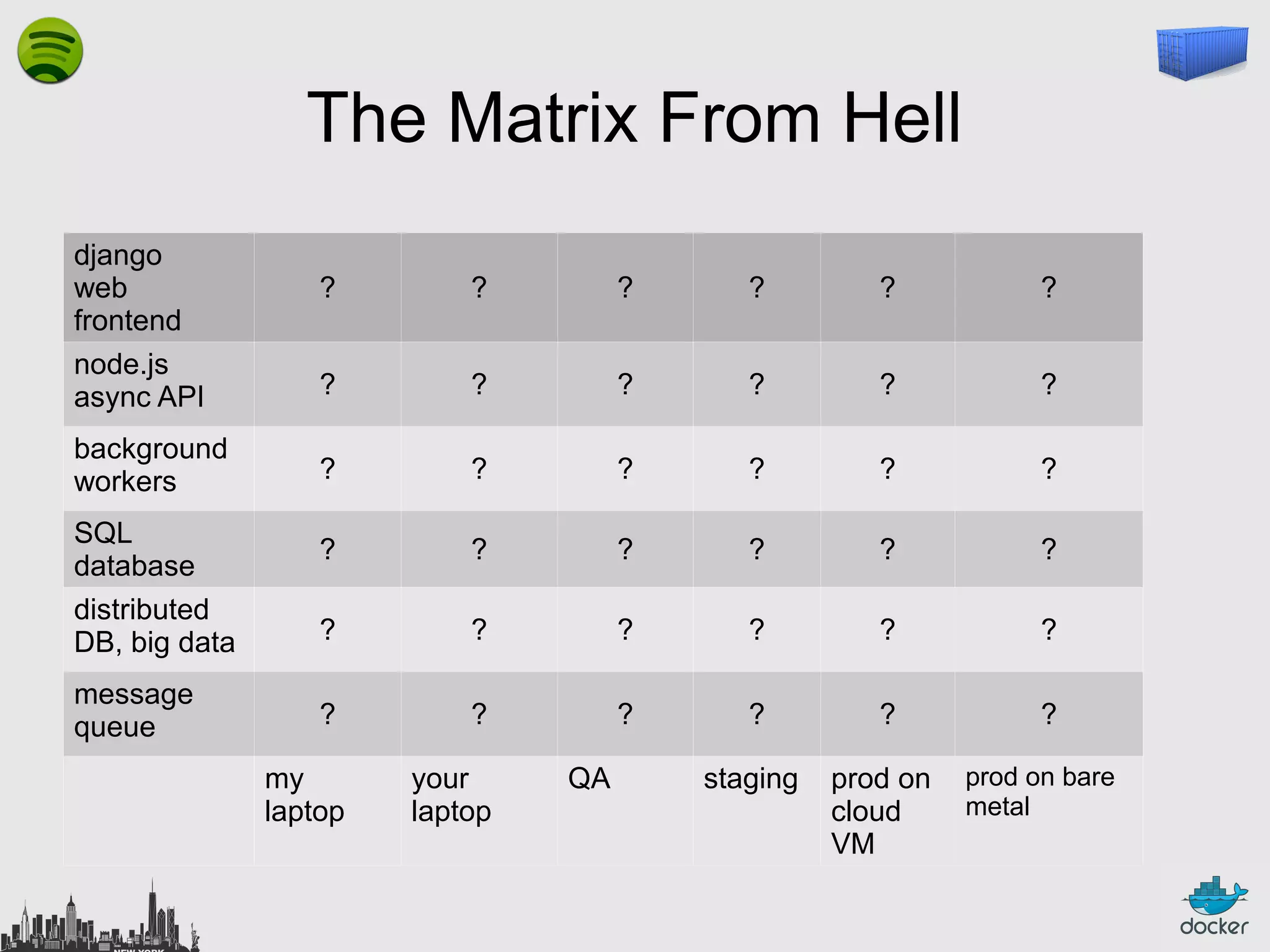

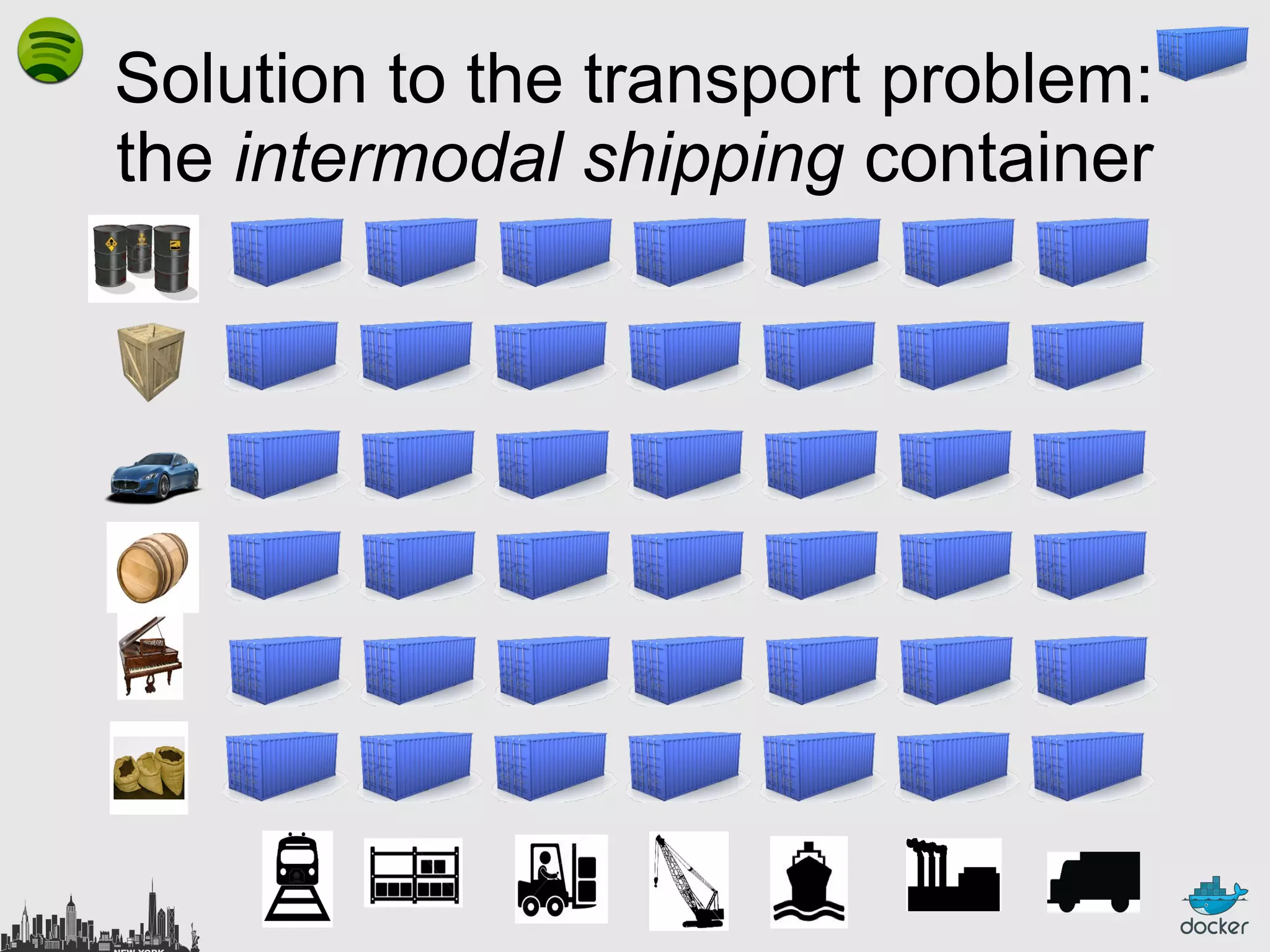

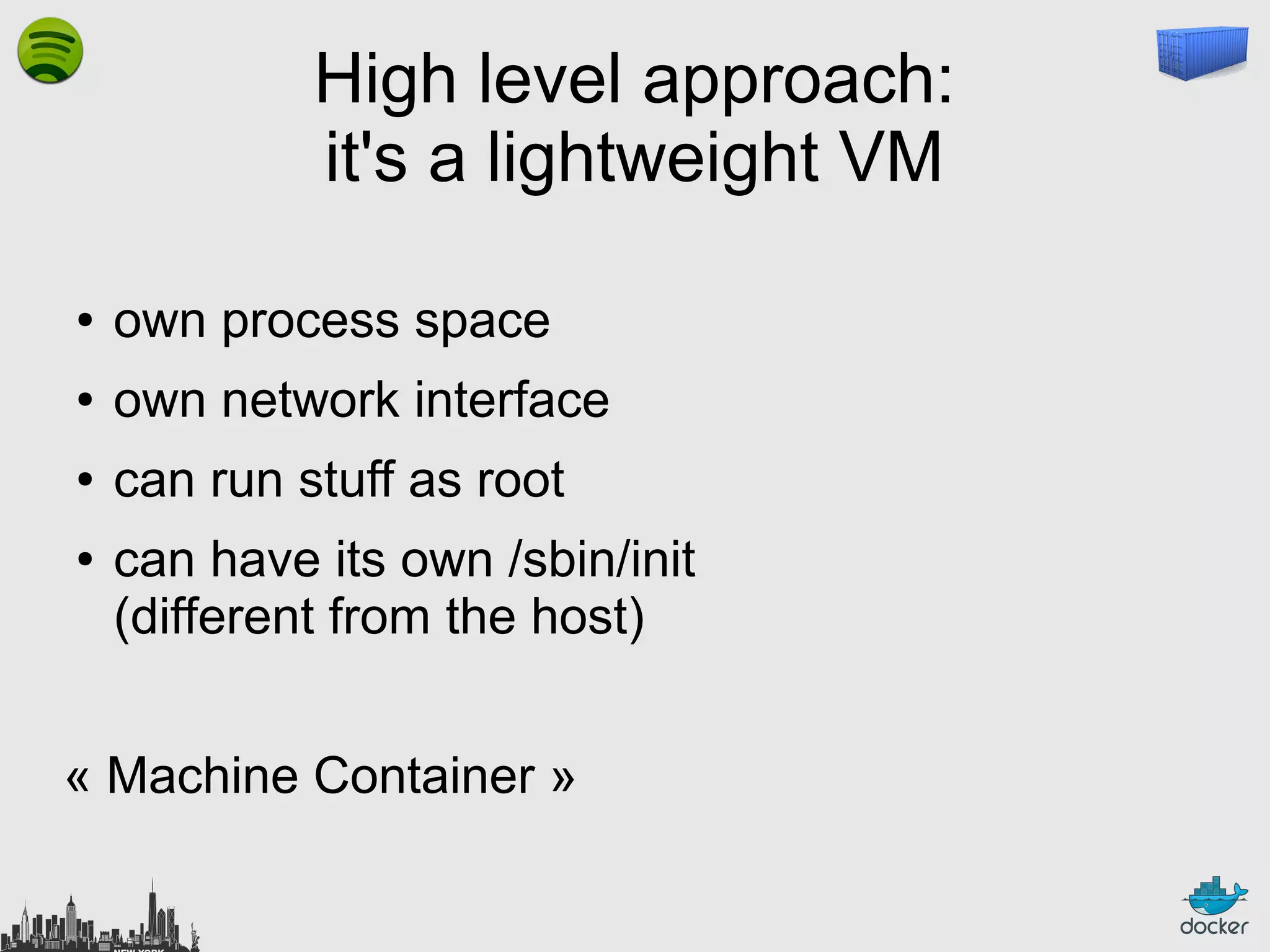

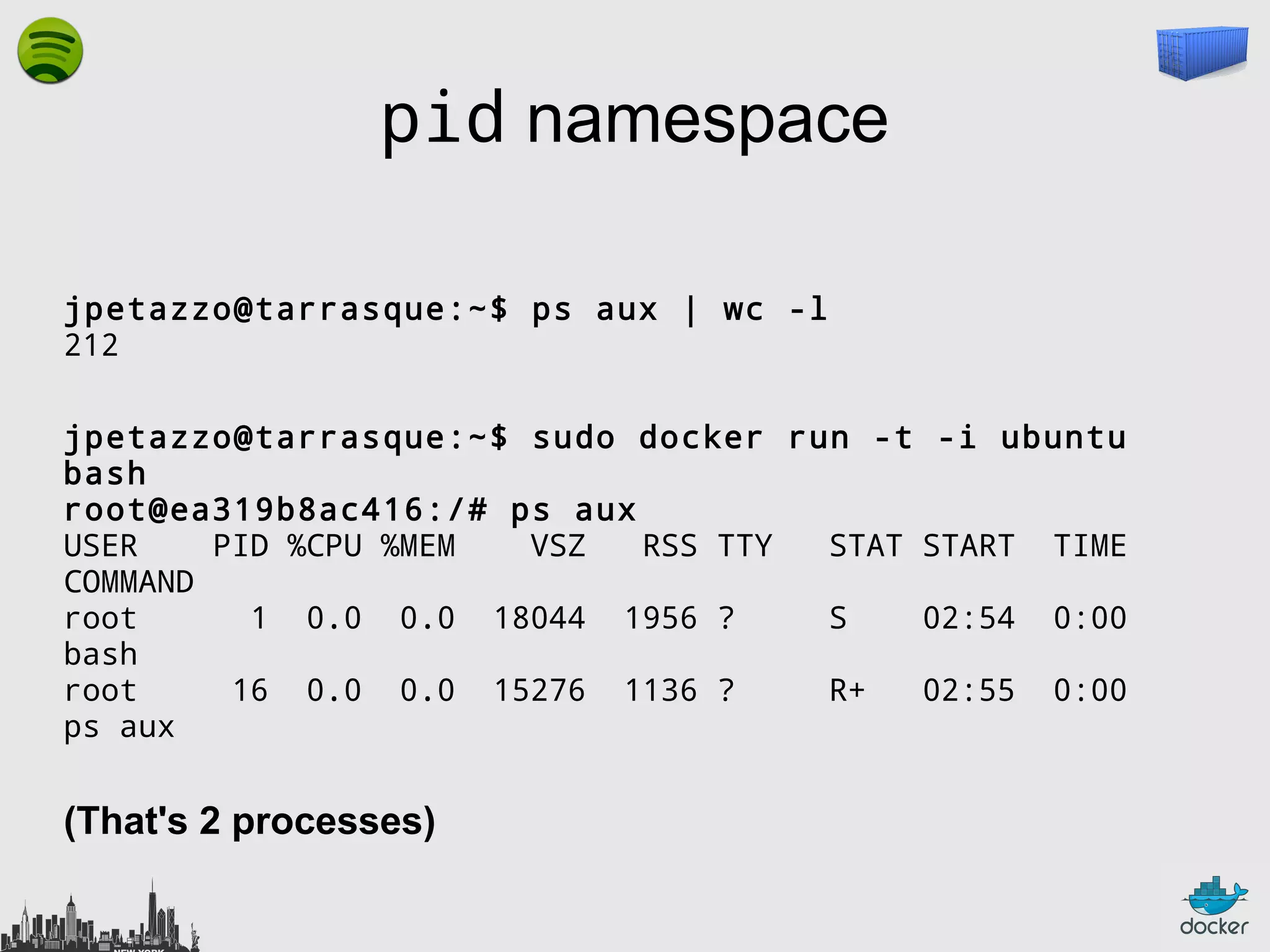

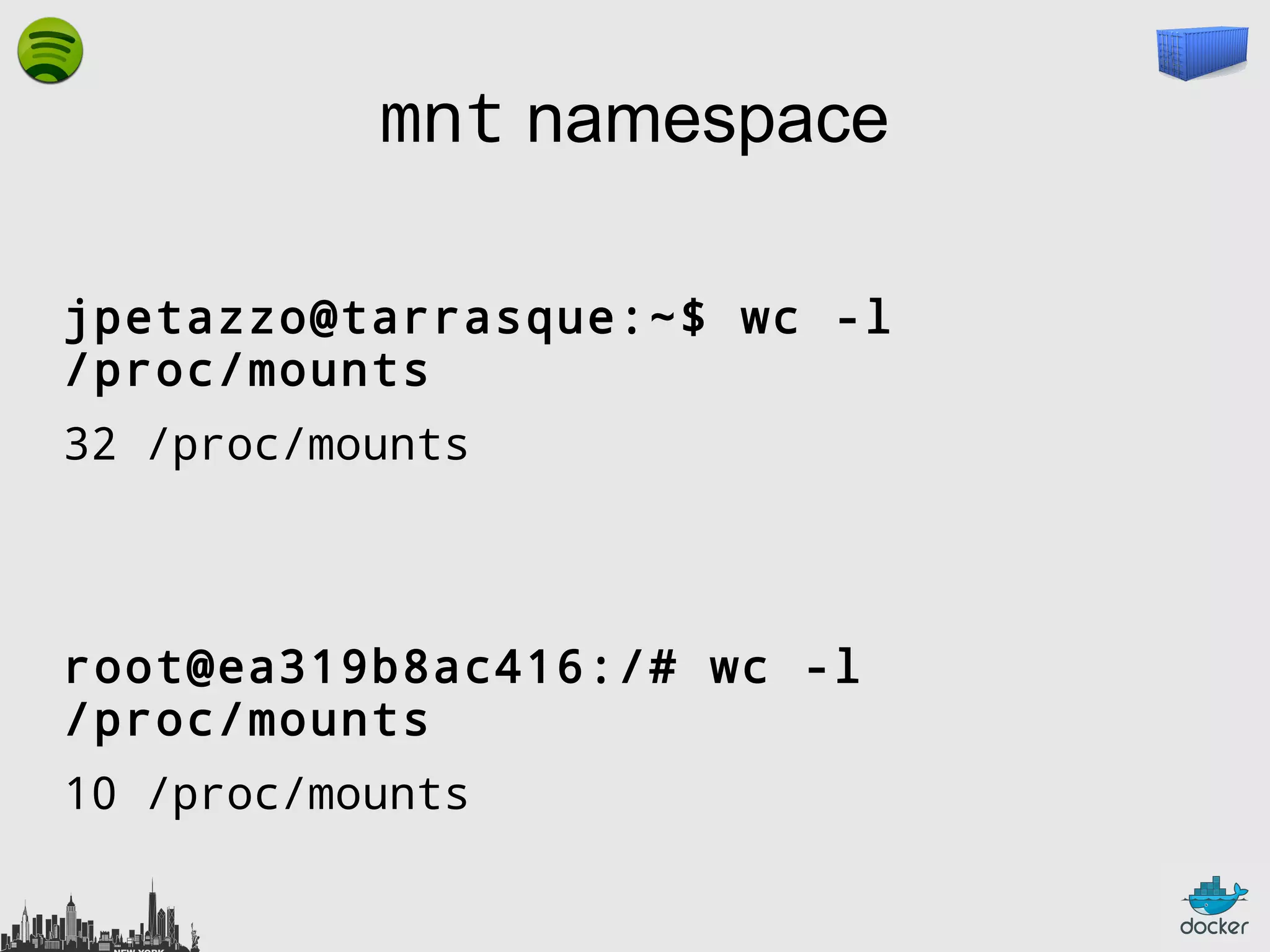

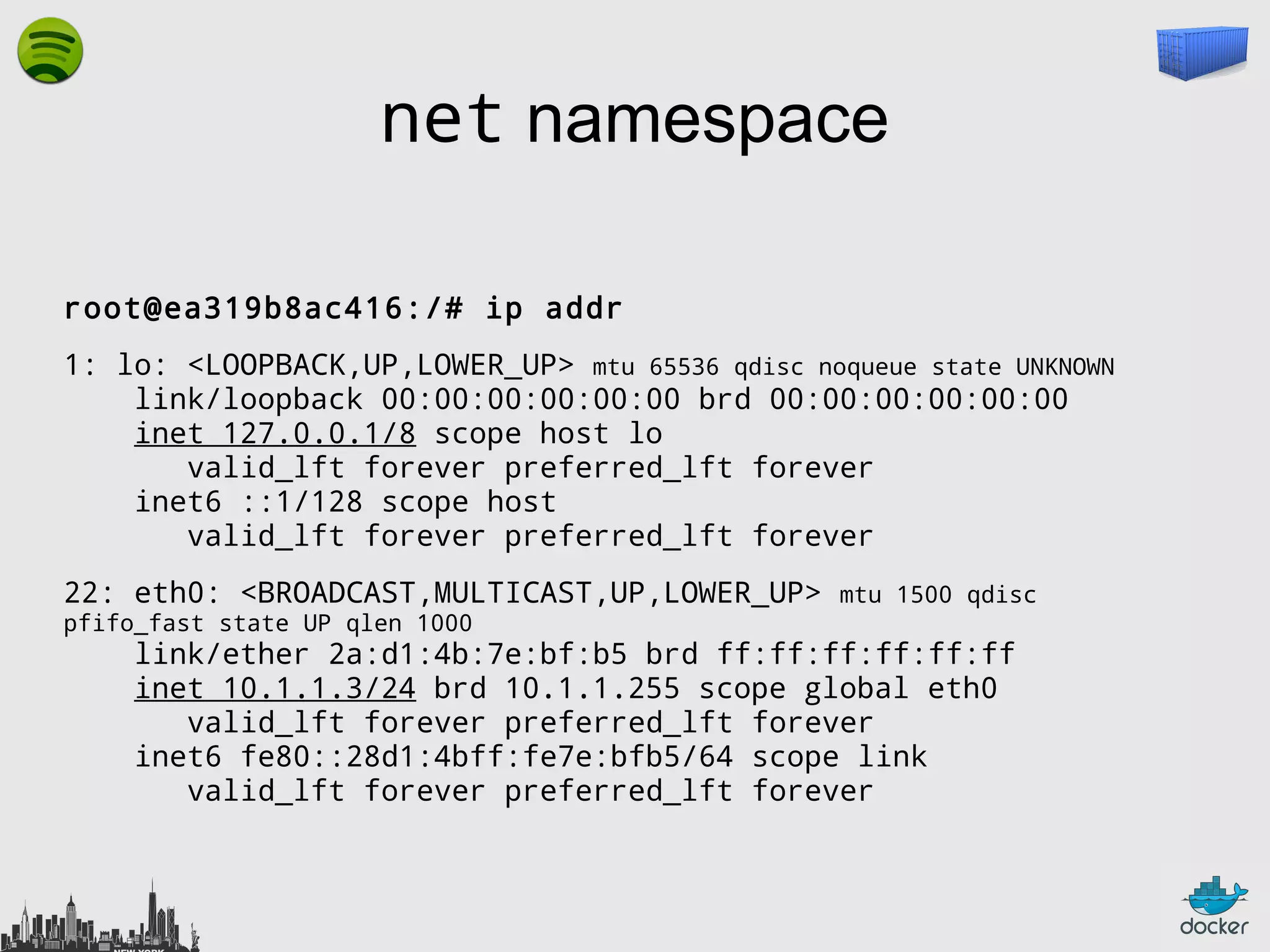

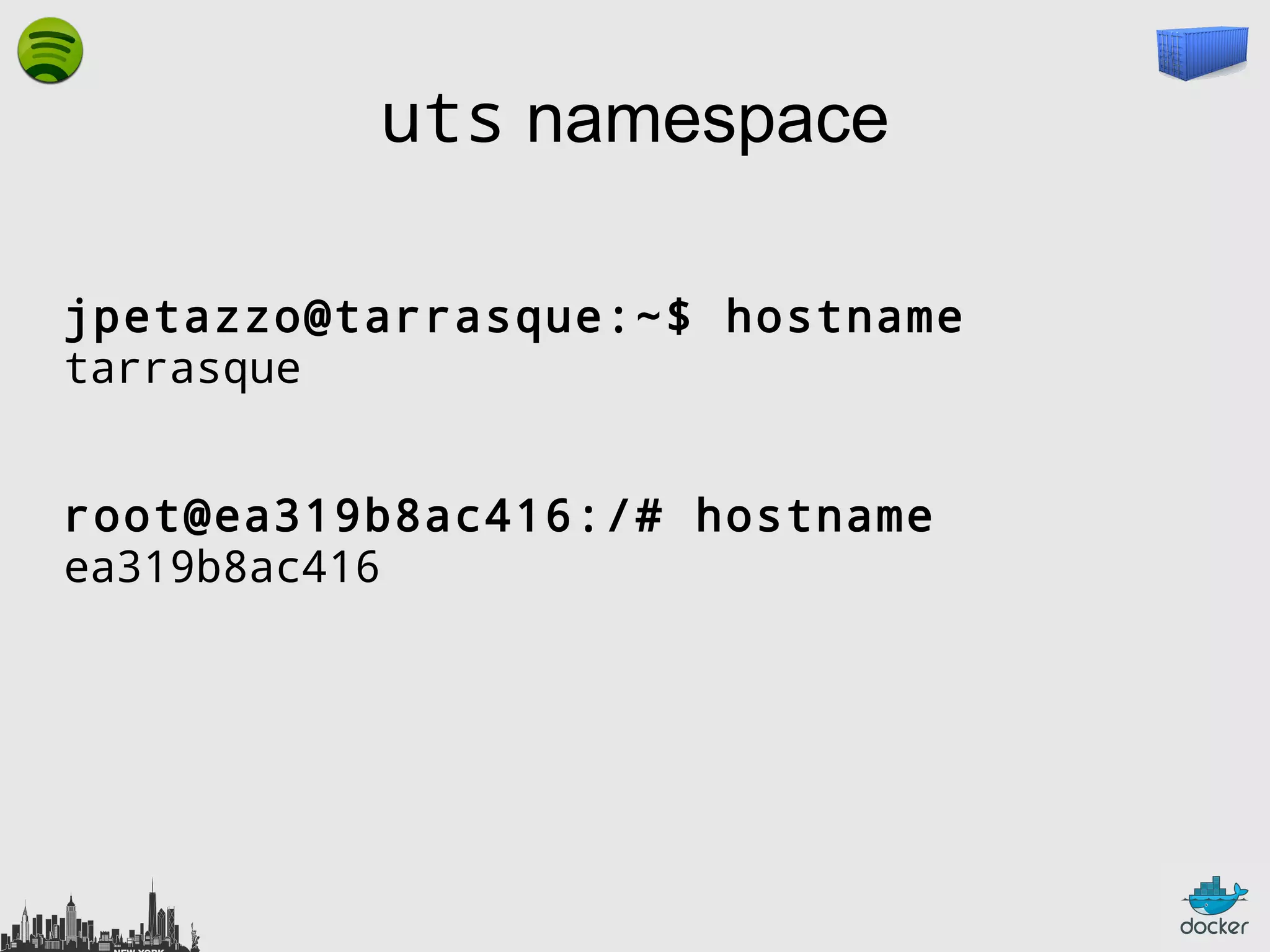

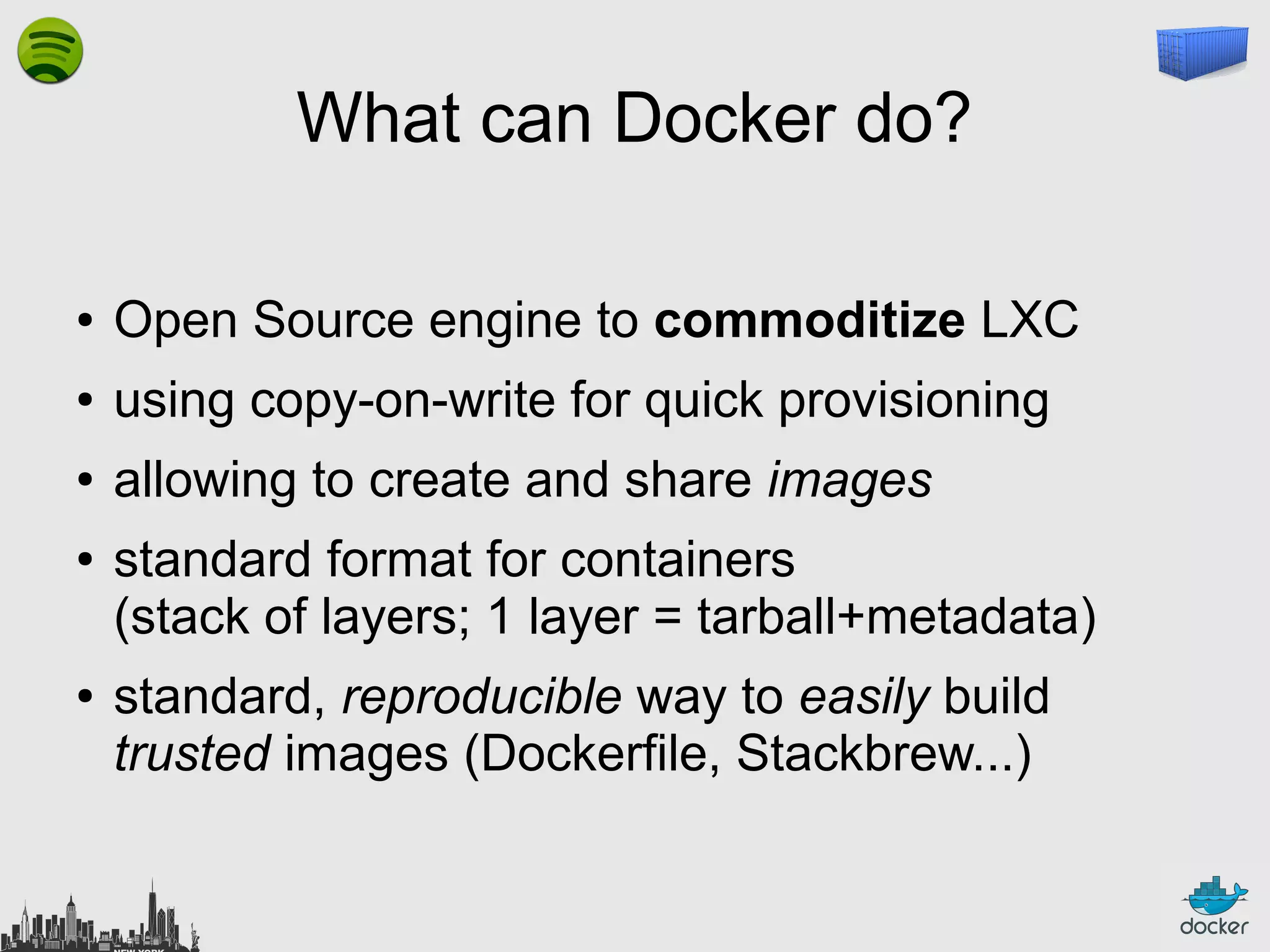

The document explains how Linux containers and Docker address complex deployment problems in software development, comparing containerization to the intermodal shipping container in logistics. It describes Linux containers as lightweight virtualization that improves efficiency and simplifies software delivery, and presents Docker as an open-source technology for managing them. It also covers future directions for Docker and its ecosystem, highlighting Docker's growing community and the importance of orchestration in container management.

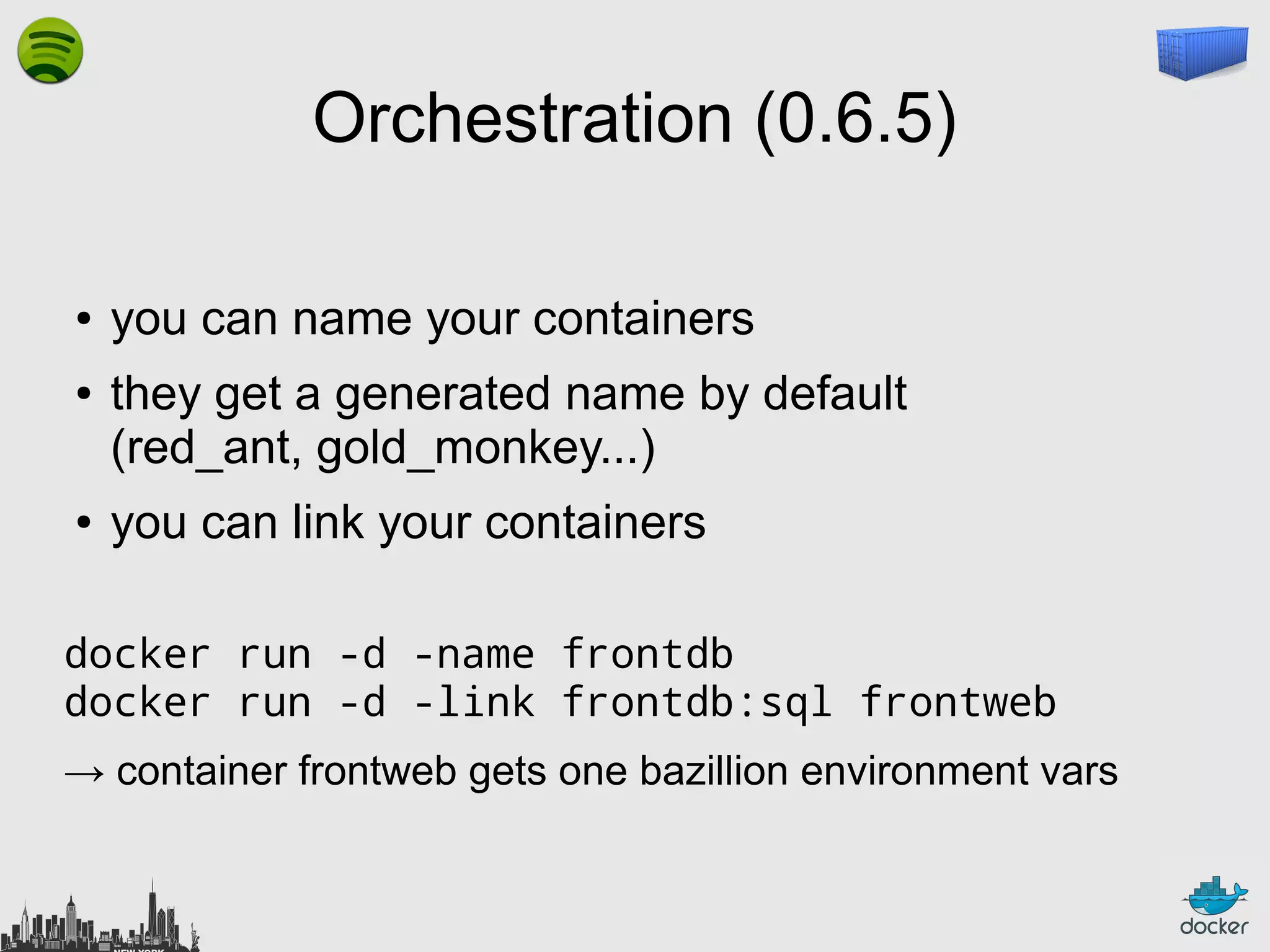

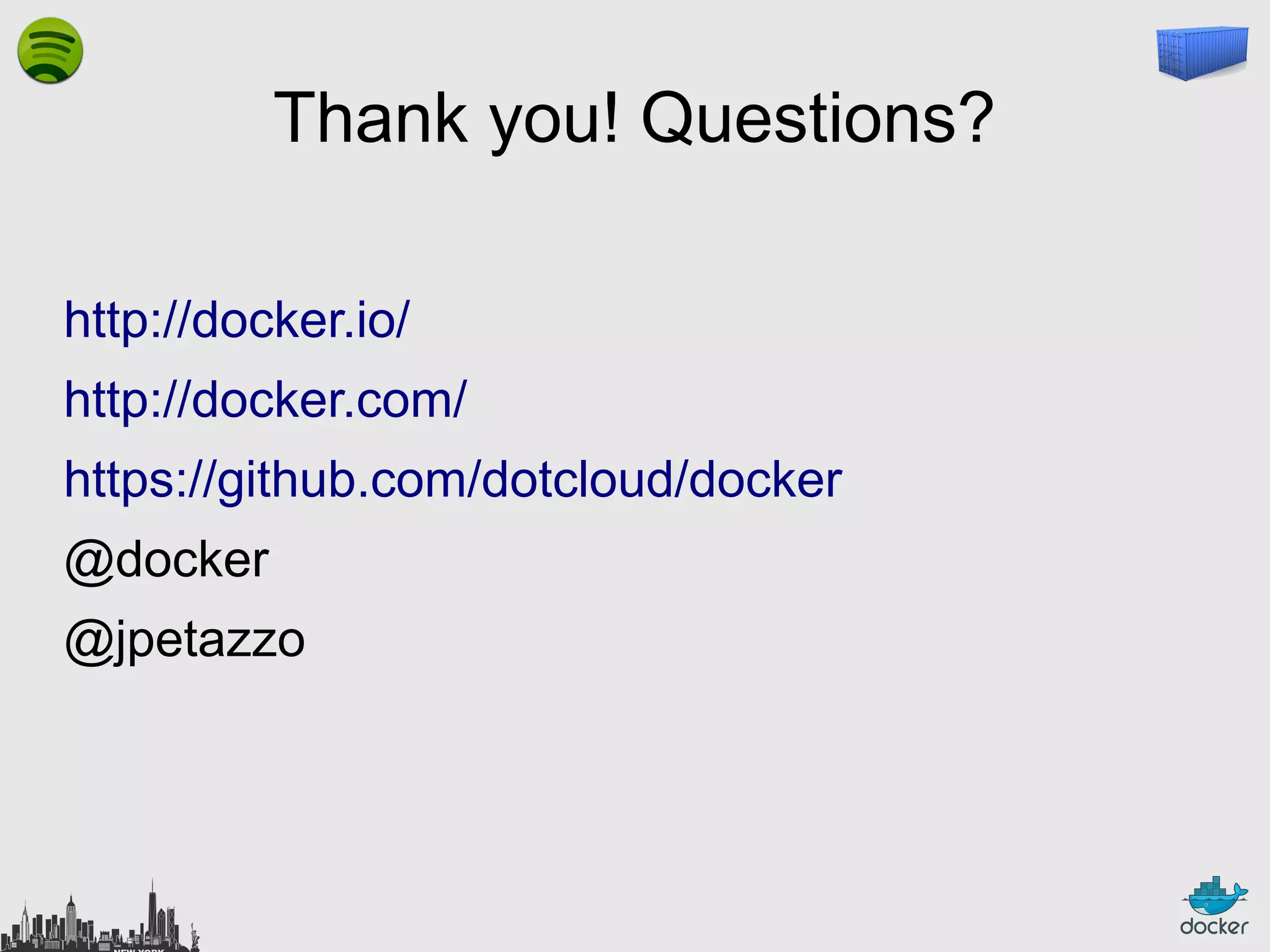

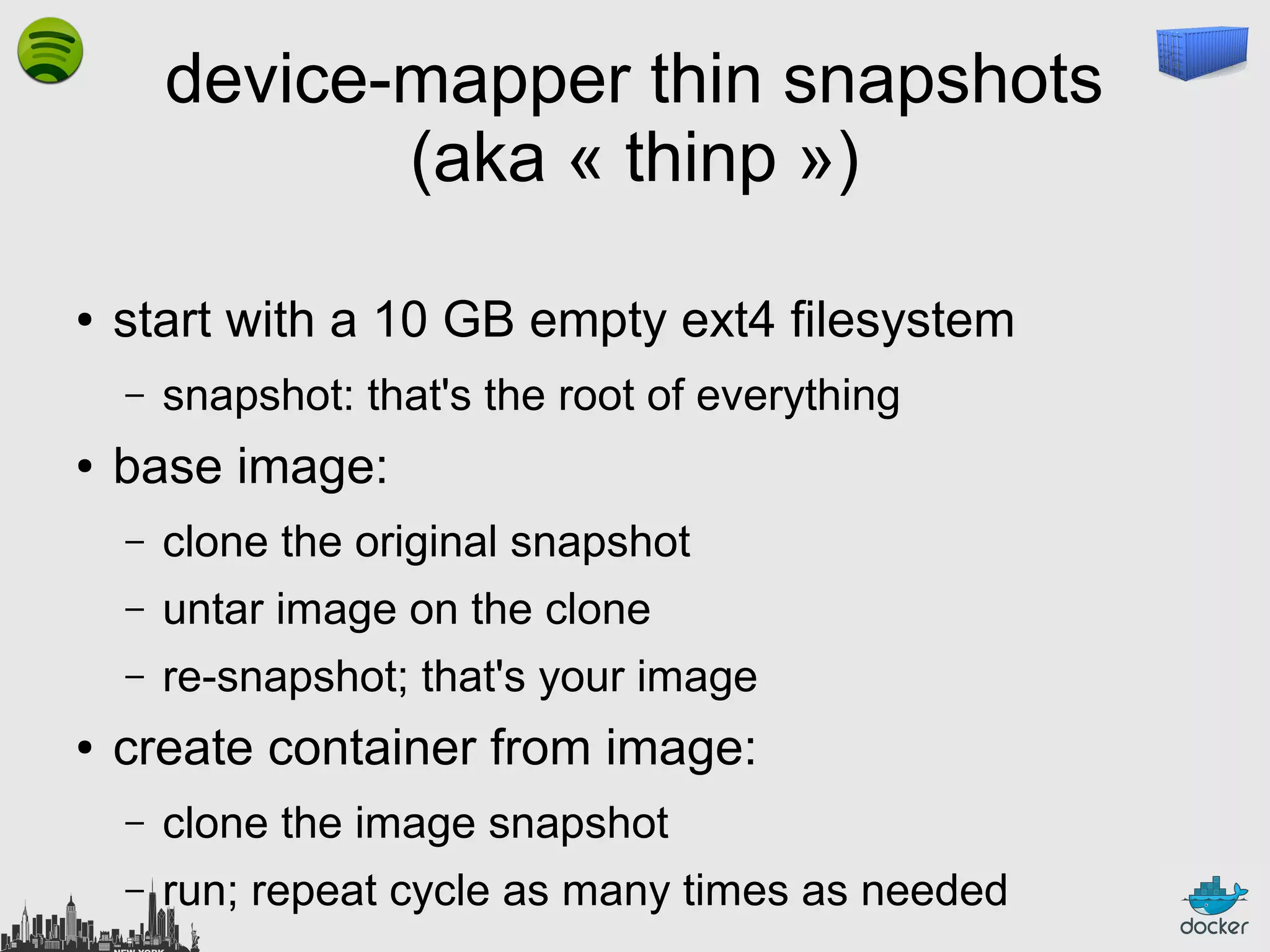

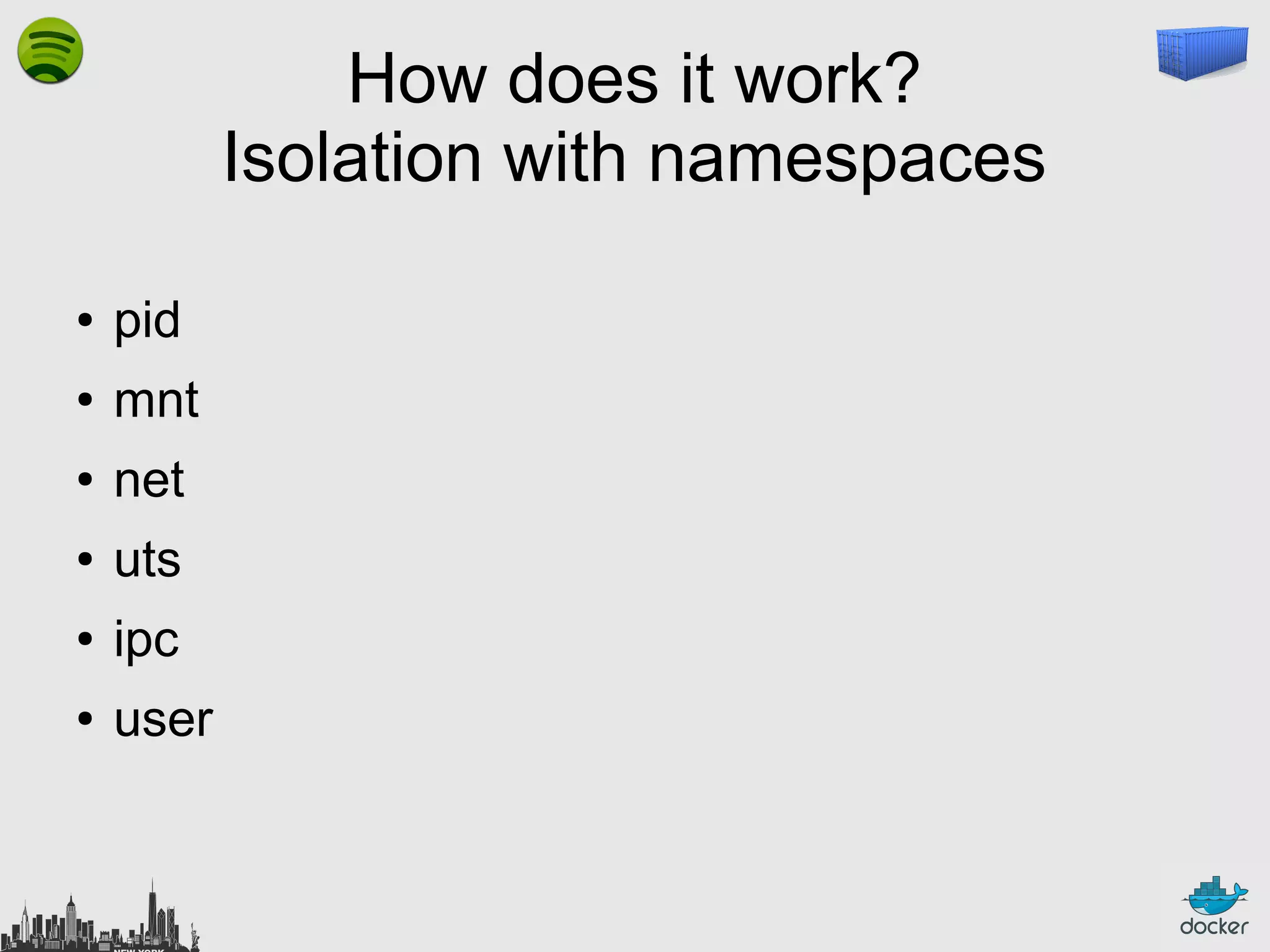

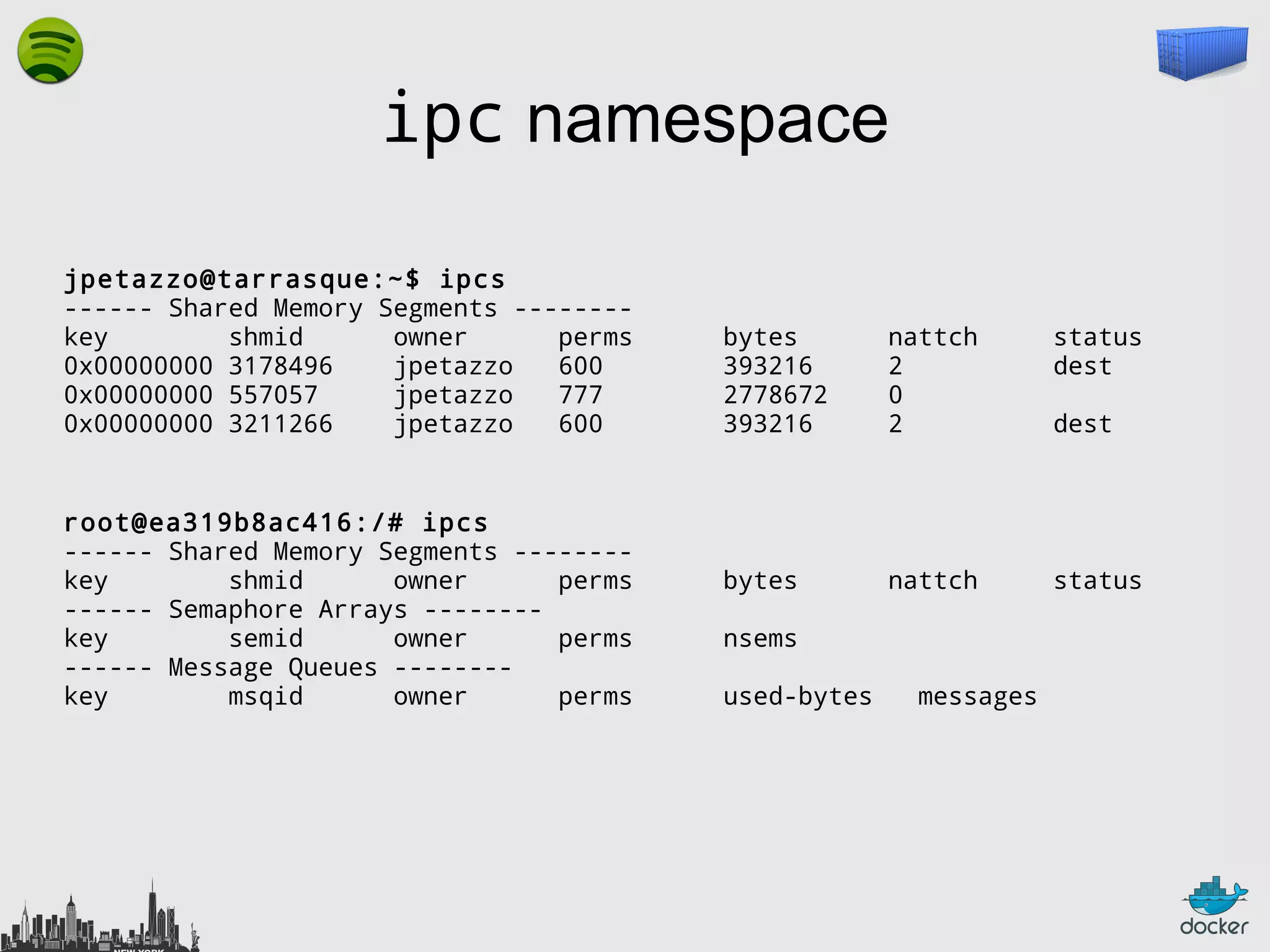

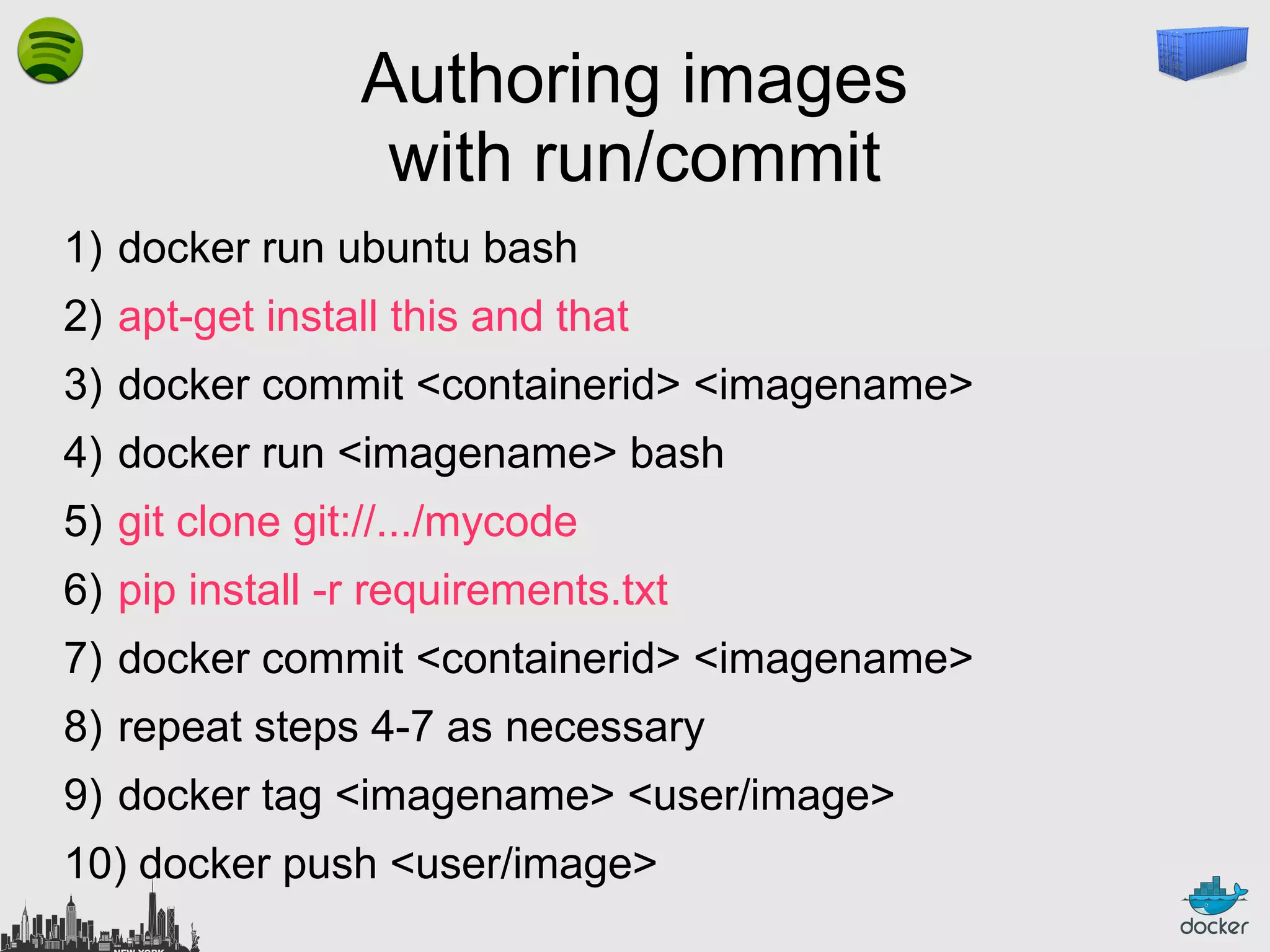

![Authoring images with a Dockerfile](https://image.slidesharecdn.com/spotify-2013-131104174343-phpapp01/75/Let-s-Containerize-New-York-with-Docker-52-2048.jpg)

    FROM ubuntu

    RUN apt-get -y update
    RUN apt-get install -y g++
    RUN apt-get install -y erlang-dev erlang-manpages erlang-base-hipe ...
    RUN apt-get install -y libmozjs185-dev libicu-dev libtool ...
    RUN apt-get install -y make wget
    # -O - sends the download to stdout so it can be piped into tar
    RUN wget -O - http://.../apache-couchdb-1.3.1.tar.gz | tar -C /tmp -zxf -
    RUN cd /tmp/apache-couchdb-* && ./configure && make install
    RUN printf "[httpd]\nport = 8101\nbind_address = 0.0.0.0" > /usr/local/etc/couchdb/local.d/docker.ini

    EXPOSE 8101
    CMD ["/usr/local/bin/couchdb"]

The slide then builds the image:

    docker build -t jpetazzo/couchdb .
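
The `printf` step in this Dockerfile writes a small CouchDB configuration file, and it can be tried on its own without Docker. The following is a minimal sketch, assuming a POSIX shell; the `conf_dir` variable and the temporary directory are hypothetical stand-ins for `/usr/local/etc/couchdb/local.d`, and a trailing newline is added that the slide's command does not have.

```shell
# Hypothetical stand-in for /usr/local/etc/couchdb/local.d inside the image.
conf_dir="$(mktemp -d)"

# Same content as the Dockerfile's RUN printf step, with a trailing newline added.
# printf expands each \n into a line break, giving a three-line ini file.
printf '[httpd]\nport = 8101\nbind_address = 0.0.0.0\n' > "$conf_dir/docker.ini"

# Show the generated file.
cat "$conf_dir/docker.ini"
```

The resulting file has an `[httpd]` section that sets the port to 8101, matching the `EXPOSE 8101` line, and binds to `0.0.0.0` so the server is reachable from outside the container.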