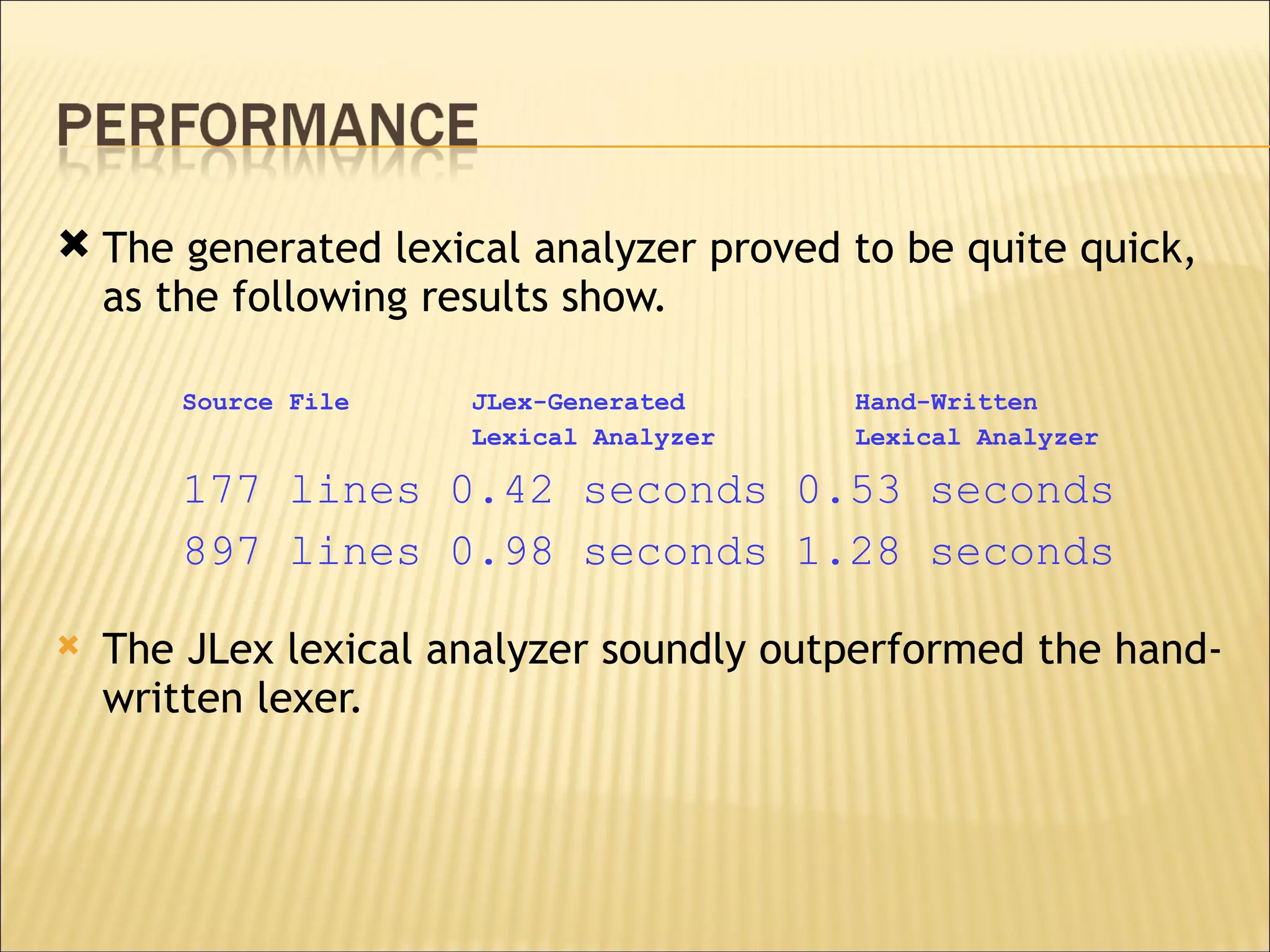

JLex is a lexical analyzer generator for Java: it takes a specification file as input and generates the Java source file for a lexical analyzer. The generated analyzer is described as faster than a comparable handwritten one. SAX and DOM are XML parser APIs built on event-based and tree-based models, respectively. SAX uses less memory because it does not keep the document in memory, while DOM loads the whole document as a tree that can be navigated and modified arbitrarily.
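The SAX/DOM contrast can be sketched with the parsers that ship in the JDK (`javax.xml.parsers`). This is a minimal illustration, not code from the slides: the class name `SaxVsDom`, the sample document, and both helper methods are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.SAXParserFactory;
import org.w3c.dom.Document;
import org.xml.sax.Attributes;
import org.xml.sax.helpers.DefaultHandler;

public class SaxVsDom {
    // Hypothetical sample document, used only for this illustration.
    static final String XML = "<tokens><token>if</token><token>x</token></tokens>";

    // SAX: the parser pushes events to a handler as it reads; no tree is built.
    static int countWithSax(String xml) throws Exception {
        final int[] count = {0};
        DefaultHandler handler = new DefaultHandler() {
            @Override
            public void startElement(String uri, String localName,
                                     String qName, Attributes attrs) {
                if ("token".equals(qName)) {
                    count[0]++;
                }
            }
        };
        SAXParserFactory.newInstance().newSAXParser().parse(
            new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)), handler);
        return count[0];
    }

    // DOM: the whole document is loaded as a tree, which can then be
    // navigated and modified before it is queried.
    static int countWithDomAfterAppend(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(
            new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        doc.getDocumentElement().appendChild(doc.createElement("token"));
        return doc.getElementsByTagName("token").getLength();
    }

    public static void main(String[] args) throws Exception {
        System.out.println(countWithSax(XML));            // prints 2
        System.out.println(countWithDomAfterAppend(XML)); // prints 3
    }
}
```

The SAX version can only react to elements as they stream past, whereas the DOM version can add a node to the tree and then query the modified document.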

![The third part of the JLex specification consists of a series of rules for breaking the input stream into tokens. These rules specify regular expressions, then associate these expressions with actions consisting of Java source code. The rules have three distinct parts: the optional state list, the regular expression, and the associated action. This format is represented as follows. [<states>] <expression> { <action> }](https://image.slidesharecdn.com/lexical-analysers-parsersintroucsc2009-1231438711448314-2/75/Lexical-Analyzers-and-Parsers-12-2048.jpg)
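The rule format `[<states>] <expression> { <action> }` can be imitated in plain Java to show how the three parts fit together. This is a rough sketch using `java.util.regex`, not JLex output and not JLex's API: the `Rule` class, the `tokenize` method, and the sample rules are hypothetical. The sketch assumes that a rule with no state list applies in every state, and it resolves conflicts by taking the longest match, with the earliest rule winning ties.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class RuleSketch {
    // One rule: optional state list, regular expression, associated action.
    static final class Rule {
        final List<String> states;             // empty = any state (assumption)
        final Pattern expression;
        final Function<String, String> action; // returns a token, or null to skip

        Rule(List<String> states, String regex, Function<String, String> action) {
            this.states = states;
            this.expression = Pattern.compile(regex);
            this.action = action;
        }
    }

    // Breaks the input into tokens while staying in a single, fixed state.
    static List<String> tokenize(String input, List<Rule> rules, String state) {
        List<String> tokens = new ArrayList<>();
        int pos = 0;
        while (pos < input.length()) {
            Rule best = null;
            int bestEnd = pos;
            for (Rule rule : rules) {
                if (!rule.states.isEmpty() && !rule.states.contains(state)) {
                    continue; // rule is not active in the current state
                }
                Matcher m = rule.expression.matcher(input).region(pos, input.length());
                // Strict '>' keeps the earliest rule on ties and ignores empty matches.
                if (m.lookingAt() && m.end() > bestEnd) {
                    best = rule;
                    bestEnd = m.end();
                }
            }
            if (best == null) {
                throw new IllegalArgumentException("no rule matches at offset " + pos);
            }
            String token = best.action.apply(input.substring(pos, bestEnd));
            if (token != null) {
                tokens.add(token);
            }
            pos = bestEnd;
        }
        return tokens;
    }

    // Hypothetical rule set: keyword, identifier, number, whitespace.
    static List<String> demo(String input) {
        List<String> any = Collections.emptyList();
        List<Rule> rules = Arrays.asList(
            new Rule(any, "if", text -> "IF"),
            new Rule(any, "[a-z]+", text -> "ID(" + text + ")"),
            new Rule(any, "[0-9]+", text -> "NUM(" + text + ")"),
            new Rule(any, "[ \\t\\n]+", text -> null));
        return tokenize(input, rules, "YYINITIAL");
    }

    public static void main(String[] args) {
        System.out.println(demo("if count 42")); // prints [IF, ID(count), NUM(42)]
    }
}
```

In a real JLex specification the actions are Java code embedded in the rule itself and may switch lexical states; the sketch keeps the state fixed to stay short.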