Lex and Yacc are compiler-writing tools that are used to specify lexical tokens and context-free grammars. Lex is used to specify lexical tokens and their processing order, while Yacc is used to specify context-free grammars for LALR(1) parsing. Both tools have a long history in computing and were originally developed in the early days of Unix on minicomputers. Lex reads a specification of lexical patterns and actions and generates a C program that implements a lexical analyzer. The generated C program defines a function called yylex() that scans the input and returns the recognized tokens.

![Tokens

• Tokens in Lex are declared like variable names

in C. Every token has an associated expression.

Token Associated expression Meaning

number ([0-9])+ 1 or more occurrences of a digit

chars [A-Za-z] Any character

blank " " A blank space

word (chars)+ 1 or more occurrences of chars

variable (chars)+(number)*(chars)*( number)*](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-13-320.jpg)

![Skeleton of a lex specification (.l file)

x.l

%{

< C global variables, prototypes,

comments >

%}

[DEFINITION SECTION]

%%

[RULES SECTION]

%%

< C auxiliary subroutines>

lex.yy.c is generated after

running

> lex x.l

LITERAL BLOCK

This part will be embedded into lex.yy.c

substitutions, internal table, character

translation code and start states; will

be copied into lex.yy.c

define how to scan and what action to

take for each token

any user code. For example, a main

function to call the scanning function

yylex().](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-23-320.jpg)

![Definitions Section(substitutions)

• Definitions intended for Lex are given before the first

%% delimiter. Any line in this section not contained

between %{ and %}, and beginning in column 1, is

assumed to define Lex substitution strings.

• The definitions section contains declarations of simple

name definitions to simplify the scanner specification.

• Name definitions have the form:

name definition or NAME expression

• Example:

DIGIT [0-9]

ID [a-z][a-z0-9]*](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-26-320.jpg)

![Example

Using {D} for the digits and {E} for an

exponent field, for example, might abbreviate

rules to recognize numbers:

D [0-9]

E [DEde][-+]?{D}+

%%

{D}+ printf("integer");

{D}+"."{D}*({E})? |

{D}*"."{D}+({E})? |

{D}+{E}](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-28-320.jpg)

![Example

[t ]+ /* ignore whitespace */ ;

If action is empty, the matched token is discarded.

tab space Pattern matches 1 or more

copies of subpattern

Semicolon, do nothing C statement.

its effect is to ignore the input.](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-37-320.jpg)

![Multiple statements in Action Part

%%

" " ;

[a-z] { c = yytext[0]; yylval = c - 'a'; return(LETTER);

}

[0-9]* { yylval = atoi(yytext); return(NUMBER); }

[^a-z0-9b] { c = yytext[0]; return(c); }

%%](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-42-320.jpg)

![Comments Satements

• Outside “%{“ and “%}”, comments must be indented

with whitespace for lex to recognize them correctly.

Example:

%%

[t ]+ /* ignore whitespace */ ;

%%

Main()

{

/* user code */

yylex(); /* to run the lexer. To translates the lex specification

into a C file */

}](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-45-320.jpg)

![Lex program structure

… definitions …

%%

… rules …

%%

… subroutines …

%{

#include <stdio.h>

#include "y.tab.h"

int c;

extern int yylval;

%}

%%

" " ;

[a-z] { c = yytext[0]; yylval = c - 'a'; return(LETTER); }

[0-9]* { yylval = atoi(yytext); return(NUMBER); }

[^a-z0-9b] { c = yytext[0]; return(c); }](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-48-320.jpg)

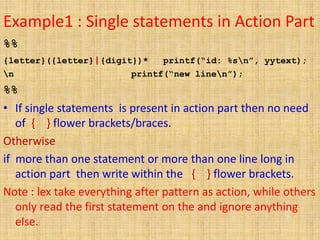

![Lex Source Program example1

• Lex source is a table of

– regular expressions and

– corresponding program fragments

digit [0-9]

letter [a-zA-Z]

%%

{letter}({letter}|{digit})* printf(“id: %sn”, yytext);

n printf(“new linen”);

%%

main()

{

yylex();

}](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-49-320.jpg)

![Operators

“ “ : quotation mark

: backward slash(escape character)

[ ] : square brackets (character class)

^ : caret (negation)

- : minus

? : question mark

. : period(dot)

* : asterisk(star)

+ : plus

| : vertical bar(pie)

( ) : parentheses

$ : dollar

/ : forward slash

{ } : flower braces(curly braces)

% : percentage

< > : angular brackets](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-57-320.jpg)

![Metacharacter Matches

. Matches any character except newline

Used to escape metacharacter

* Matches zero or more copies of the preceding expression

+ Matches one or more copies of the preceding expression

? Matches zero or one copy of the preceding expression

^ Matches beginning of line as first character / complement (negation)

$ Matches end of line as last character

| Matches either preceding

( ) grouping a series of RE(one or more copies)

[] Matches any character

{} Indicates how many times the pervious pattern allowed to match.

“ ” Interprets everything within it.

/ Matches preceding RE. only one slash is permitted.

- Used to denote range. Example: A-Z implies all characters from A to Z

Pattern Matching Primitives](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-58-320.jpg)

![Quotation Mark Operator “ “

• The quotation mark operator “…”indicates that

whatever is contained between a pair of quotes is to be

taken as text characters.

Ex: xyz"++“ or "xyz++"

• If they are to be used as text characters, an escape

should be used

Ex: xyz++ = "xyz++"

$ = “$”

= “”

• Every character but blank(b) , tab (t), newline (n),

is backspace and the list above is always a text character.

• Any blank character not contained within [] must be

quoted.](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-59-320.jpg)

![Character Classes []

• Classes of characters can be specified using the operator

pair [].

• It matches any single character, which is in the [ ].

• Every operator meaning is ignored except - and ^.

• The - character indicates ranges.

• If it is desired to include the character - in a character

class, it should be first or last.

• If the first character is a circumflex(“ ^”) it changes the

meaning to match any character except the ones within

the brackets.

• C escape sequences starting with “” are recognized.](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-60-320.jpg)

![examples

[ab] => a or b

[a-z] => a or b or c or … or z

[-+0-9] => all the digits and the two signs

[^a-zA-Z] => any character which is not a

letter

[a-z0-9<>_] all the lower case letters, the digits,

the angle brackets, and underline.

joke[rs] Matches either jokes or joker.](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-61-320.jpg)

![Arbitrary Character .

• To match almost any character, the operator

character . is the class of all characters

except newline

• [40-176] matches all printable

characters in the ASCII character set, from

octal 40 (blank) to octal 176 (tilde~)](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-62-320.jpg)

![Optional & Repeated Expressions

• The operator ? indicates an optional element of an

expression. Thus ab?c matches either ac or abc.

• Repetitions of classes are indicated by the operators * and +.

• a? => zero or one instance of a

• a* => zero or more instances of a

• a+ => one or more instances of a

• E.g.

ab?c => ac or abc

[a-z]+ => all strings of lower case letters

[a-zA-Z][a-zA-Z0-9]* => all alphanumeric strings

with a leading alphabetic character](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-63-320.jpg)

![Examples

• an integer: 12345

[1-9][0-9]*

• a word: cat

[a-zA-Z]+

• a (possibly) signed integer: 12345 or -12345

[“-”+]?[1-9][0-9]*

• a floating point number: 1.2345

[0-9]*”.”[0-9]+](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-64-320.jpg)

![Examples

• a delimiter for an English sentence

“.” | “?” | ! or [“.””?”!]

• C++ comment: // call foo() here!!

“//”.*

•white space

[ t]+

• English sentence: Look at this!

([ t]+|[a-zA-Z]+)+(“.”|”?”|!)](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-65-320.jpg)

![Context Sensitivity

• Lex will recognize a small amount of surrounding

context. The two simplest operators for this are ^

and $.

• If the first character of an expression is ^, the

expression will only be matched at the beginning of

a line (after a newline character, or at the beginning

of the input stream). This can never conflict with

the other meaning of ^, complementation of

character classes, since that only applies within the

[] operators.](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-67-320.jpg)

![PLLab, NTHU,Cs2403 Programming Languages 71

Pattern Matching Primitives

Meta

character

Example

. a.b, we.78

n [t n]

* [t n]*, a*. A.*z

+ [t n]+, a+, a.+r

? -?[0-9]+ , ab?c

^ [^t n], ^AD , ^(.*)n

$ end of line as last character

a|b a or b

(ab)+ one or more copies of ab (grouping)

[ab] a or b

a{3} 3 instances of a

“a+b” literal “a+b” (C escapes still work)

A{1,2}shis+ Matches AAshis, Ashis, AAshi, Ashi.

(A[b-e])+ Matches zero or one occurrences of A followed by any character from b to e.](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-71-320.jpg)

![Finally, initial % is special, being the separator

for Lex source segments

• [a-z]+ printf("%s", yytext);

will print the string in yytext. The C function printf

accepts a format argument and data to be printed; in

this case, the format is “print string'' (% indicating

data conversion, and %s indicating string type), and

the data are the characters in yytext. So this just

places the matched string on the output. This action is

so common that it may be written as ECHO:

• [a-z]+ ECHO;](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-72-320.jpg)

![Regular Expression (1/3)

x match the character 'x'

. any character (byte) except newline

[xyz] a "character class"; in this case, the pattern matches either

an 'x', a 'y', or a 'z‘

[abj-oZ] a "character class" with a range in it; matches an 'a', a 'b',

any letter from 'j' through 'o', or a 'Z‘

[^A-Z] a "negated character class", i.e., any character but those in

the class. In this case, any character EXCEPT an uppercase

letter.

[^A-Zn] any character EXCEPT an uppercase letter or a newline](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-74-320.jpg)

![Regular Expression (2/3)

r* zero or more r's, where r is any regular expression

r+ one or more r's

r? zero or one r's (that is, "an optional r")

r{2,5} anywhere from two to five r's

r{2,} two or more r's

r{4} exactly 4 r's

{name} the expansion of the "name" definition (see above)

"[xyz]"foo“ the literal string: [xyz]"foo

X if X is an 'a', 'b', 'f', 'n', 'r', 't', or 'v‘, then the ANSI-C interpretation of x.

Otherwise, a literal 'X' (used to escape operators such as '*')](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-75-320.jpg)

![Lex Predefined Variables

yytext

• Wherever a scanner matches TOKEN ,the text of the

token is stored in the null terminated string yytext.

• The contents of yytext are replaced each time a new

token is matched.

External char yytext[]; //array

External char *yytext; //pointer

To increases the size of buffer. Format in AT&T and MKS

%{

#undef YYLMAX /*remove default defintion*/

#define YYLMAX 500 /* new size */

%}](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-78-320.jpg)

![Continued..

• If yytext is an array, any token which is longer

than yytext will overflow the end of the array

and cause the lexer to fail.

• Yytext[] is 200 ,100character in different lex

tools.

• Flex have default I/O buffer is 16k,which can

handled token upto 8k.](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-79-320.jpg)

![yyleng

The length of the token is stored in it. It is similar

to strlen(yytext).

• Example :

[a-z]+ printf(“%s”, yytext);

[a-z]+ ECHO;

[a-zA-Z]+ {words++; chars += yyleng;}](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-80-320.jpg)

![Example

"[^"]* {

if (yytext[yyleng-1] == '')

yymore();

else

... normal user processing

}

which will, when faced with a string such as "abc" “def"

first match the five characters "abc”; then the call to

yymore() will cause the next part of the string, "def, to be

tacked on the end.

Note that the final quote terminating the string should be

picked up in the code labeled ``normal processing''.](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-82-320.jpg)

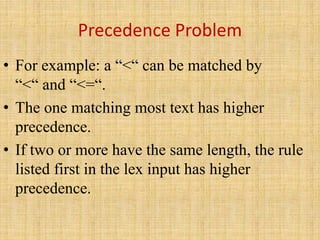

![Ambiguous Source Rules

Lex can handle ambiguous specifications. When more than one

expression can match the current input, Lex chooses as follows:

1) The longest match is preferred.

2) Among rules which matched the same number of characters,

the rule given first is preferred. Thus, suppose the rules

integer keyword action ...;

[a-z]+ identifier action ...;

to be given in that order. If the input is integers, it is taken as an

identifier, because [az]+ matches 8 characters while integer

matches only 7. If the input is integer, both rules match 7

characters, and the keyword rule is selected because it was given

first. Anything shorter (e.g. int) will not match the expression

integer and so the identifier interpretation is used](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-84-320.jpg)

![Example

%{

int counter = 0;

%}

letter [a-zA-Z]

%%

{letter}+ {printf(“a wordn”); counter++;}

%%

main()

{

yylex();

printf(“There are total %d wordsn”, counter);

}](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-88-320.jpg)

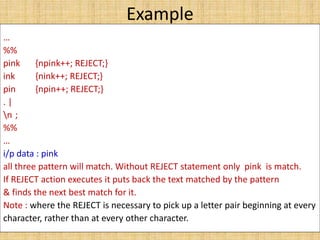

![Examples

she {s++; REJECT;}

he {h++; REJECT;}

n |

. ;

a[bc]+ { ... ; REJECT;}

a[cd]+ { ... ; REJECT;}

If the input is ab, only the first

rule matches, and on ad only the

second matches.

The input string accb matches

the first rule for four characters

and then the second rule for

three characters.

In contrast, the input accd agrees

with the second rule for four

characters and then the first rule

for three.](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-92-320.jpg)

![Lex Predefined Variables

• yytext -- a string containing the lexeme

• yyleng -- the length of the lexeme

• yyin -- the input stream pointer

– the default input of default main() is stdin

• yyout -- the output stream pointer

– the default output of default main() is stdout.

• cs20: %./a.out < inputfile > outfile

• E.g.

[a-z]+ printf(“%s”, yytext);

[a-z]+ ECHO;

[a-zA-Z]+ {words++; chars += yyleng;}](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-94-320.jpg)

![Token Values

• Each symbol in a yacc parser can have an associated

value. (See "Symbol Values.")

• Since tokens can have values, you need to set the

values as the lexer returns tokens to the parser.

• The token value is always stored in the variable

yylval.

• Example :

[0-9]+ { yylval = atoi (yytext ) ; return NUMBER; }](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-135-320.jpg)

![Rule Reduction and Action

stat: expr {printf("%dn",$1);}

| LETTER '=' expr {regs[$1] = $3;} ;

expr:

expr '+' expr {$$ = $1 + $3;} |

LETTER {$$ = regs[$1];} ;

Grammar rule Action

“or” operator:

For multiple RHS](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-157-320.jpg)

![Yacc Program Structure

% {

# i n c l u d e < s t d i o . h >

i n t r e g s [ 2 6 ] ;

i n t b a s e ;

% }

% t o k e n n u m b e r l e t t e r

% l e f t ' + ' ' - ‘

% l e f t ' * ' ' / ‘

% %

l i s t : | l i s t s t a t ' n ' | l i s t e r r o r ' n ' { y y e r r o k ; } ;

s t a t : e x p r { p r i n t f ( " % d n " , $ 1 ) ; }

| L E T T E R ' = ' e x p r { r e g s [ $ 1 ] = $ 3 ; } ;

e x p r :

' ( ' e x p r ' ) ' { $ $ = $ 2 ; } |

e x p r ' + ' e x p r { $ $ = $ 1 + $ 3 ; } |

L E T T E R { $ $ = r e g s [ $ 1 ] ; }

%%

m a i n ( ) { r e t u r n ( y y p a r s e ( ) ) ; }

y y e r r o r ( C H A R * s ) { f p r i n t f ( s t d e r r, " % s n " , s ) ; }

y y w r a p ( ) { r e t u r n ( 1 ) ; }

… definitions …

%%

… rules …

%%

… subroutines …](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-170-320.jpg)

![Works with Lex

YACC

yyparse()

Input programs

12 + 26

LEX

yylex()

call yylex()

[0-9]+

next token is NUM

NUM ‘+’ NUM](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-180-320.jpg)

![Communication between LEX and YACC

YACC

yyparse()

Input programs

12 + 26

LEX

yylex()

call yylex()

[0-9]+

next token is NUM

NUM ‘+’ NUM

LEX and YACCtoken](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-181-320.jpg)

![Communication between LEX and YACC

%{

#include <stdio.h>

#include "y.tab.h"

%}

id [_a-zA-Z][_a-zA-Z0-9]*

%%

int { return INT; }

char { return CHAR; }

float { return FLOAT; }

{id} { return ID;}

%{

#include <stdio.h>

#include <stdlib.h>

%}

%token CHAR, FLOAT, ID, INT

%%

yacc -d xxx.y

Produced

y.tab.h:

# define CHAR 258

# define FLOAT 259

# define ID 260

# define INT 261

parser.y

scanner.l](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-183-320.jpg)

![Scanner

%{

#include "y.tab.h"

#include "parser.h"

#include <math.h>

%}

%%

([0-9]+|([0-9]*.[0-9]+)([eE][-+]?[0-9]+)?) {

yylval.dval = atof(yytext);

return NUMBER;

}

[ t] ; /* ignore white space */

scanner.l](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-192-320.jpg)

![Scanner (cont’d)

[A-Za-z][A-Za-z0-9]* { /* return symbol pointer */

yylval.symp = symlook(yytext);

return NAME;

}

"$" { return 0; /* end of input */ }

n|”=“|”+”|”-”|”*”|”/” return yytext[0];

%%

scanner.l](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-193-320.jpg)

![Variables and Typed Tokens

% {

double vbltable [26 ];

%}

%union {

double dval;

int vblno ;

}

%type <dval> expression](https://image.slidesharecdn.com/module4lexandyacc-230809134834-add5ecf0/85/Module4-lex-and-yacc-ppt-222-320.jpg)