The document discusses lexical analysis as the first phase of a compiler, outlining the role of the lexical analyzer in reading source code, grouping characters into lexemes, and generating tokens. It also emphasizes the separation of lexical analysis from parsing for simplicity, efficiency, and portability, and covers the structures and definitions related to tokens, patterns, and lexemes. Additionally, it explores error handling, token recognition methods, and the use of regular expressions for specifying token patterns.

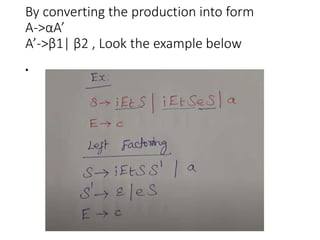

![Extensions

• One or more instances: (r)+

• Zero of one instances: r?

• Character classes: [abc]

• Example:

• letter_ -> [A-Za-z_]

• digit -> [0-9]

• id -> letter_(letter|digit)*](https://image.slidesharecdn.com/atc3rdmodule-240618050228-8b4a7860/85/atc-3rd-module-compiler-and-automata-ppt-18-320.jpg)

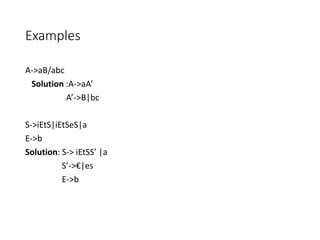

![Recognition of tokens (cont.)

• The next step is to formalize the patterns:

digit -> [0-9]

Digits -> digit+

number -> digit(.digits)? (E[+-]? Digit)?

letter -> [A-Za-z_]

id -> letter (letter|digit)*

If -> if

Then -> then

Else -> else

Relop -> < | > | <= | >= | = | <>

• We also need to handle whitespaces:

ws -> (blank | tab | newline)+](https://image.slidesharecdn.com/atc3rdmodule-240618050228-8b4a7860/85/atc-3rd-module-compiler-and-automata-ppt-20-320.jpg)