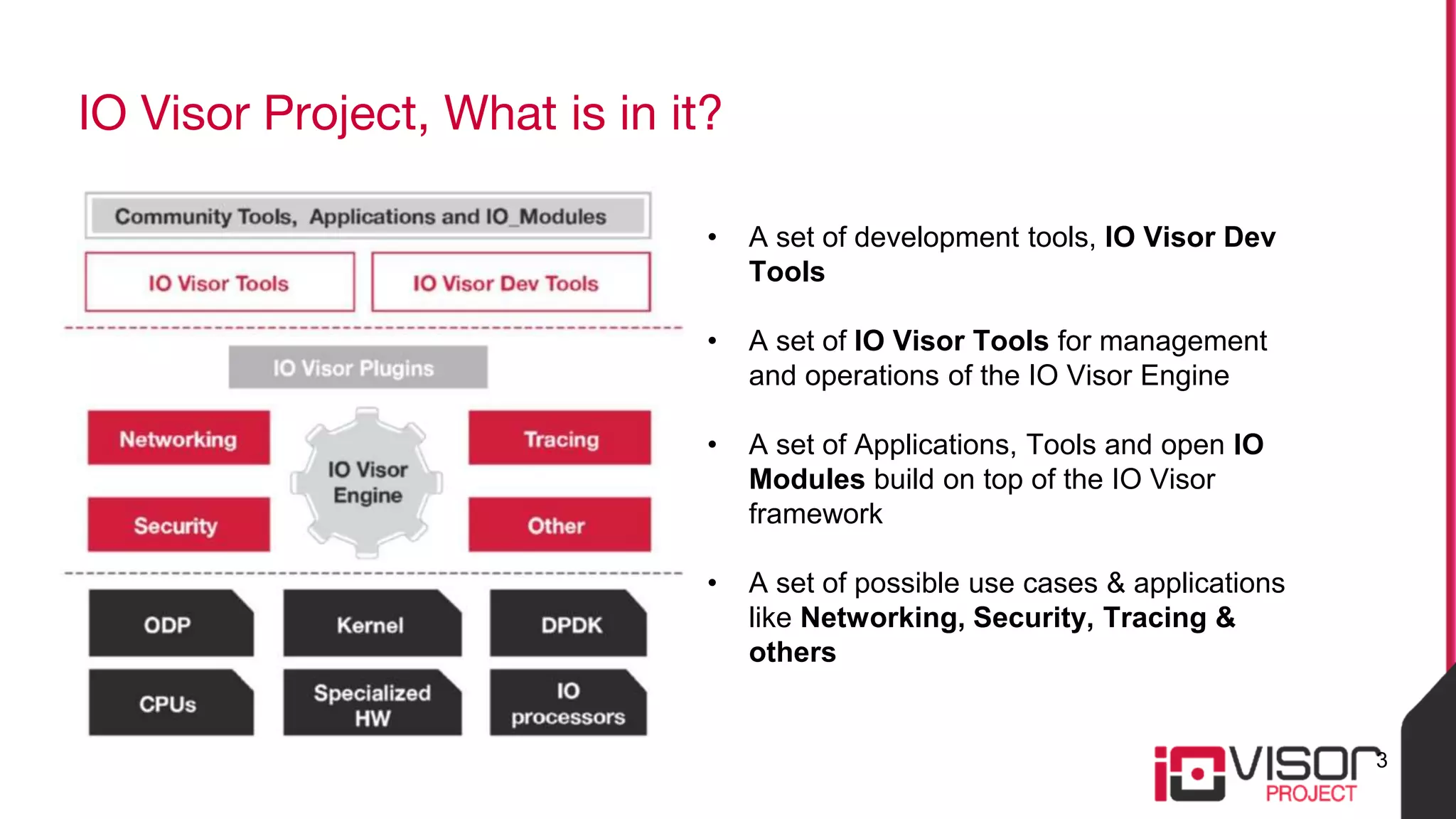

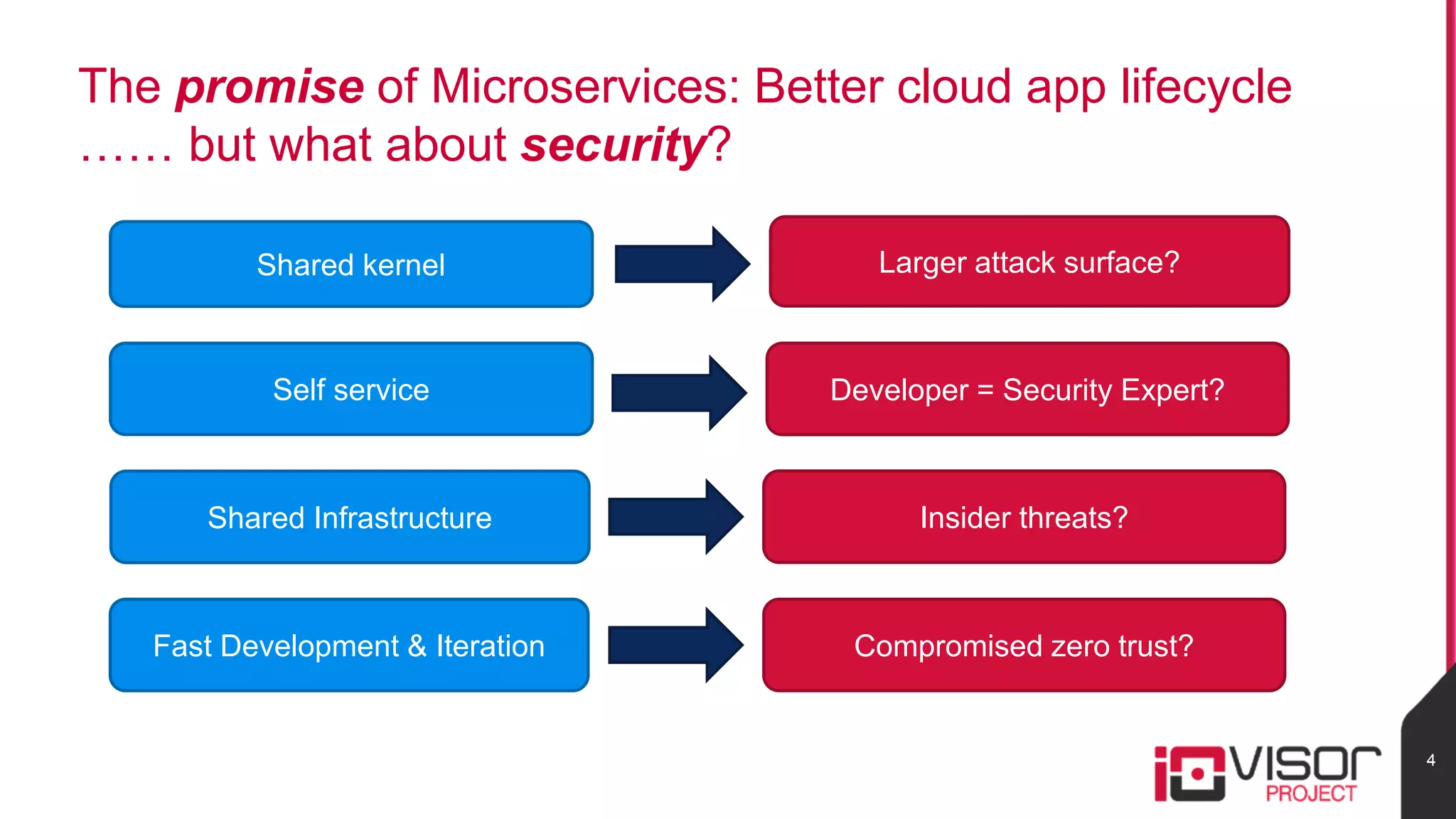

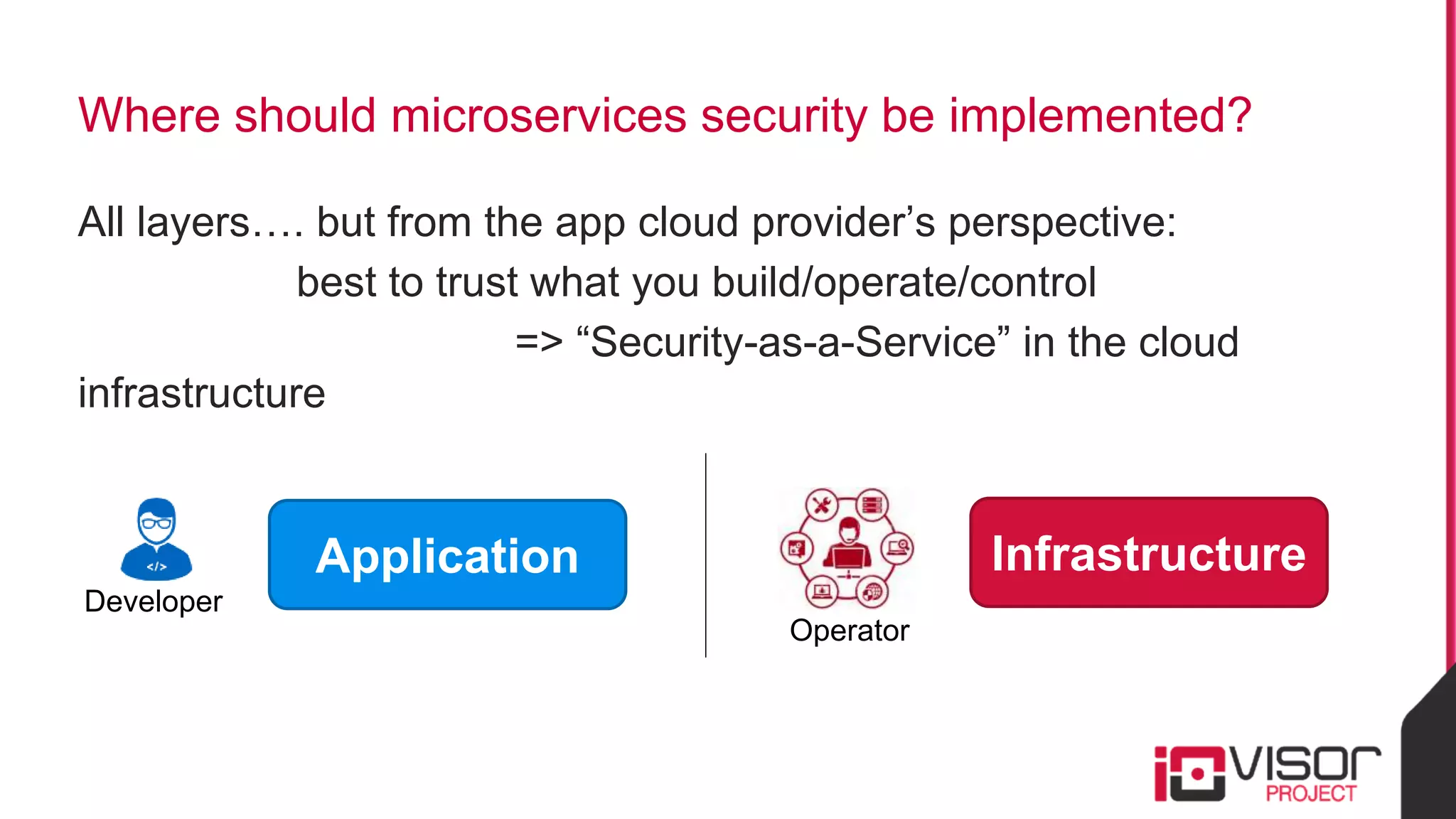

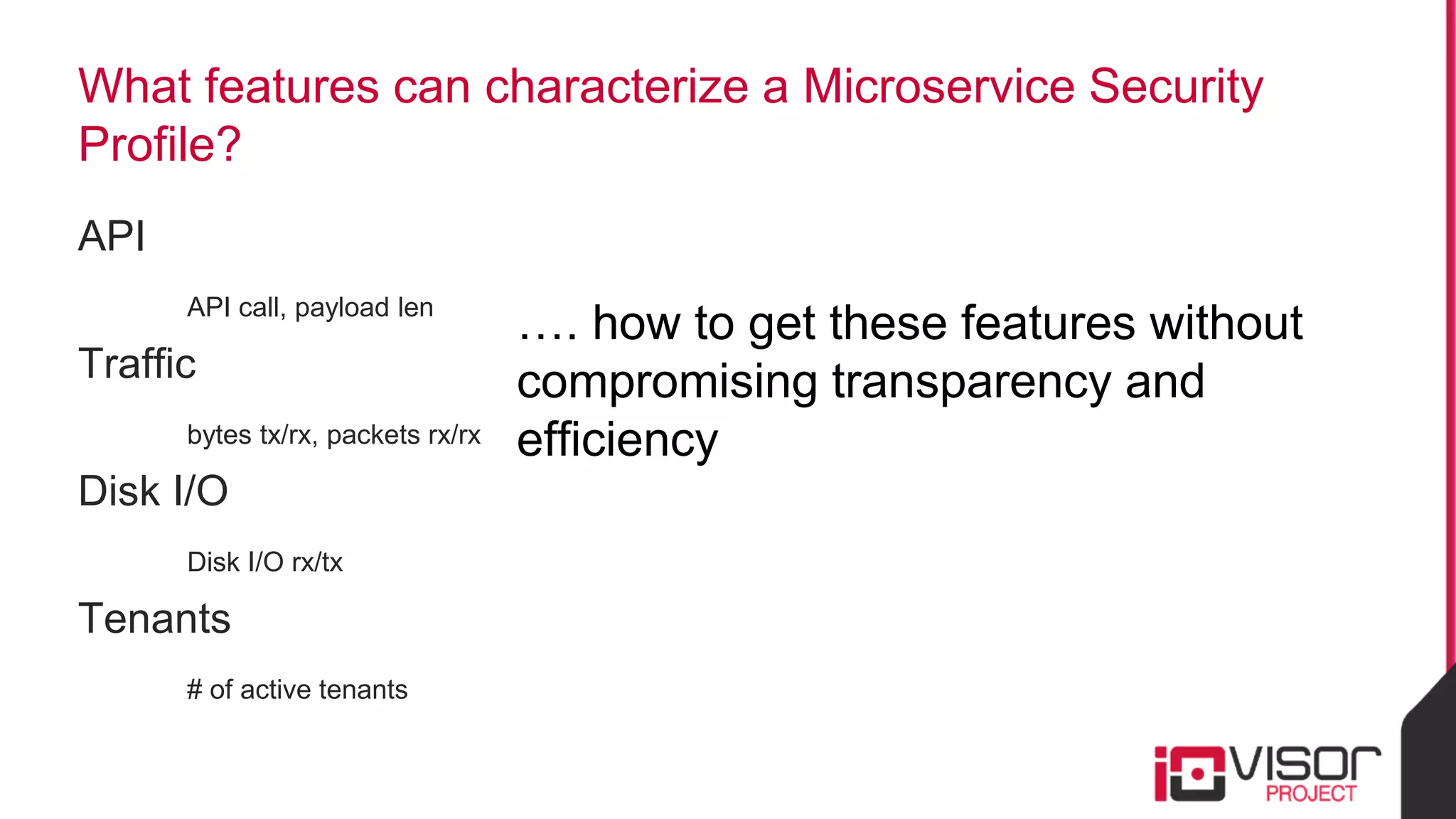

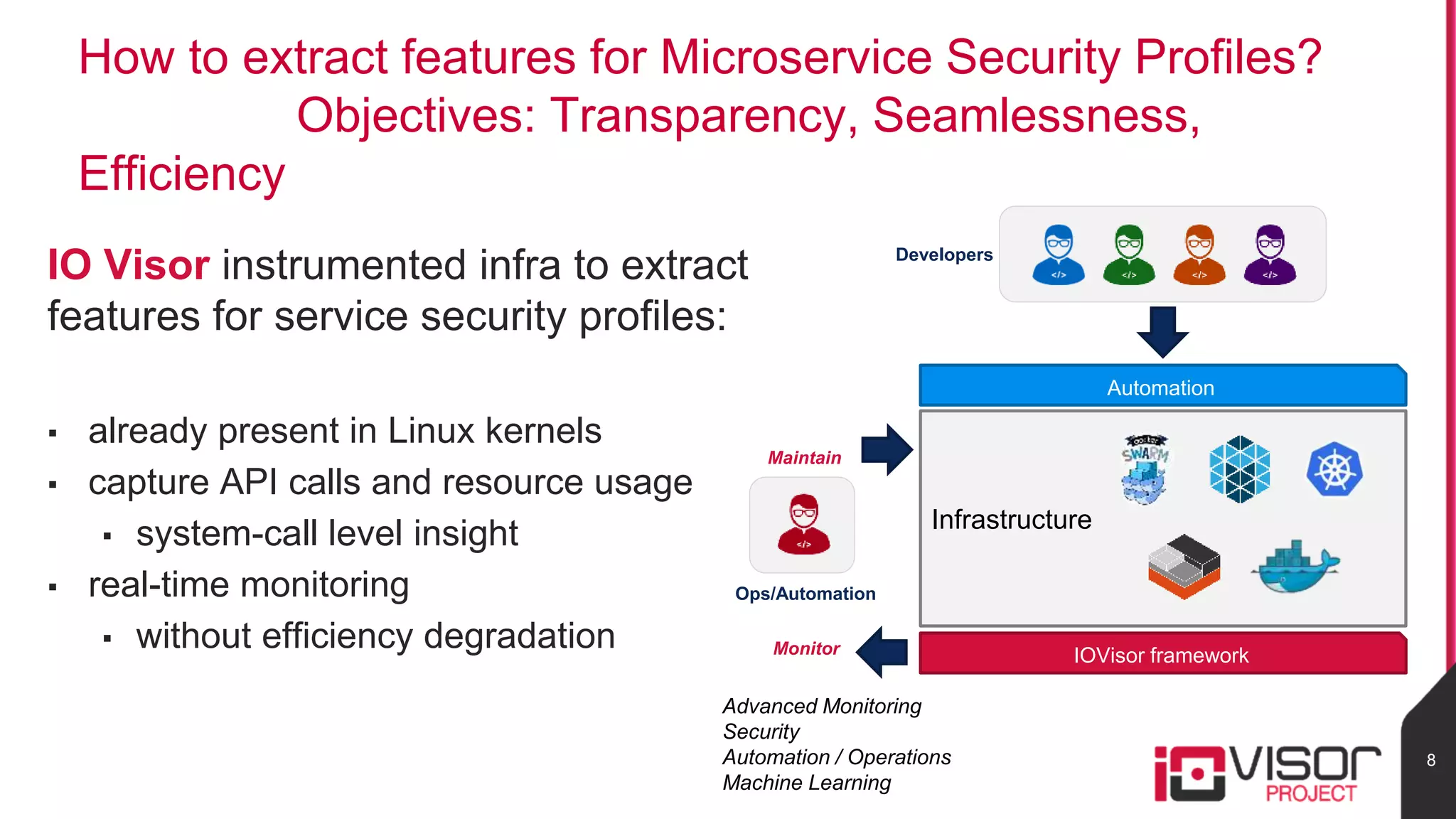

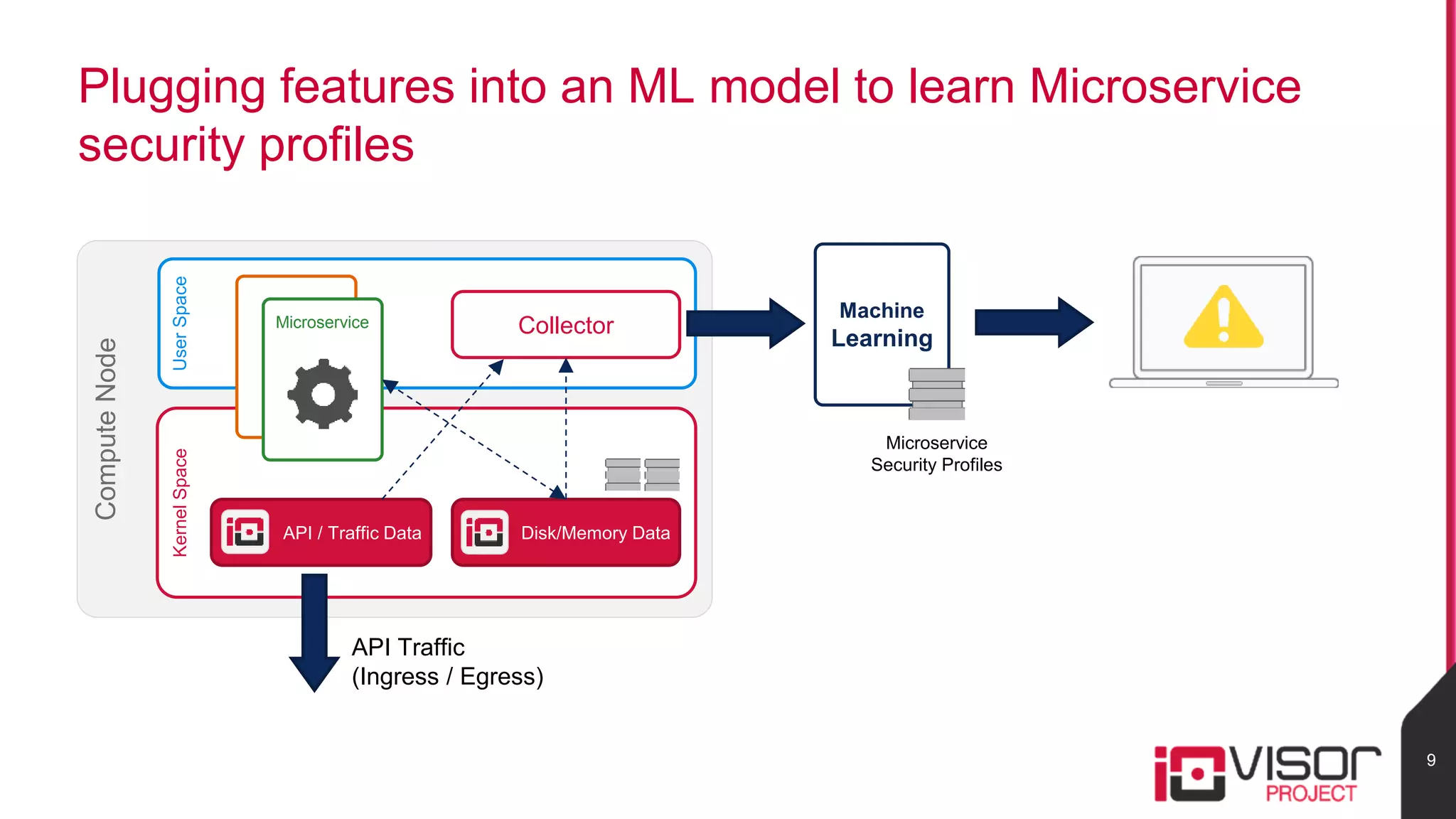

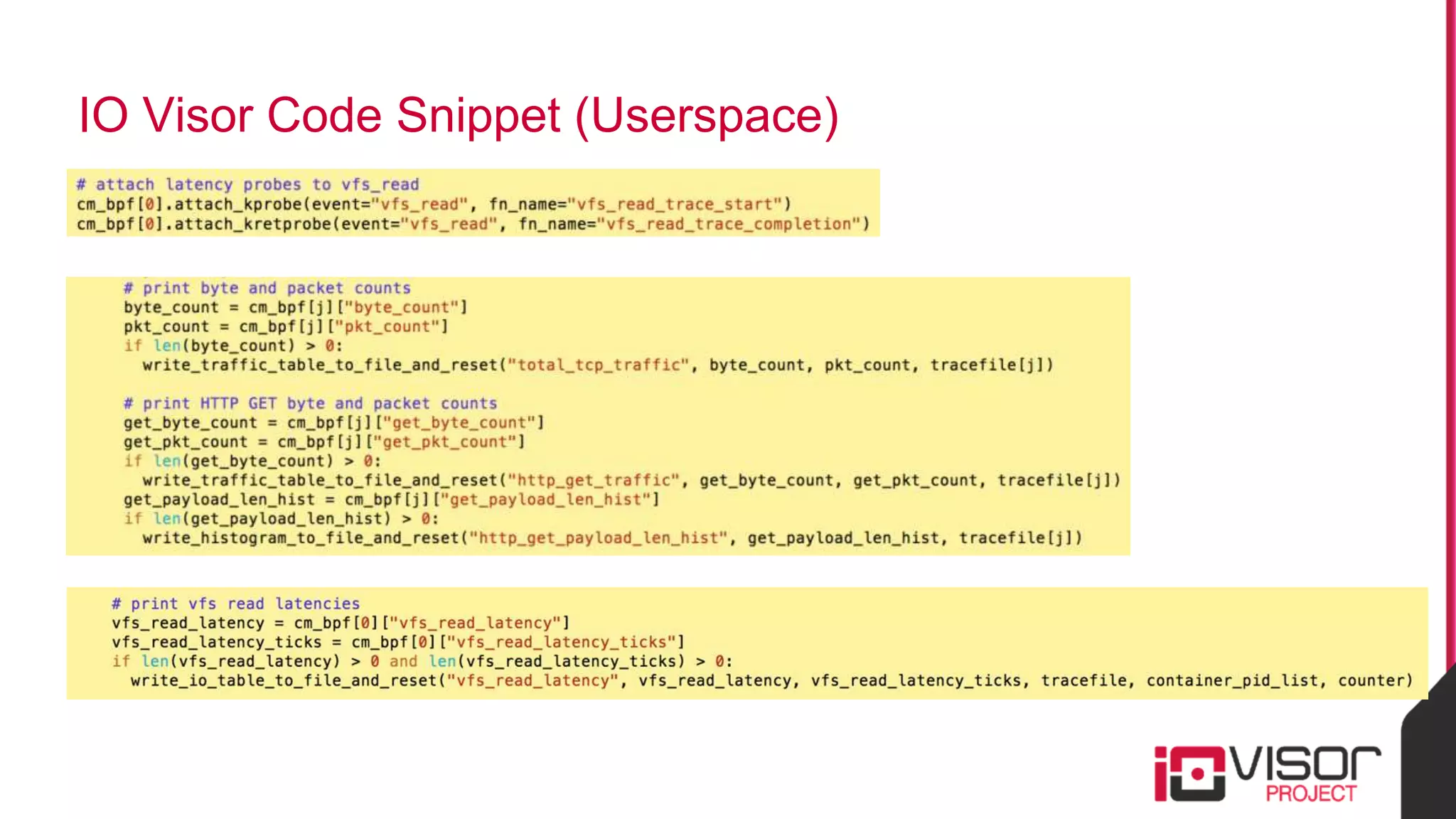

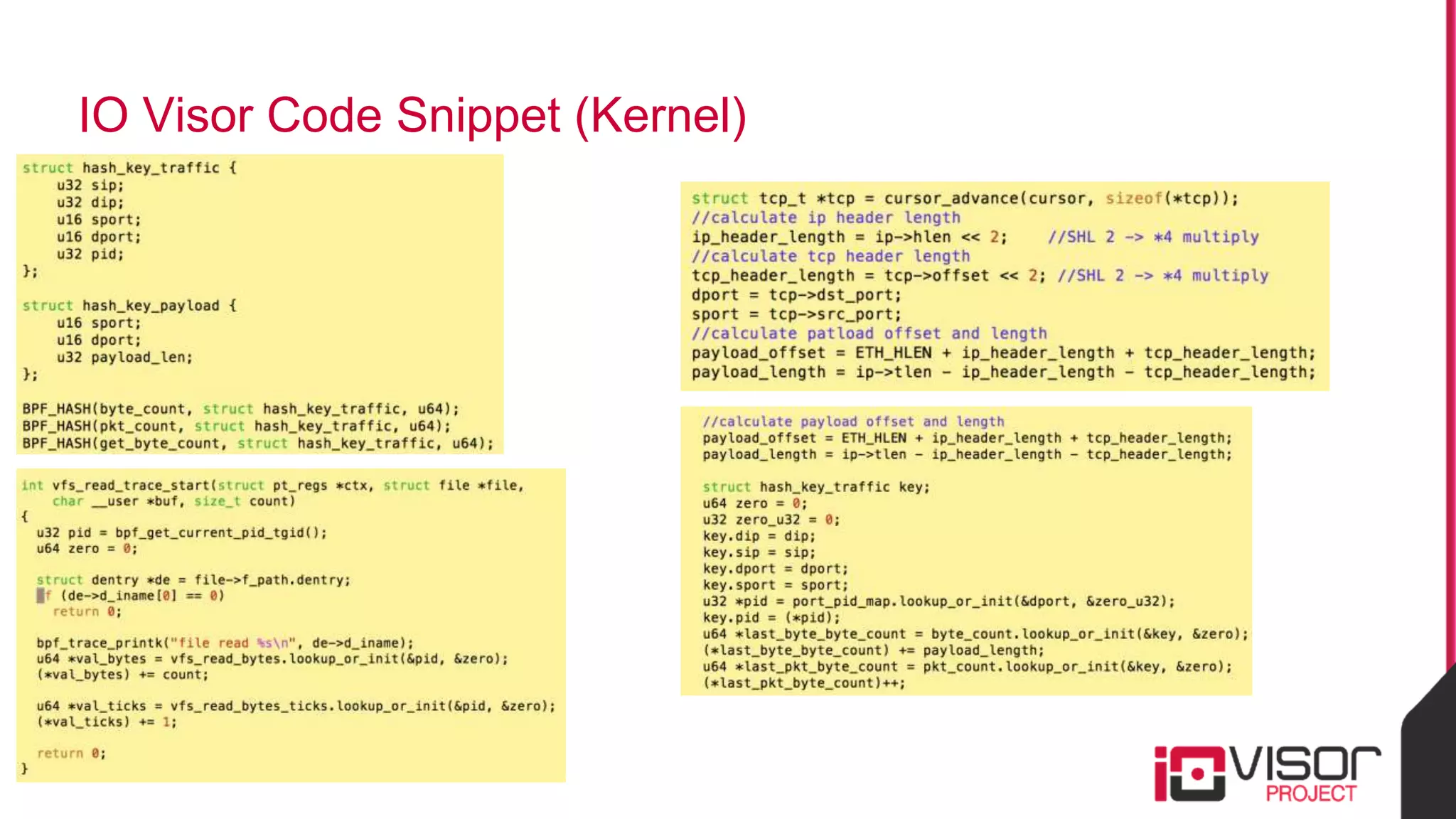

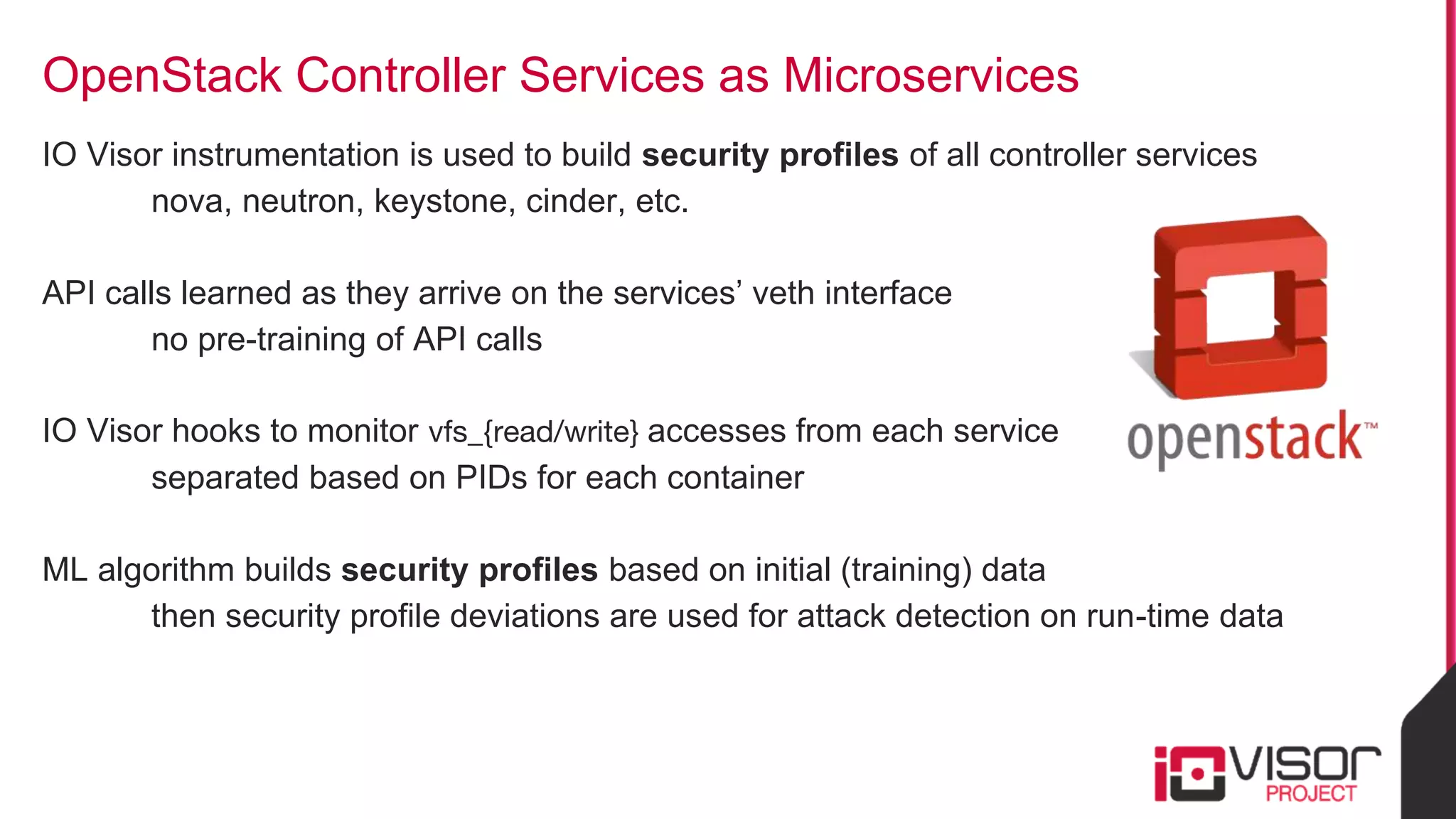

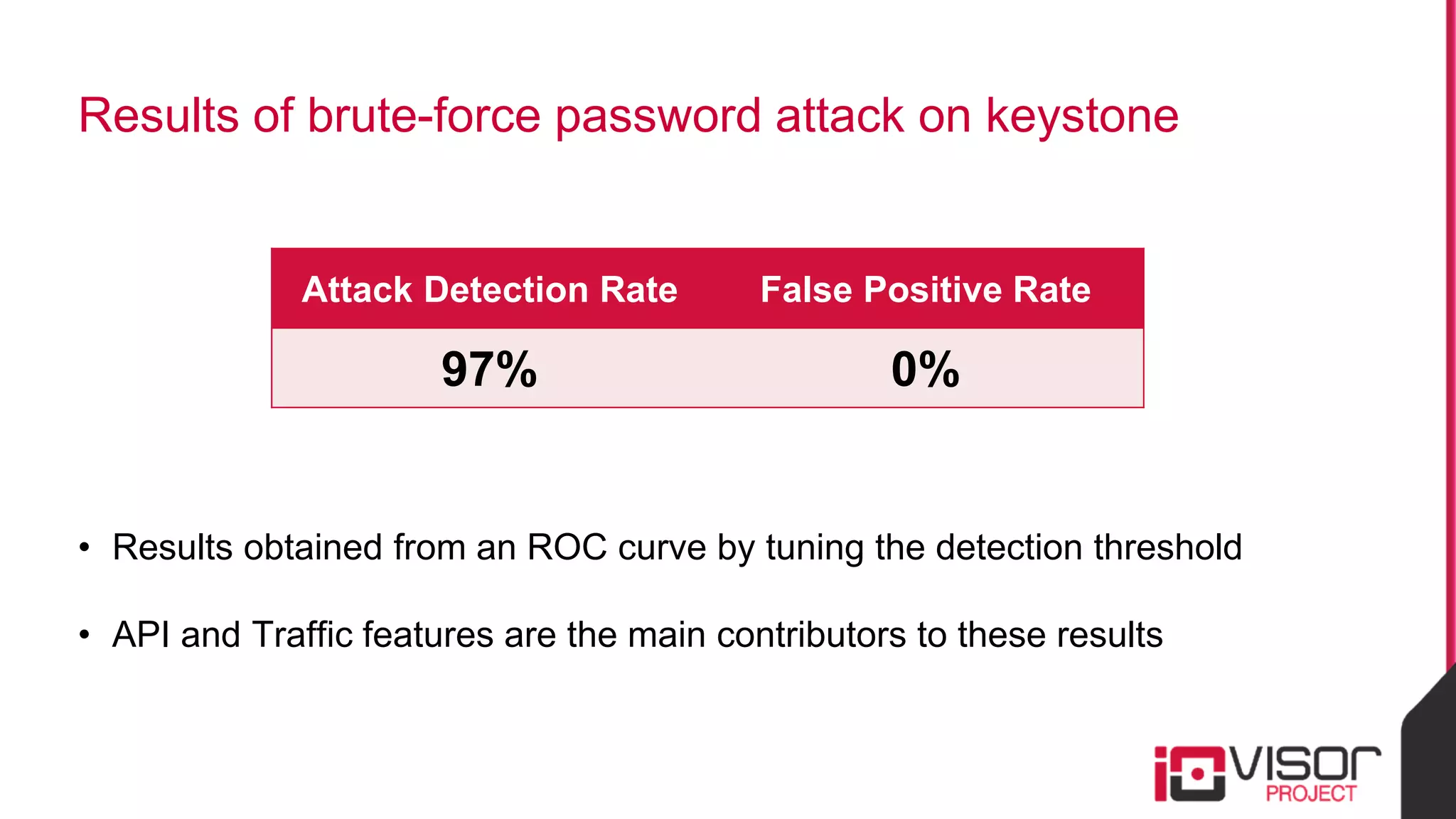

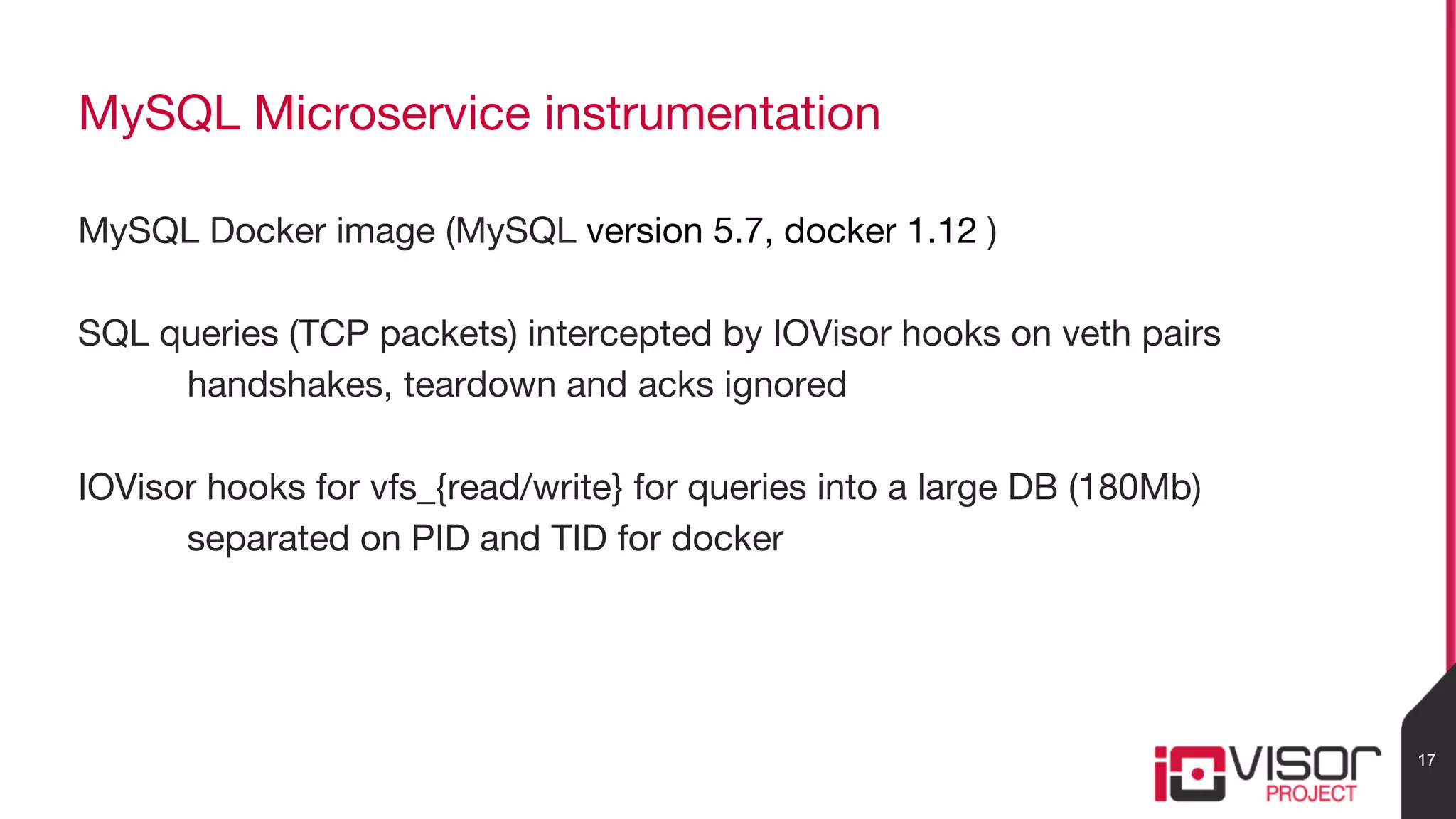

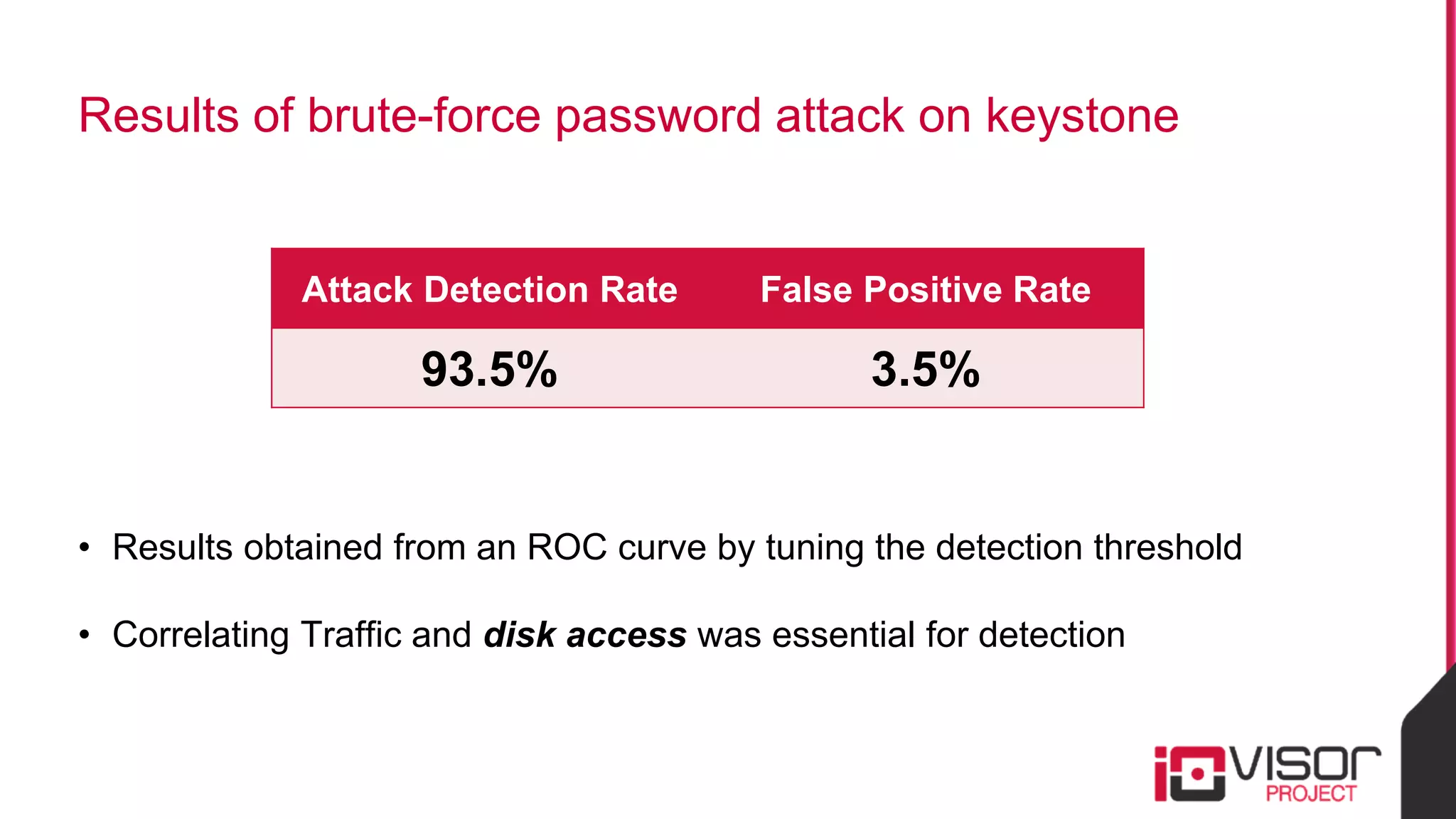

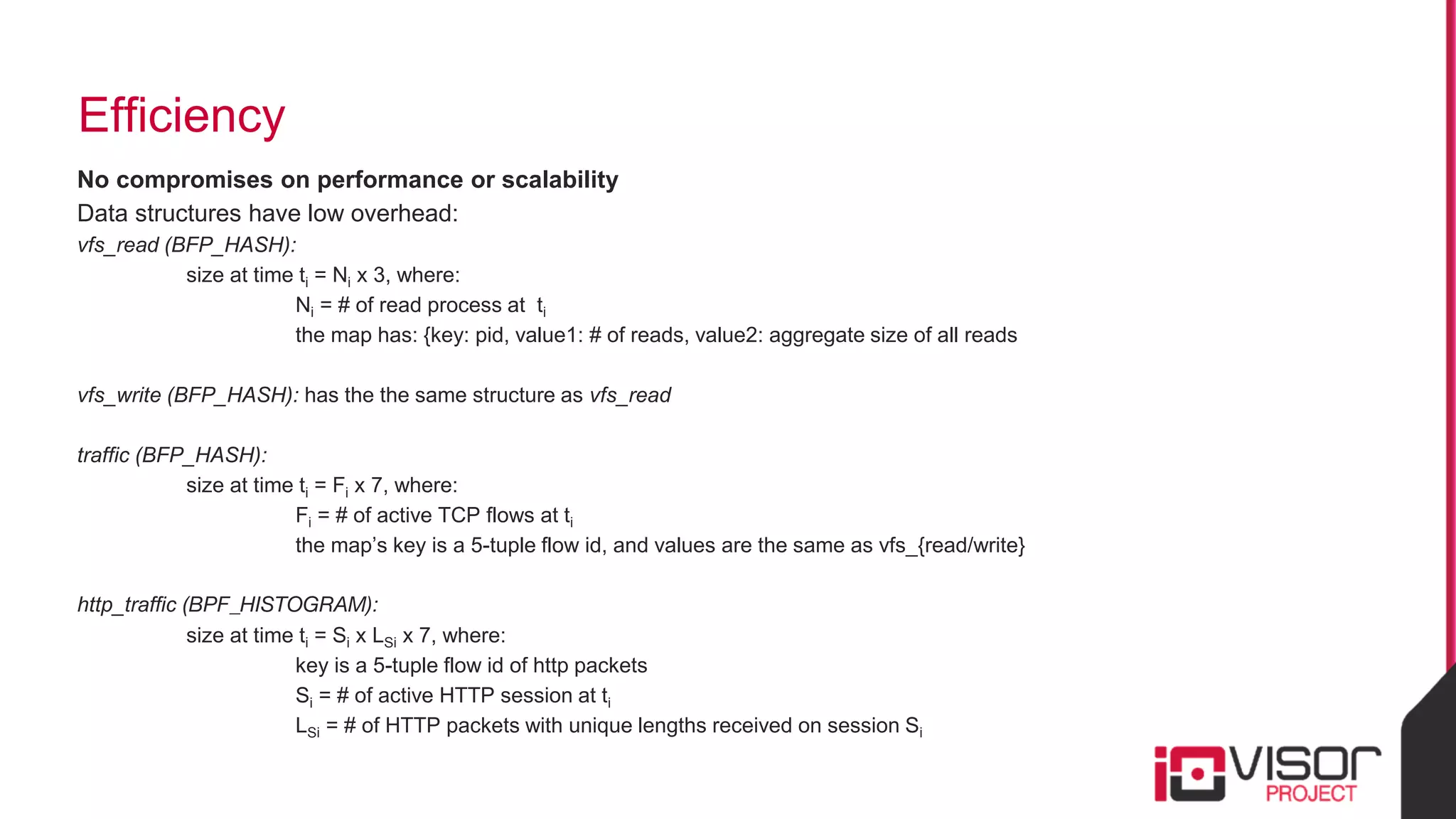

This document discusses eBPF-based in-kernel analytics and tracing in OpenStack clouds, focusing on improving microservice security with the IO Visor framework. It outlines how security profiles are extracted from microservices to enable real-time monitoring and efficient attack detection without impacting performance. The evaluation results show that threats such as brute-force attacks and SQL injection are detected with high accuracy.