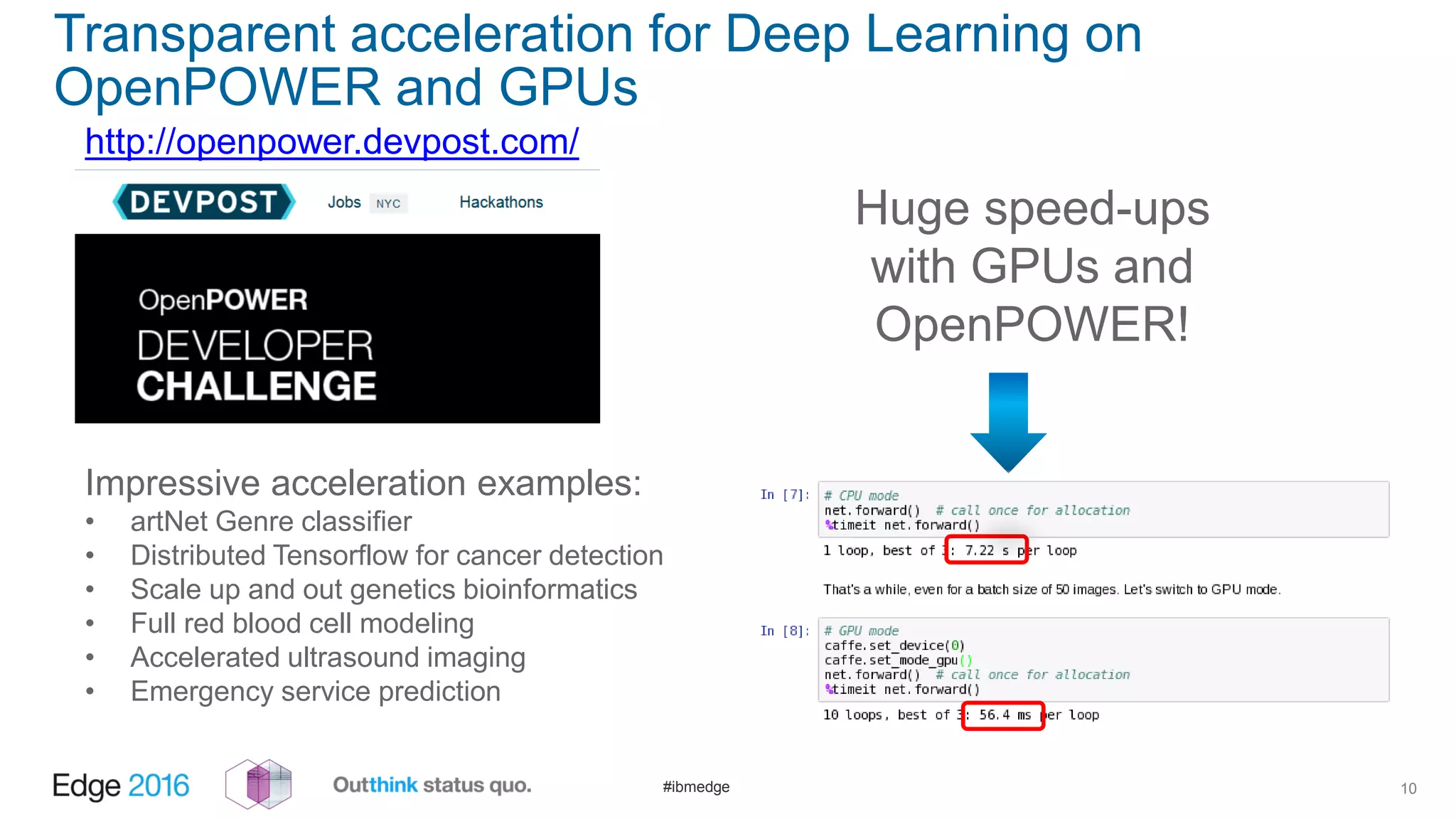

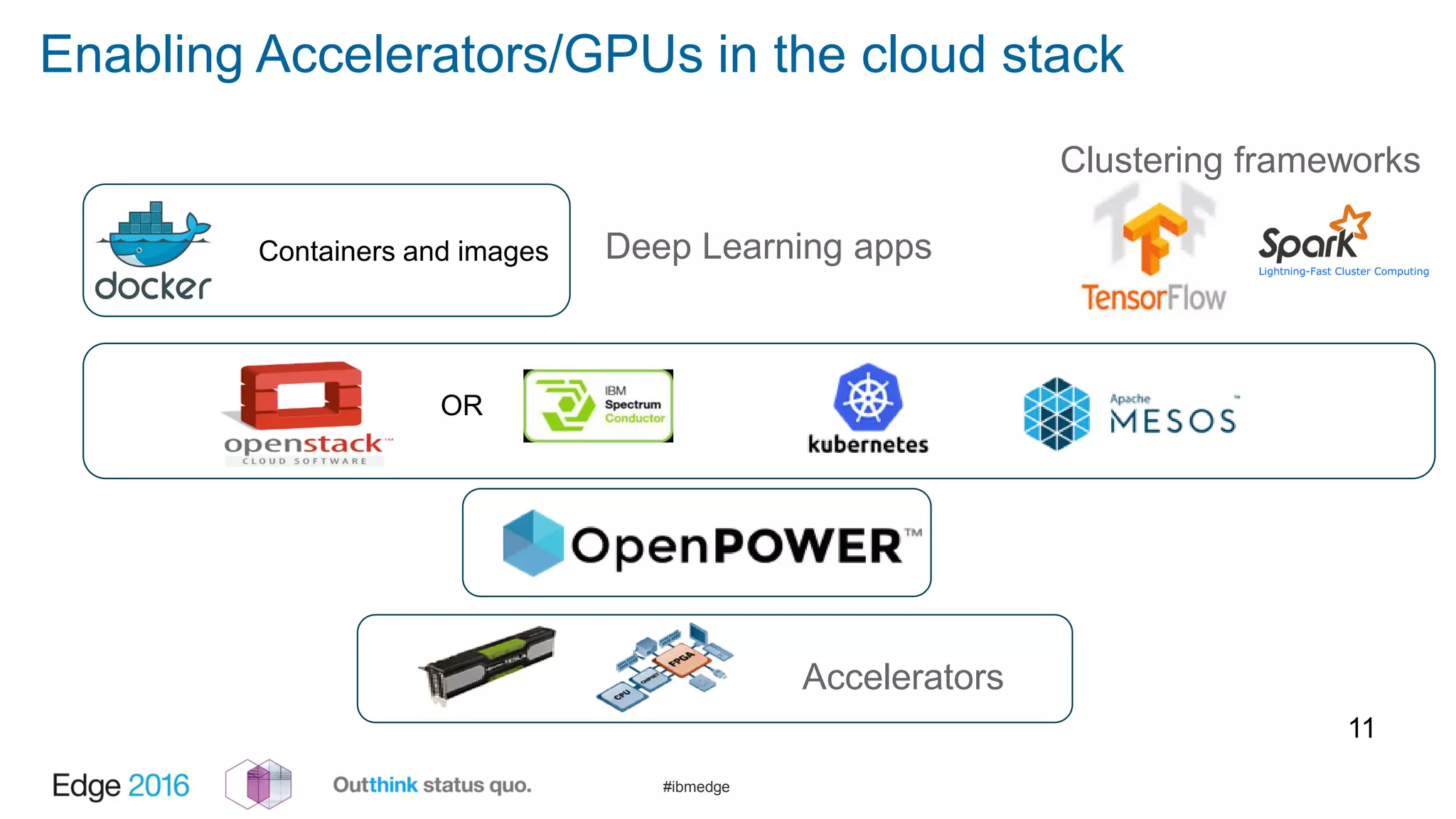

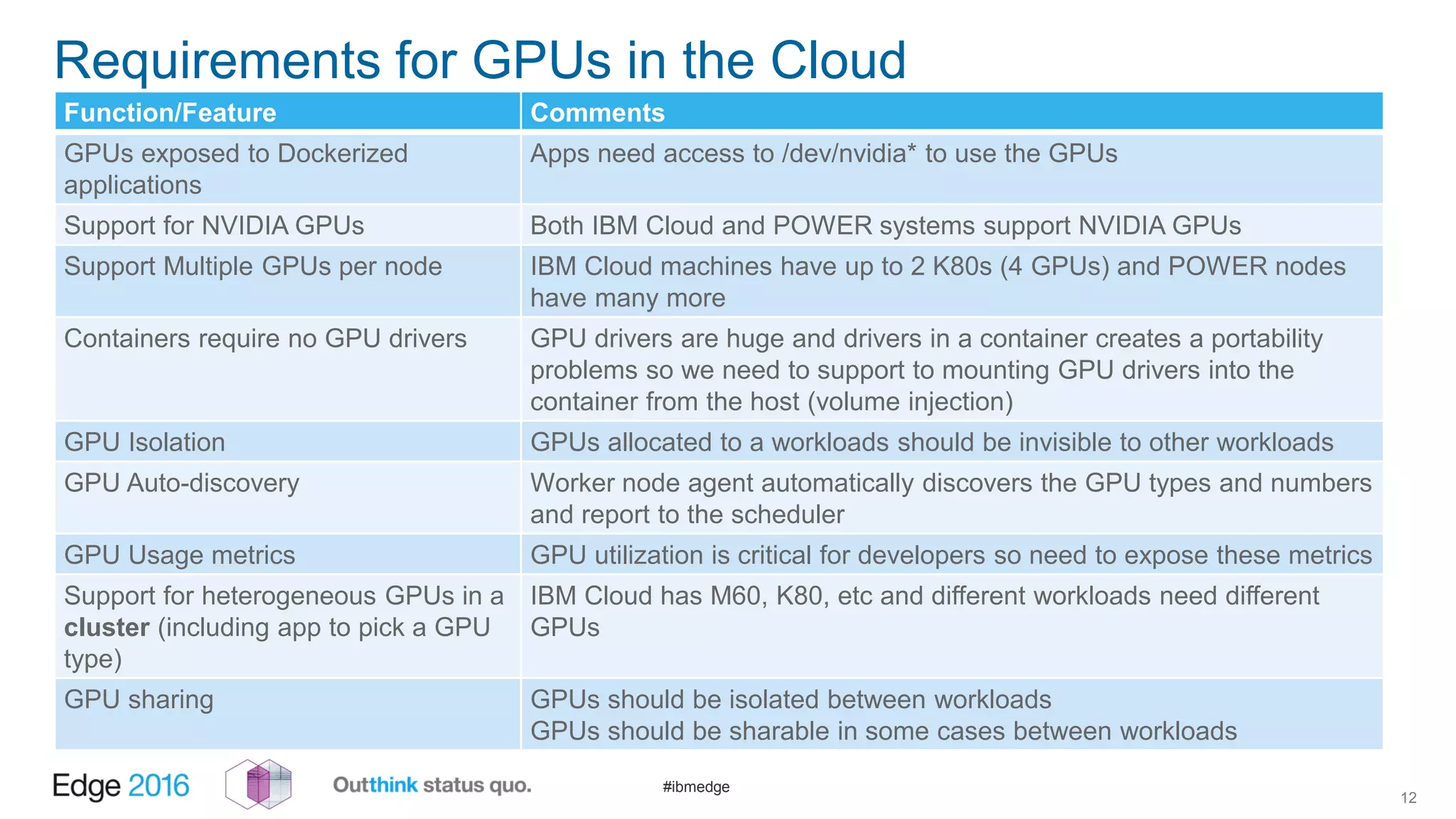

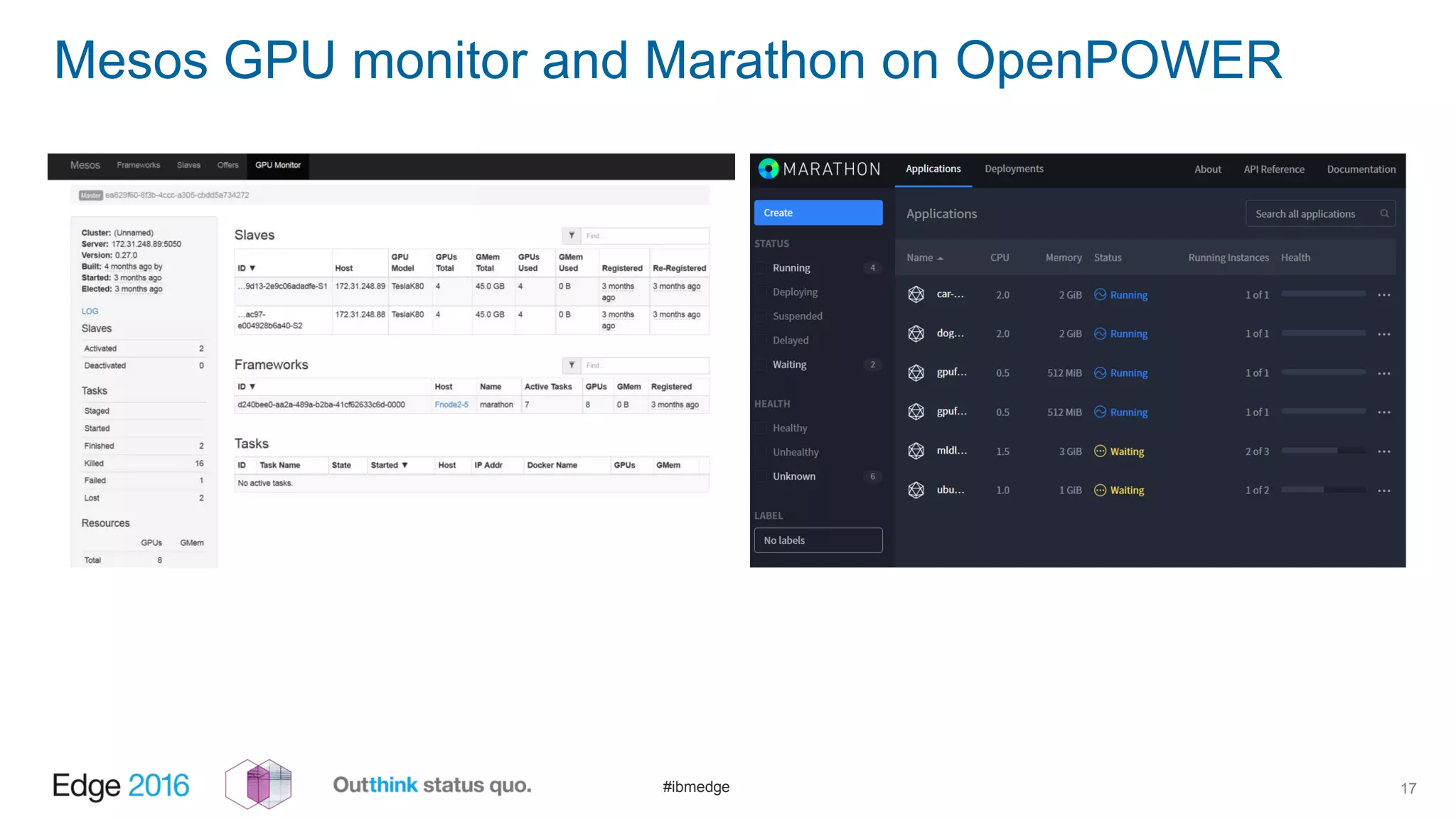

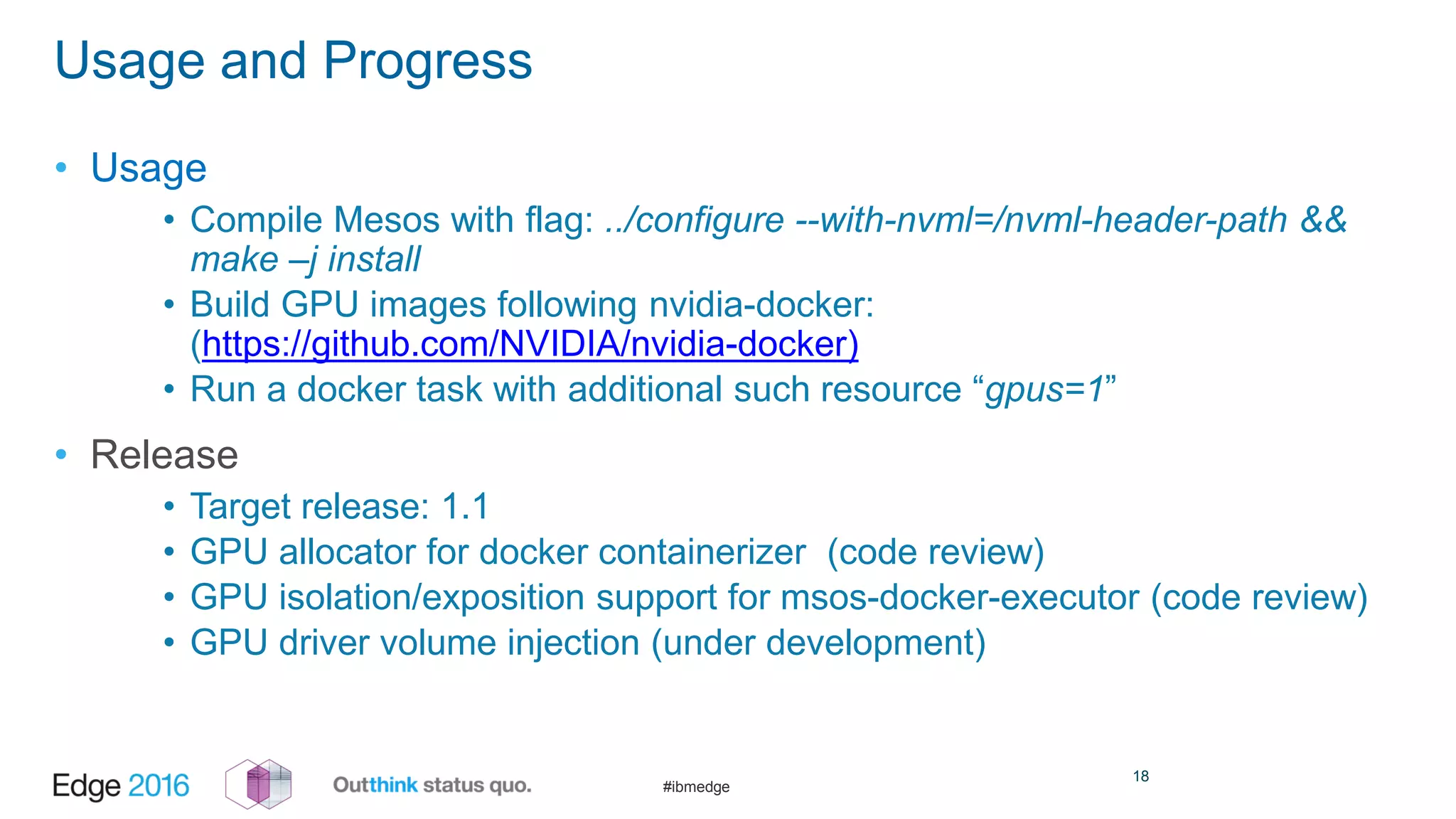

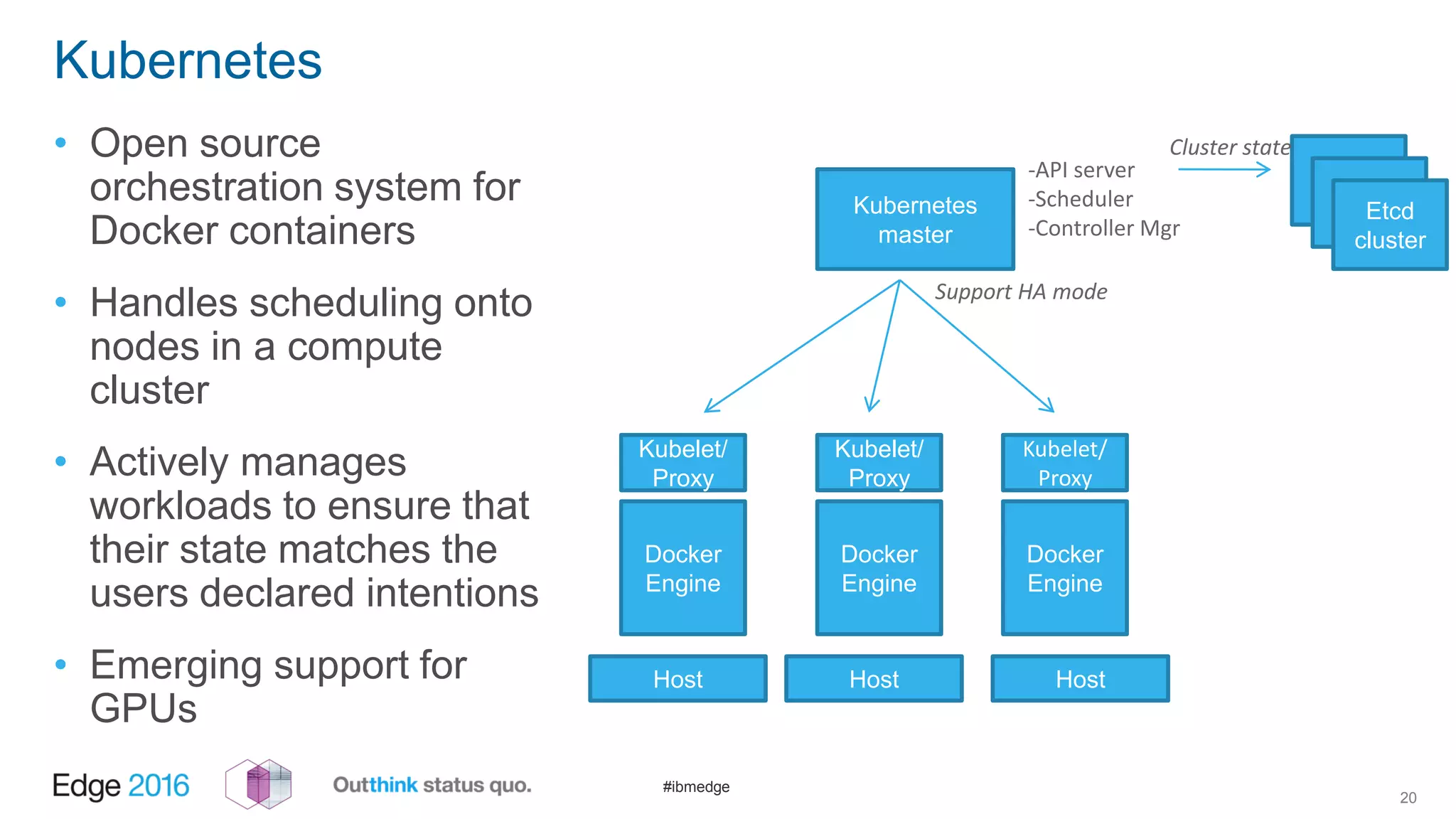

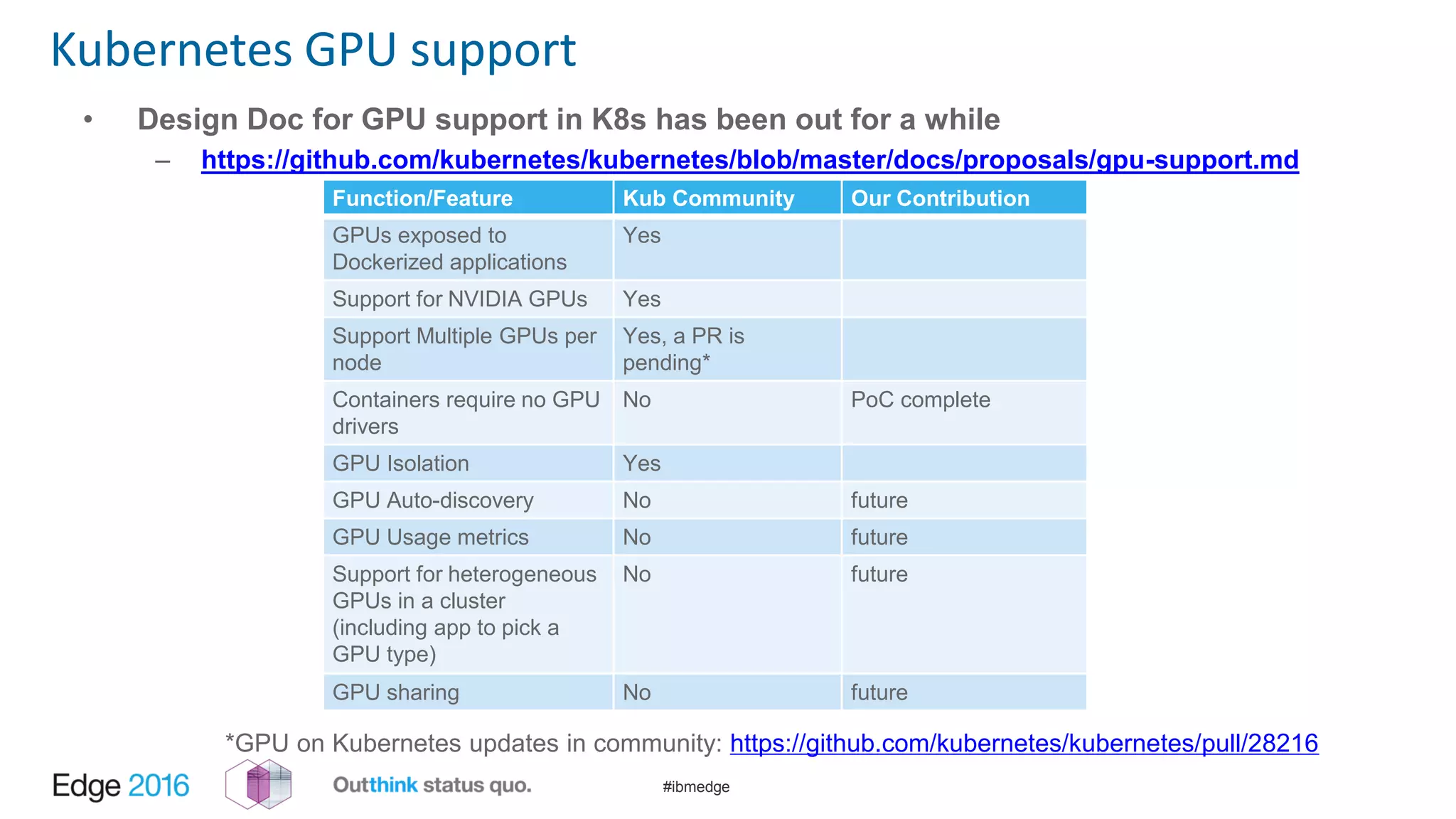

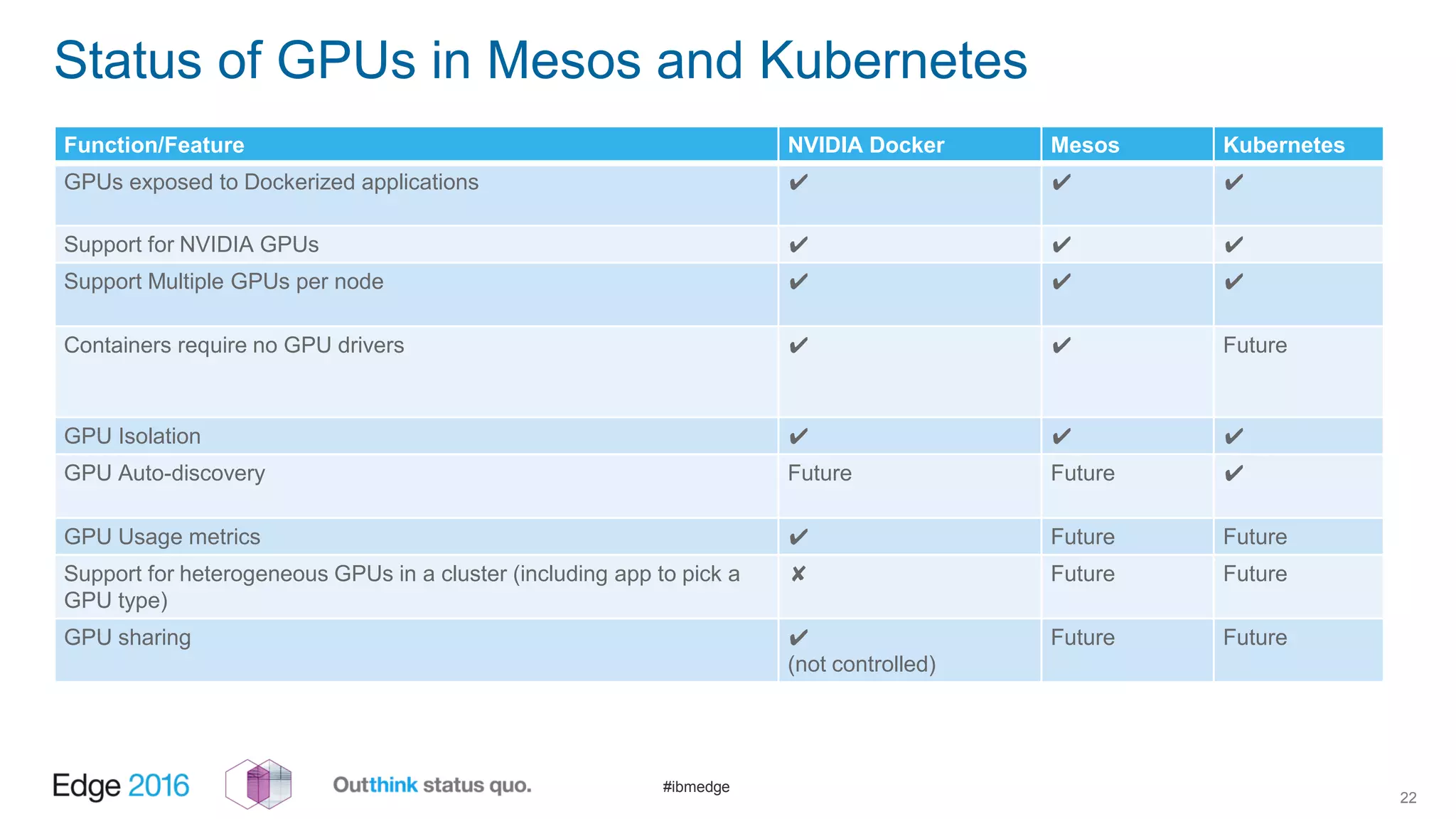

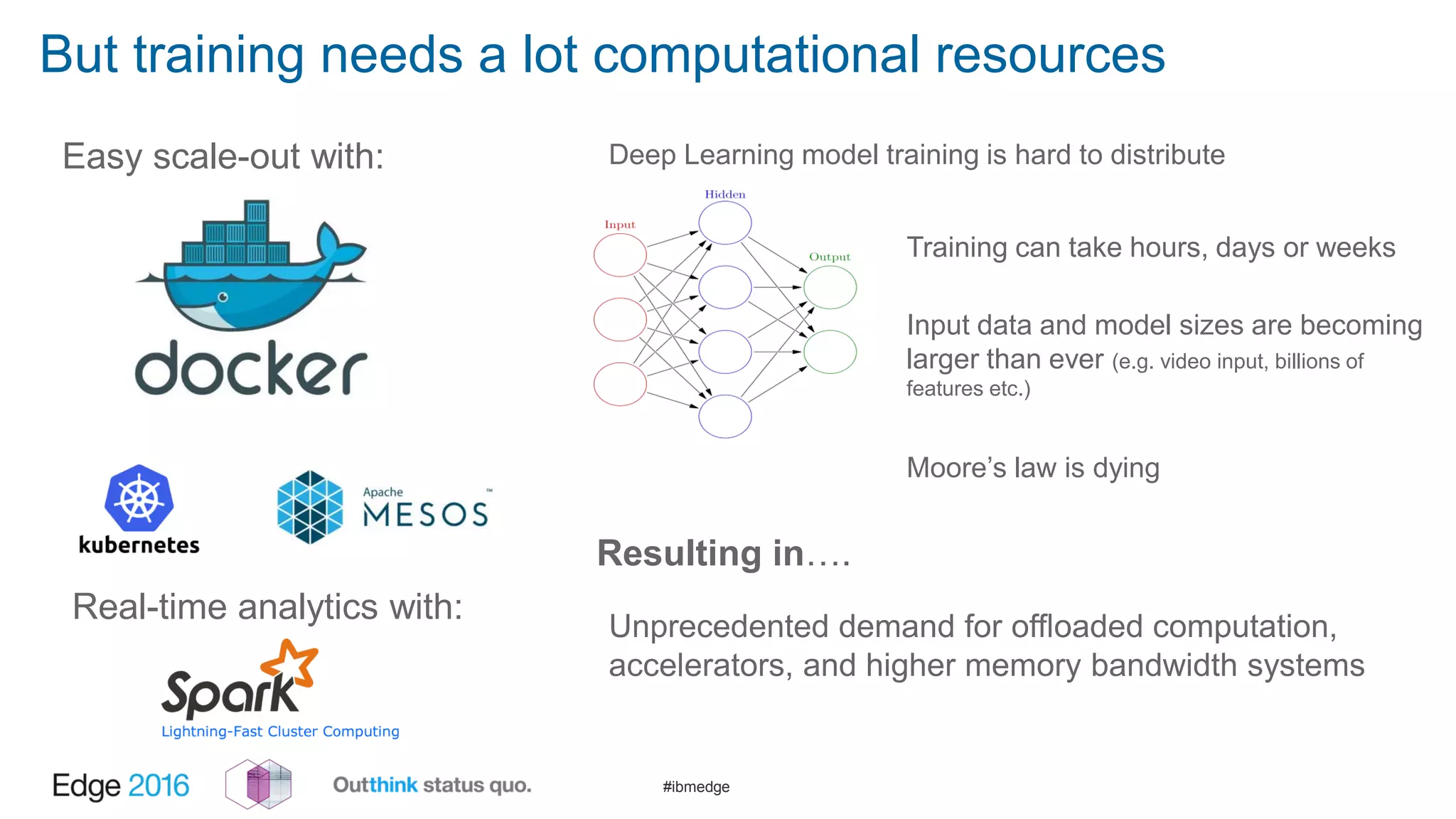

This document provides an overview of enabling cognitive workloads in the cloud using GPUs with Mesos, Docker, and Marathon on IBM POWER systems. It discusses requirements for GPUs in the cloud, such as exposing GPUs to containers and supporting multiple GPUs per node. It also summarizes GPU support in Mesos and Kubernetes. Finally, it demonstrates a deep learning workload that identifies dog breeds, running in Docker containers on GPU-equipped OpenPOWER hardware.
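The following is a minimal sketch of what "exposing GPUs to containers" can look like from the Marathon side. The Python builds a Marathon app definition that requests GPUs through the `gpus` field and runs a Docker image under the Mesos containerizer. It assumes Marathon was started with GPU support enabled (`--enable_features gpu_resources`) and that the Mesos agents use Nvidia GPU isolation (`gpu/nvidia`). The function name, app id, image name, and command are hypothetical placeholders, not the ones used in the talk.

```python
import json


def marathon_gpu_app(app_id: str, image: str, cmd: str,
                     gpus: int = 1, cpus: float = 1.0, mem_mb: int = 4096) -> dict:
    """Build a Marathon app definition that asks Mesos for GPUs.

    Hypothetical helper. It assumes Marathon has GPU resources enabled and
    that agents run the Mesos (unified) containerizer with GPU isolation.
    """
    if gpus < 1:
        raise ValueError("a GPU workload should request at least one GPU")
    return {
        "id": app_id,
        "cmd": cmd,
        "cpus": cpus,
        "mem": mem_mb,
        "gpus": gpus,          # Mesos offers GPUs as a scalar resource of whole devices
        "instances": 1,
        "container": {
            # "MESOS" runs the Docker image with the Mesos containerizer,
            # which is the containerizer that applies Mesos GPU isolation.
            "type": "MESOS",
            "docker": {"image": image},
        },
    }


if __name__ == "__main__":
    # Hypothetical values for a dog-breed classification job.
    app = marathon_gpu_app(
        app_id="/dog-breed-classifier",
        image="example/dog-breed-classifier:latest",
        cmd="python classify.py --images /data/dogs",
    )
    # The resulting JSON could be POSTed to Marathon's /v2/apps endpoint.
    print(json.dumps(app, indent=2))
```

Requesting whole GPUs in the app definition leaves it to Mesos to choose which devices on a multi-GPU node are handed to each container. This sketch does not pin specific devices.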

![OpenPOWER: Open Hardware for High Performance](https://image.slidesharecdn.com/edge1423-enablingcognitiveworkloadsonthecloud-gpuenablementwithmesosdockerandmarathononpowerv5-160927210758/75/Enabling-Cognitive-Workloads-on-the-Cloud-GPUs-with-Mesos-Docker-and-Marathon-on-POWER-9-2048.jpg)

OpenPOWER: Open Hardware for High Performance

Systems designed for big data analytics and superior cloud economics. Up to:

- 12 cores per CPU
- 96 hardware threads per CPU
- 1 TB RAM
- 7.6 Tb/s combined I/O bandwidth
- GPUs and FPGAs coming…

(Chart labels: OpenPOWER; Traditional Intel x86.)

Source: http://www.softlayer.com/bare-metal-search?processorModel[]=9