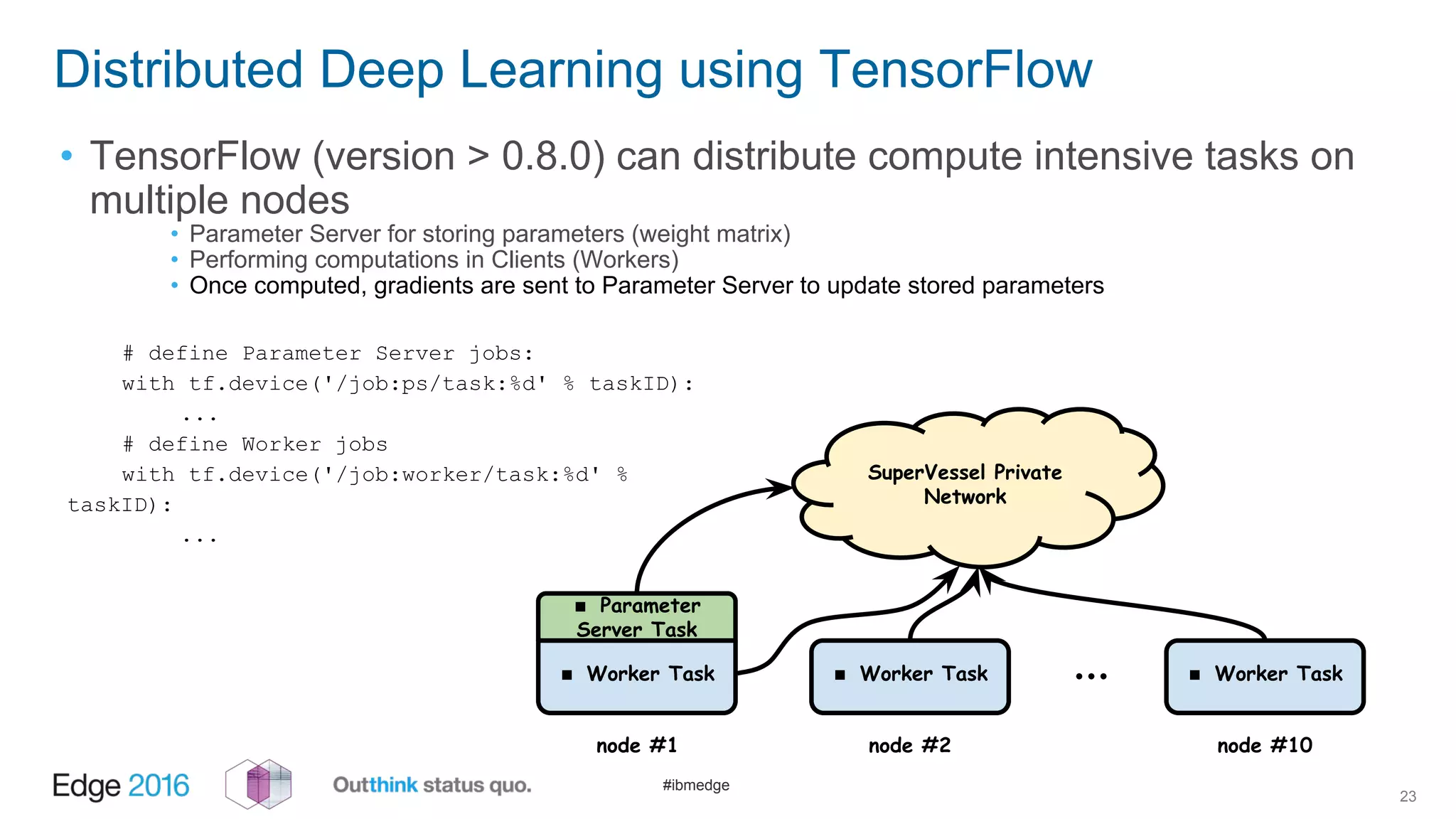

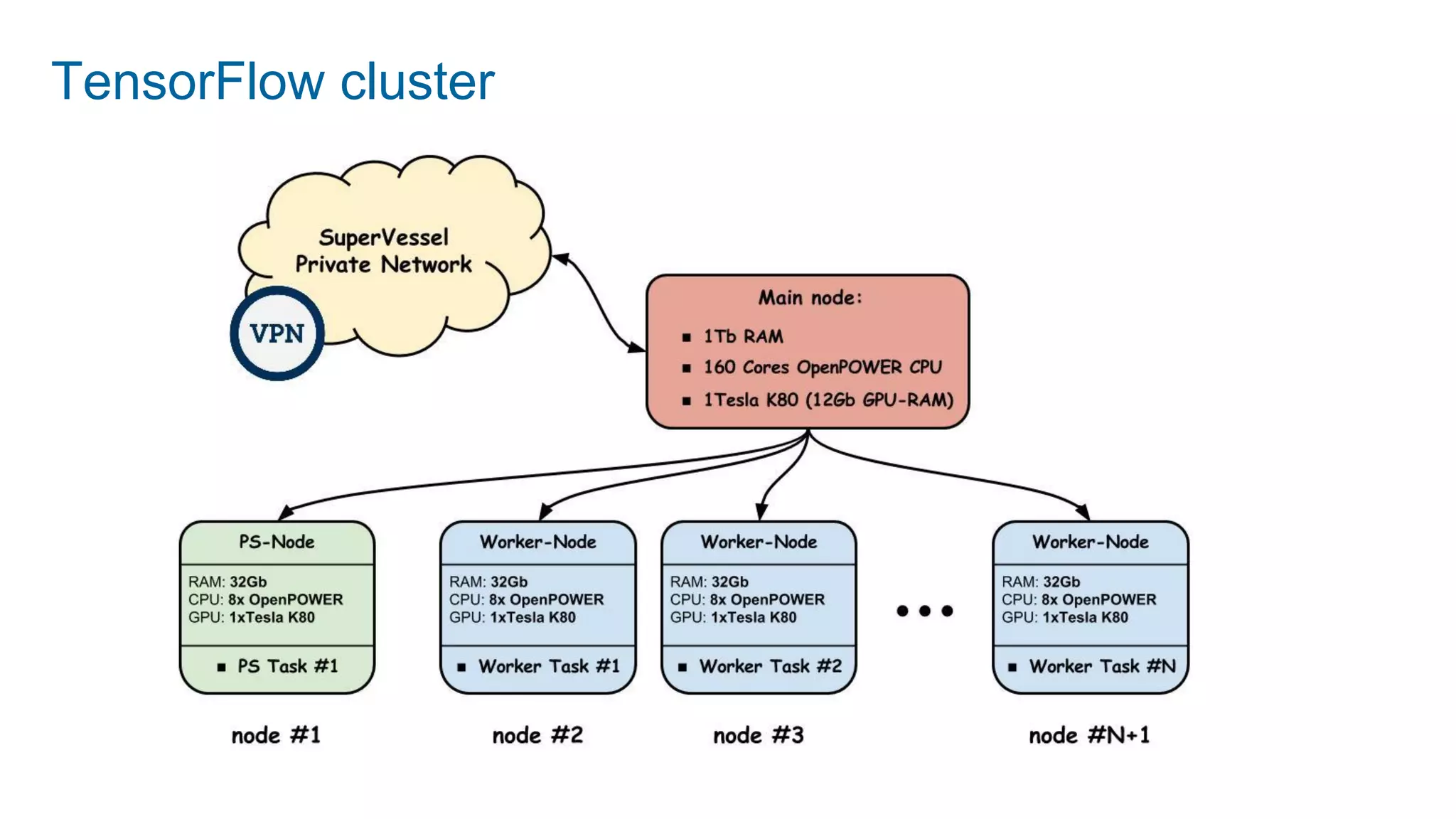

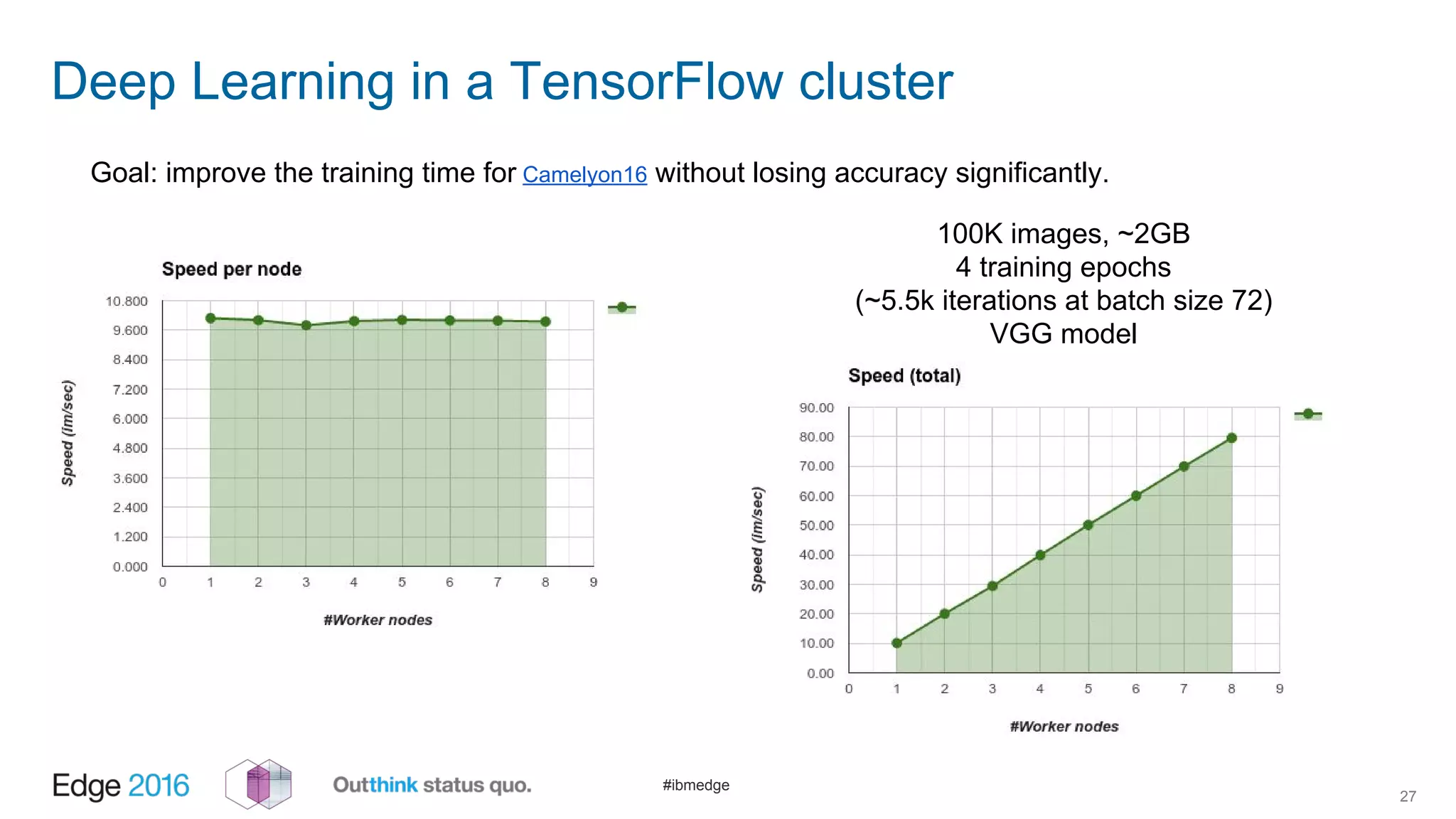

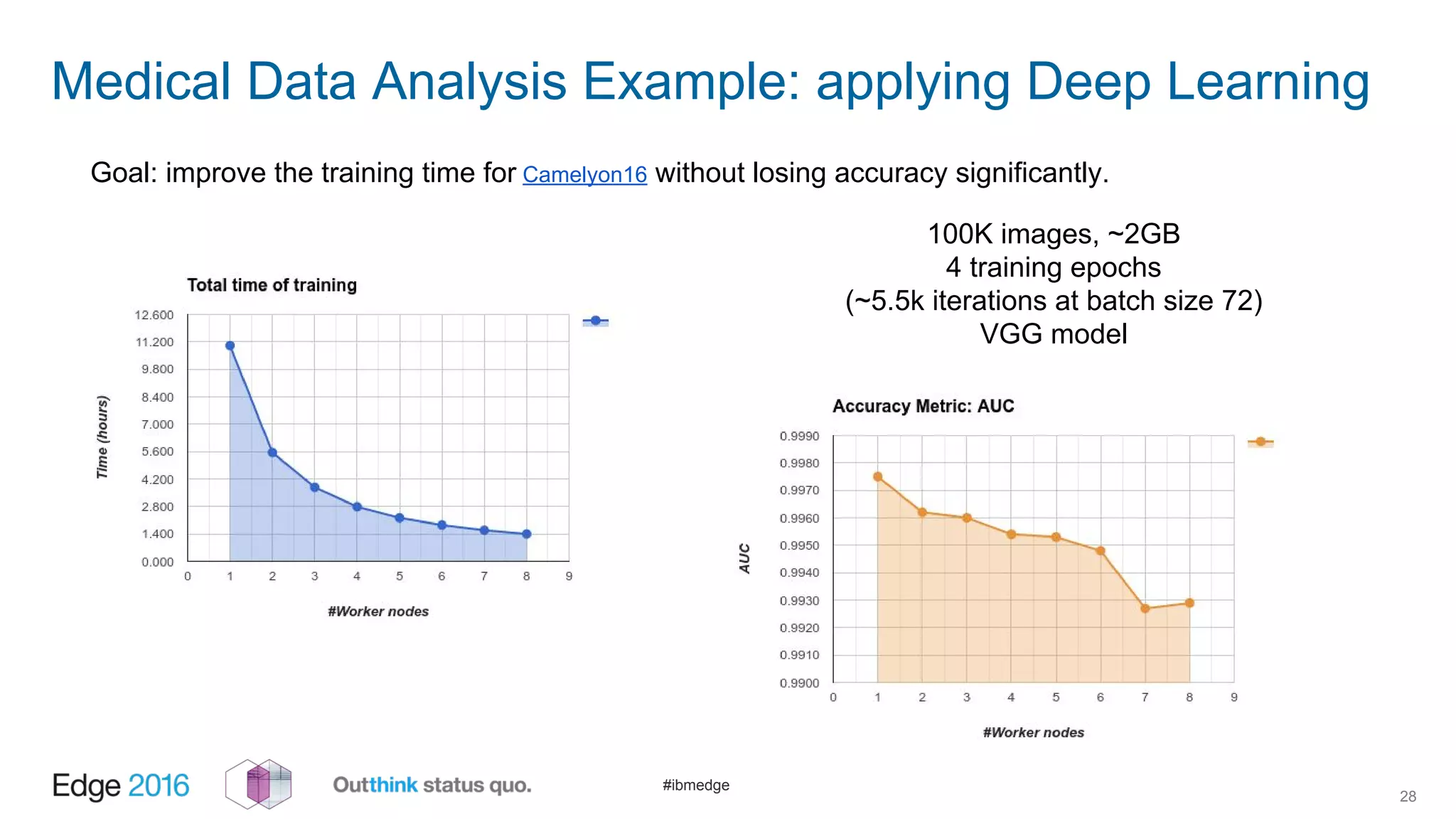

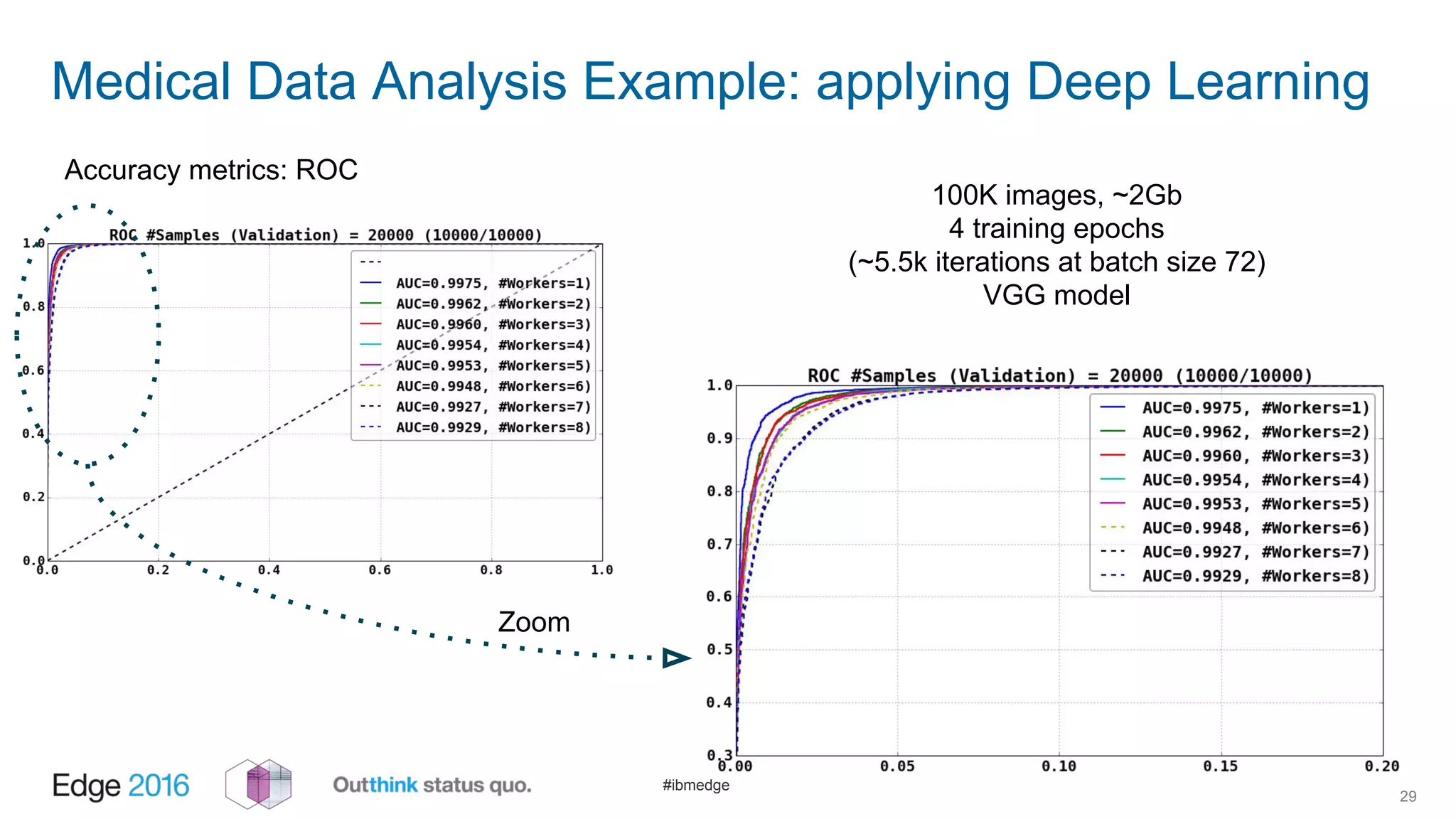

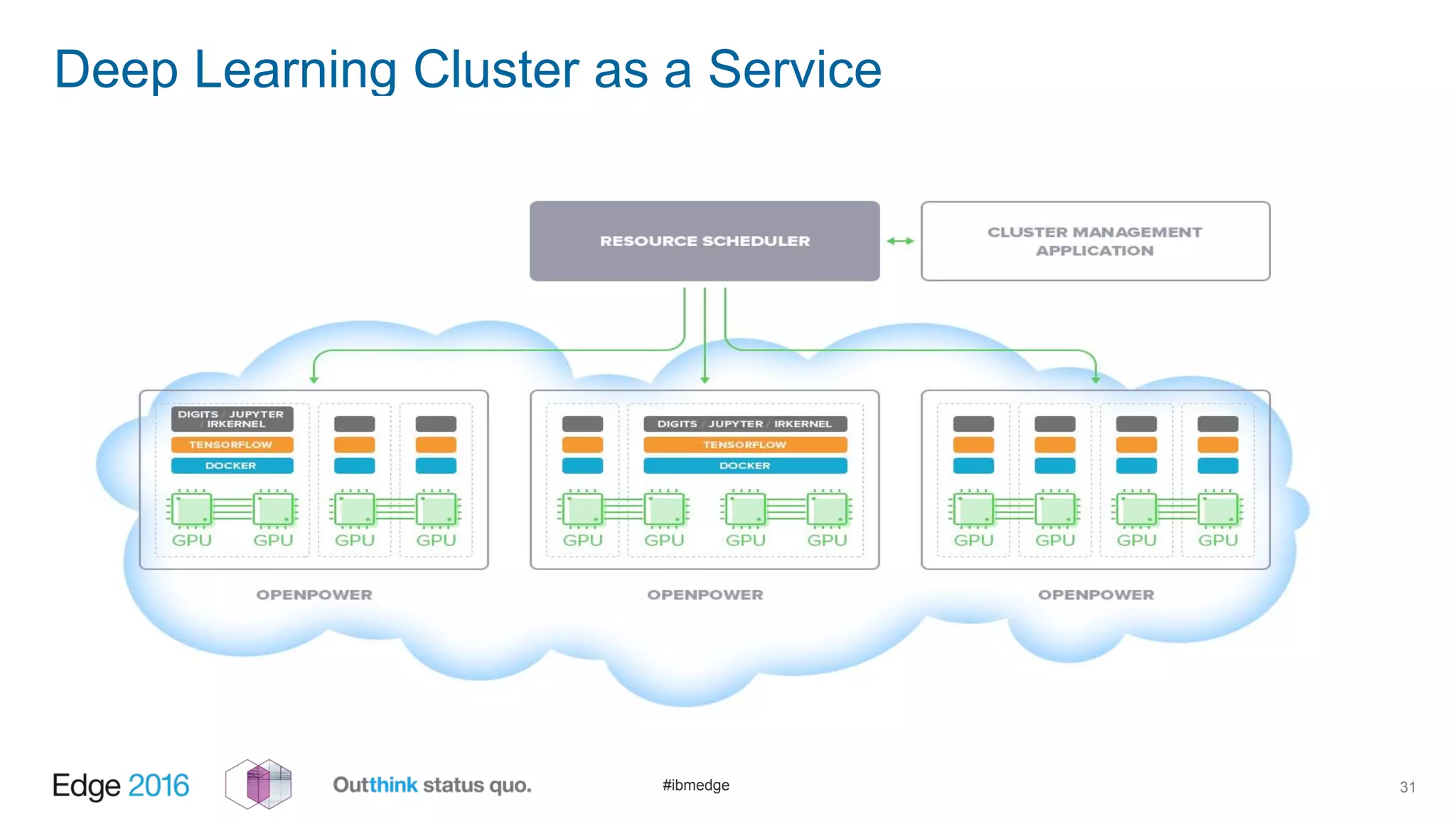

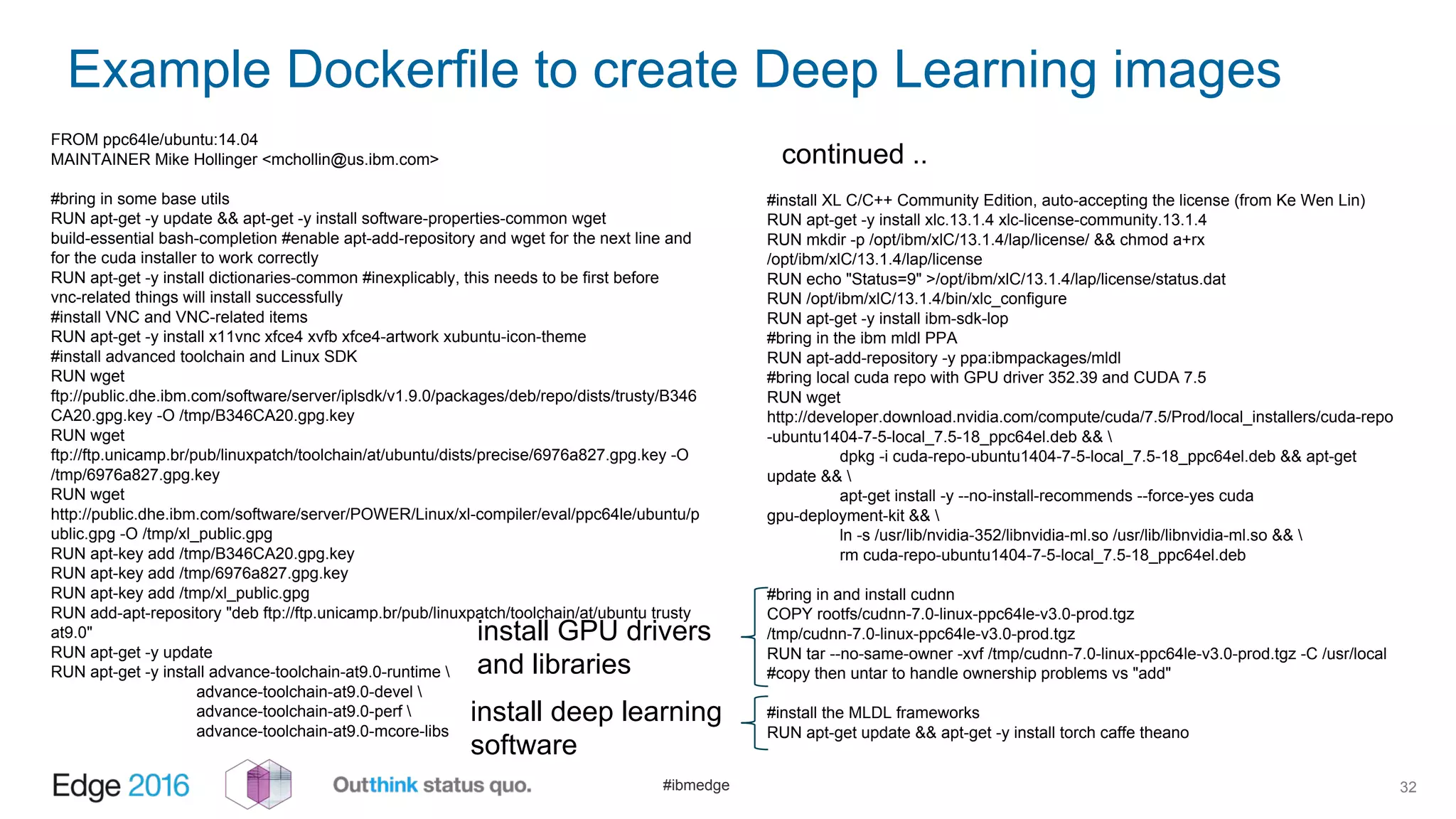

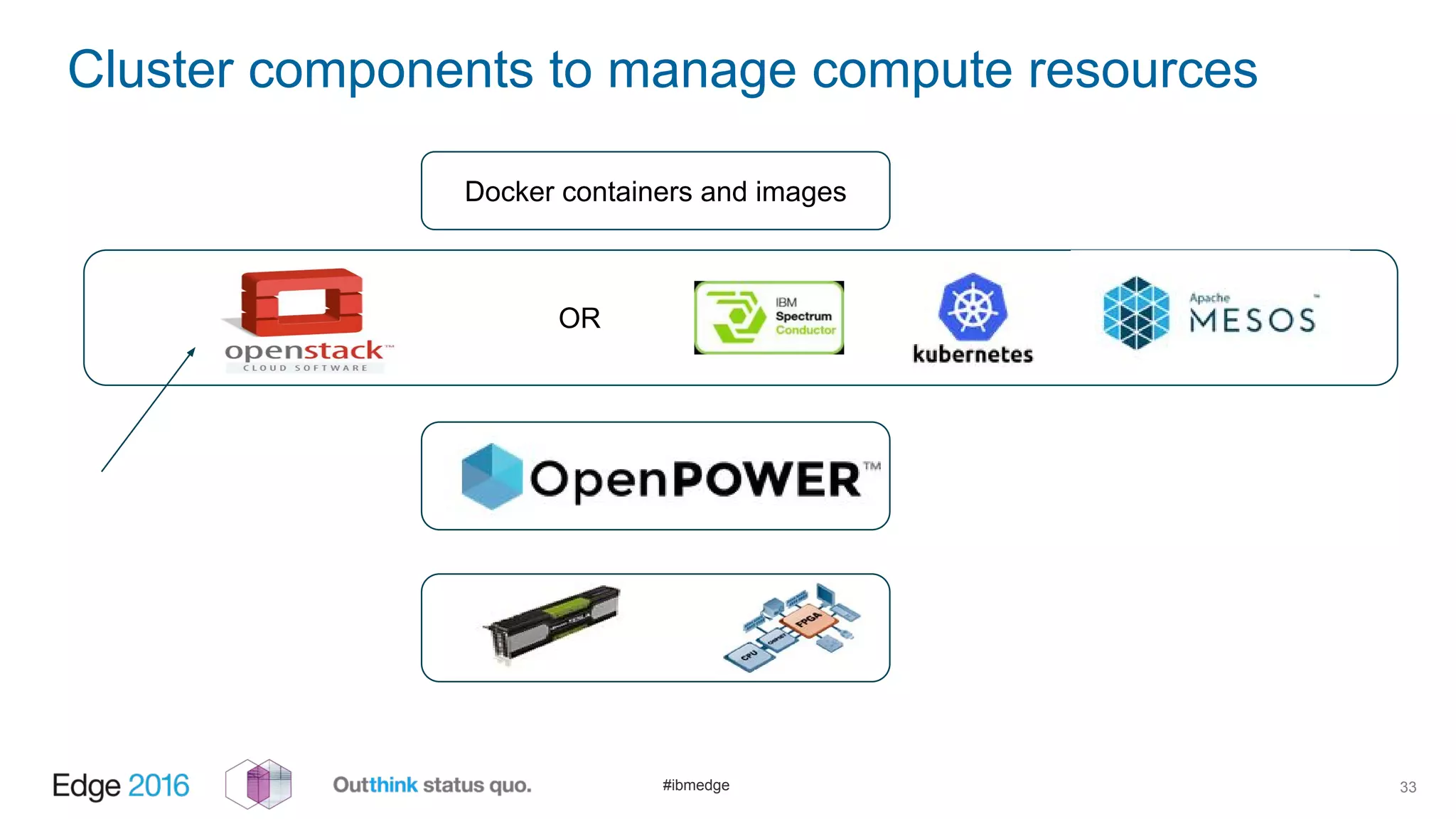

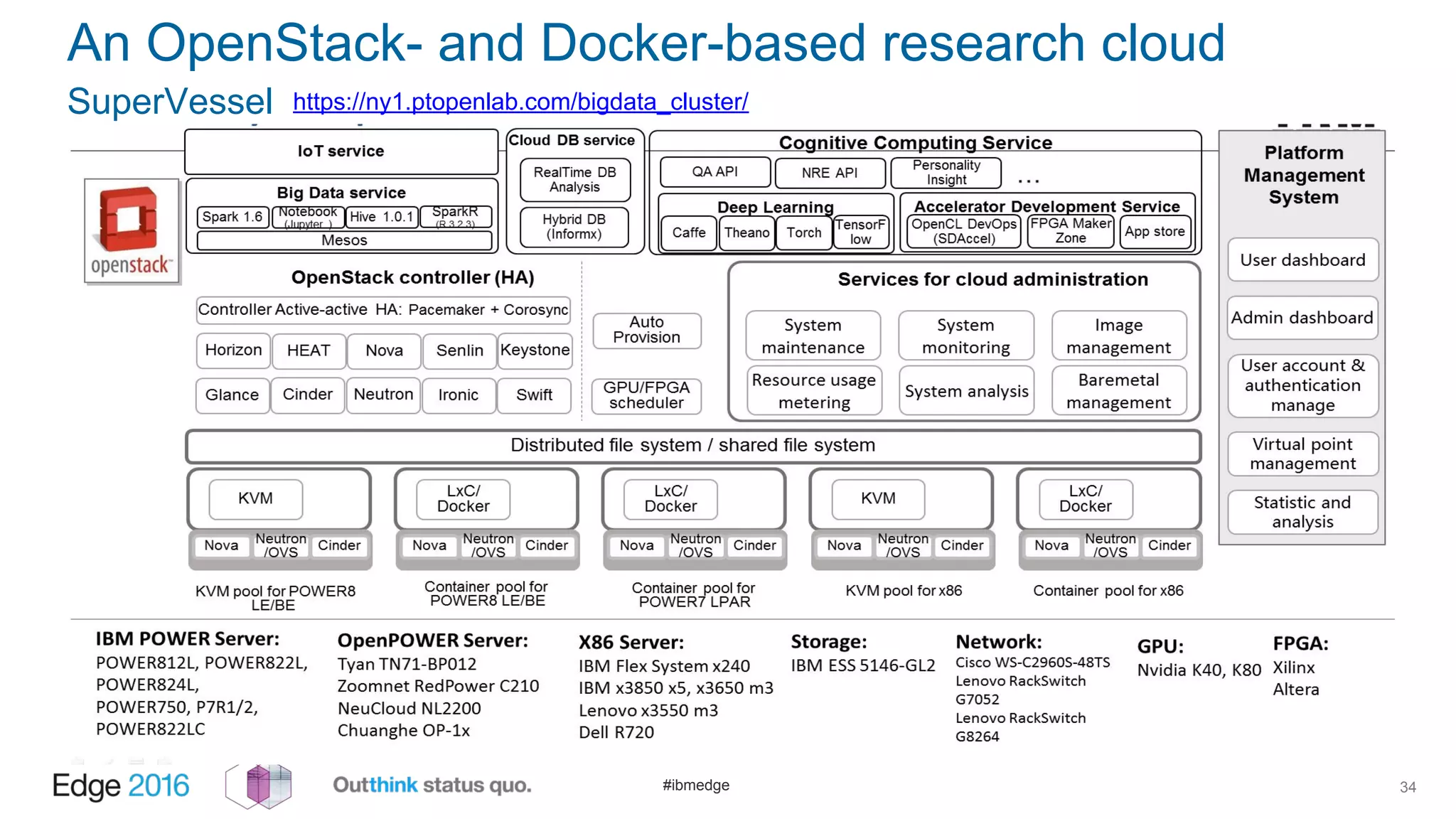

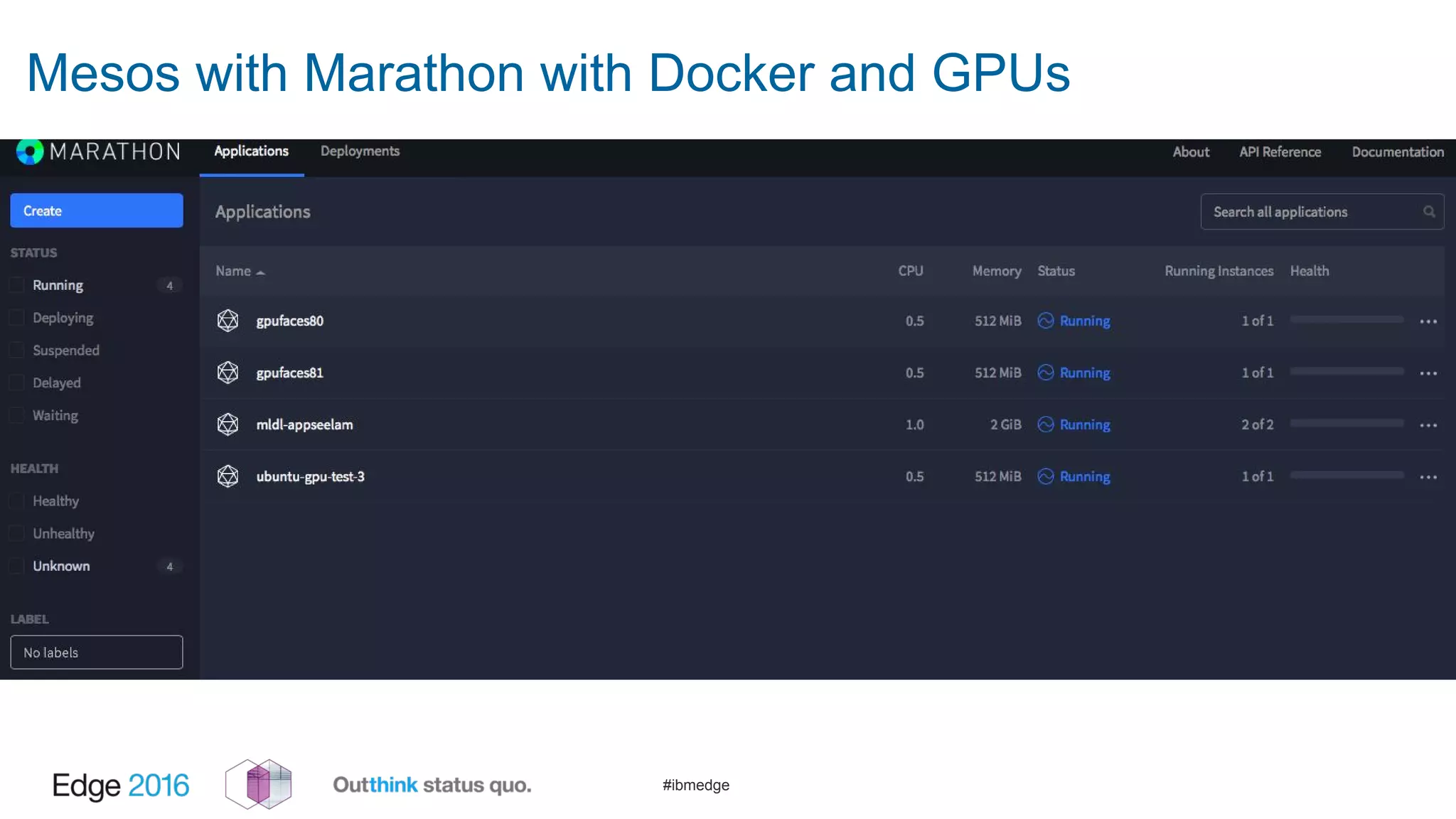

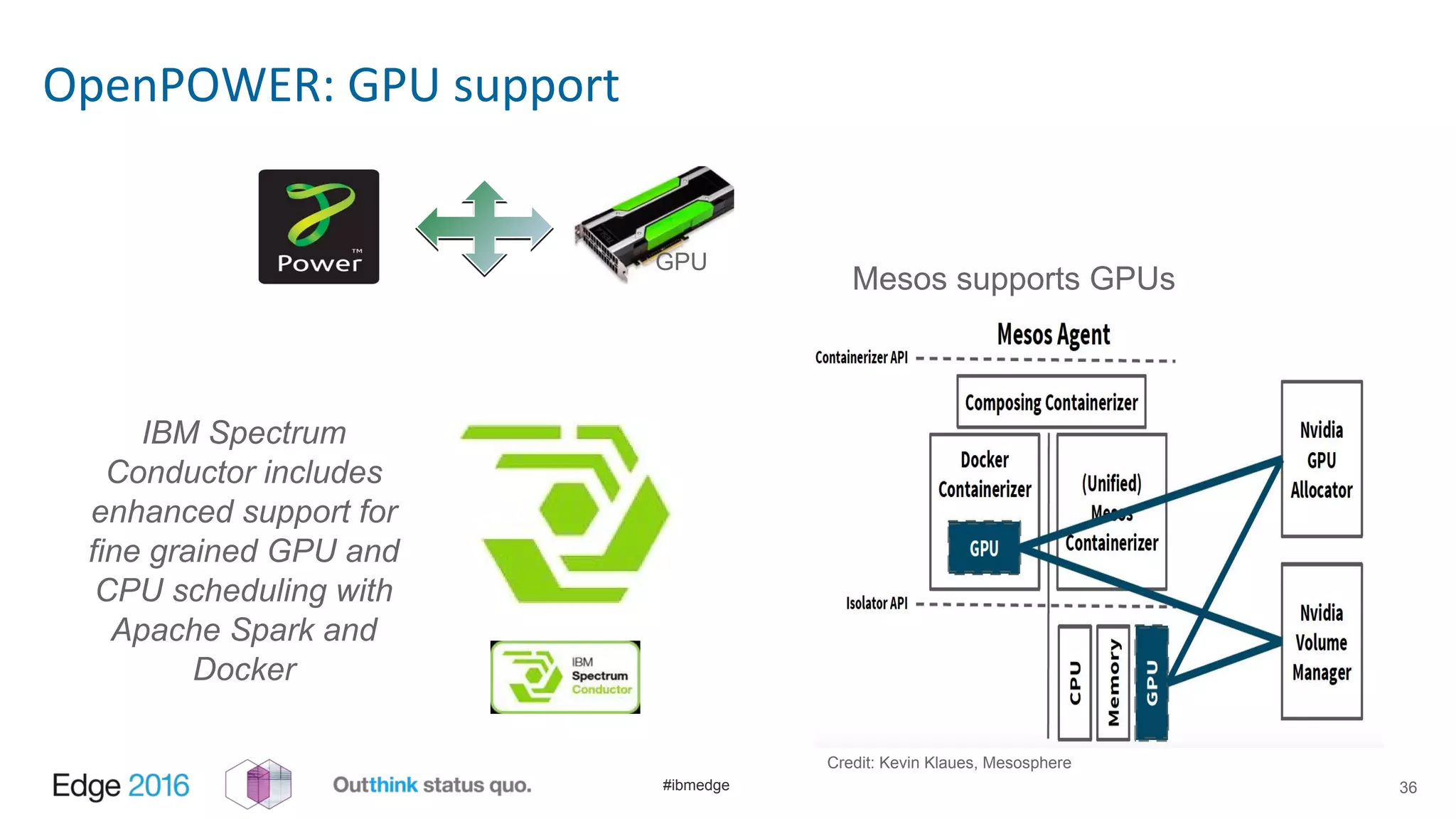

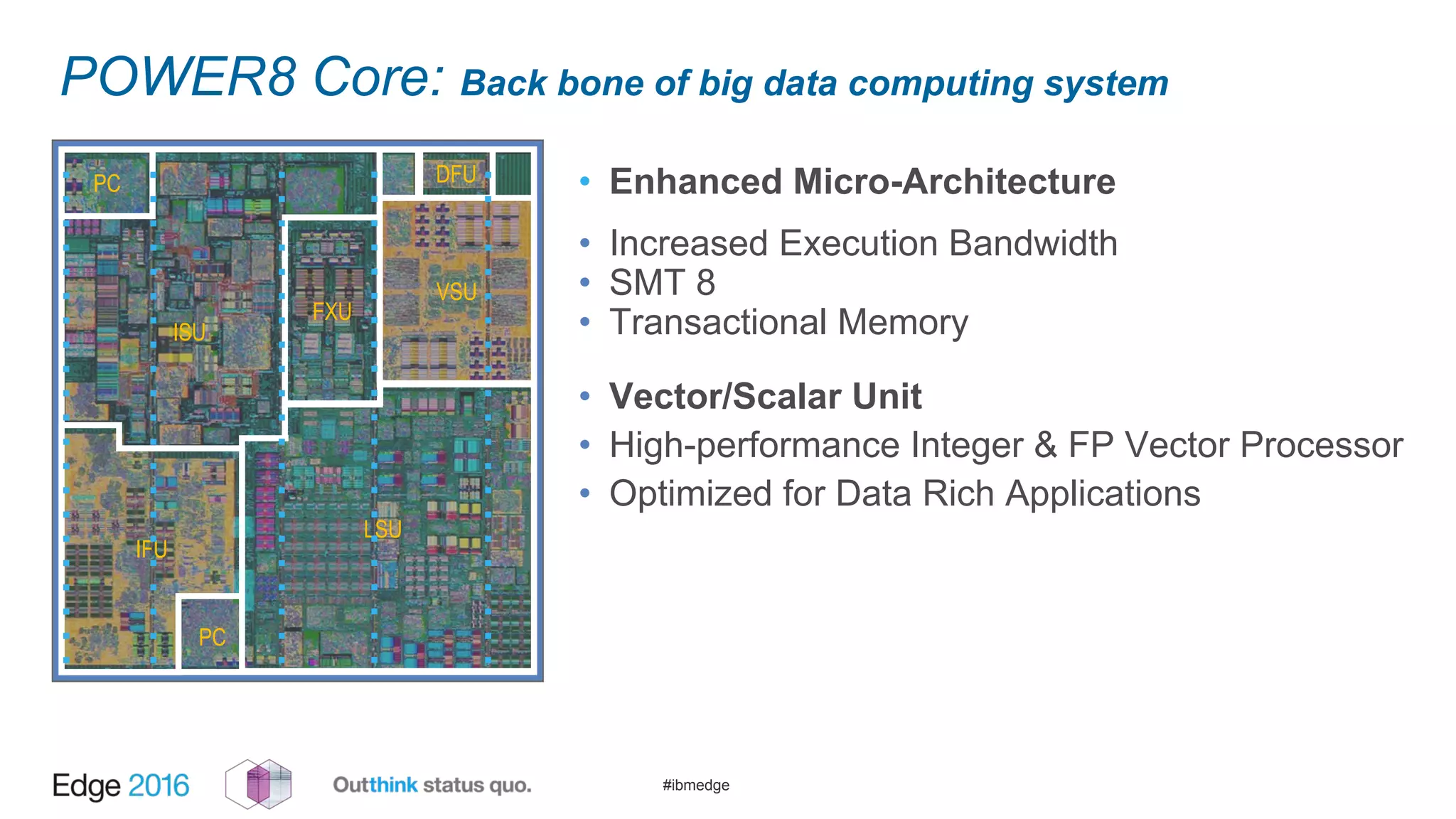

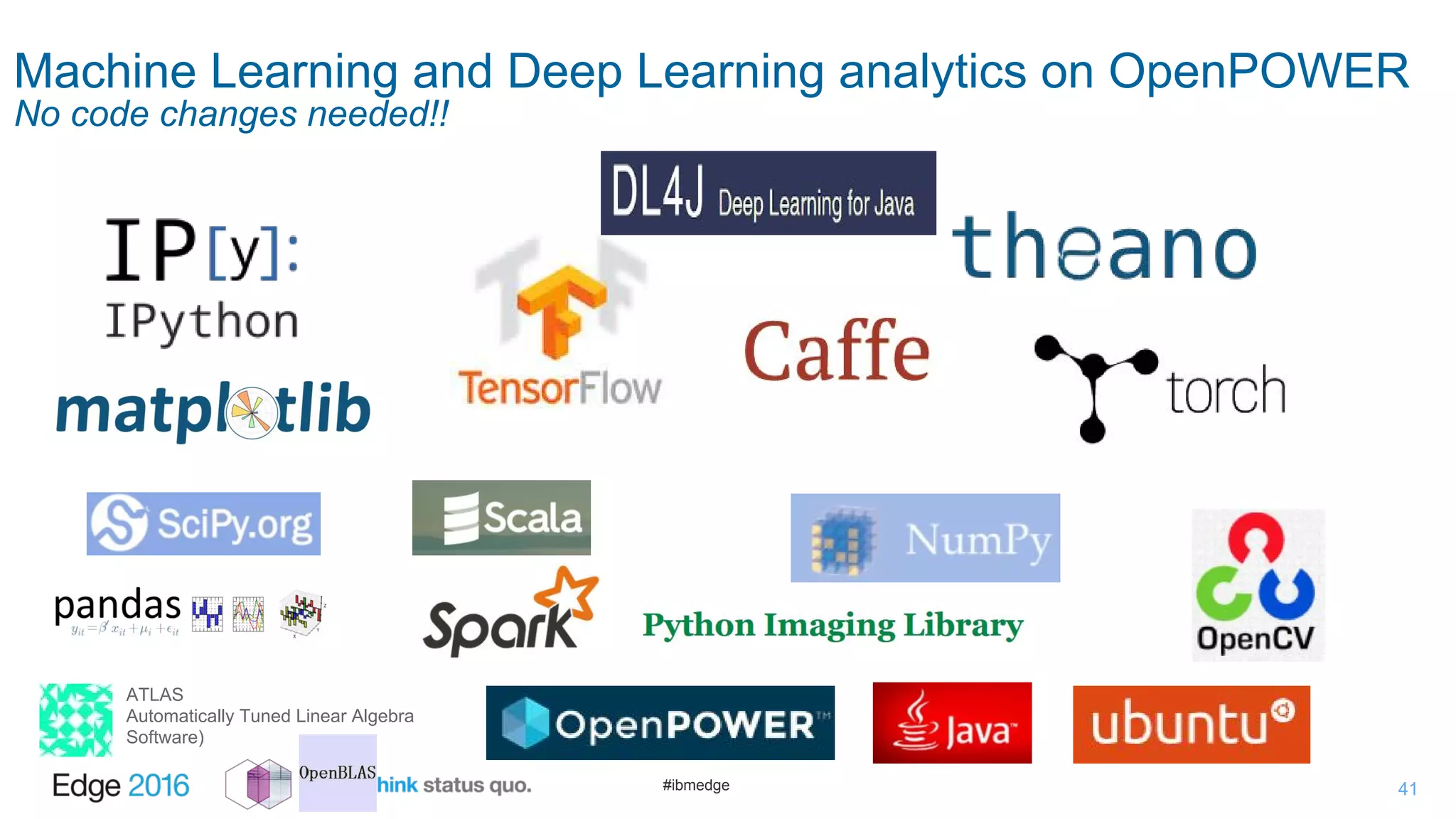

This document discusses scaling TensorFlow deep learning workloads using Docker, OpenPOWER systems, and GPUs. It gives an overview of distributed TensorFlow, in which a cluster is divided into parameter servers and worker nodes, and shows example Dockerfiles for building deep learning images. It also covers infrastructure components such as Docker, OpenStack, and Mesos for managing compute resources when a deep learning cluster is offered as a service.
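
As a rough illustration of the parameter-server and worker layout mentioned above, the following sketch builds a cluster description as a plain Python dictionary mapping job names to task addresses. This is the general shape that TensorFlow's `tf.train.ClusterSpec` accepts, but the sketch deliberately does not import TensorFlow. The hostnames, the port `2222`, and the helper names `build_cluster_spec` and `task_address` are assumptions made for illustration and do not come from the slides.

```python
import json


def build_cluster_spec(ps_hosts, worker_hosts, port=2222):
    """Return a dict mapping job names to lists of "host:port" addresses.

    The "ps" job holds parameter servers; the "worker" job holds the
    nodes that run training steps. Hostnames and port are hypothetical.
    """
    if not ps_hosts or not worker_hosts:
        raise ValueError("need at least one parameter server and one worker")
    return {
        "ps": [f"{host}:{port}" for host in ps_hosts],
        "worker": [f"{host}:{port}" for host in worker_hosts],
    }


def task_address(cluster, job_name, task_index):
    """Look up the address of one task, e.g. worker number 1."""
    return cluster[job_name][task_index]


# Hypothetical layout: one parameter server and two workers.
cluster = build_cluster_spec(
    ps_hosts=["ps0.example.com"],
    worker_hosts=["worker0.example.com", "worker1.example.com"],
)

print(json.dumps(cluster, indent=2))
print(task_address(cluster, "worker", 1))
```

In a containerized setup, each container would typically be started with its own job name and task index and would use a mapping like this one to find its peers. How that is wired up depends on the orchestration layer in use.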