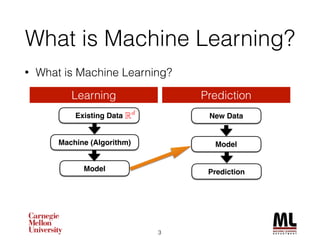

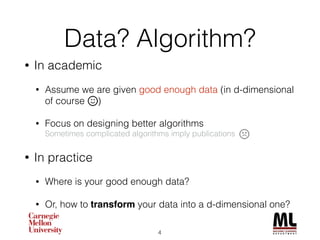

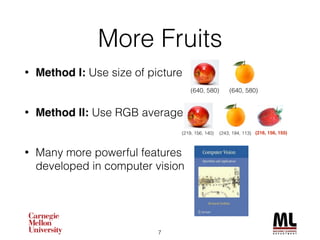

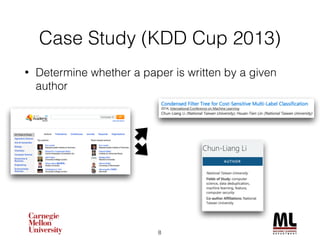

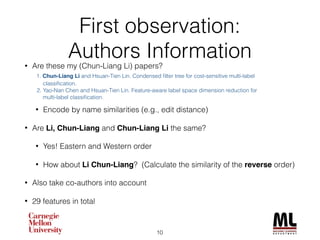

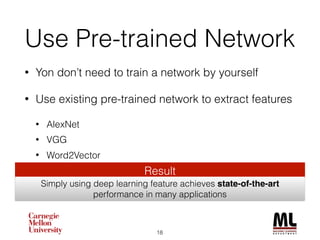

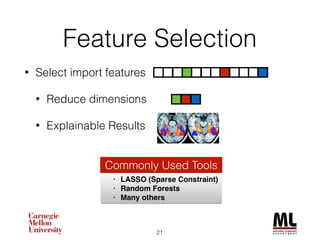

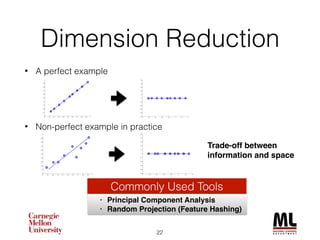

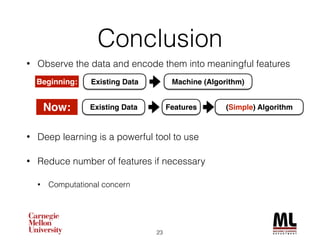

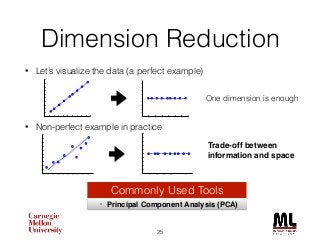

This document discusses feature engineering in machine learning: creating meaningful features from existing data to improve algorithm performance. It includes case studies, such as the KDD Cup 2013, that illustrate the effective use of feature selection and of dimensionality reduction techniques such as PCA. It also emphasizes the role of deep learning in automating feature extraction and its impact on various applications.
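As a rough illustration of two ideas named above, deriving a new feature from existing columns and then reducing dimensionality with PCA, here is a minimal sketch. The toy data (`height`, `weight`), the engineered `bmi` column, and the helper `pca` are all hypothetical and not taken from the slides or from the KDD Cup 2013 case study. PCA is implemented here via NumPy's SVD, which is one common way to compute it.

```python
import numpy as np


def pca(X, n_components):
    """Project X (n_samples, n_features) onto its top principal components.

    Returns the projected data, the principal directions (one per row),
    and the fraction of total variance each kept component explains.
    """
    X_centered = X - X.mean(axis=0)
    # SVD of the centered data: rows of Vt are the principal directions,
    # ordered by decreasing singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = (S ** 2) / (X.shape[0] - 1)
    ratio = explained_variance[:n_components] / explained_variance.sum()
    return X_centered @ components.T, components, ratio


# Hypothetical toy data: two raw measurements per sample.
rng = np.random.default_rng(0)
height = rng.normal(1.7, 0.1, size=200)   # metres
weight = rng.normal(70.0, 10.0, size=200)  # kilograms

# Feature engineering: derive a new feature from the existing columns.
bmi = weight / height ** 2

X = np.column_stack([height, weight, bmi])

# Standardize so that no column dominates purely because of its units.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

# Dimensionality reduction: keep the two strongest directions.
Z, components, ratio = pca(X_std, n_components=2)
print(Z.shape)
print(ratio)
```

Because `bmi` is computed from the other two columns, the three features are strongly correlated, so two components should capture most of the variance in this toy setup. In practice a library implementation such as scikit-learn's `PCA` would usually be preferred over a hand-rolled one.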