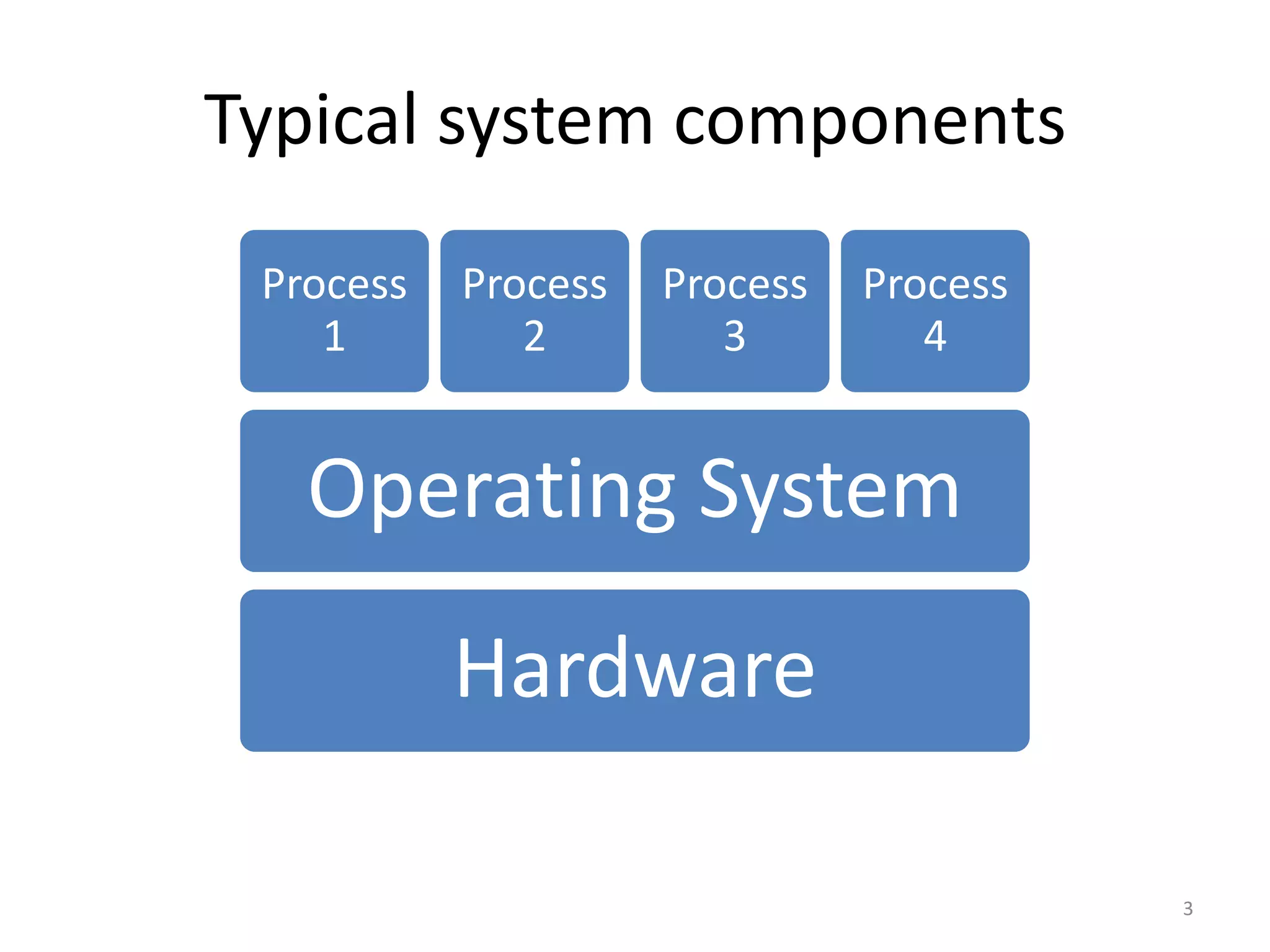

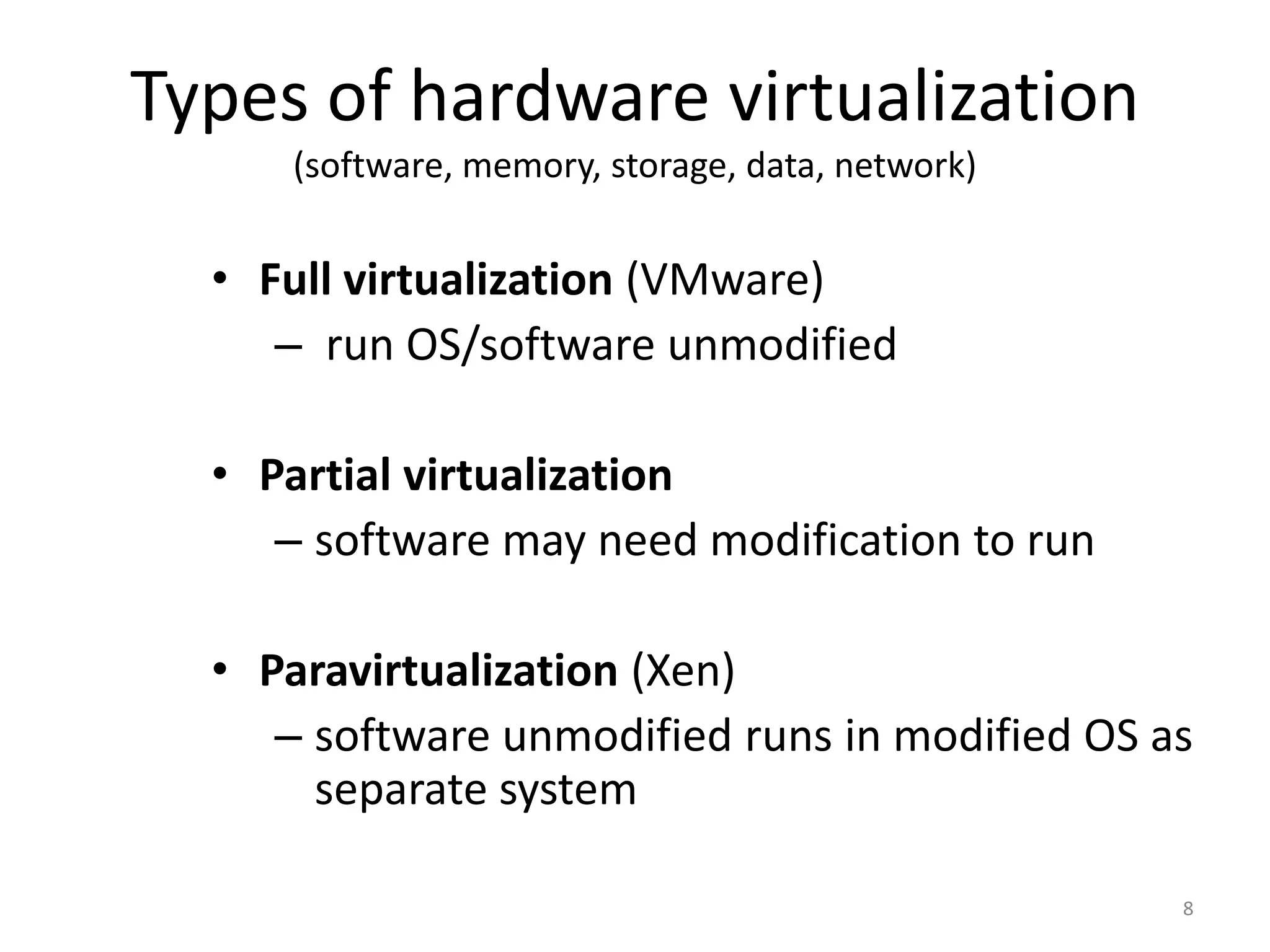

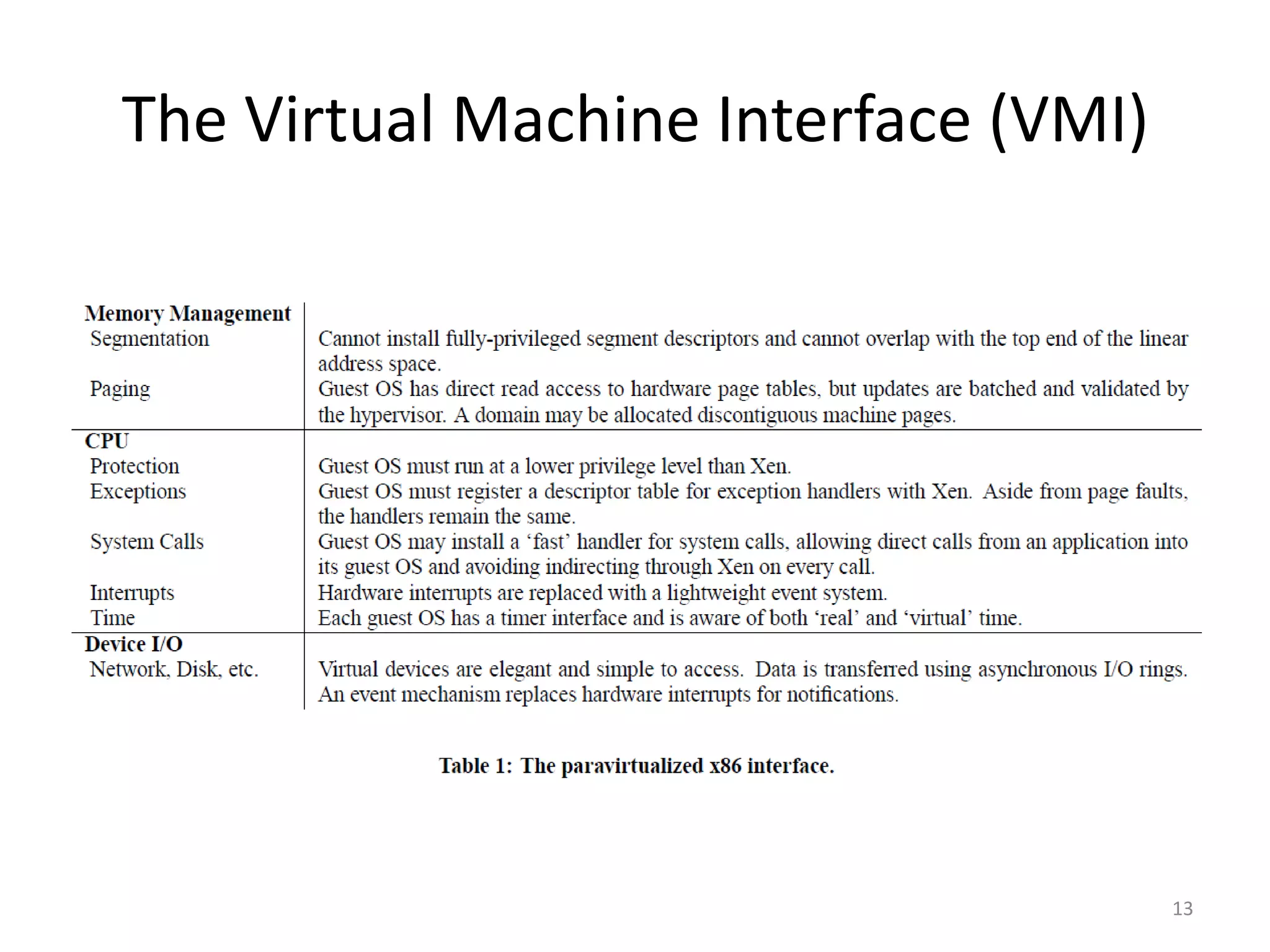

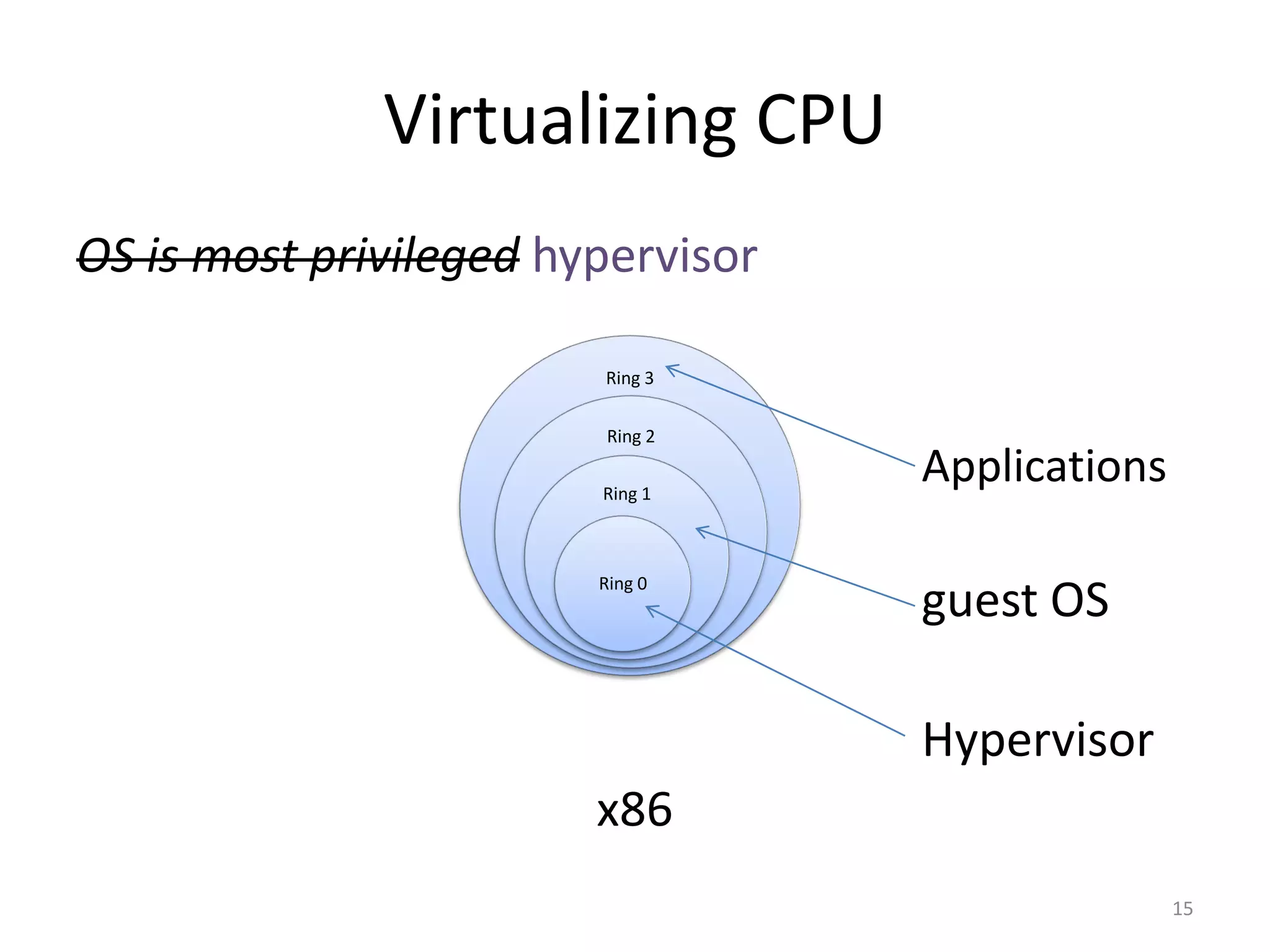

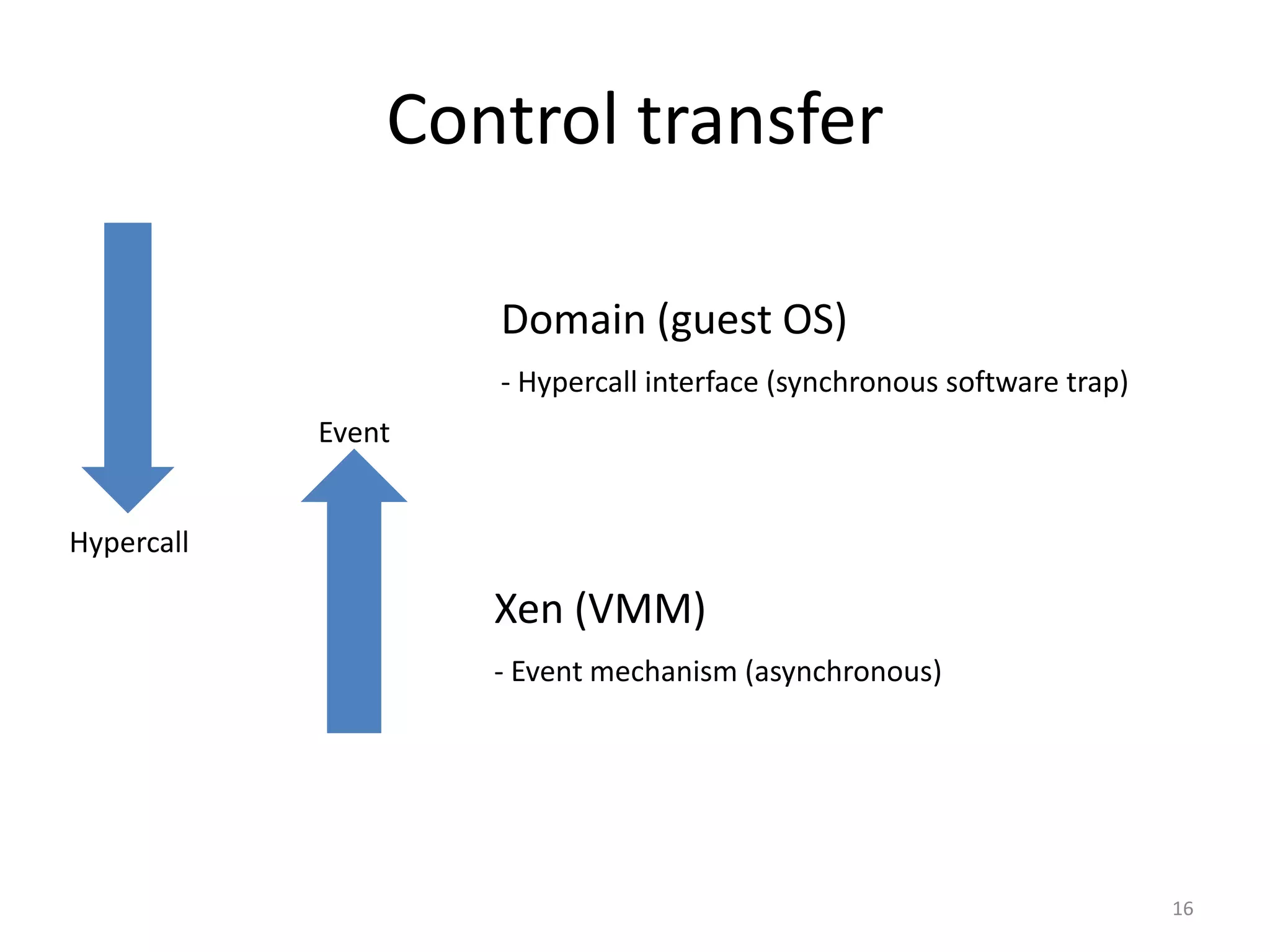

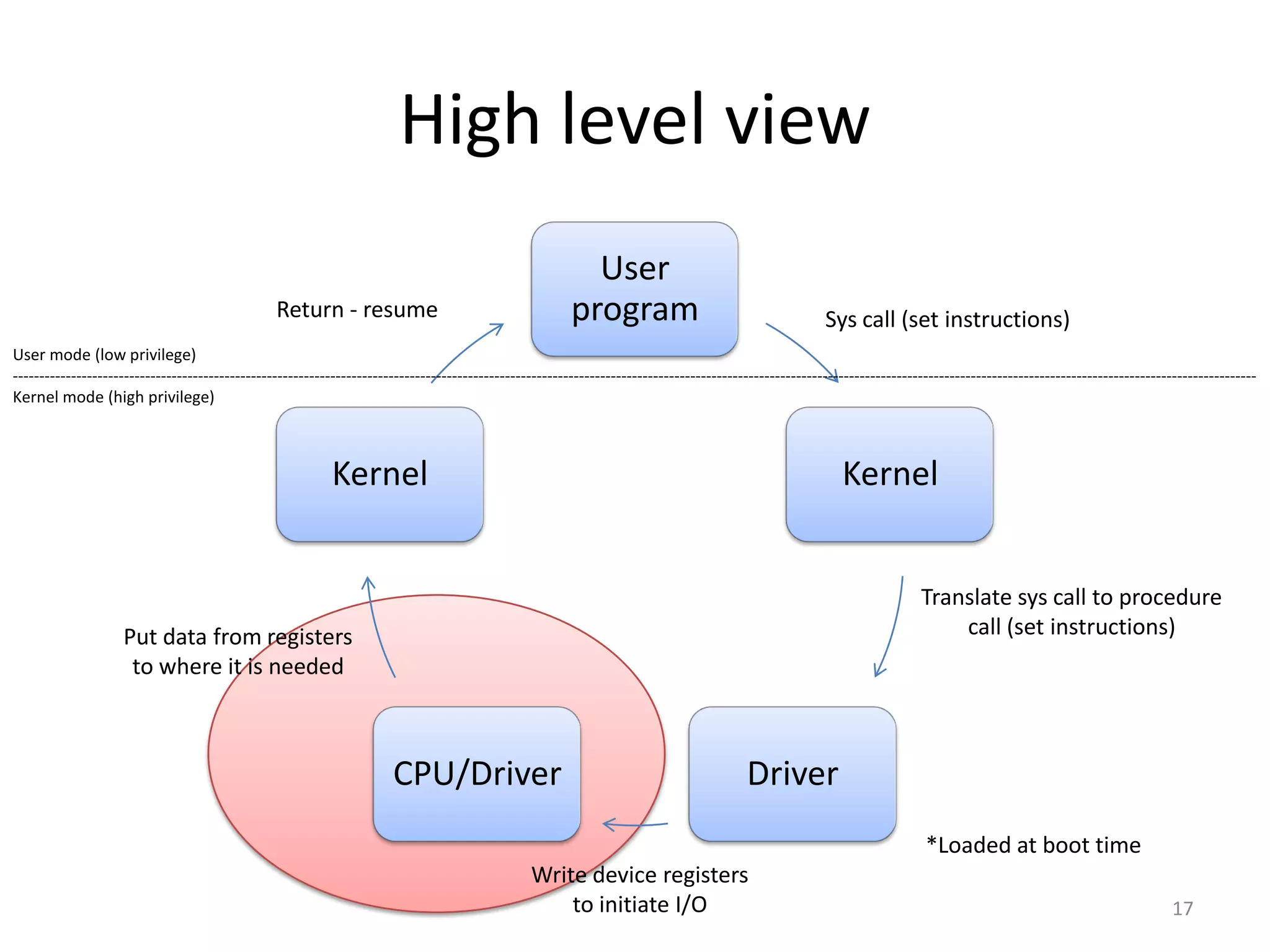

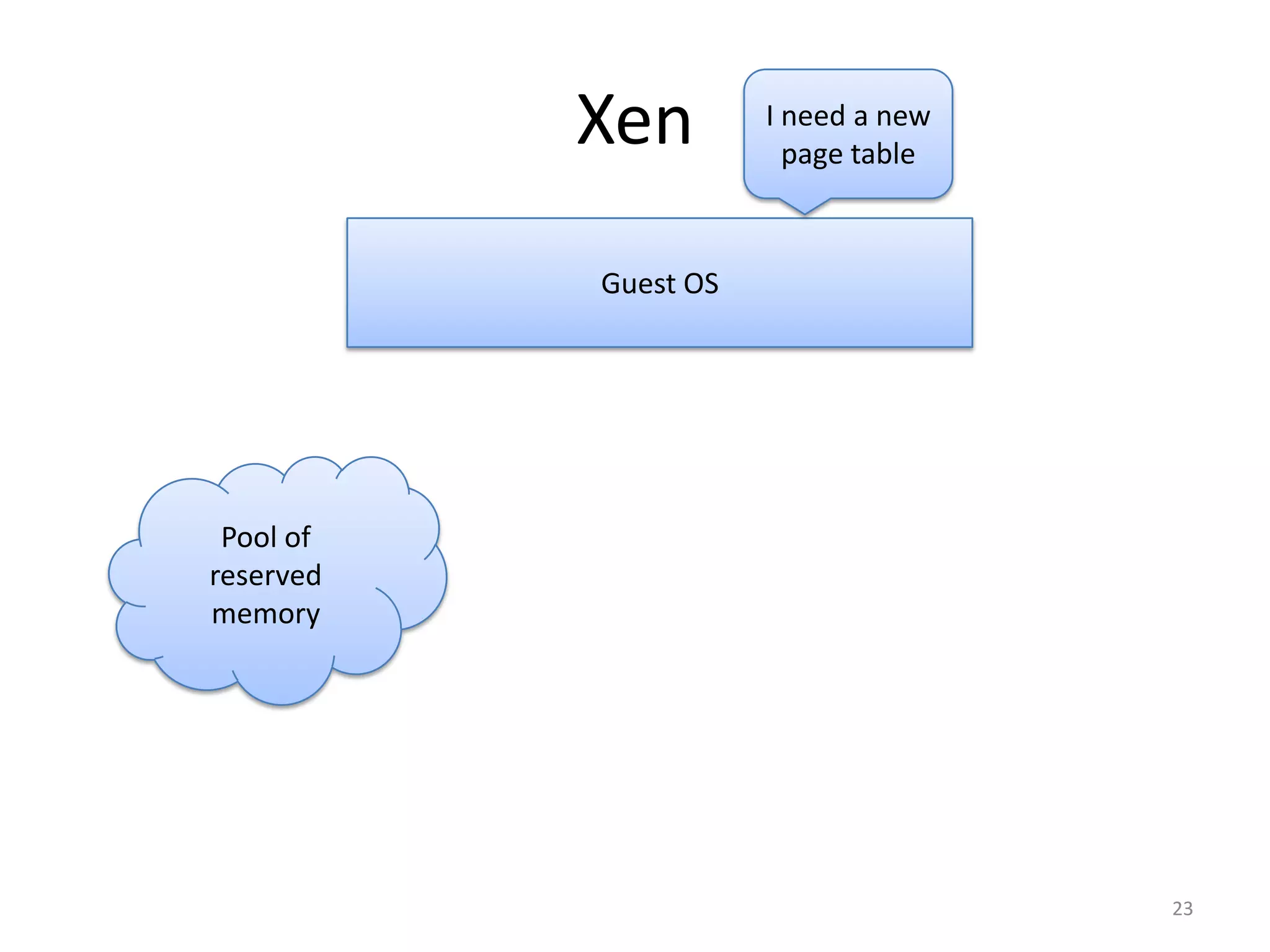

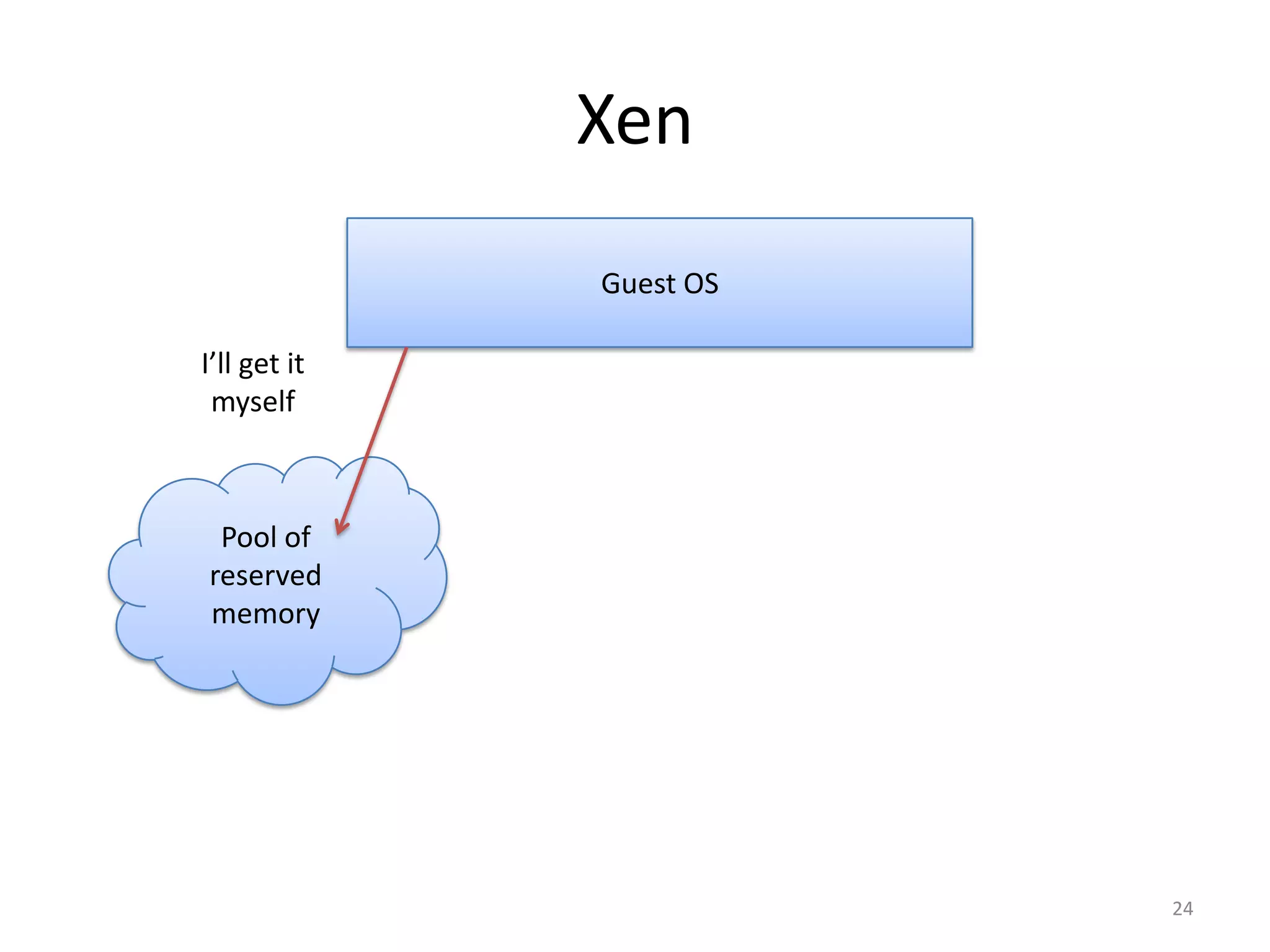

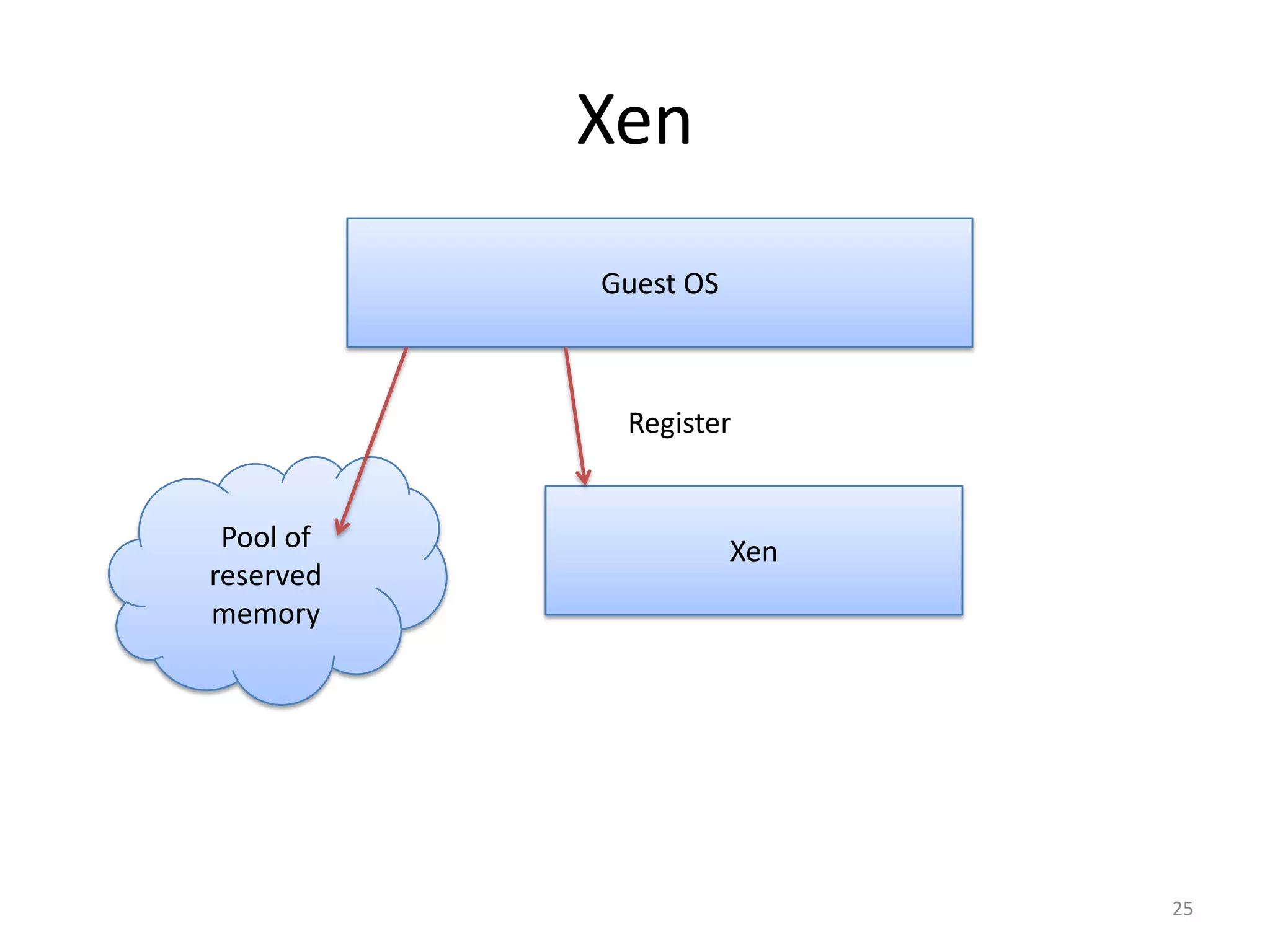

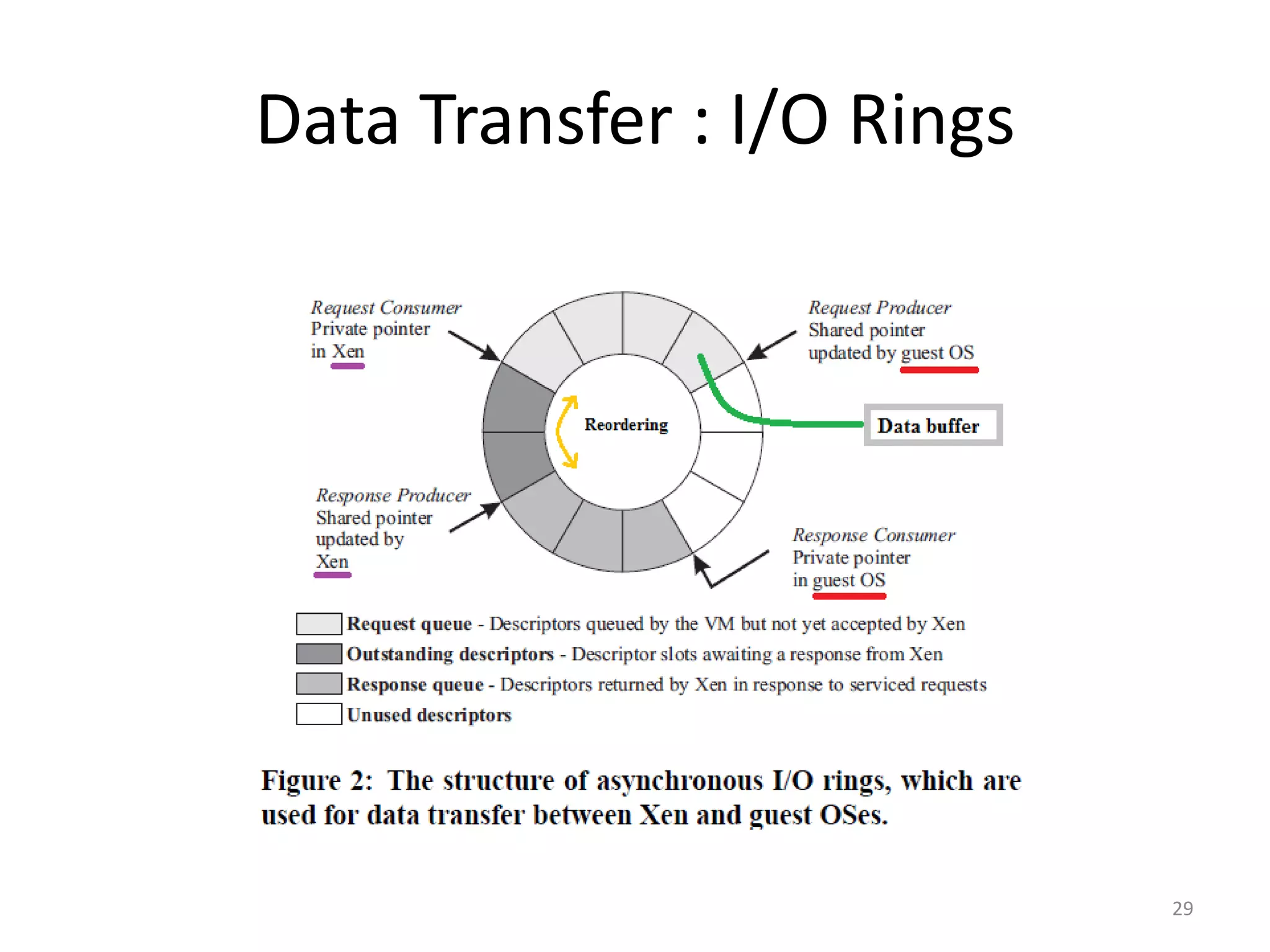

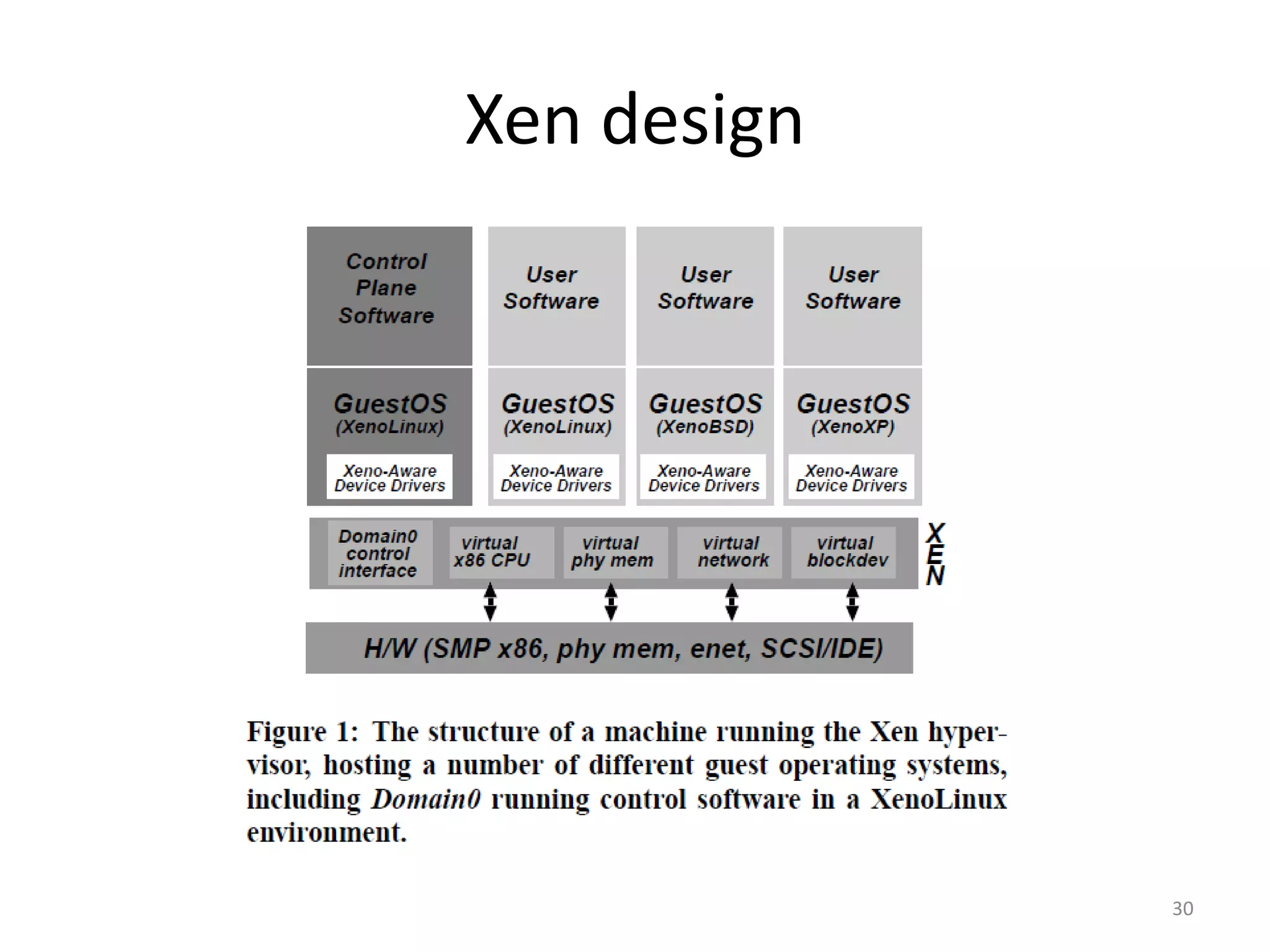

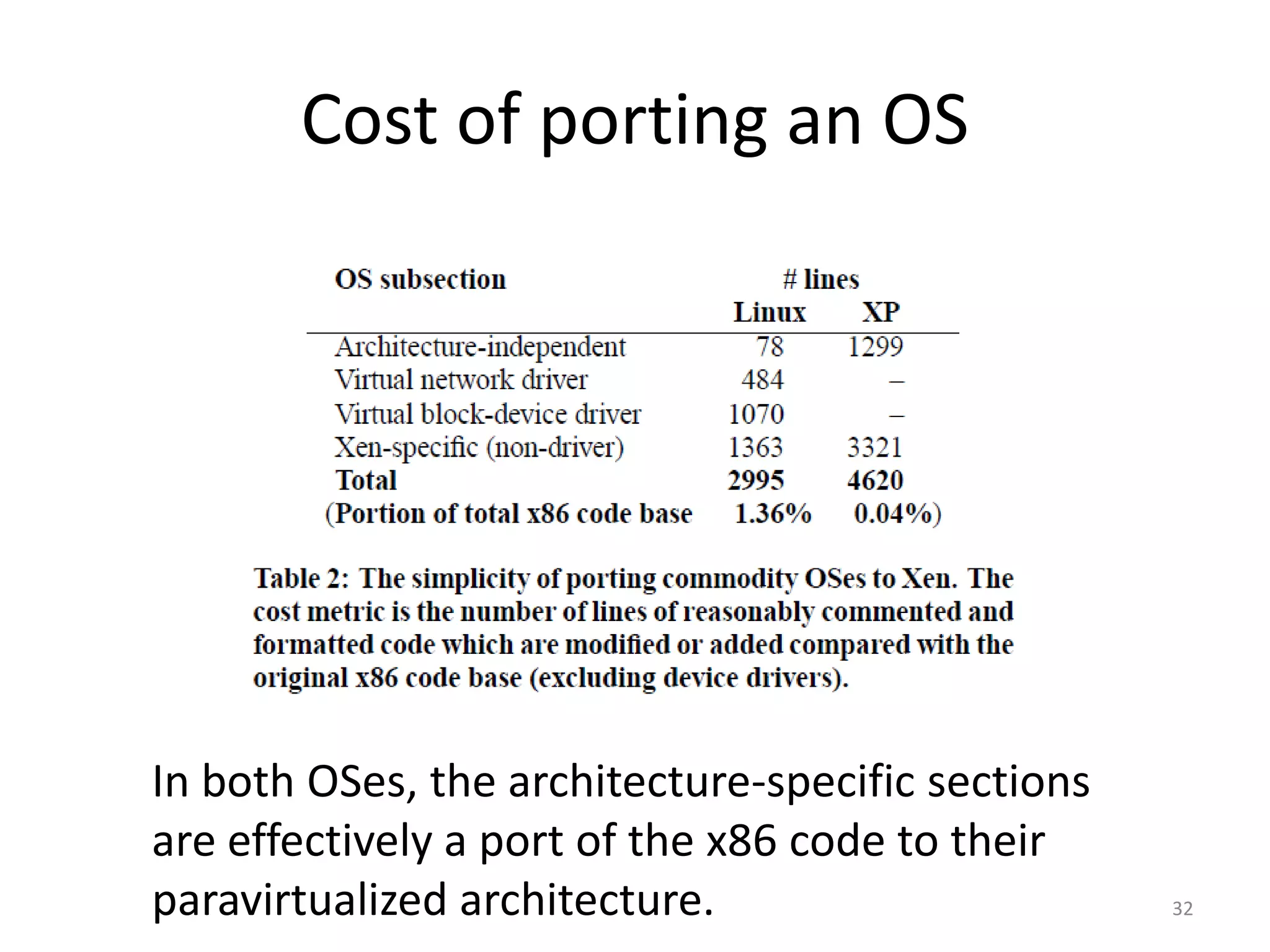

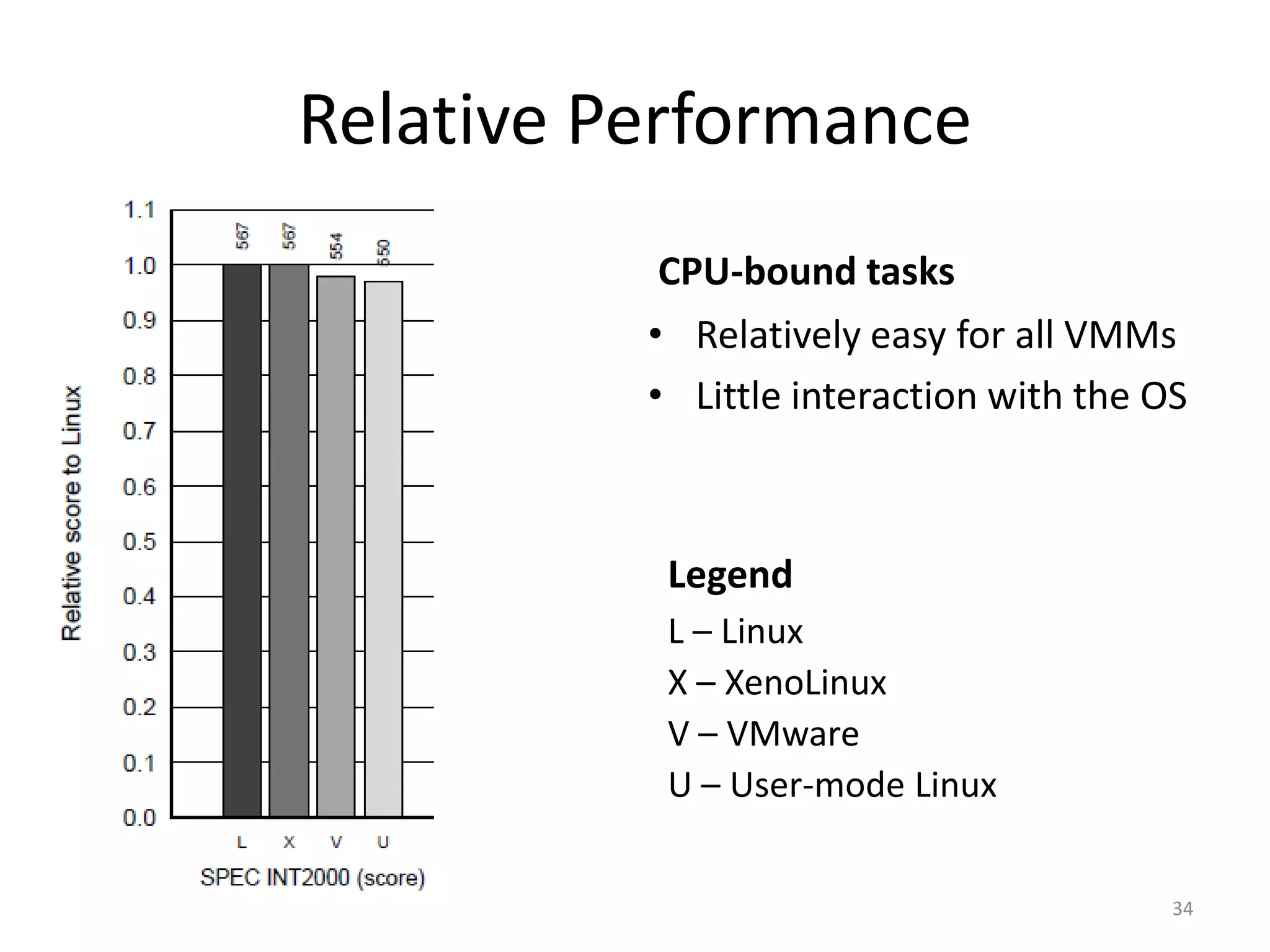

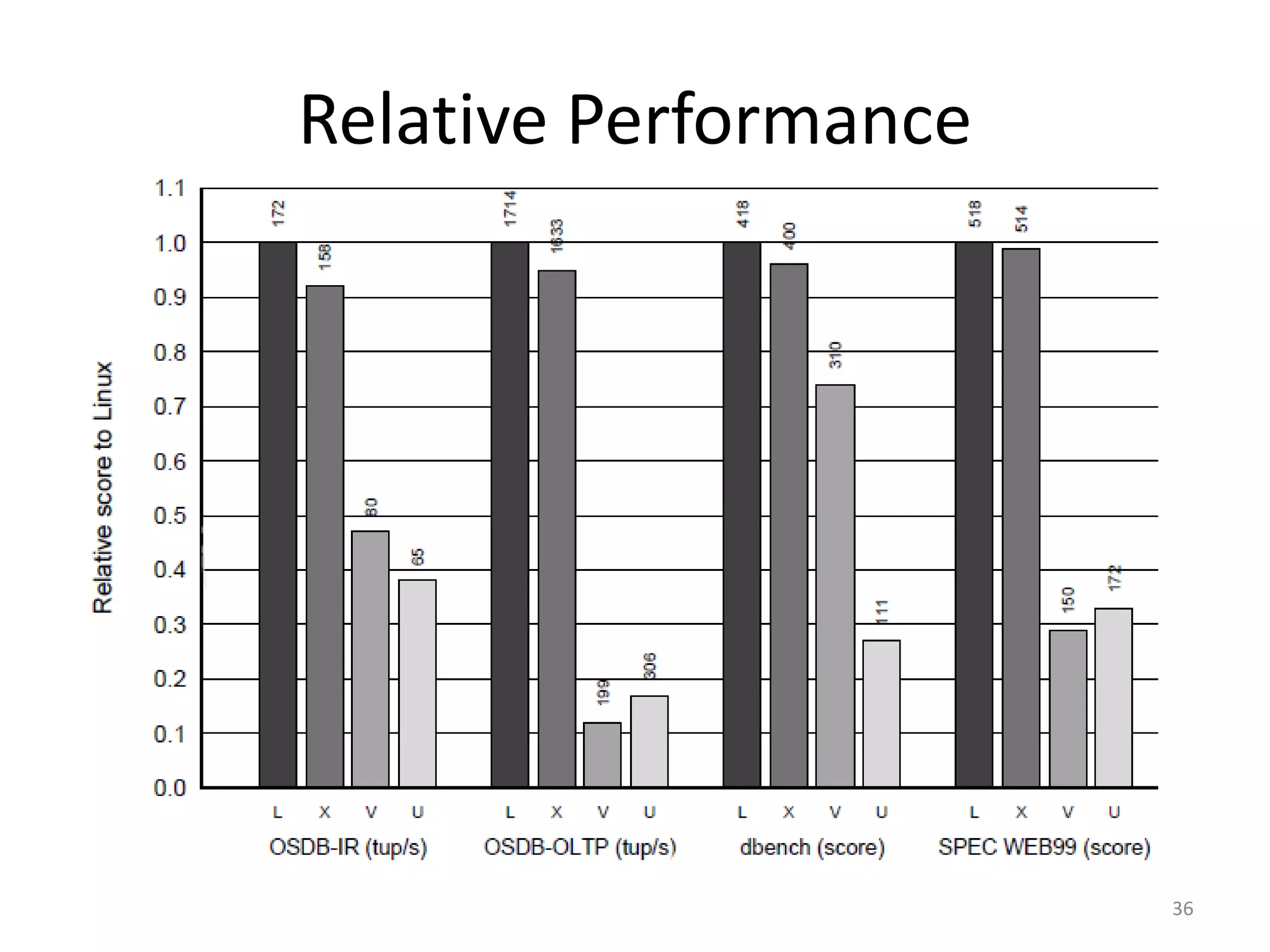

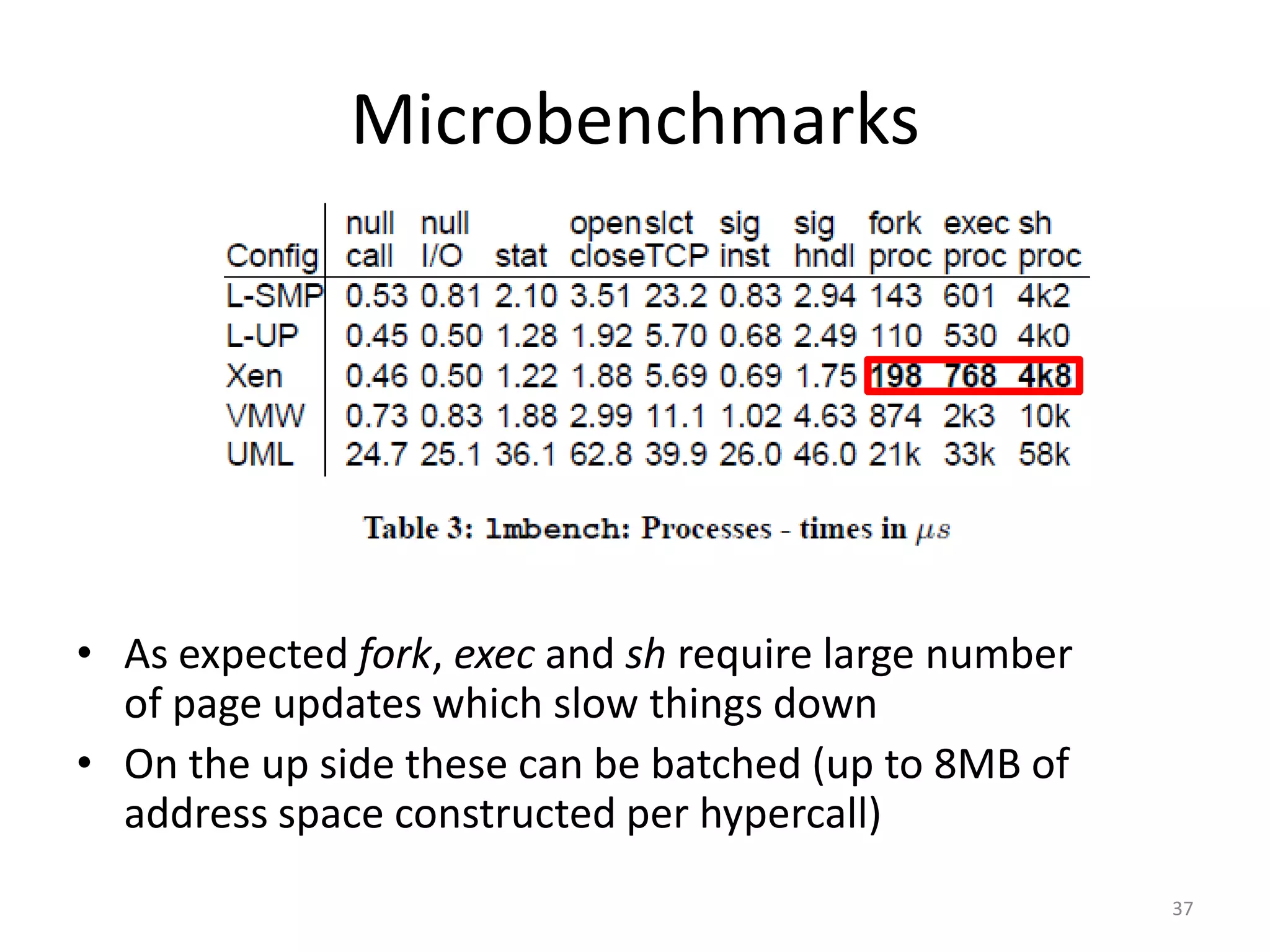

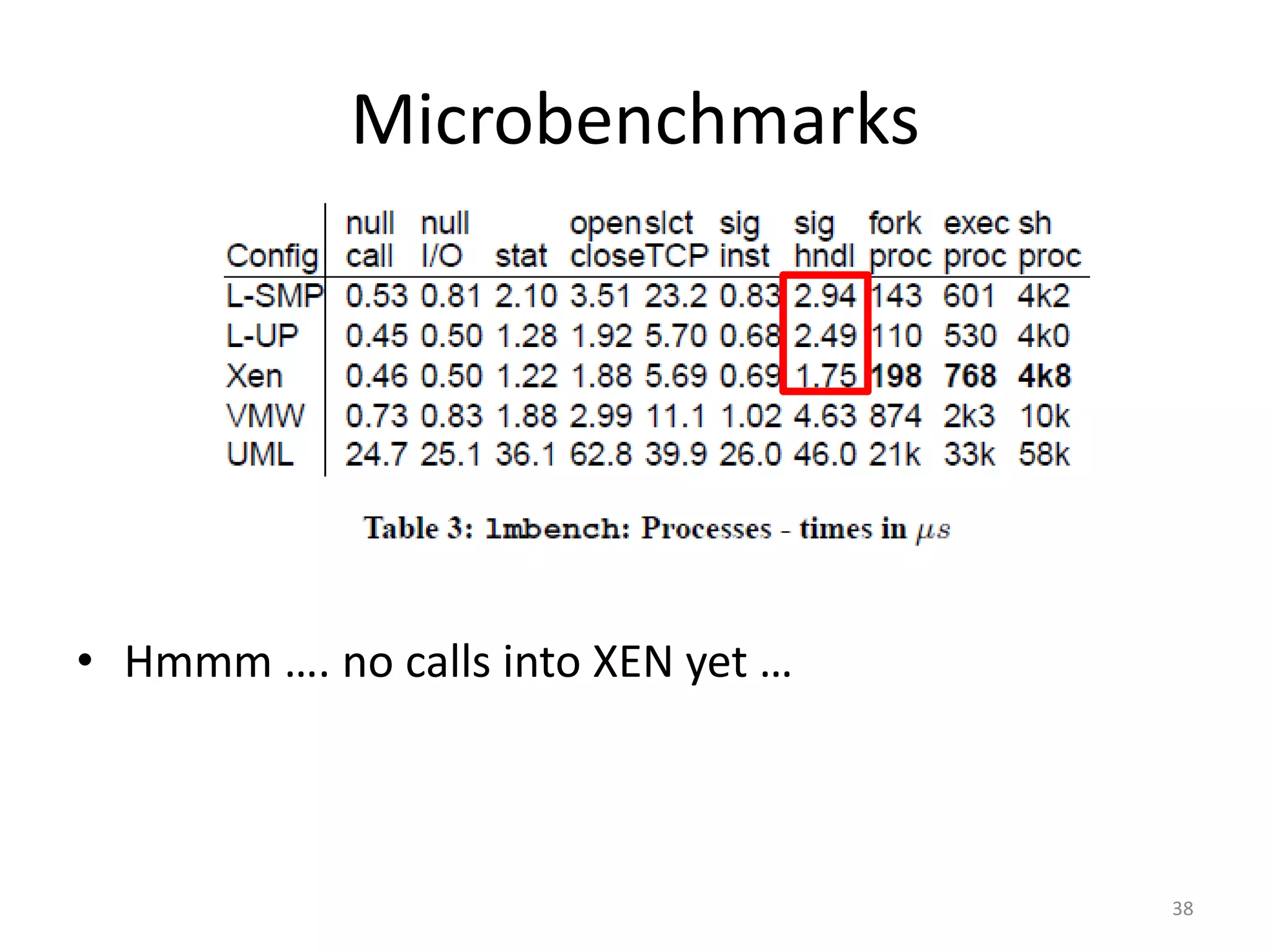

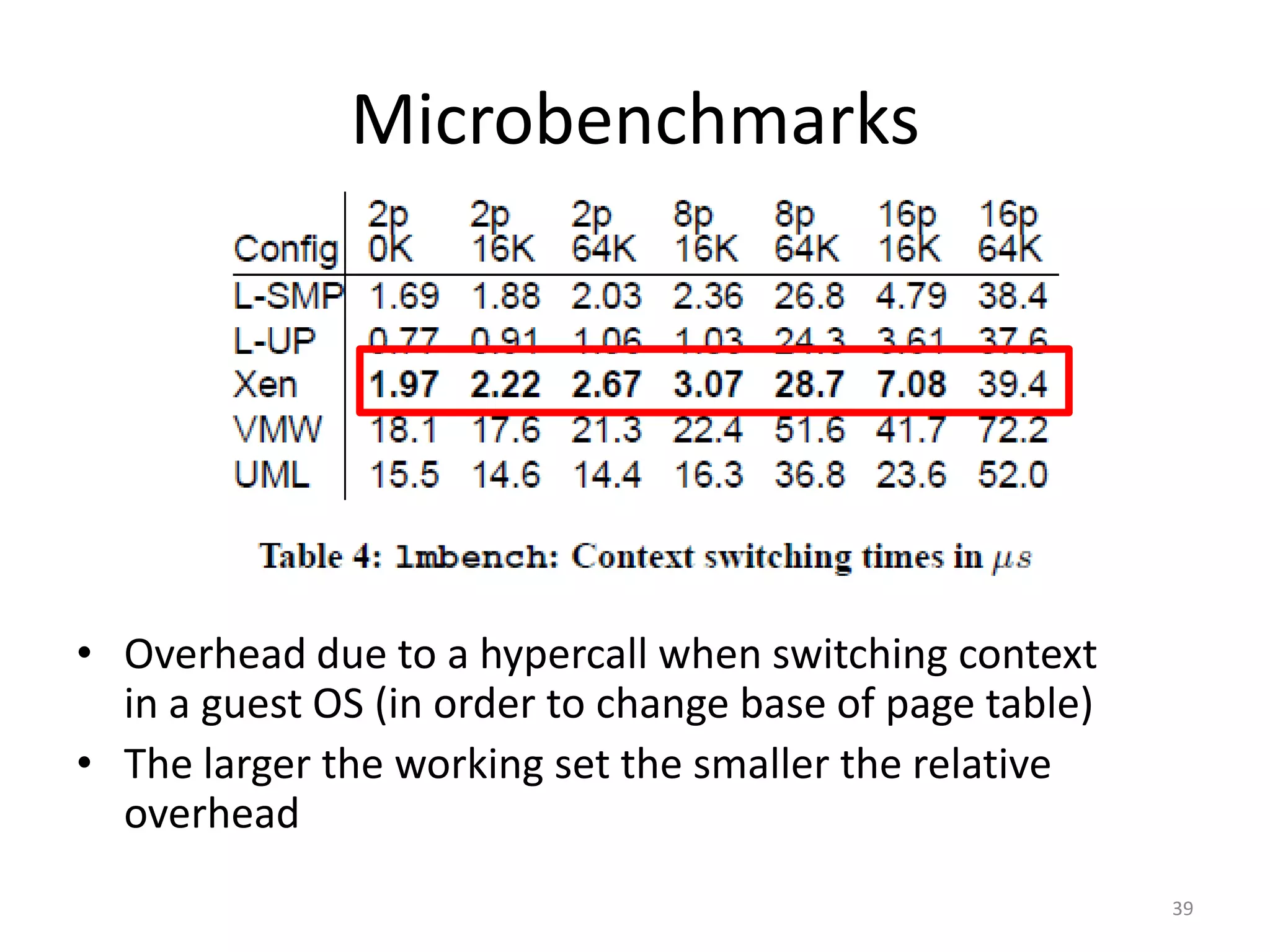

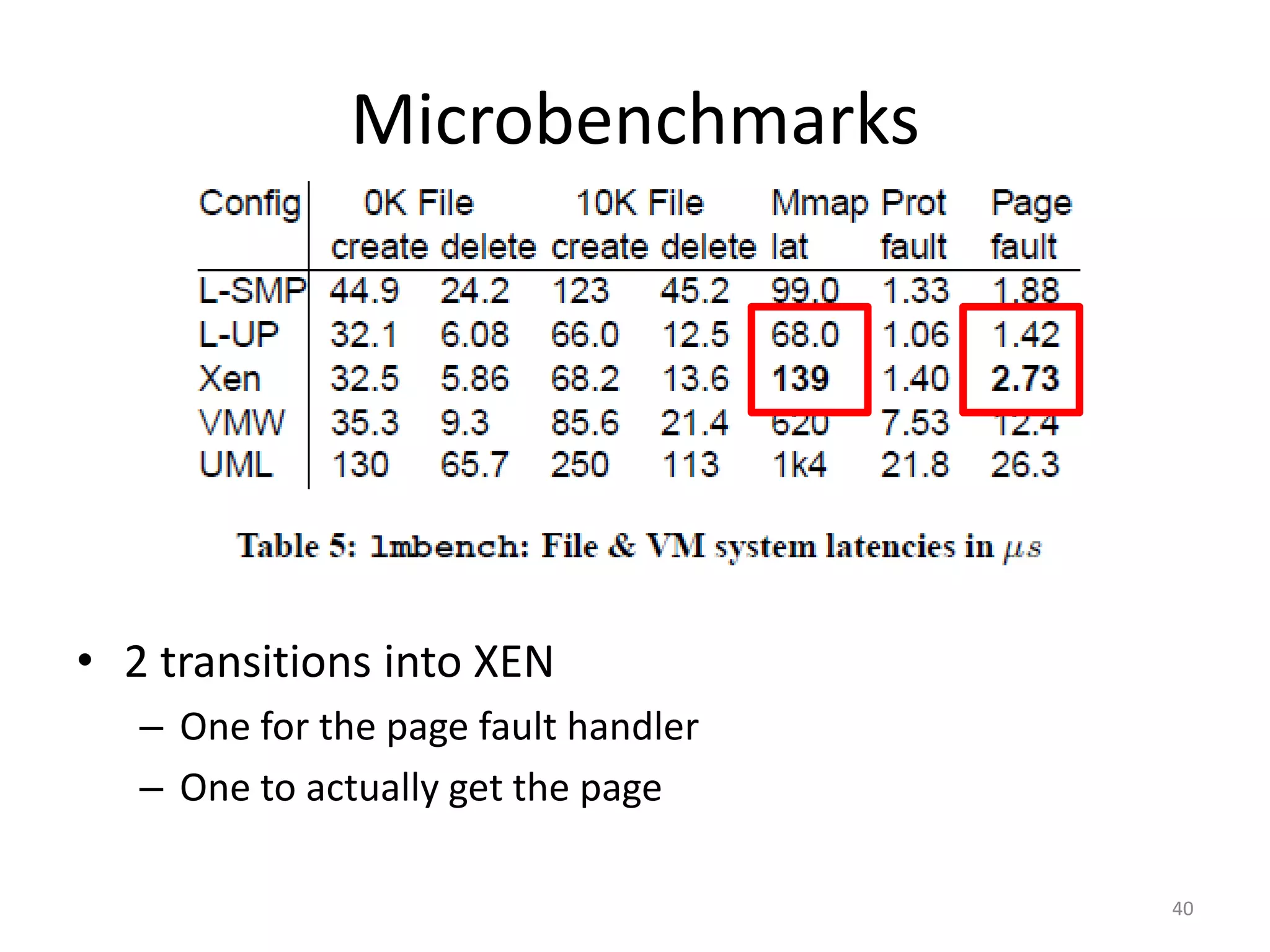

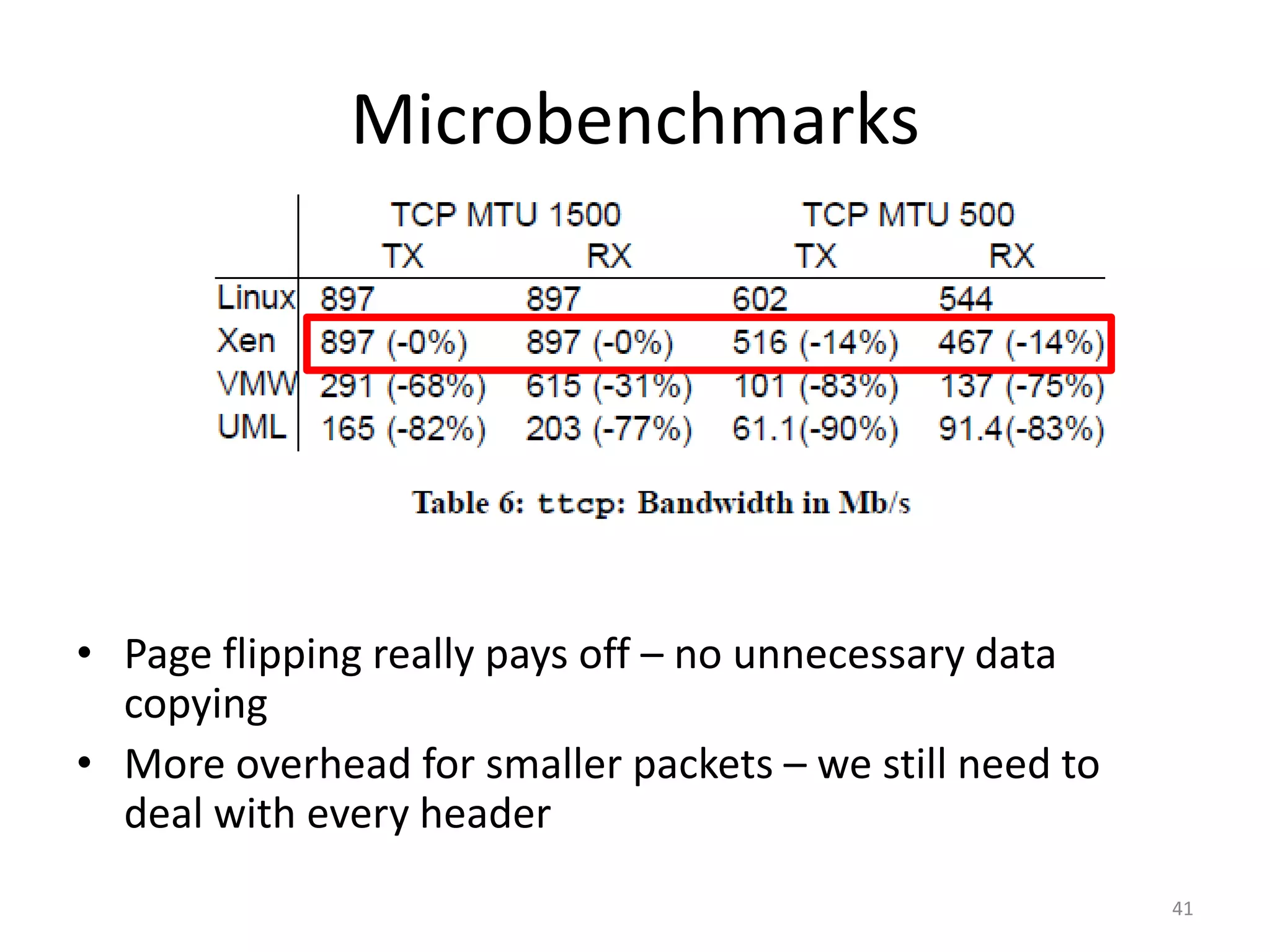

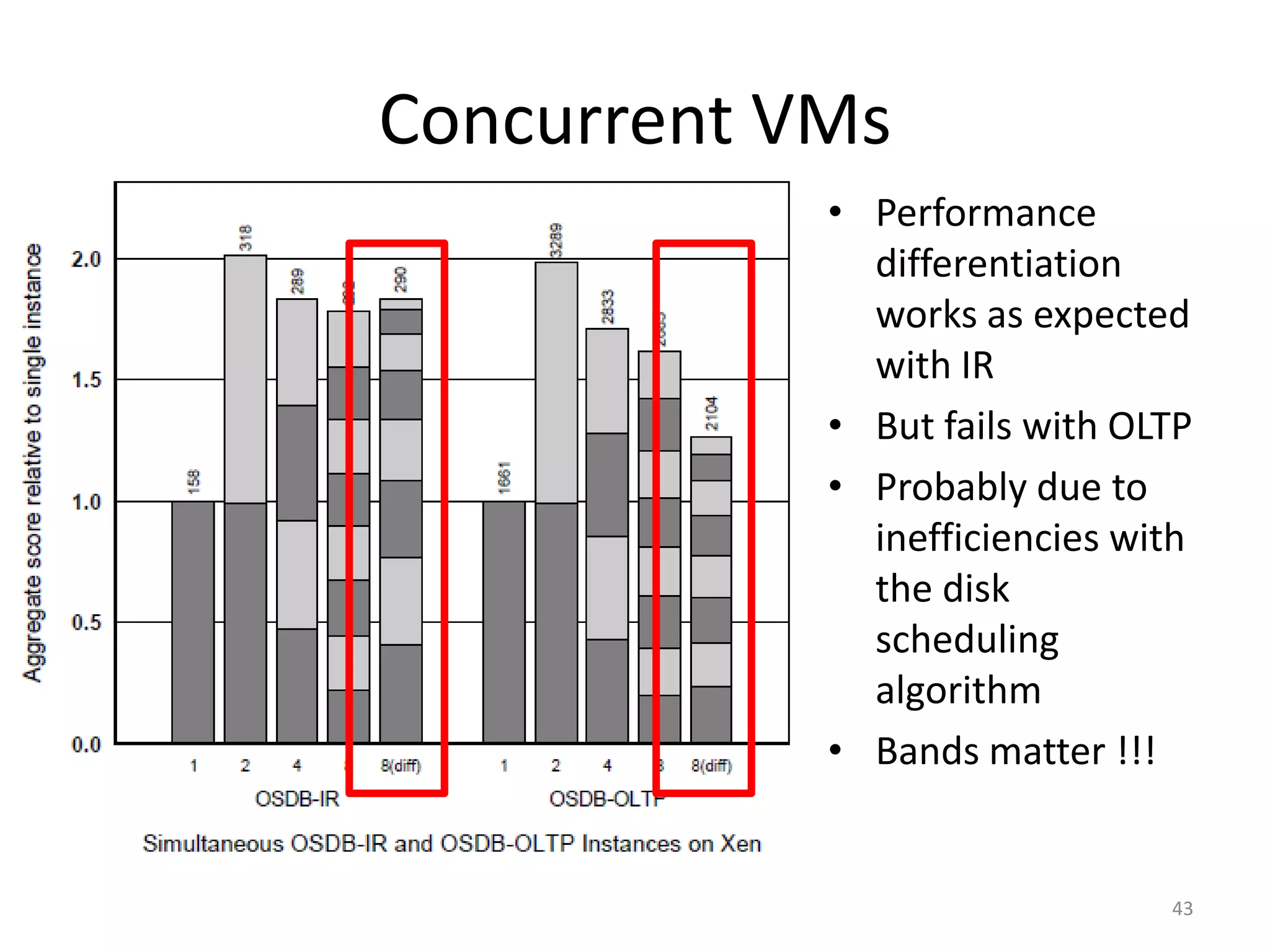

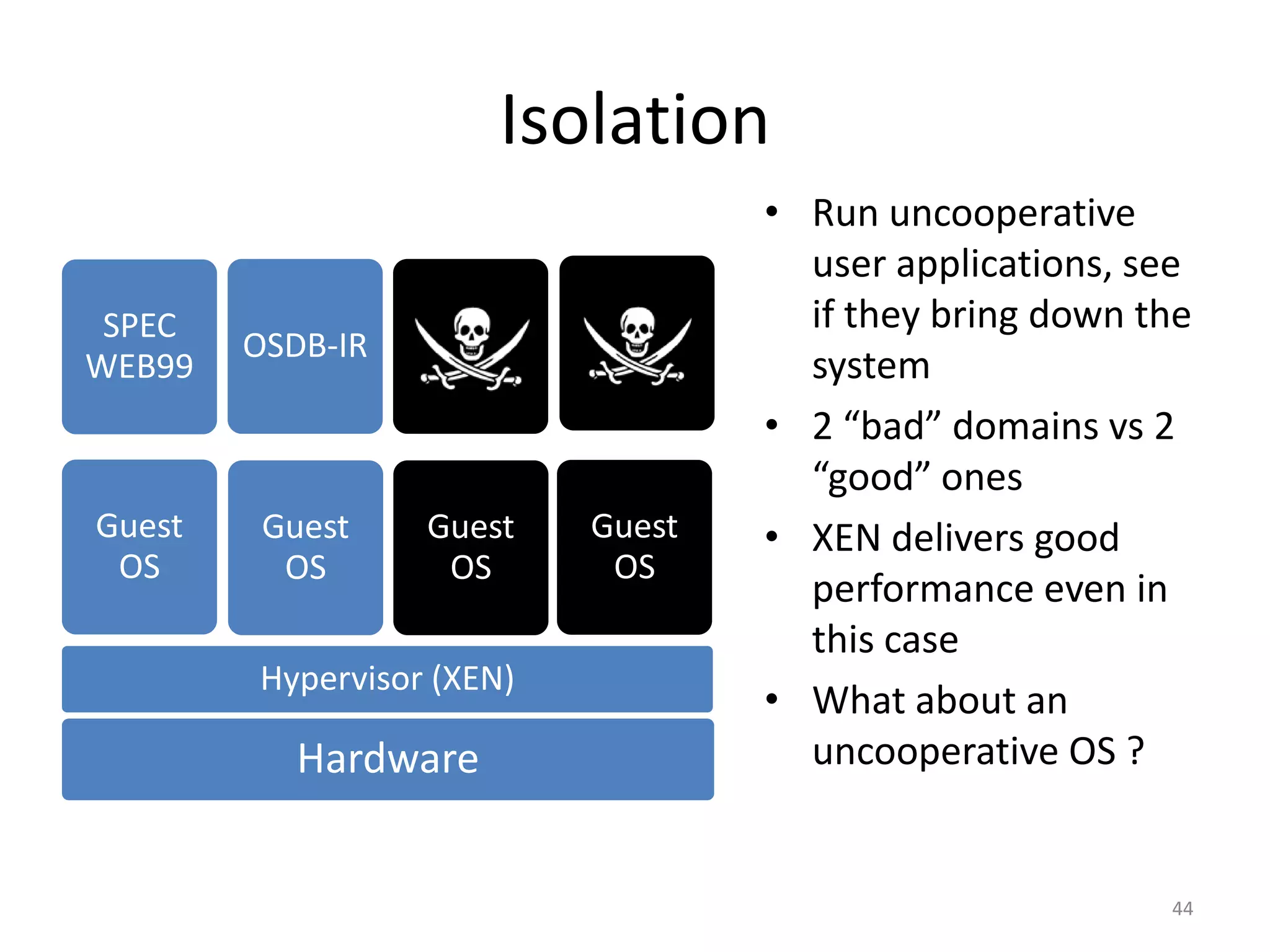

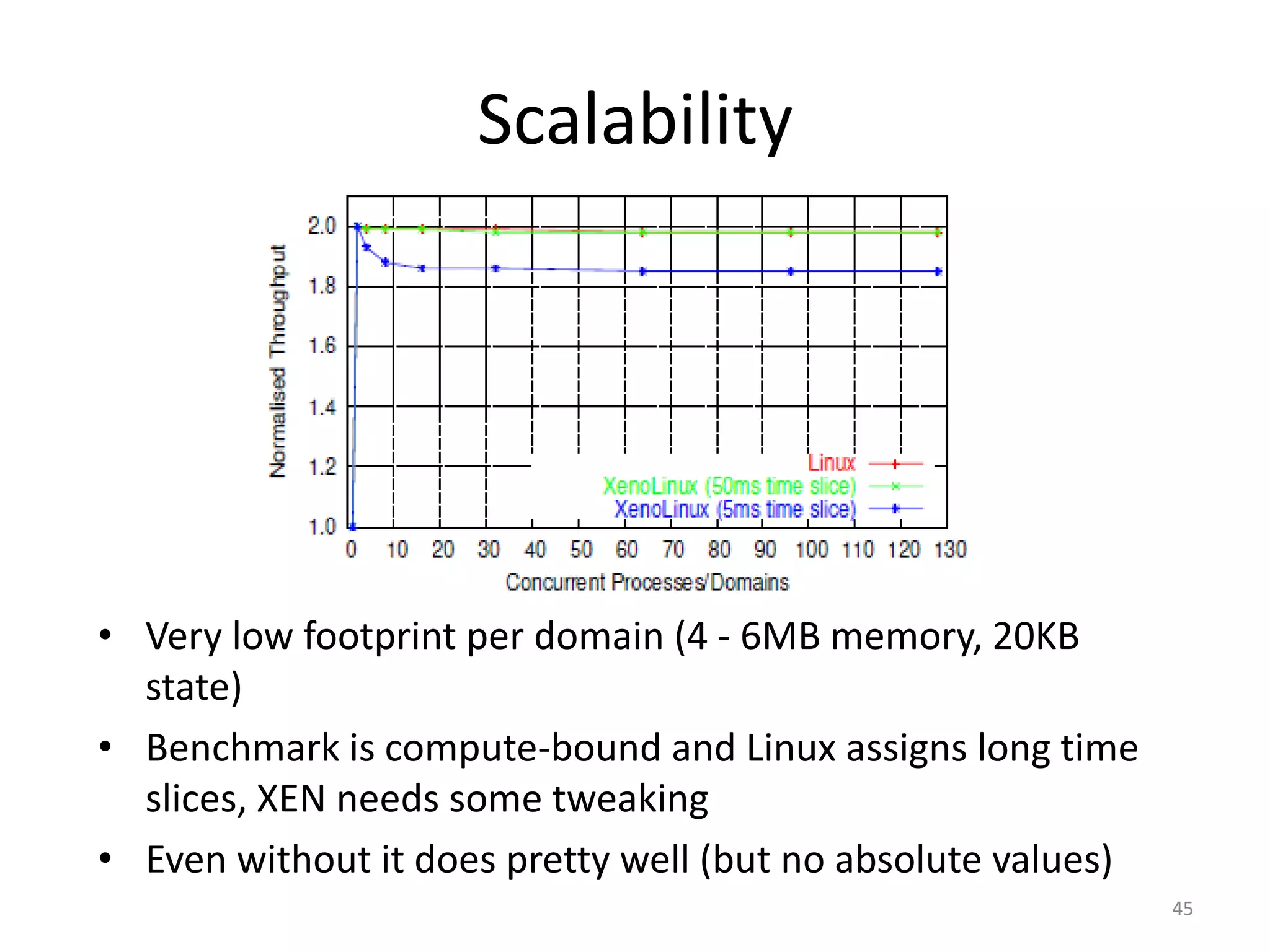

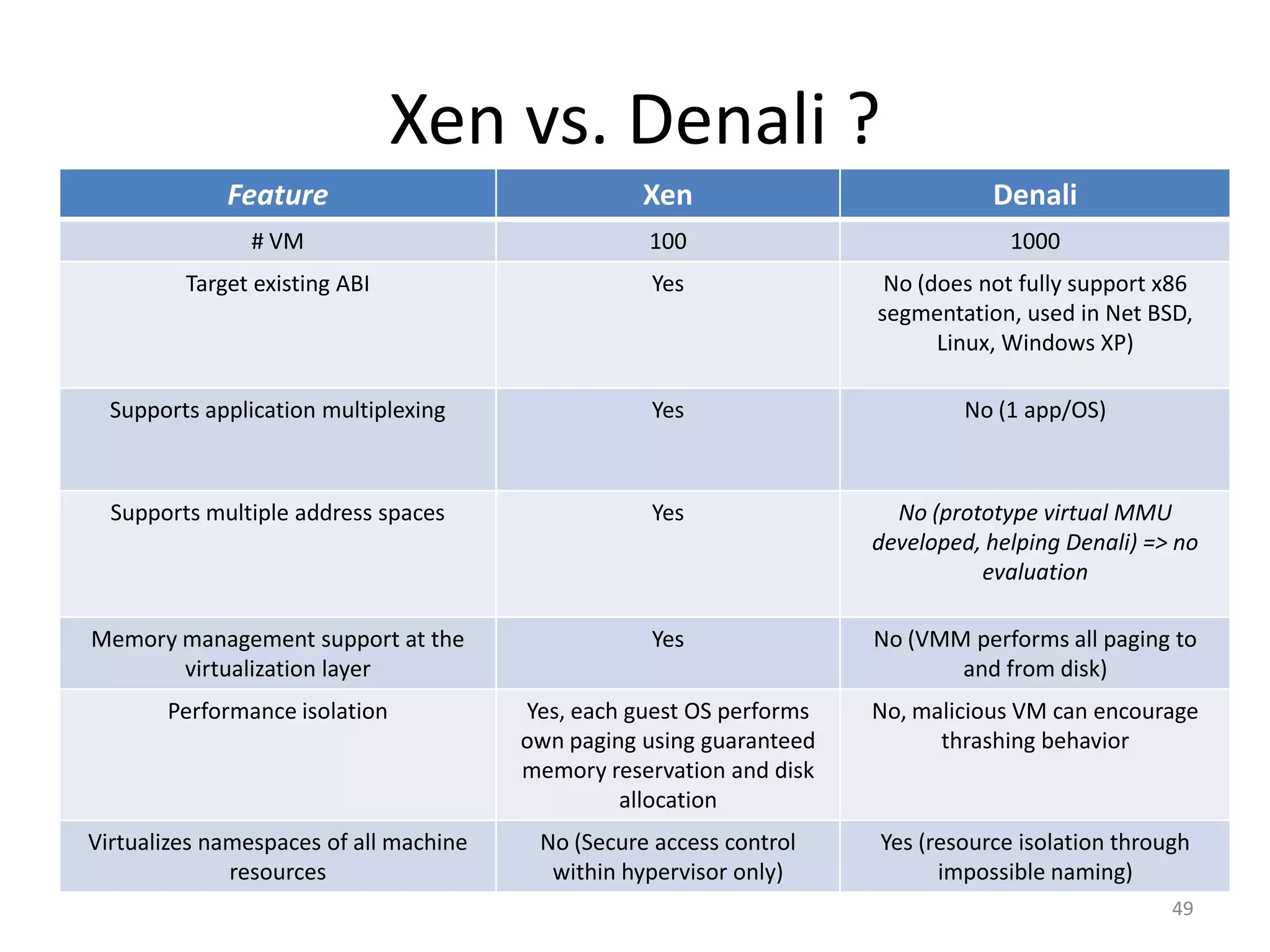

The document discusses the Xen virtualization system. It begins by outlining Xen's goals: hosting commodity operating systems on commodity x86 hardware without sacrificing performance or functionality, while supporting up to 100 virtual machine instances per server. It then describes Xen's design, in which a thin hypervisor uses paravirtualization to multiplex physical resources among guest operating systems. The performance evaluation finds that Xen imposes little overhead and isolates virtual machines well from one another. The document concludes that Xen is a promising virtualization platform that remains under active development.
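To make the paravirtualization idea more concrete, here is a minimal toy model in Python. It is not Xen's actual interface: the class `Hypervisor`, the method `hypercall_map_page`, and the guest names are all hypothetical, chosen only for illustration. The sketch assumes the general pattern that a paravirtualized guest asks the hypervisor to perform a privileged operation (here, a page-table update), and that the hypervisor checks the request against its own record of which guest owns which physical frame before applying it.

```python
class Hypervisor:
    """Toy hypervisor: owns physical page frames and validates guest requests.

    Hypothetical model for illustration only; not Xen's real API.
    """

    def __init__(self, total_frames):
        self.owner = {}                        # frame number -> guest id
        self.free = list(range(total_frames))  # frames not yet assigned

    def allocate(self, guest_id, count):
        """Assign `count` free frames to a guest and record the ownership."""
        if count > len(self.free):
            raise MemoryError("not enough free frames")
        frames = [self.free.pop(0) for _ in range(count)]
        for frame in frames:
            self.owner[frame] = guest_id
        return frames

    def hypercall_map_page(self, guest_id, page_table, virtual_page, frame):
        """Validate a guest's page-table update, then apply it on its behalf."""
        if self.owner.get(frame) != guest_id:
            return False                       # frame belongs to someone else
        page_table[virtual_page] = frame
        return True


hv = Hypervisor(total_frames=8)
frames_a = hv.allocate("guest-a", 2)
frames_b = hv.allocate("guest-b", 2)

table_a = {}
# A guest may map a frame it owns...
ok = hv.hypercall_map_page("guest-a", table_a, 0, frames_a[0])
# ...but a request naming another guest's frame is rejected.
denied = hv.hypercall_map_page("guest-a", table_a, 1, frames_b[0])
```

In this toy model the guest never writes a mapping the hypervisor has not approved, which is one simple way to picture how a thin validation layer can share memory among guests while keeping them isolated.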