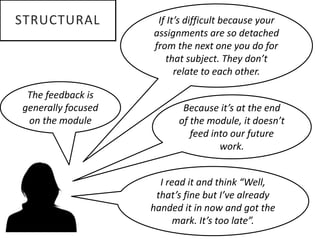

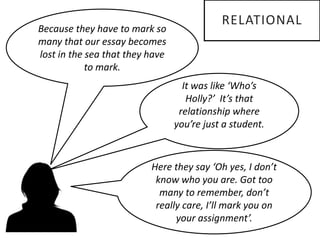

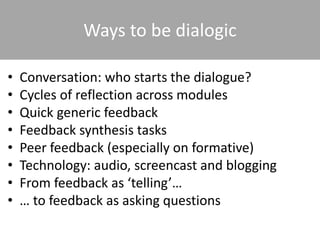

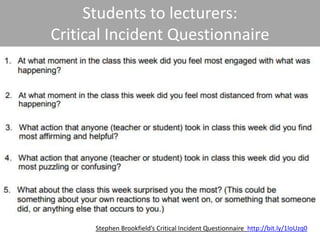

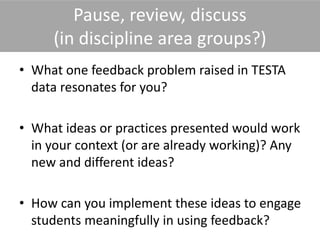

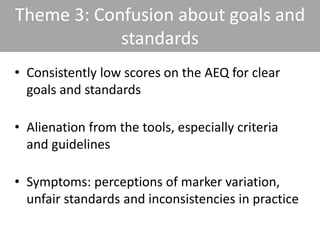

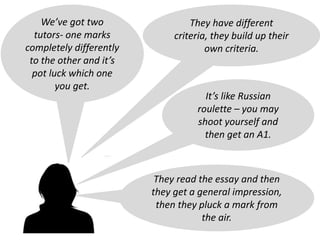

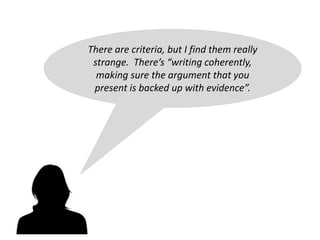

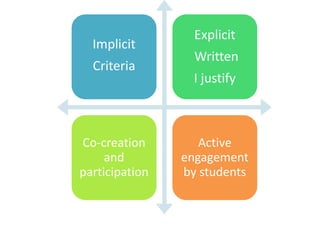

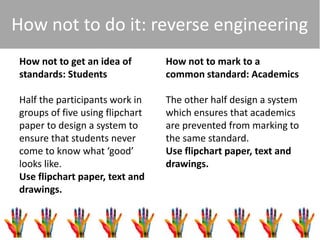

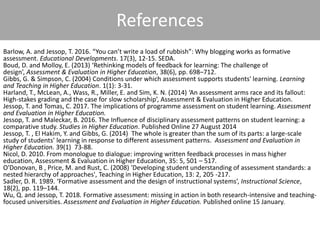

This document summarizes a presentation on improving feedback practices between students and instructors. It identifies problems with current practice: feedback arrives too late to be incorporated into future work, instructors do not know individual students, and grading standards are inconsistent between instructors. The presentation suggests ways to make feedback more dialogic, such as audio or video feedback, peer feedback, and question-based feedback. It also discusses how students can internalize goals and standards through activities such as discussing rubrics and exemplars, alongside calibration exercises between instructors. The goal is to move from a transmission model of feedback to a social constructivist model built on active engagement between students and instructors.