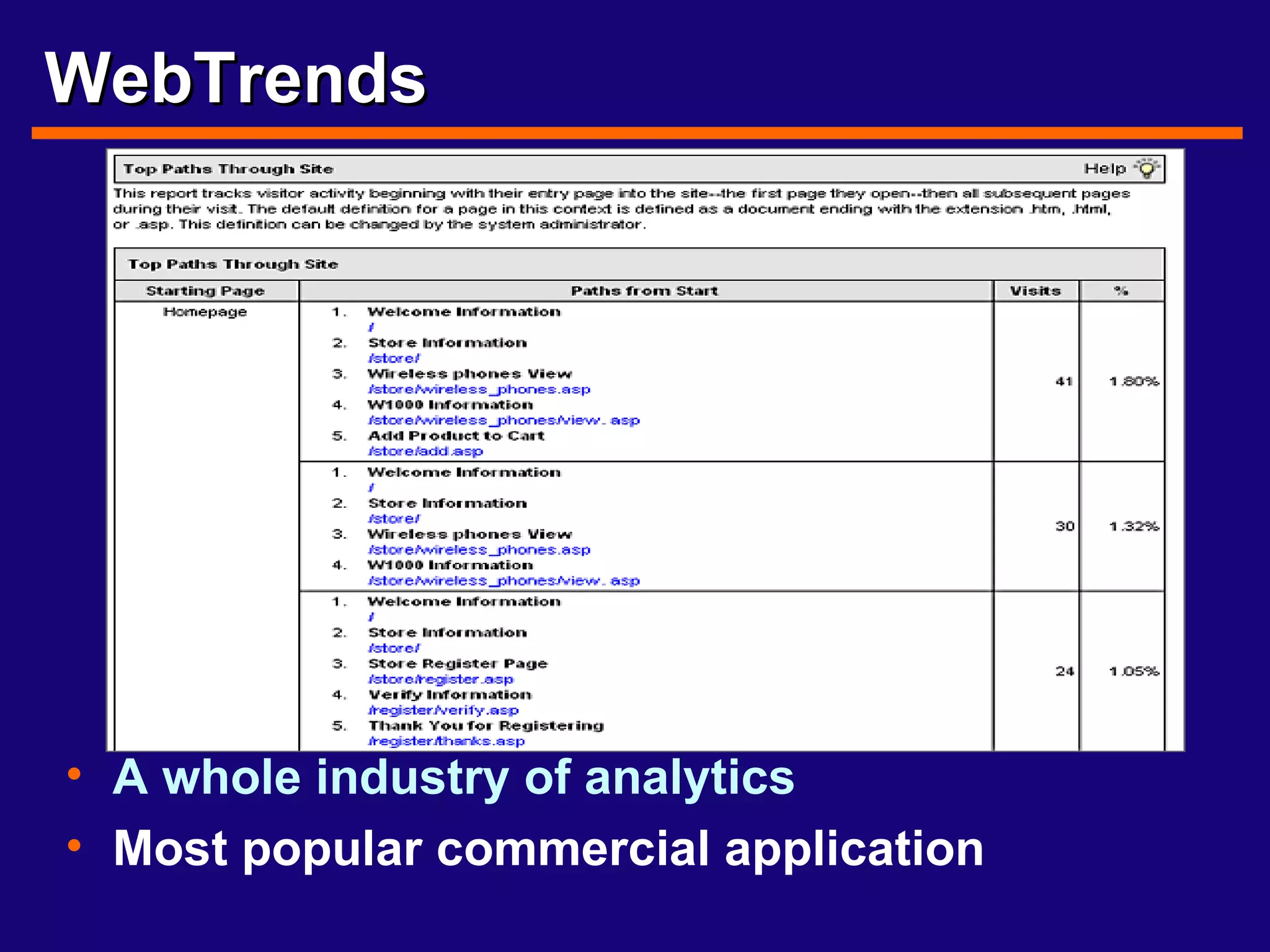

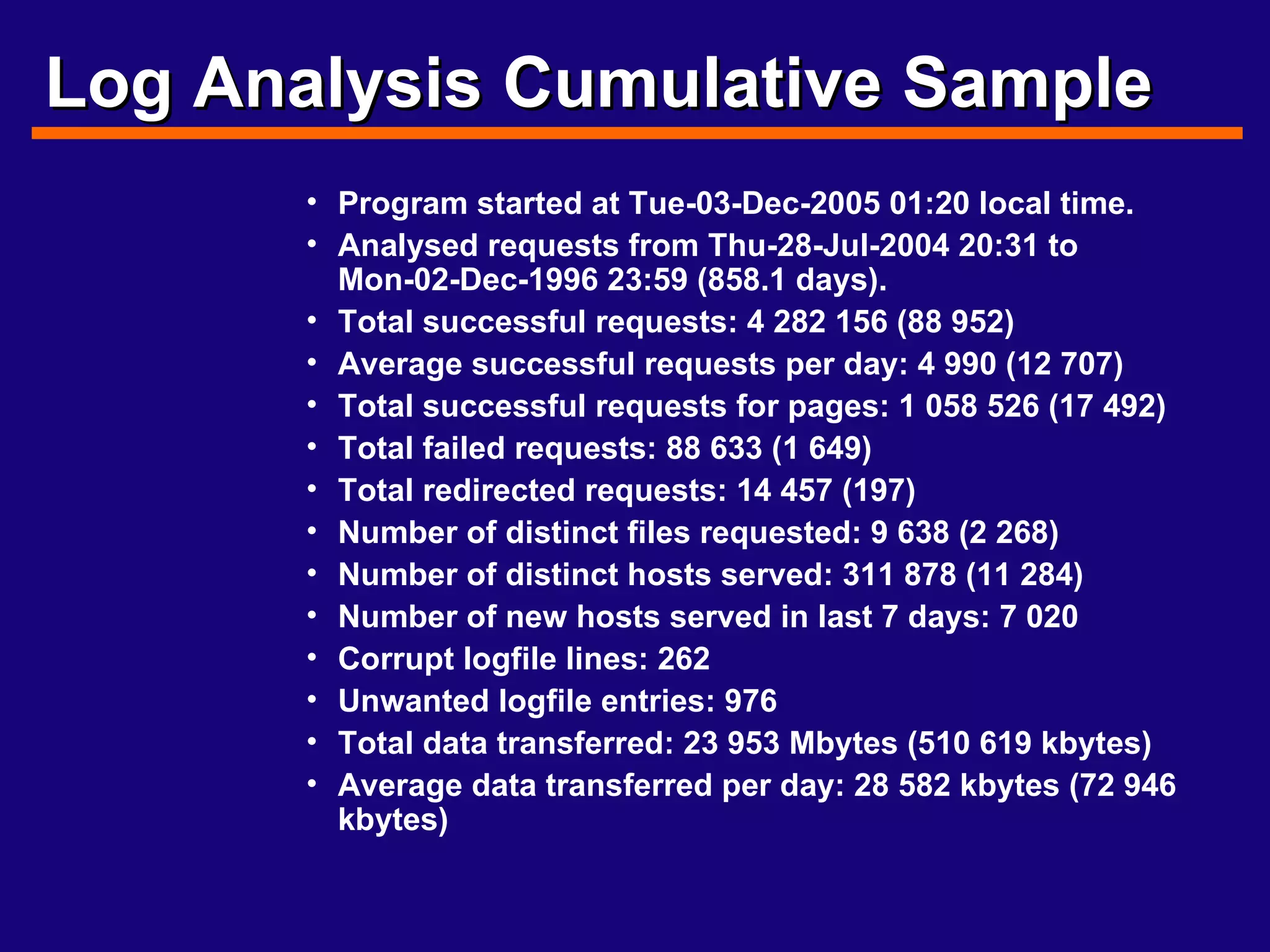

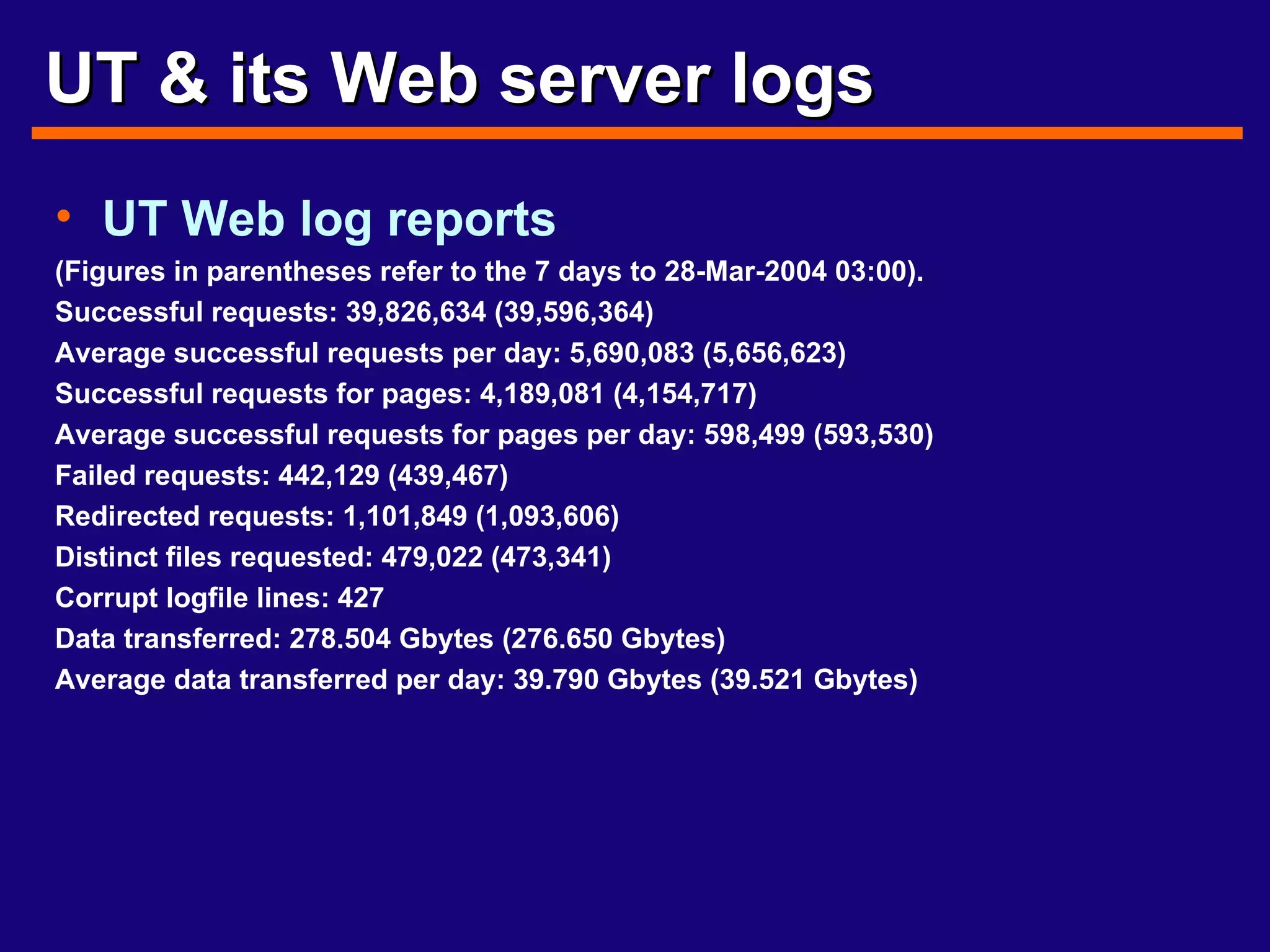

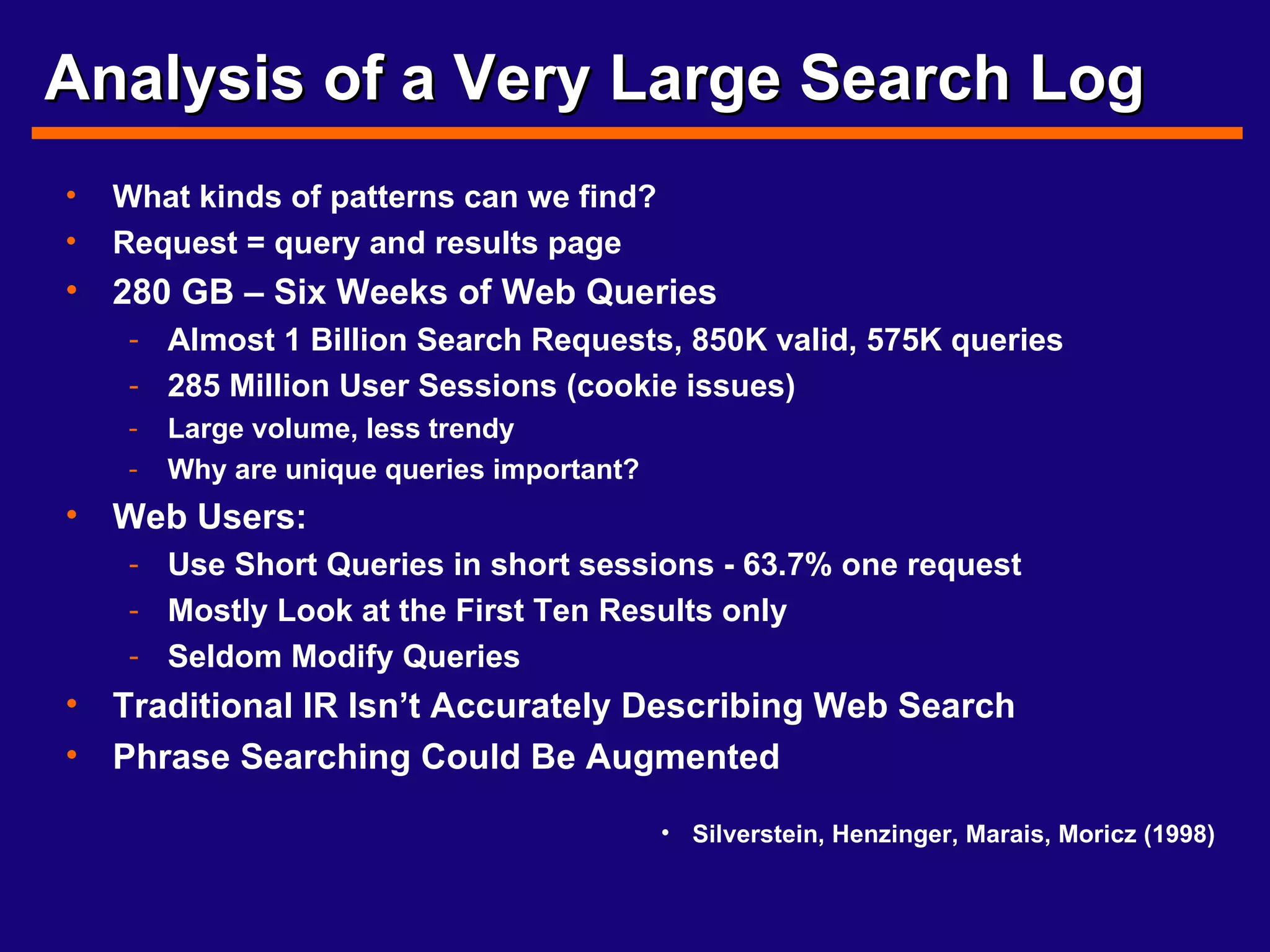

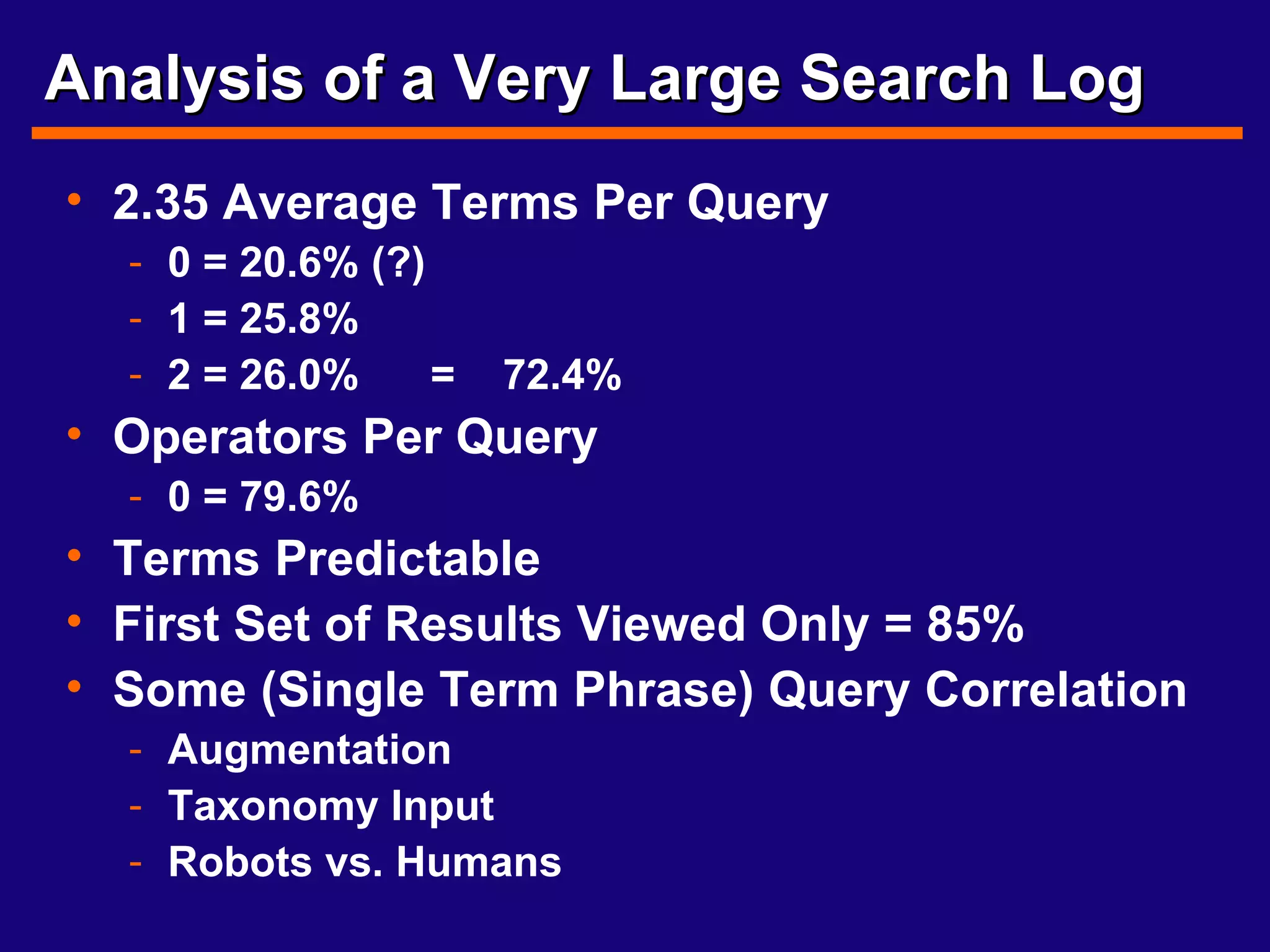

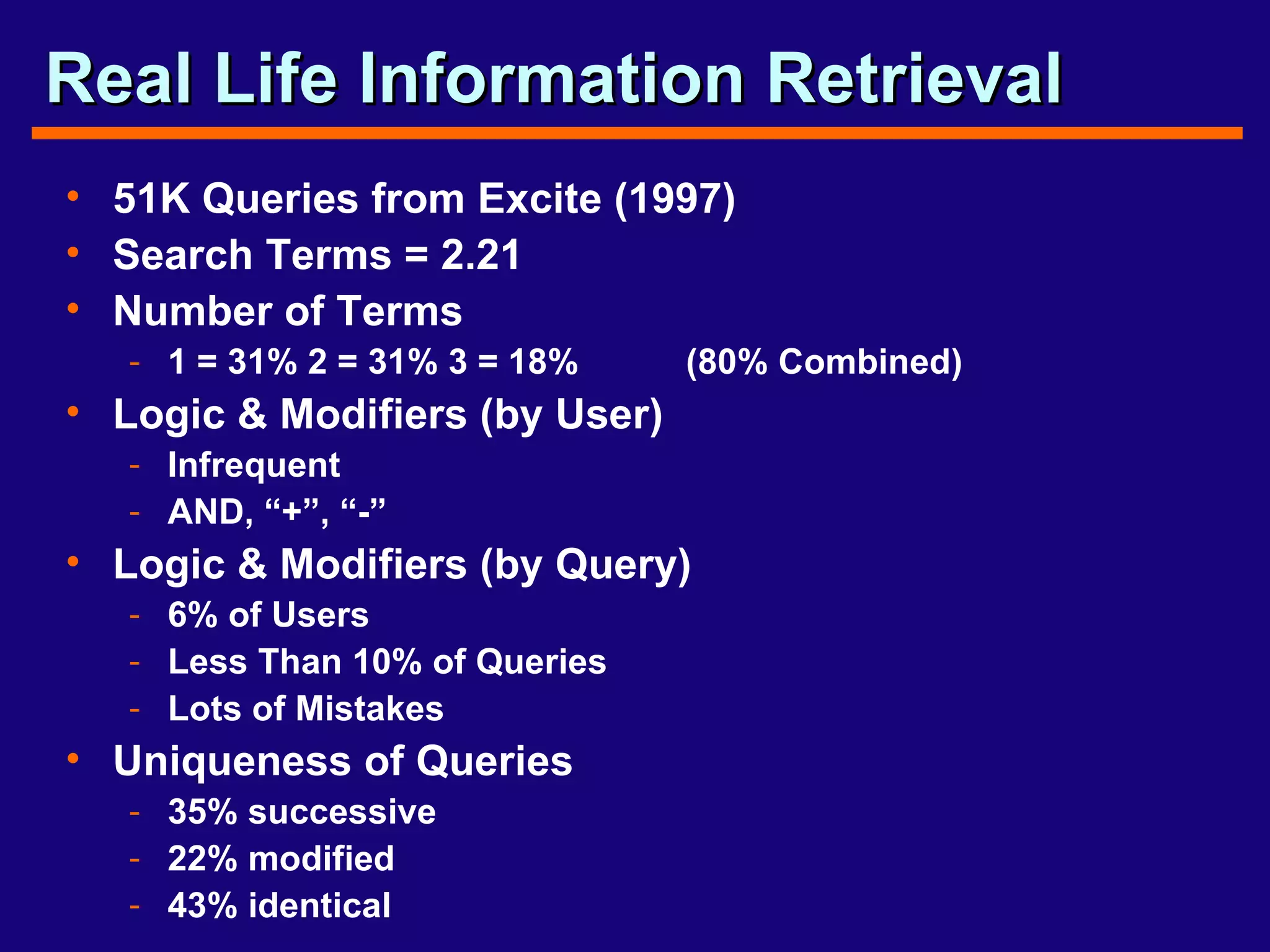

The document covers analyzing web server logs to understand how users interact with a website. It describes what a server log records (the files requested, the time of each request, the client's IP address, and the browser and operating system used), surveys common log file formats, and reviews tools for analyzing logs to improve a site's information architecture and usage.

![What is a log file?](https://image.slidesharecdn.com/web-servers3966/75/Web-Servers-8-2048.jpg)

What is a log file? A delimited text file with information about what the server is doing:

- IP address or domain name
- Date/time
- Method used and page requested
- Protocol, response code, and bytes returned
- Referring page (sometimes)
- User agent and operating system

Example entry: `p0016c74ea.us.kpmg.com - - [01/Sep/2004:08:17:21 -0500] "GET /images/sanchez.jpg HTTP/1.1" 200 - "http://www.ischool.utexas.edu/research/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows XP)"`
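
To make the field layout concrete, here is a minimal Python sketch that splits the slide's sample entry into the fields it lists. It assumes the entry follows the widely used Apache "combined" layout (host, identity, user, timestamp, request line, status, bytes, referrer, user agent); the slide does not name the format. The names `LOG_PATTERN` and `parse_line` are illustrative and do not come from the presentation.

```python
import re
from datetime import datetime

# Assumed layout: host ident user [time] "request" status bytes "referrer" "user agent".
# The referrer and user-agent fields are optional, matching the slide's "(sometimes)".
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
    r'(?: "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)")?'
)


def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    # Timestamps look like 01/Sep/2004:08:17:21 -0500 (English month abbreviations).
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    entry["status"] = int(entry["status"])
    # A "-" in the bytes field means no size was recorded.
    entry["size"] = None if entry["size"] == "-" else int(entry["size"])
    return entry


# The sample entry from the slide.
SAMPLE = (
    'p0016c74ea.us.kpmg.com - - [01/Sep/2004:08:17:21 -0500] '
    '"GET /images/sanchez.jpg HTTP/1.1" 200 - '
    '"http://www.ischool.utexas.edu/research/" '
    '"Mozilla/4.0 (compatible; MSIE 6.0; Windows XP)"'
)

entry = parse_line(SAMPLE)
print(entry["host"], entry["method"], entry["path"], entry["status"])
```

Returning `None` for lines that do not match lets a caller count or skip malformed entries instead of stopping partway through a large log.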