This document provides an overview of a major seminar on knowledge discovery from web logs. It discusses how analyzing vast amounts of web site traversal data stored in web logs can reveal useful knowledge about user behavior that can be applied to improve web service performance. Specific techniques covered include mining web logs to build path profiles that predict future page visits, using these predictions to prefetch web documents for faster loading, and clustering web pages to create more intuitive user interfaces. The document lists several applications of web log mining and its advantages.

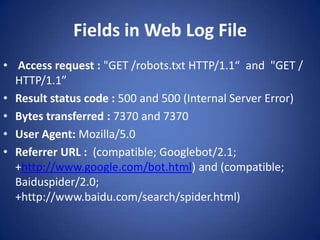

![Fields in Web Log File

• Reference Website www.hdwally.com Web Server: Apache

1. 66.249.71.6 - - [23/Feb/2012:06:23:46 -0600] "GET

/robots.txt HTTP/1.1" 500 7370 "-" "Mozilla/5.0

(compatible; Googlebot/2.1;

+http://www.google.com/bot.html)“

2. 180.76.5.92 - - [23/Feb/2012:06:11:04 -0600] "GET /

HTTP/1.1" 500 7370 "-" "Mozilla/5.0 (compatible;

Baiduspider/2.0;

+http://www.baidu.com/search/spider.html)“

• IP Adress:-66.249.71.6 and 180.76.5.92

• UserName:- -- and --

• Timestamp :- [23/Feb/2012:06:23:46 -0600] and -

[23/Feb/2012:06:11:04 -0600] (time of visit by webserver)](https://image.slidesharecdn.com/avtarsppt-130219092435-phpapp01/85/Avtar-s-ppt-6-320.jpg)

![Example Of a Web Log File

• fcrawler.looksmart.com - - [26/Apr/2000:00:00:12 -0400]

"GET /contacts.html HTTP/1.0" 200 4595 "-" "FAST-

WebCrawler/2.1-pre2 (ashen@looksmart.net)"

fcrawler.looksmart.com - - [26/Apr/2000:00:17:19 -0400]

"GET /news/news.html HTTP/1.0" 200 16716 "-" "FAST-

WebCrawler/2.1-pre2 (ashen@looksmart.net)“

• 123.123.123.123 - - [26/Apr/2000:00:23:48 -0400] "GET

/pics/wpaper.gif HTTP/1.0" 200 6248

"http://www.jafsoft.com/asctortf/" "Mozilla/4.05

(Macintosh; I; PPC )"](https://image.slidesharecdn.com/avtarsppt-130219092435-phpapp01/85/Avtar-s-ppt-8-320.jpg)