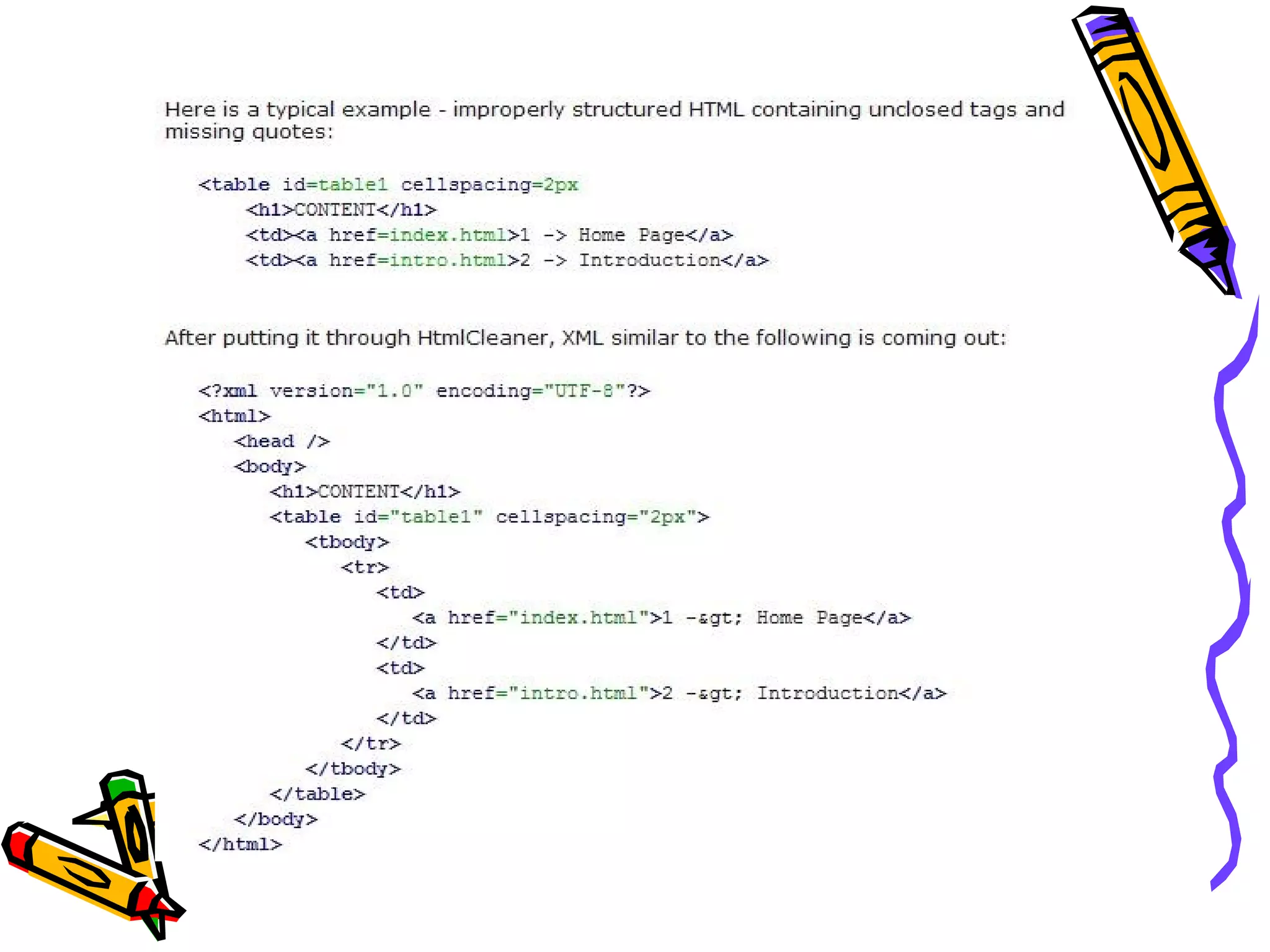

This document gives an overview of how a web crawler works. It covers parsing HTML, commonly used parsers, how parsers are structured, and examples of data extraction and transformation. It concludes with uses of web crawlers and a question-and-answer session.
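The sketch below roughly illustrates the crawl, parse, and extract cycle summarized above. It is a minimal sketch that assumes Python's standard-library `html.parser` is the parser. The names `LinkExtractor`, `extract_links`, `crawl`, and the `fetch` hook are hypothetical and are not the document's own examples. The in-memory `pages` dictionary stands in for real HTTP requests.

```python
from __future__ import annotations

from collections import deque
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Hypothetical helper: collects absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Extraction: only anchor tags with a non-empty href are of interest.
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Transformation: resolve relative links and drop #fragments
                # so that "/b" and "/b#top" count as the same page.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                self.links.append(absolute)


def extract_links(html: str, base_url: str) -> list[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def crawl(start_url: str,
          fetch: Callable[[str], Optional[str]],
          max_pages: int = 10) -> list[str]:
    """Breadth-first crawl. `fetch` (hypothetical hook) returns HTML or None."""
    frontier = deque([start_url])
    seen = {start_url}
    visited: list[str] = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:  # unreachable or non-HTML page: skip it
            continue
        visited.append(url)
        for link in extract_links(html, url):
            if link not in seen:  # enqueue each URL at most once
                seen.add(link)
                frontier.append(link)
    return visited


if __name__ == "__main__":
    # Assumed in-memory "web" used instead of real network fetches.
    pages = {
        "https://example.com/": '<a href="/a">A</a> <a href="/b#top">B</a>',
        "https://example.com/a": '<a href="/">Home</a> <a href="/b">B</a>',
        "https://example.com/b": "<p>No links here</p>",
    }
    print(crawl("https://example.com/", pages.get))
```

Running the script visits the three pages in breadth-first order. A production crawler would replace `pages.get` with a real HTTP fetch. It would also add politeness rules (for example, honoring `robots.txt` and rate limits), which this sketch leaves out.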