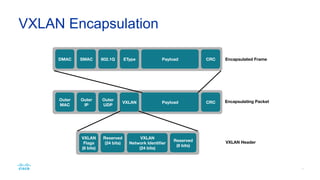

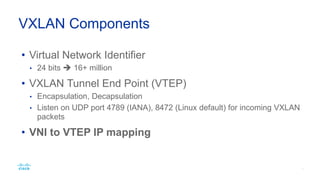

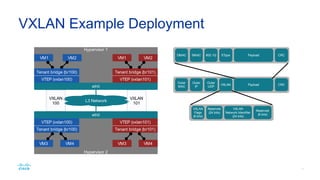

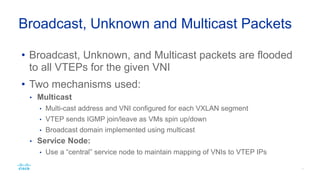

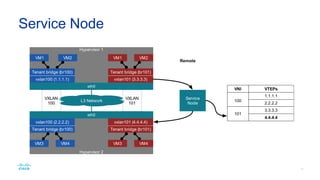

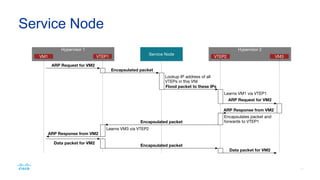

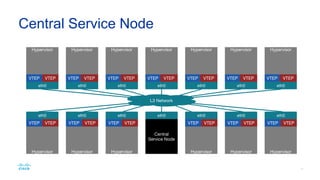

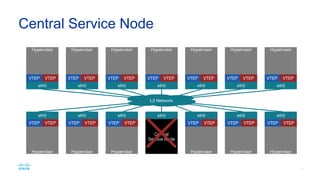

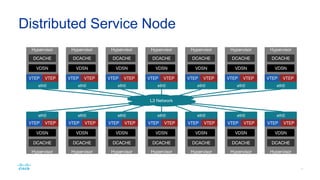

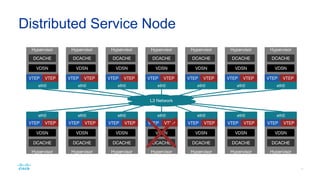

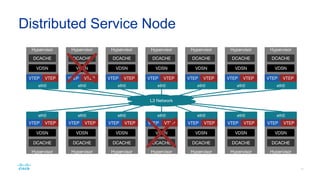

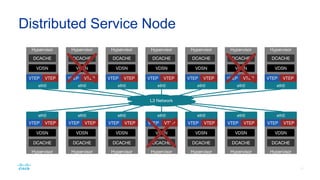

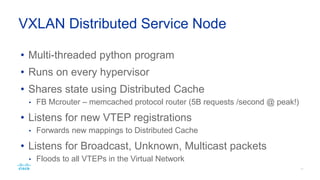

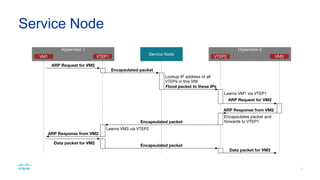

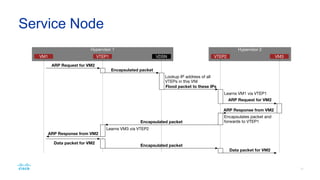

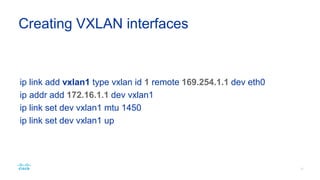

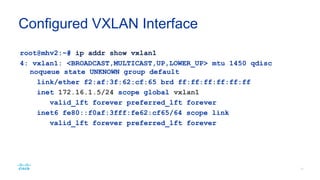

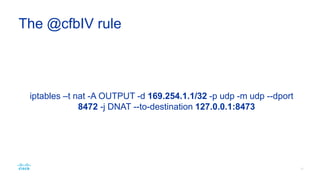

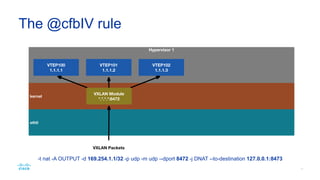

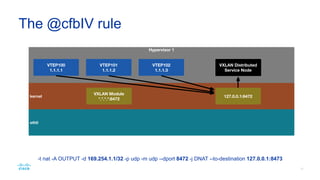

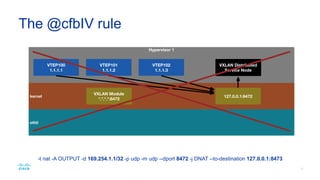

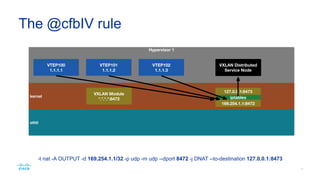

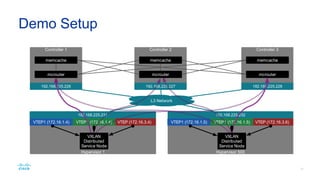

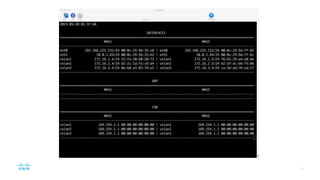

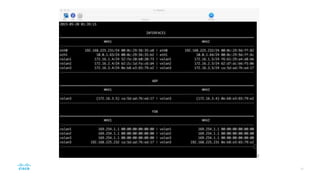

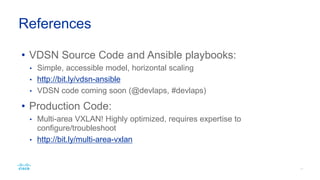

The document discusses using VXLAN (Virtual Extensible LAN) to address scalability and performance problems in modern data center networks. It highlights that VXLAN's 24-bit segment identifier (the VXLAN Network Identifier, or VNI) allows over 16 million isolated network segments, far beyond the 4,094 usable IDs of a traditional 802.1Q VLAN, while reducing pressure on the forwarding tables of traditional switches. It also describes using distributed service nodes to manage the overlay efficiently. Finally, it covers options for integrating VXLAN with OpenStack Neutron and provides references for further exploration of these technologies.
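The "over 16 million" figure follows directly from the width of the VNI field. The sketch below packs and parses the 8-byte VXLAN header as laid out in RFC 7348 (an 8-bit flags field with the "I" bit set, 24 reserved bits, the 24-bit VNI, and 8 more reserved bits). It is illustrative only. The helper names `build_vxlan_header` and `parse_vni` are hypothetical and do not come from the slides or from any particular library, and the outer Ethernet/IP/UDP encapsulation is omitted.

```python
import struct

# Hypothetical helpers illustrating the VXLAN header layout from RFC 7348.
VXLAN_FLAG_I = 0x08          # "I" flag: the VNI field is valid
VNI_BITS = 24                # VNI width in bits
MAX_SEGMENTS = 2 ** VNI_BITS # 16,777,216 possible segment IDs
VLAN_ID_SPACE = 2 ** 12      # 802.1Q VLAN ID space (4,096; 4,094 usable)


def build_vxlan_header(vni: int) -> bytes:
    """Pack an 8-byte VXLAN header carrying the given VNI."""
    if not 0 <= vni < MAX_SEGMENTS:
        raise ValueError(f"VNI must fit in {VNI_BITS} bits")
    # First 32-bit word: flags byte followed by 24 reserved bits.
    # Second 32-bit word: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from an 8-byte VXLAN header."""
    if len(header) != 8:
        raise ValueError("a VXLAN header is exactly 8 bytes")
    flags_word, vni_word = struct.unpack("!II", header)
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8  # drop the trailing reserved byte


if __name__ == "__main__":
    hdr = build_vxlan_header(5000)
    print(hdr.hex())                        # 0800000000138800
    print(parse_vni(hdr))                   # 5000
    print(MAX_SEGMENTS // VLAN_ID_SPACE)    # 4096x larger ID space
```

Packing the header as two big-endian 32-bit words keeps the bit shifts aligned with how RFC 7348 draws the header, which makes the 24-bit VNI boundary easy to see.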