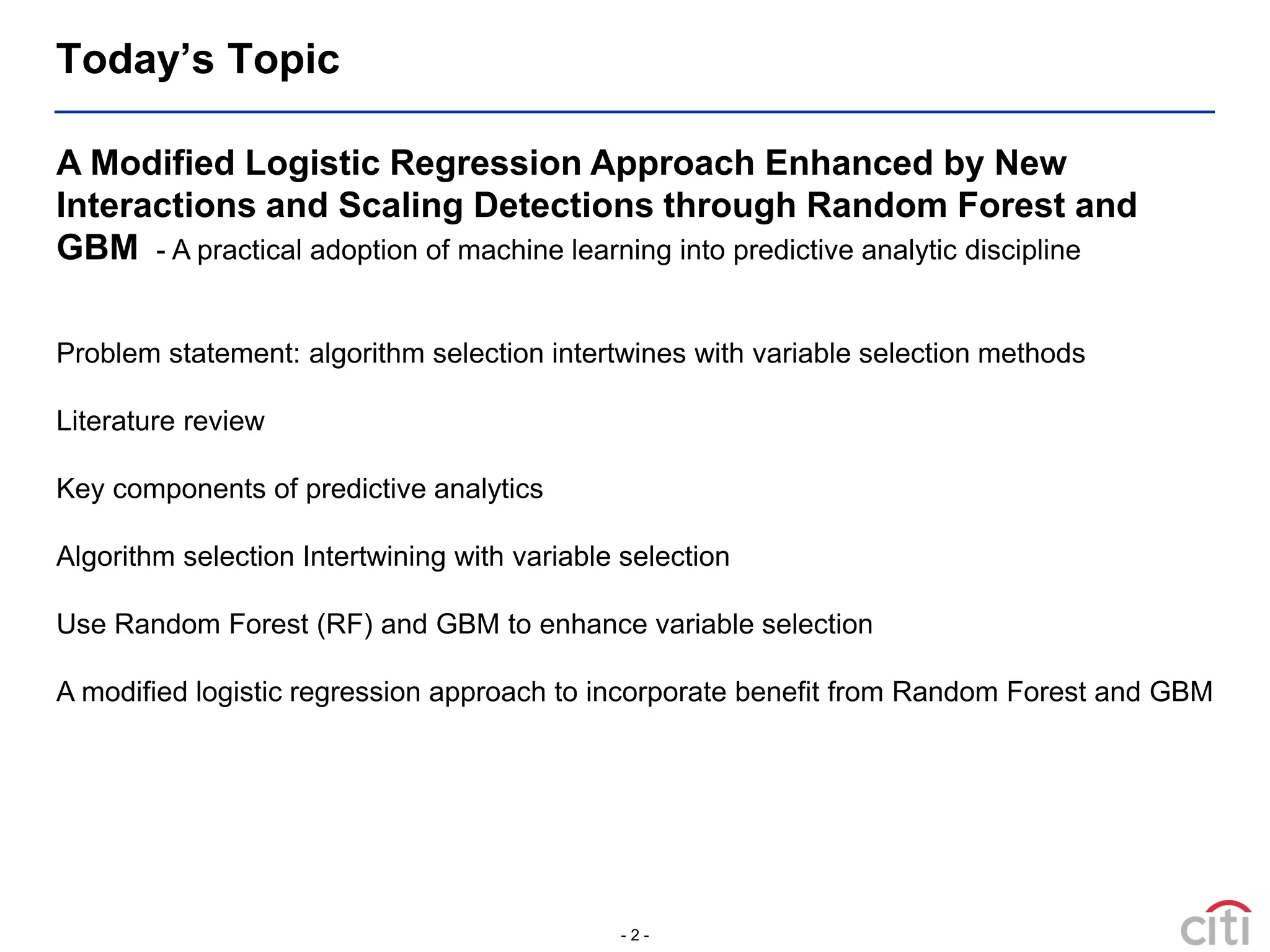

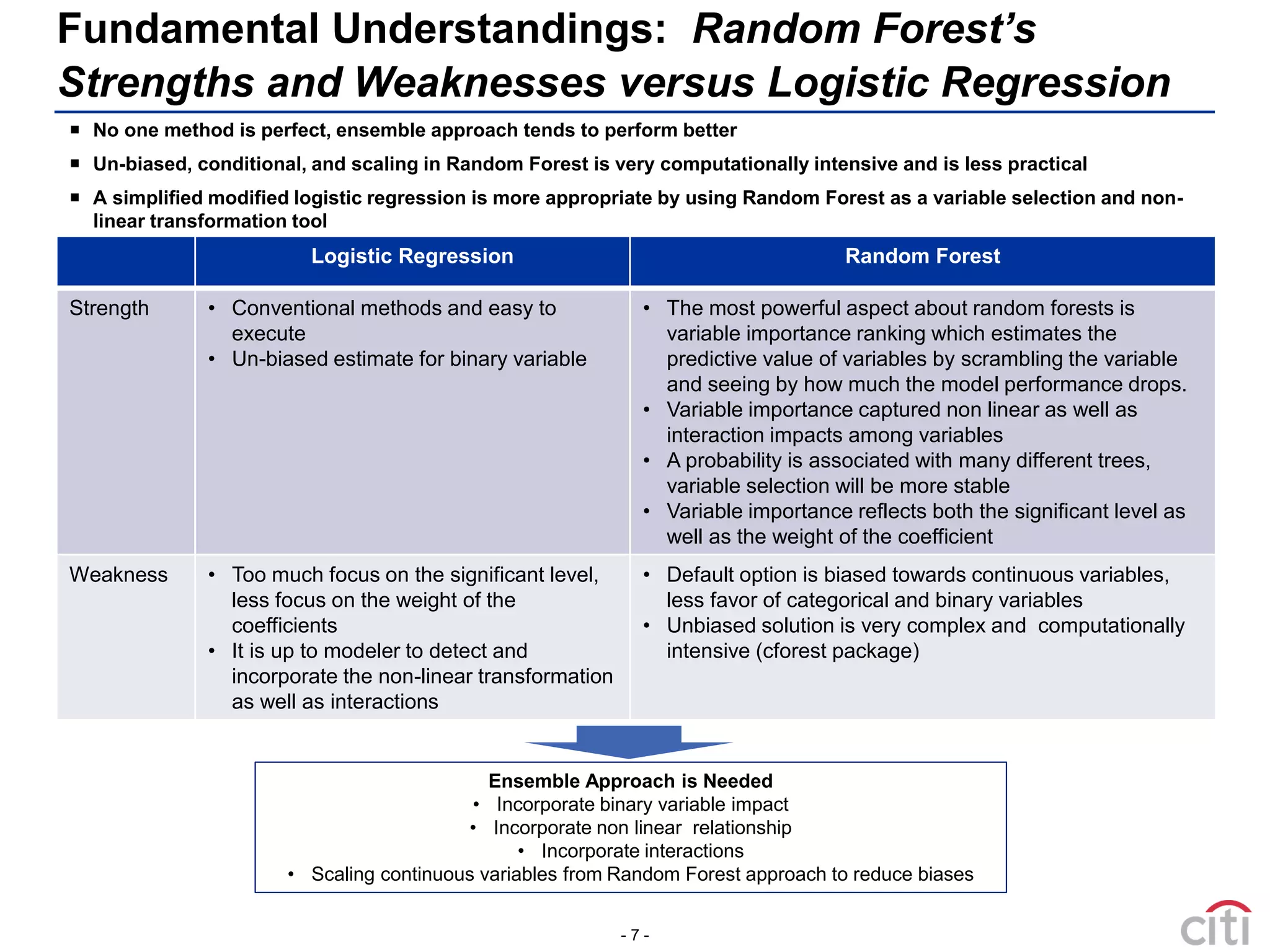

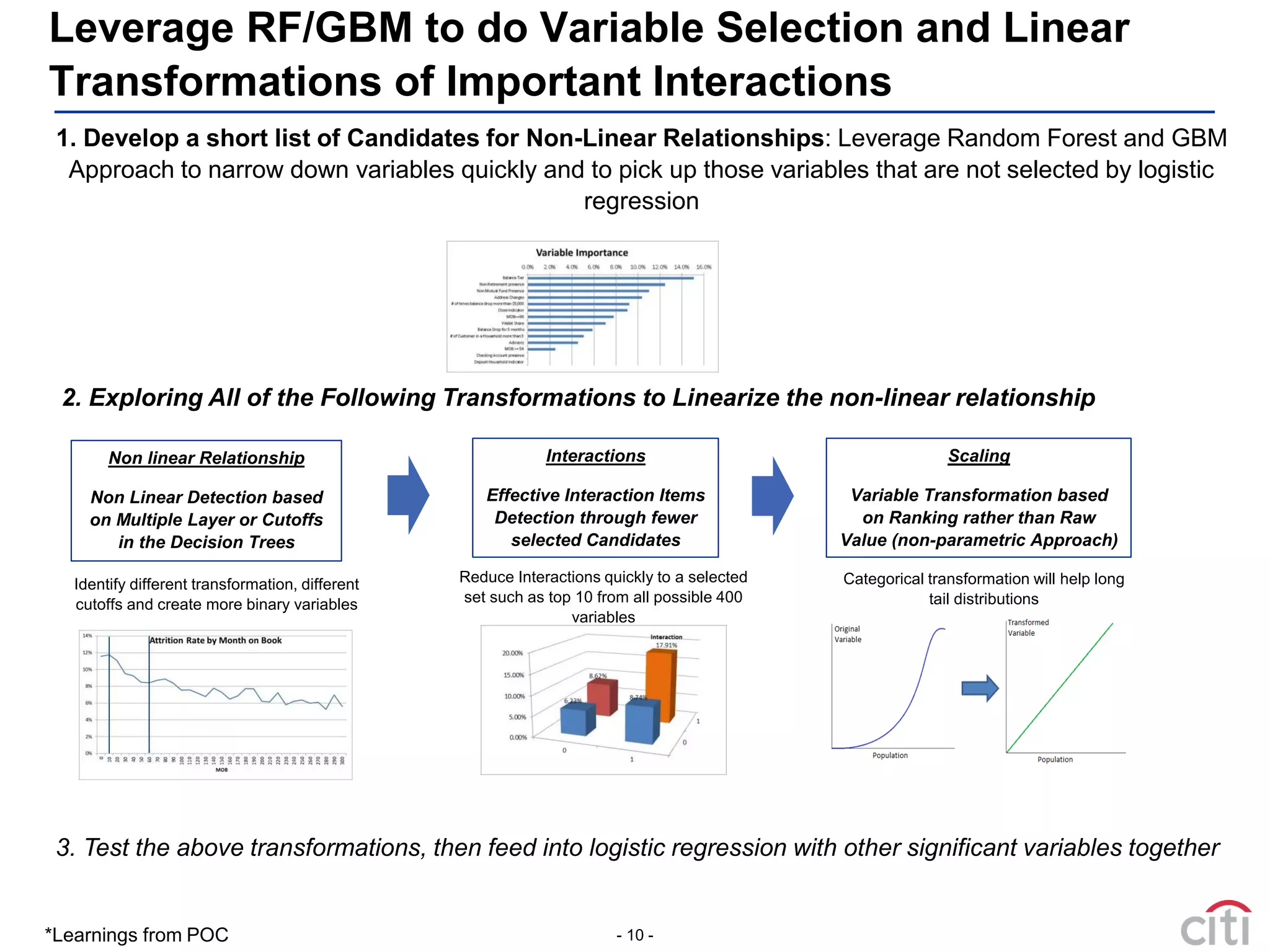

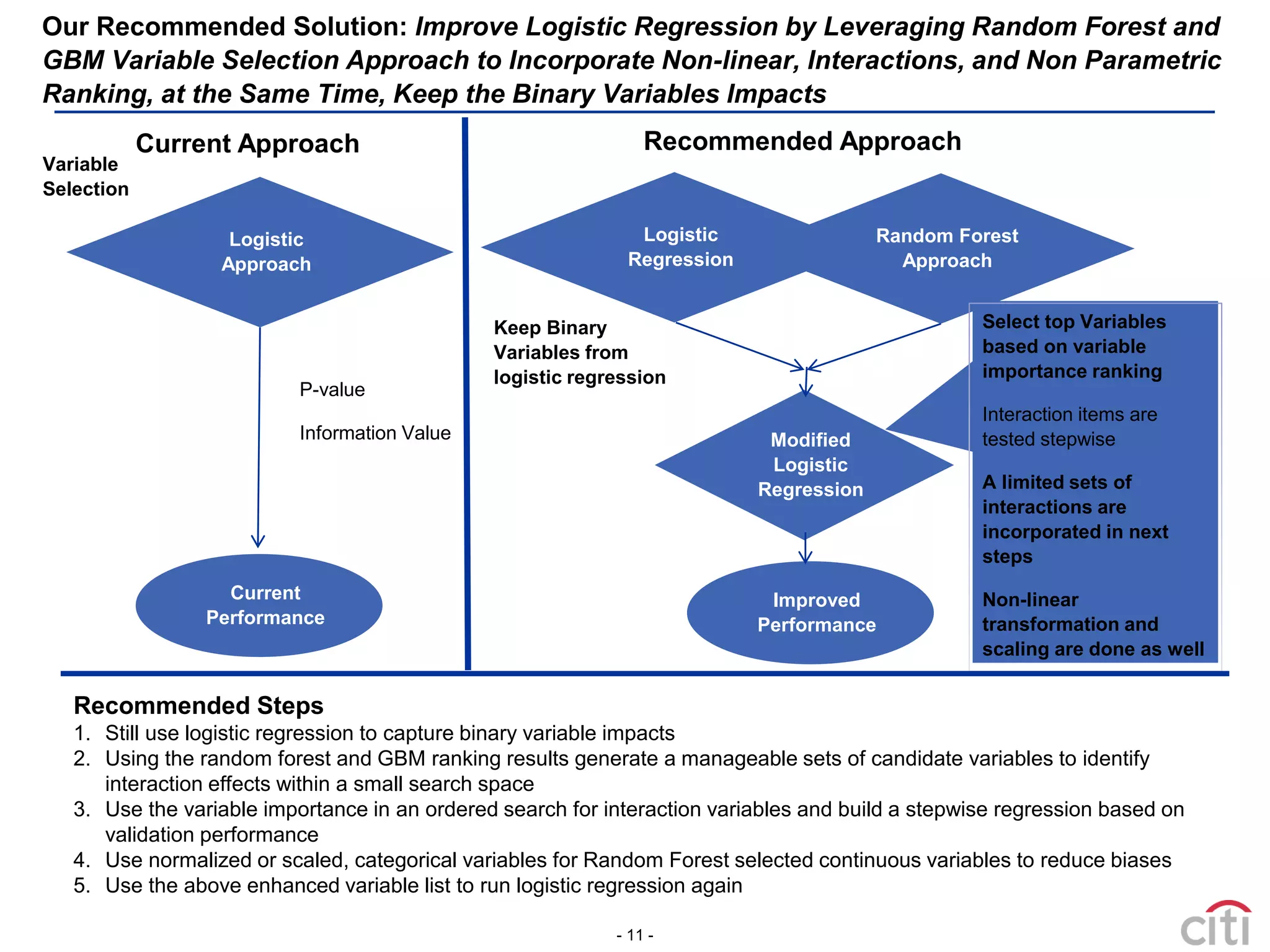

This document proposes a modified logistic regression approach that uses random forest and gradient boosted machines (GBM) to improve variable selection. It begins by discussing how different variable selection methods can identify different predictive drivers. It then reviews literature showing that random forest often outperforms logistic regression and is well suited to credit scoring. The document explains how algorithm selection is intertwined with variable selection and variable transformation. It recommends using random forest and GBM to narrow the set of candidate variables, detect non-linear relationships and interactions, and transform variables before incorporating them into a modified logistic regression model. This hybrid approach aims to combine the benefits of ensemble methods with those of logistic regression.
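
The variable-narrowing step of the hybrid approach might look roughly like the following sketch. It is an illustration under stated assumptions, not the document's actual procedure: the data is synthetic (generated with scikit-learn's `make_classification`), the cut-off `TOP_K` is a hypothetical choice, and averaging the two importance vectors is just one simple way to combine the random forest and GBM rankings. The detection of non-linear relationships and interactions, and any variable transformations, are left out.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a credit dataset (assumption: not data from the document).
X, y = make_classification(
    n_samples=2000, n_features=20, n_informative=5, n_redundant=2, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Step 1: fit both ensemble models on all candidate variables.
rf = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)
gbm = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)

# Step 2: combine the two impurity-based importance vectors.
# Averaging is an illustrative choice; each vector is normalized to sum to 1.
importance = (rf.feature_importances_ + gbm.feature_importances_) / 2

# Step 3: keep the TOP_K highest-ranked variables (TOP_K is a hypothetical cut-off).
TOP_K = 6
selected = np.sort(np.argsort(importance)[::-1][:TOP_K])

# Step 4: fit a logistic regression on the narrowed variable set only.
logit = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
logit.fit(X_train[:, selected], y_train)
accuracy = logit.score(X_test[:, selected], y_test)

print("Selected variable indices:", selected.tolist())
print("Hold-out accuracy of the narrowed logistic regression:", round(accuracy, 3))
```

The final model stays an ordinary logistic regression; the ensembles are used here only to decide which variables it sees. In practice the cut-off and the way the two rankings are combined would need to be validated on the real data.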