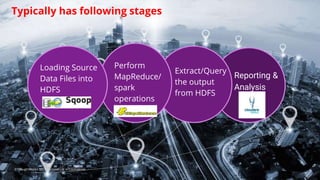

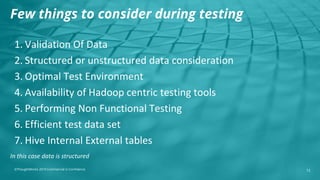

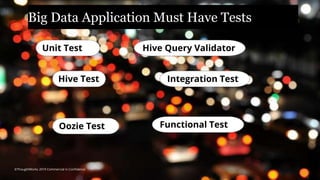

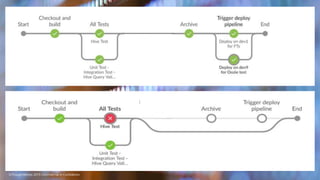

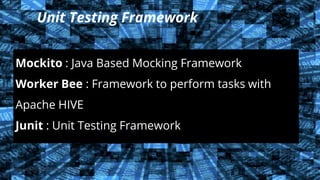

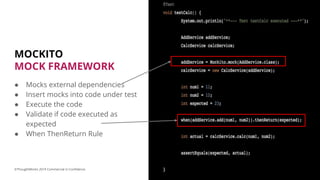

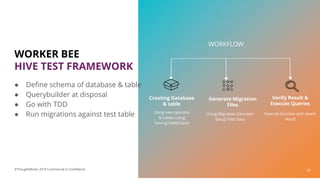

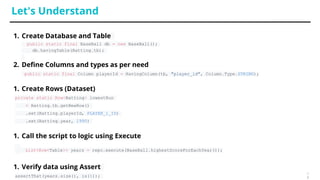

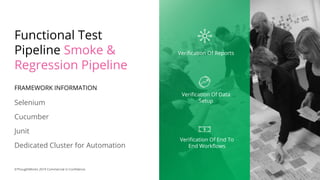

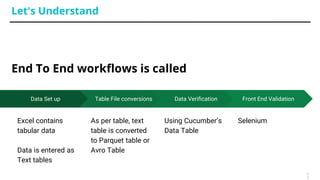

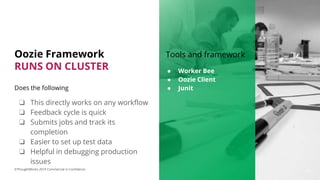

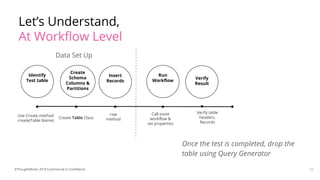

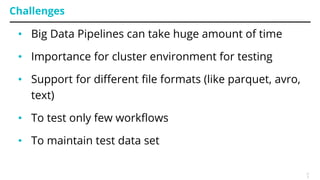

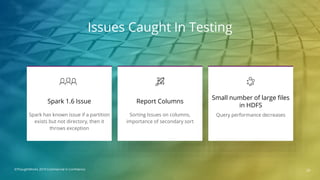

This document discusses how to test big data applications. It describes several types of tests: unit tests, integration tests, functional tests, and workflow tests such as Oozie tests. It also covers automation tools and frameworks for testing, including Mockito, Worker Bee and Cucumber. The challenges it identifies in big data testing are long test run times, the need for dedicated test clusters, and the effort of maintaining large test data sets.
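As a rough illustration of the unit-test level mentioned above, the sketch below tests a map step in isolation, with no cluster involved. It is an assumption-laden example and not code from the slides: `WordCountMapperSketch`, `map` and `runMap` are hypothetical names, and the output collector is replaced by a hand-written recording fake so the example runs on the standard library alone. In a real project a mocking framework such as Mockito would typically supply that test double.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.BiConsumer;

public class WordCountMapperSketch {

    // Hypothetical map step: emits (token, 1) for every whitespace-separated token.
    // The emitter stands in for the framework's output collector.
    static void map(String line, BiConsumer<String, Integer> emitter) {
        for (String token : line.trim().split("\\s+")) {
            if (!token.isEmpty()) {
                emitter.accept(token.toLowerCase(), 1);
            }
        }
    }

    // Runs the map step against a recording fake and returns what was emitted,
    // in order, as "word=count" strings.
    static List<String> runMap(String line) {
        List<String> emitted = new ArrayList<>();
        map(line, (word, count) -> emitted.add(word + "=" + count));
        return emitted;
    }

    public static void main(String[] args) {
        List<String> expected = Arrays.asList("big=1", "data=1", "big=1", "tests=1");
        List<String> actual = runMap("Big data big tests");
        if (!actual.equals(expected)) {
            throw new AssertionError("unexpected output: " + actual);
        }
        // A blank input line should emit nothing.
        if (!runMap("   ").isEmpty()) {
            throw new AssertionError("blank line should emit nothing");
        }
        System.out.println("map step behaves as expected");
    }
}
```

Because the logic under test only depends on the emitter interface, a check like this runs in milliseconds on a developer machine, which is one way to offset the long run times of cluster-based tests.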