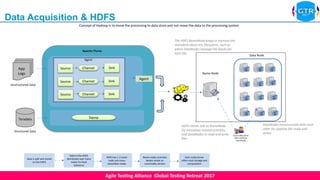

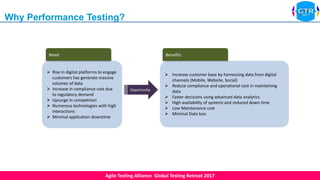

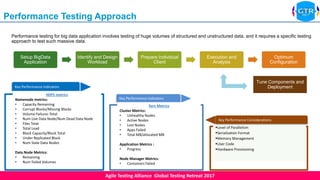

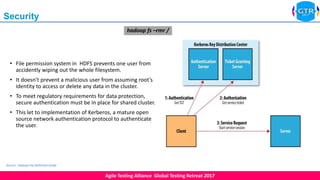

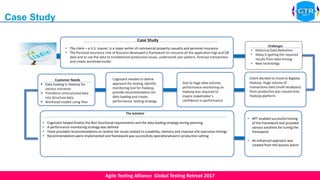

The document discusses the importance of performance testing in big data applications and argues that massive data volumes call for testing approaches designed specifically for them. It outlines data acquisition in terms of the HDFS architecture and emphasizes security measures such as Kerberos for user authentication. A case study of a U.S. insurer illustrates how these concepts were applied in practice to improve data handling and application performance.