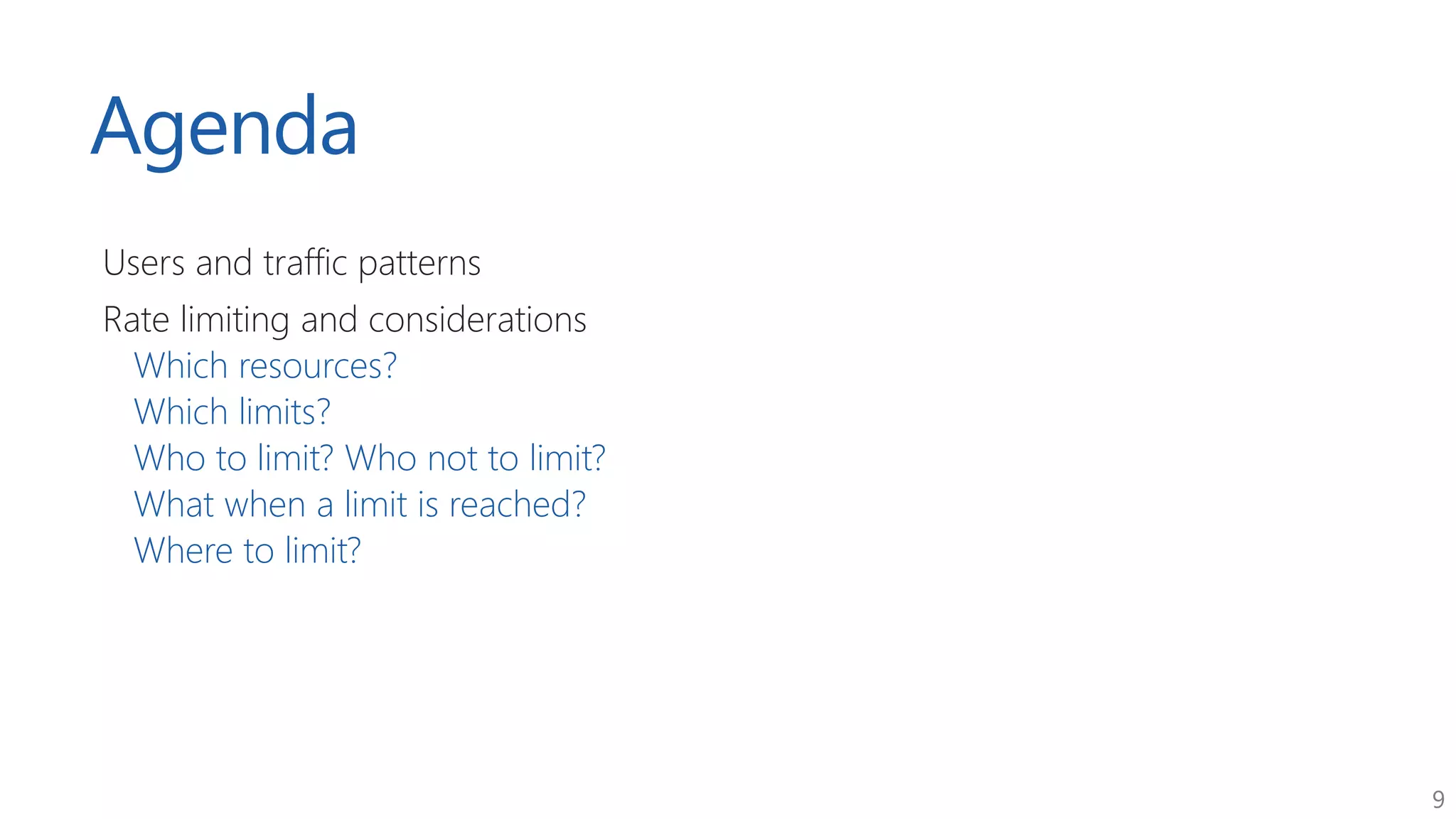

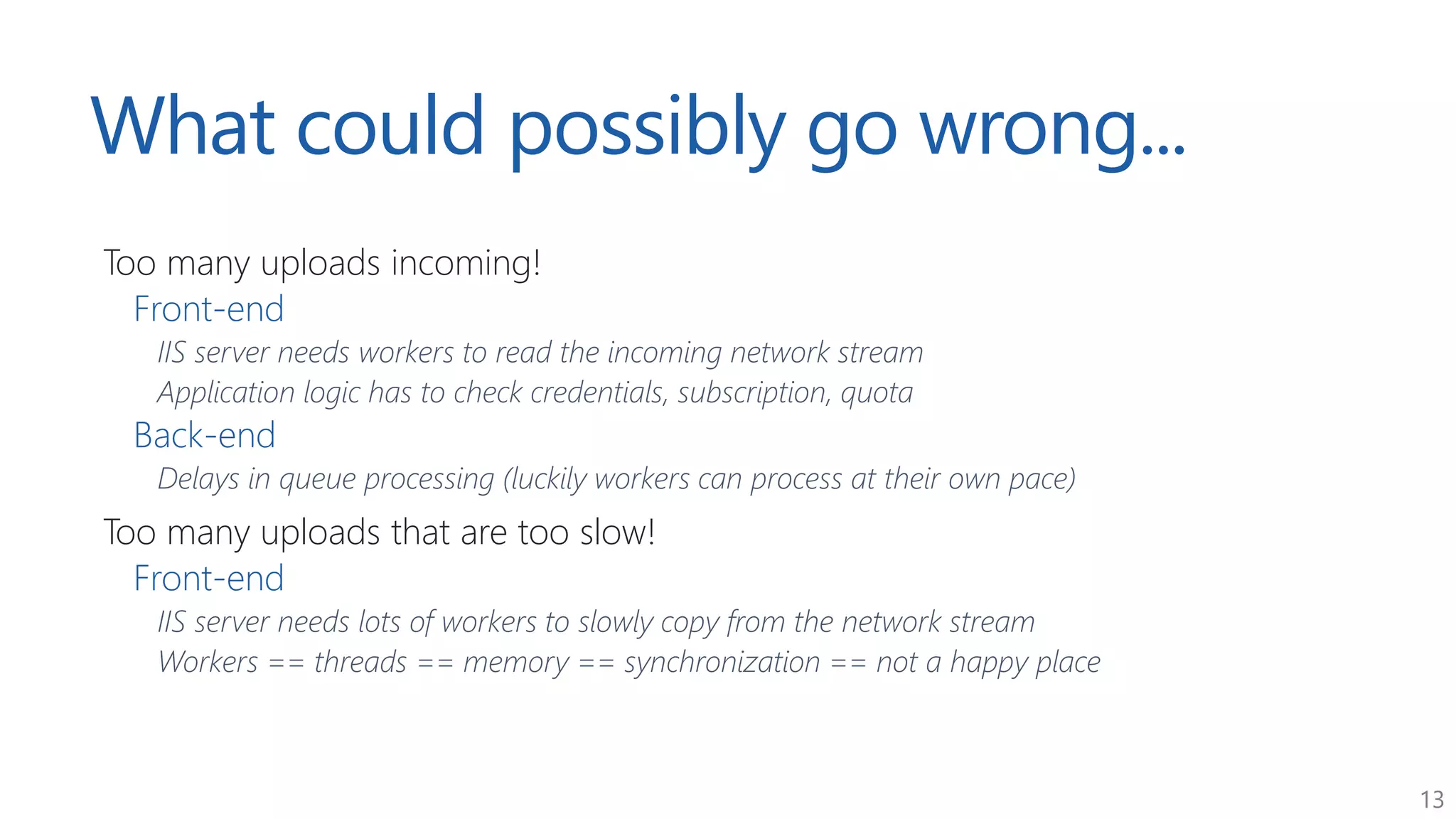

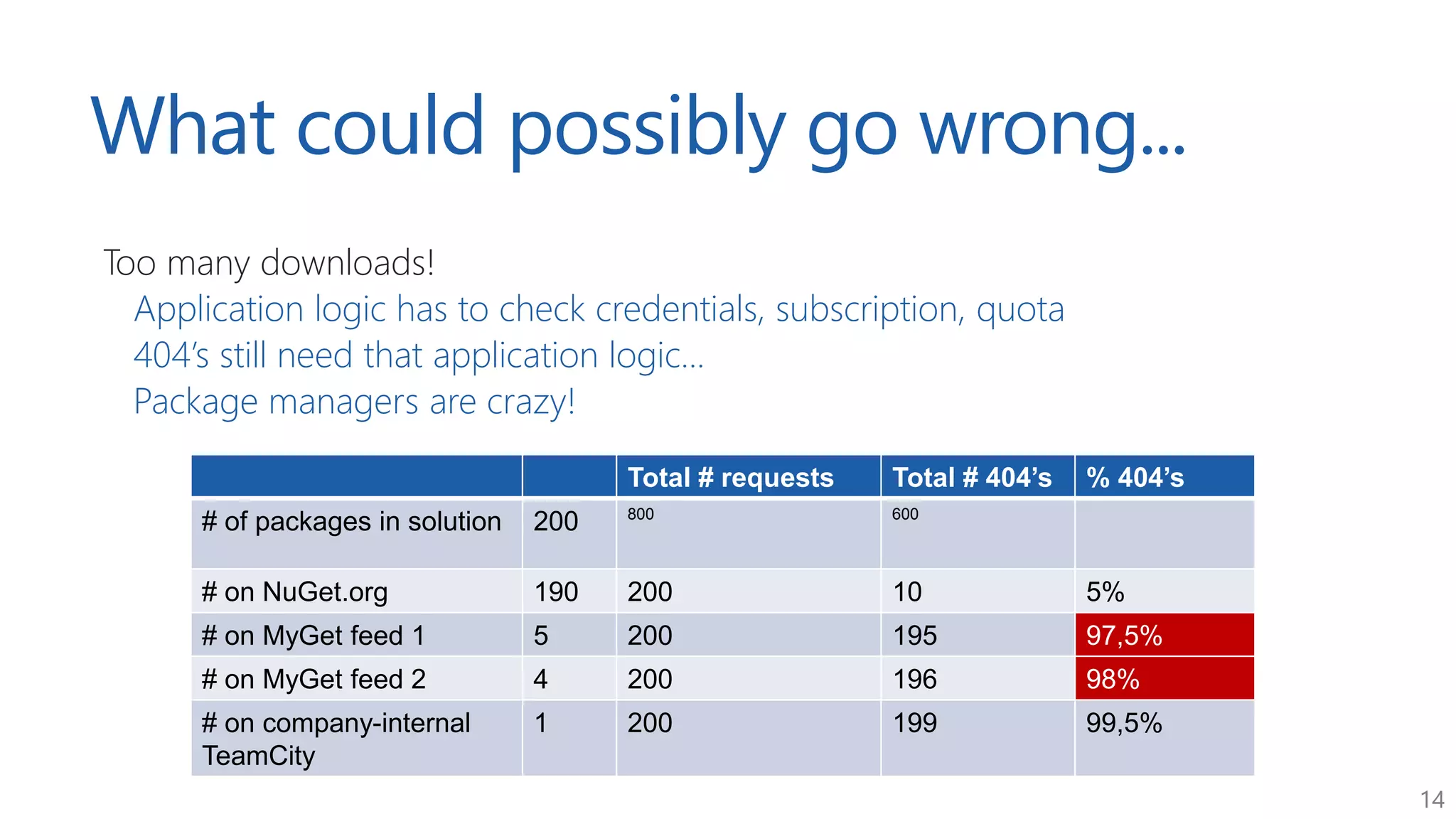

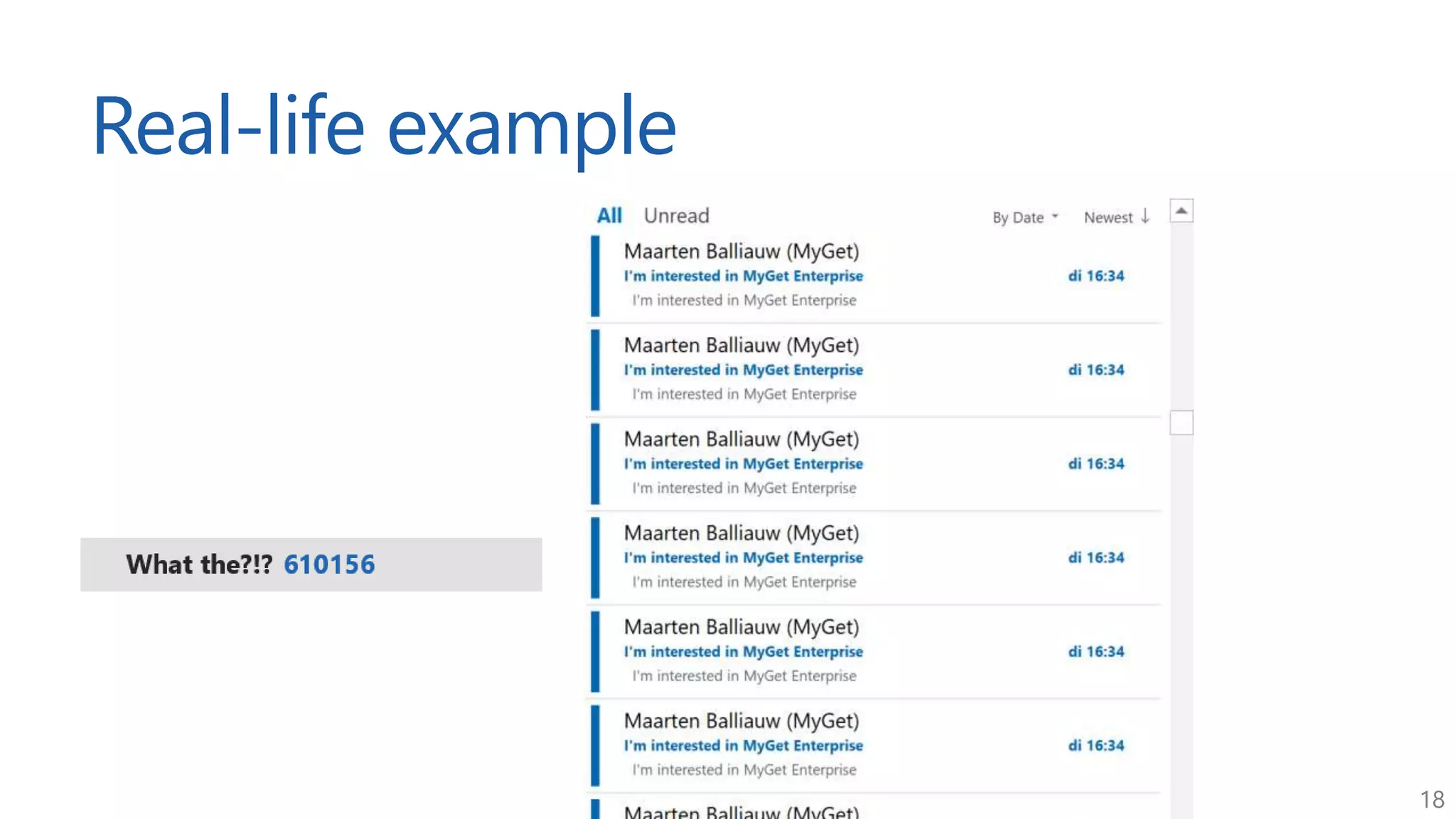

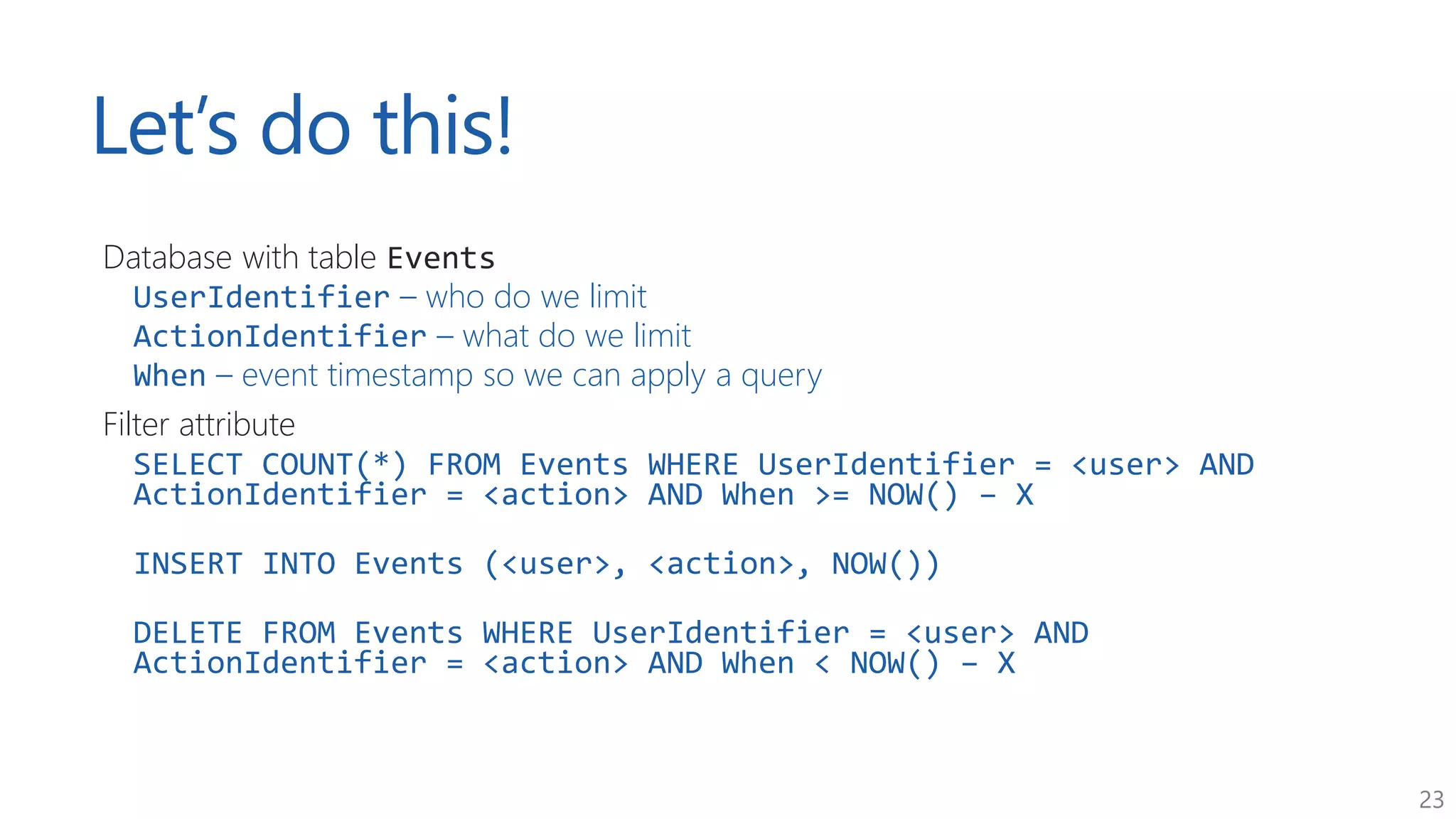

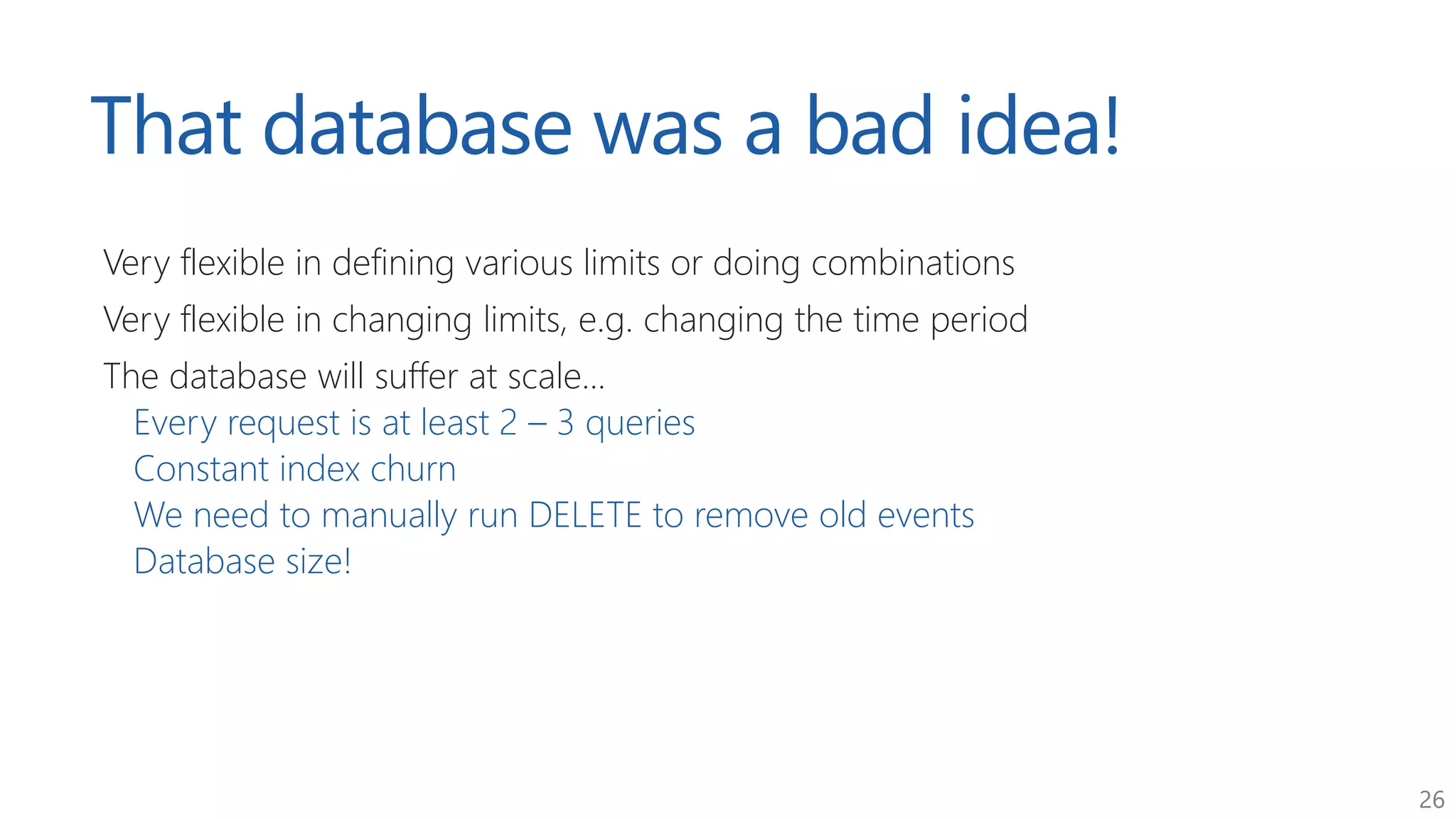

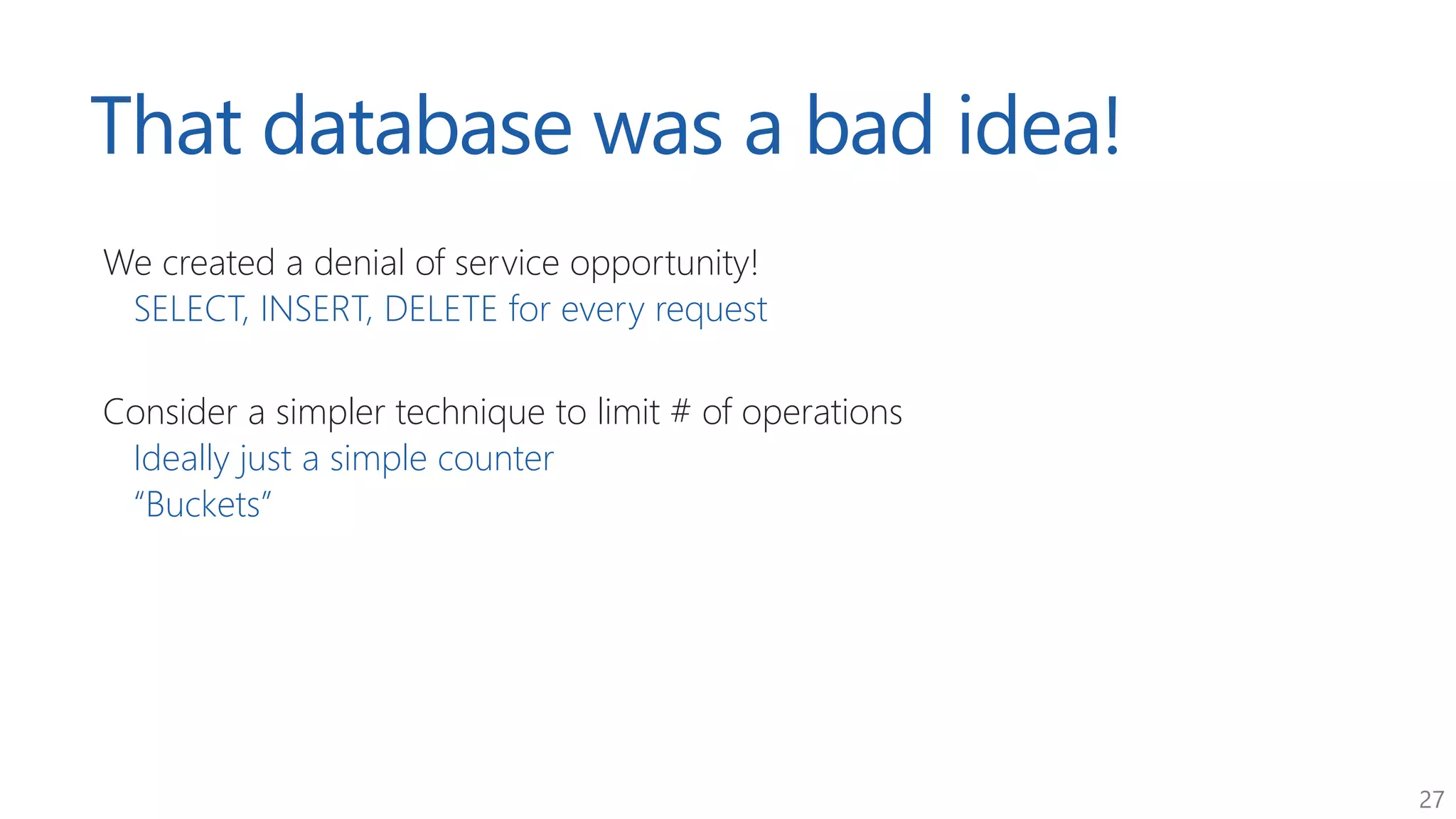

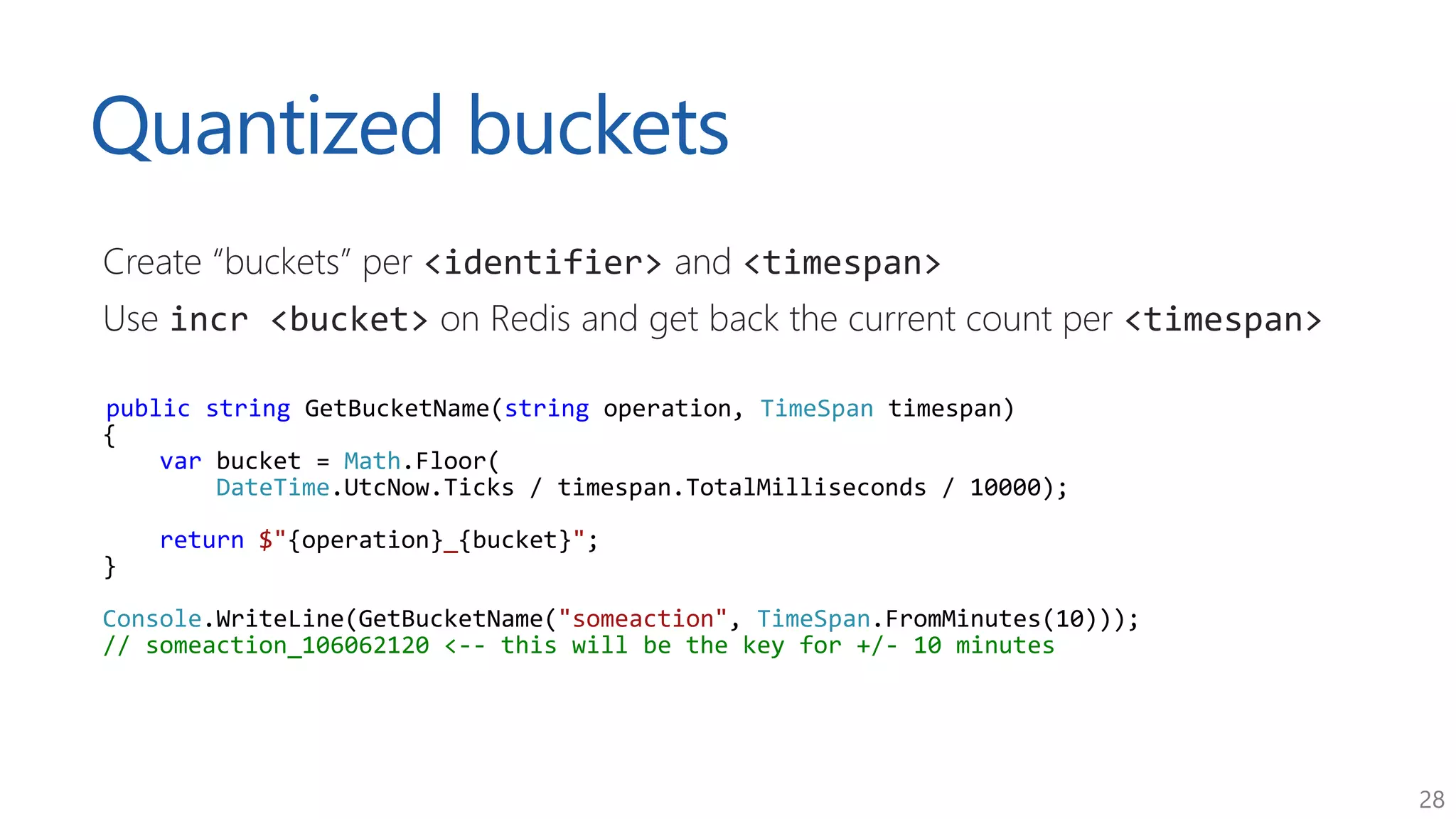

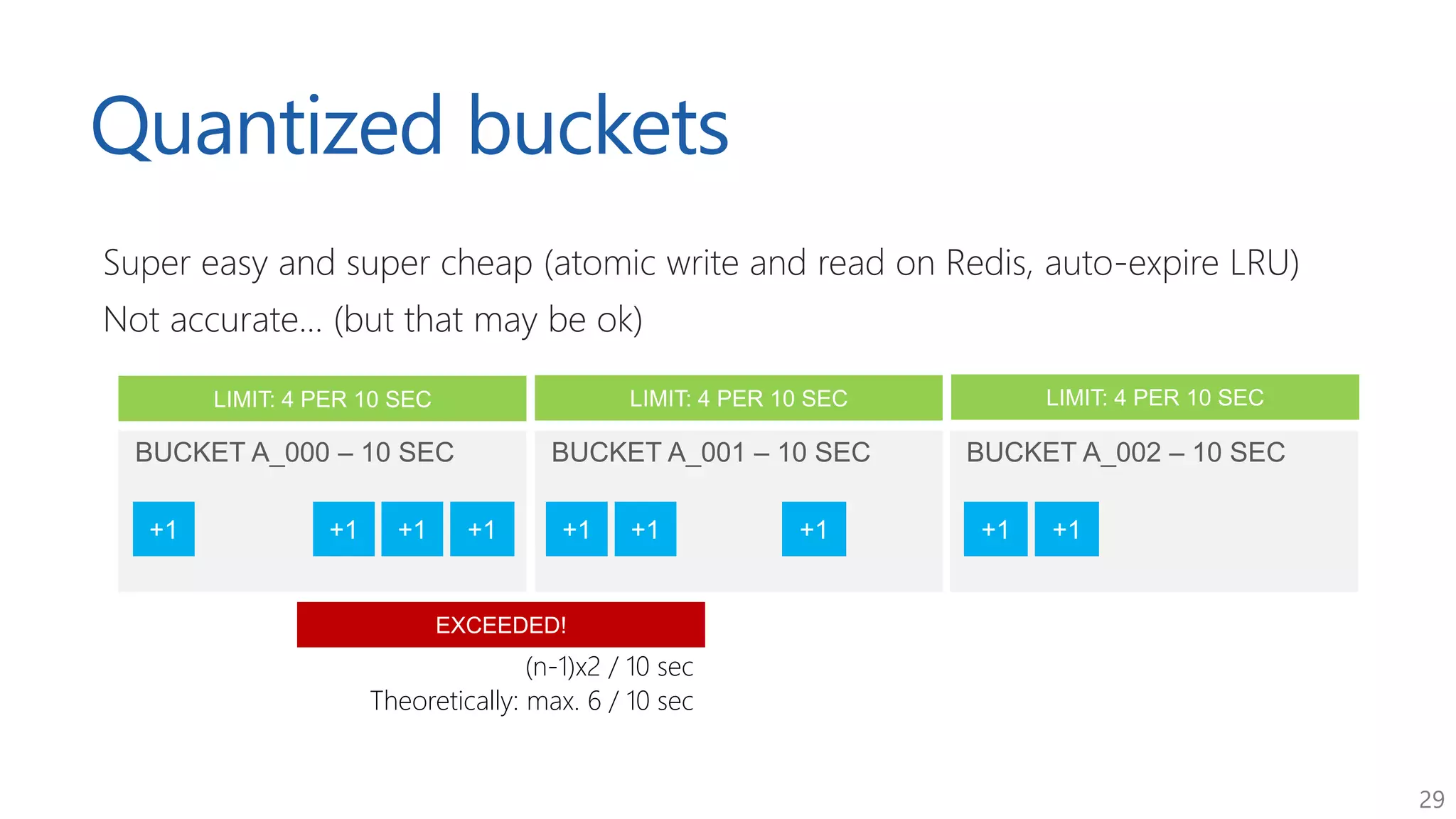

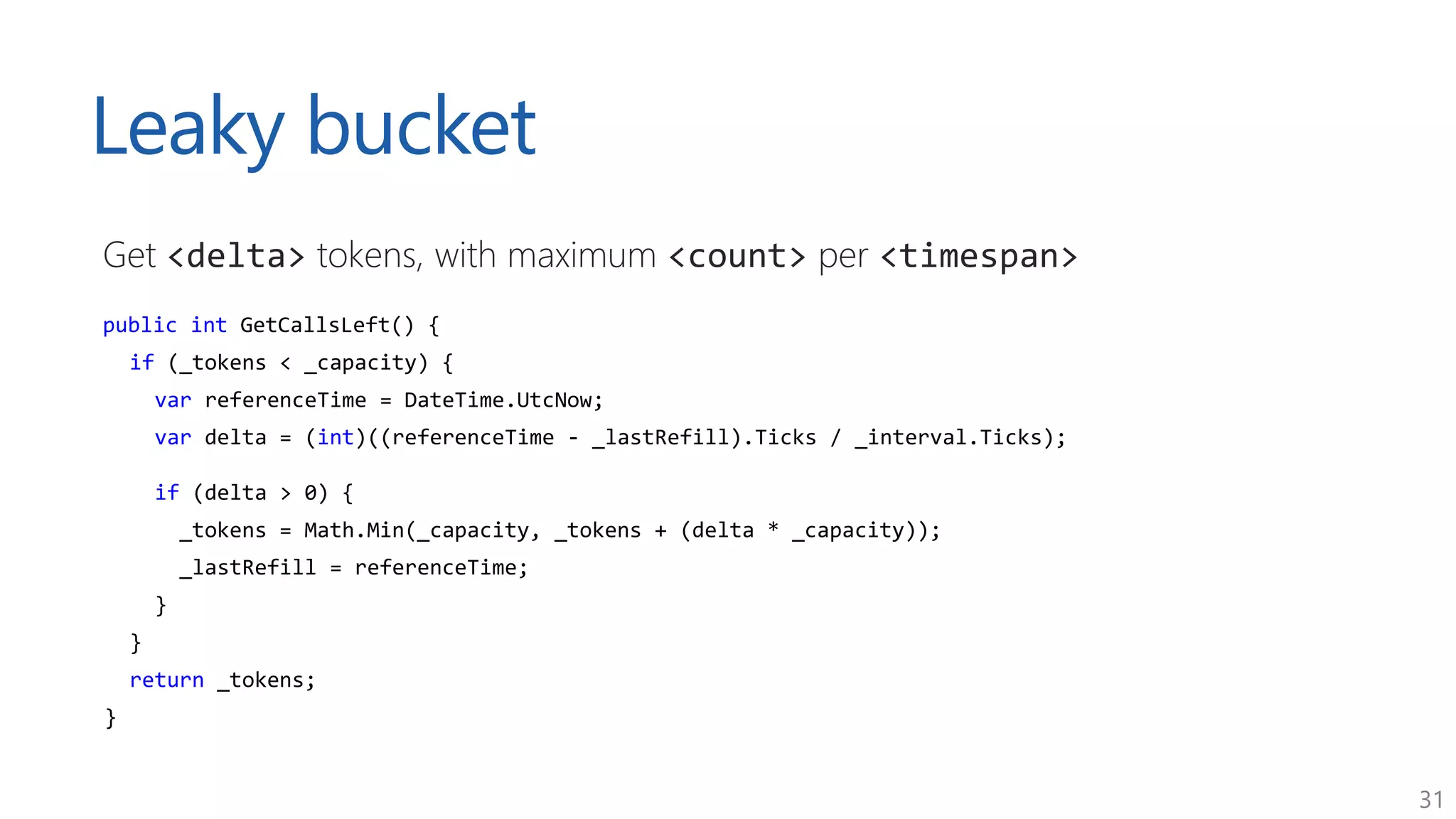

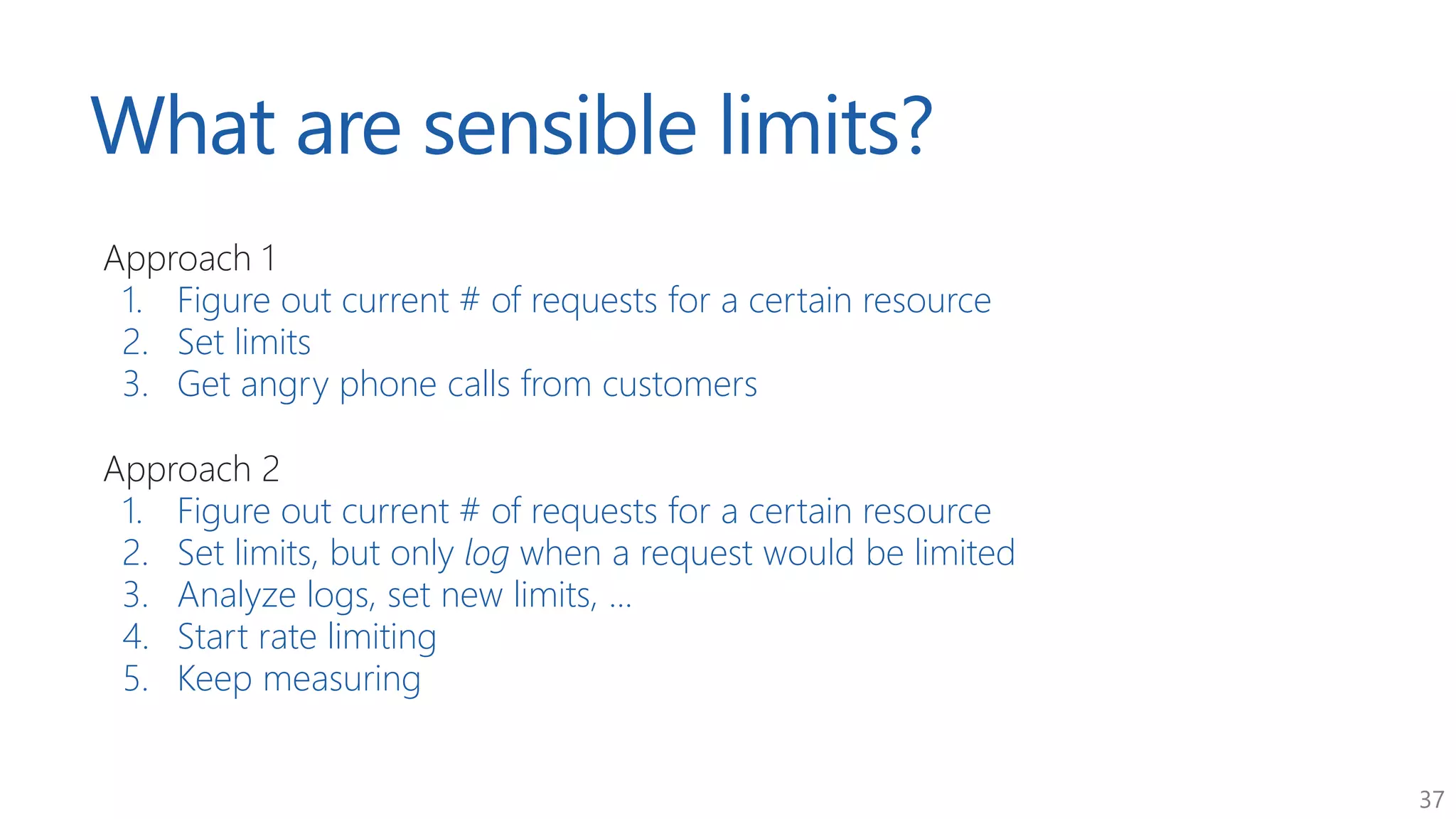

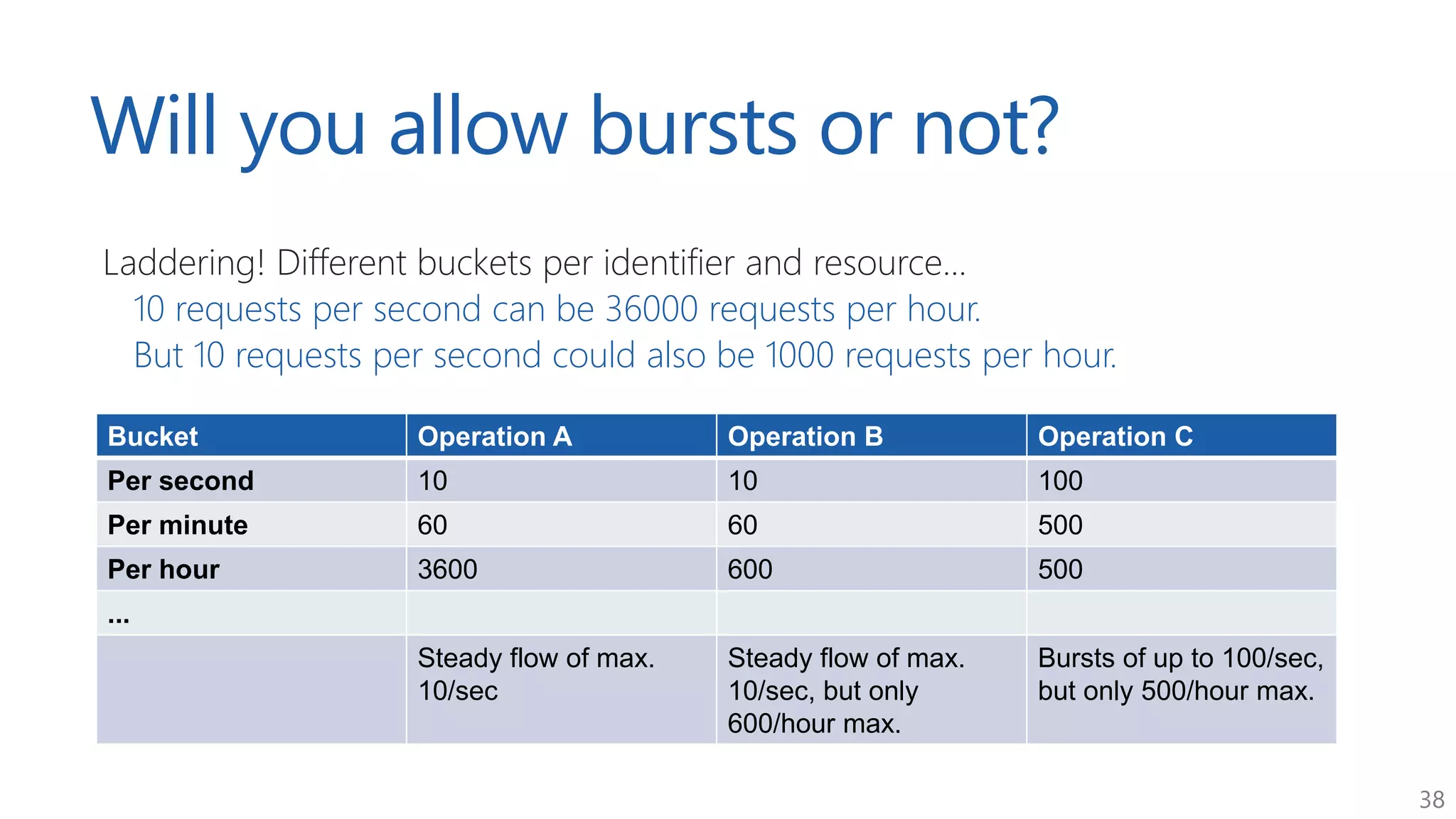

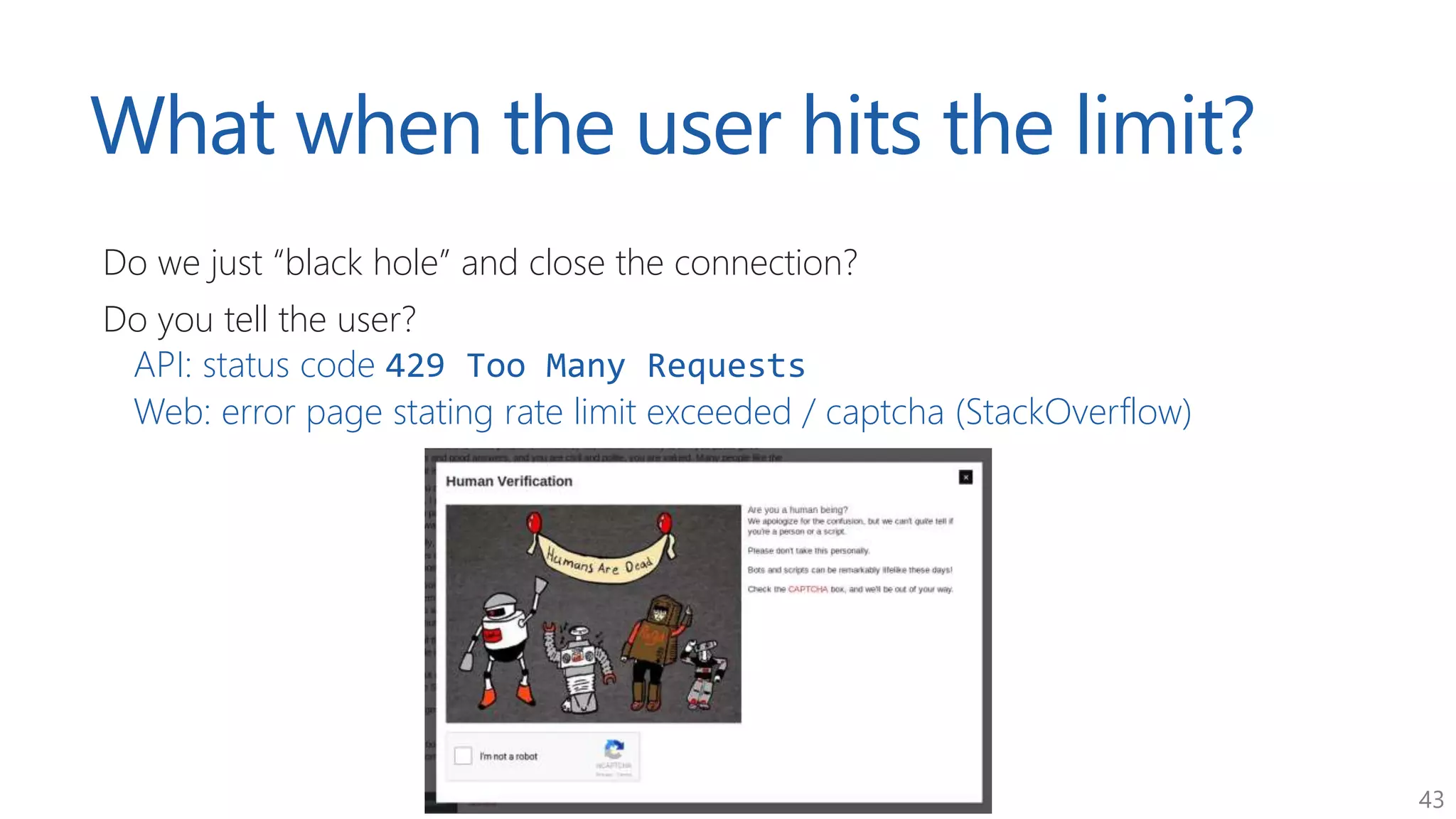

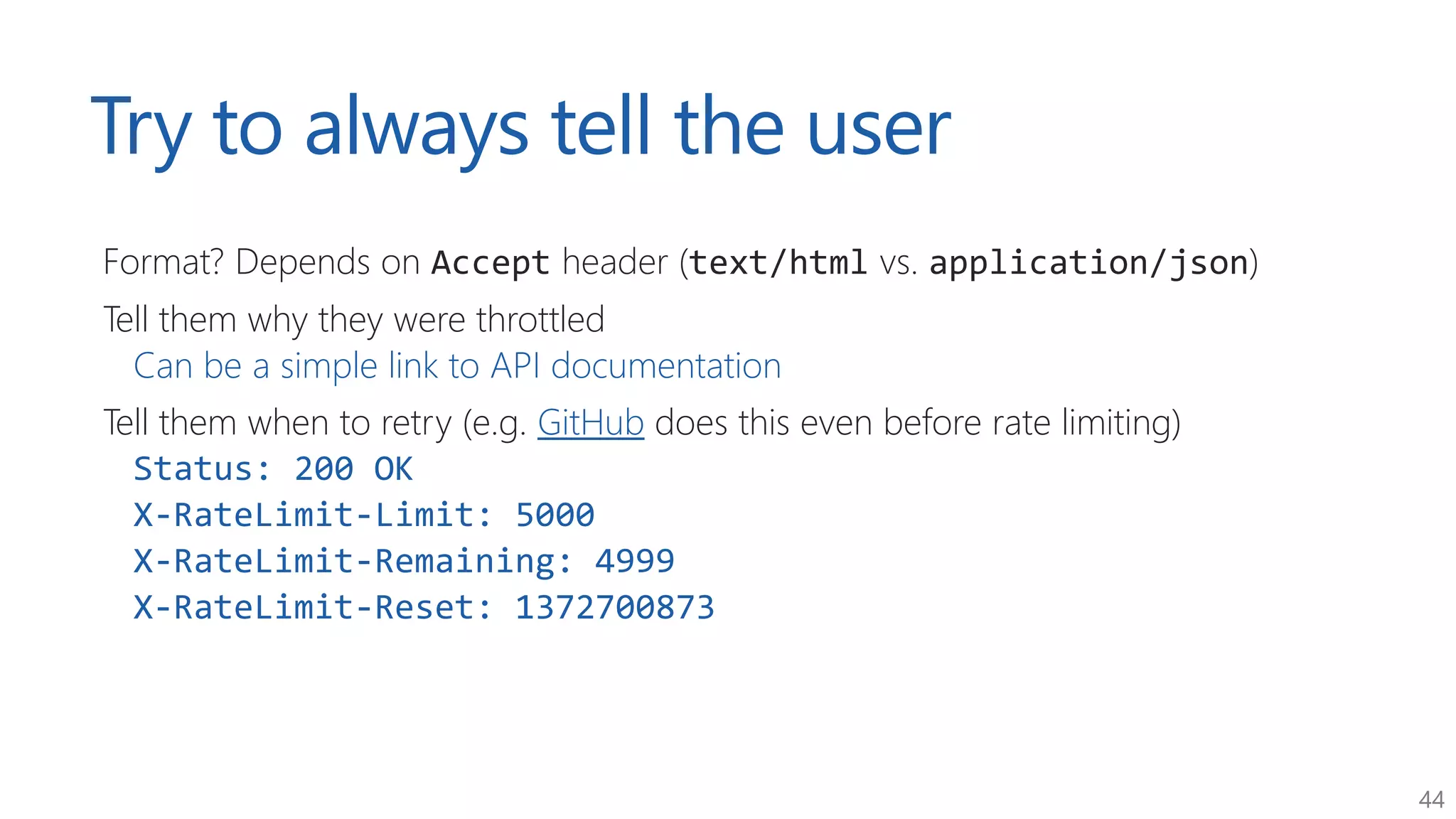

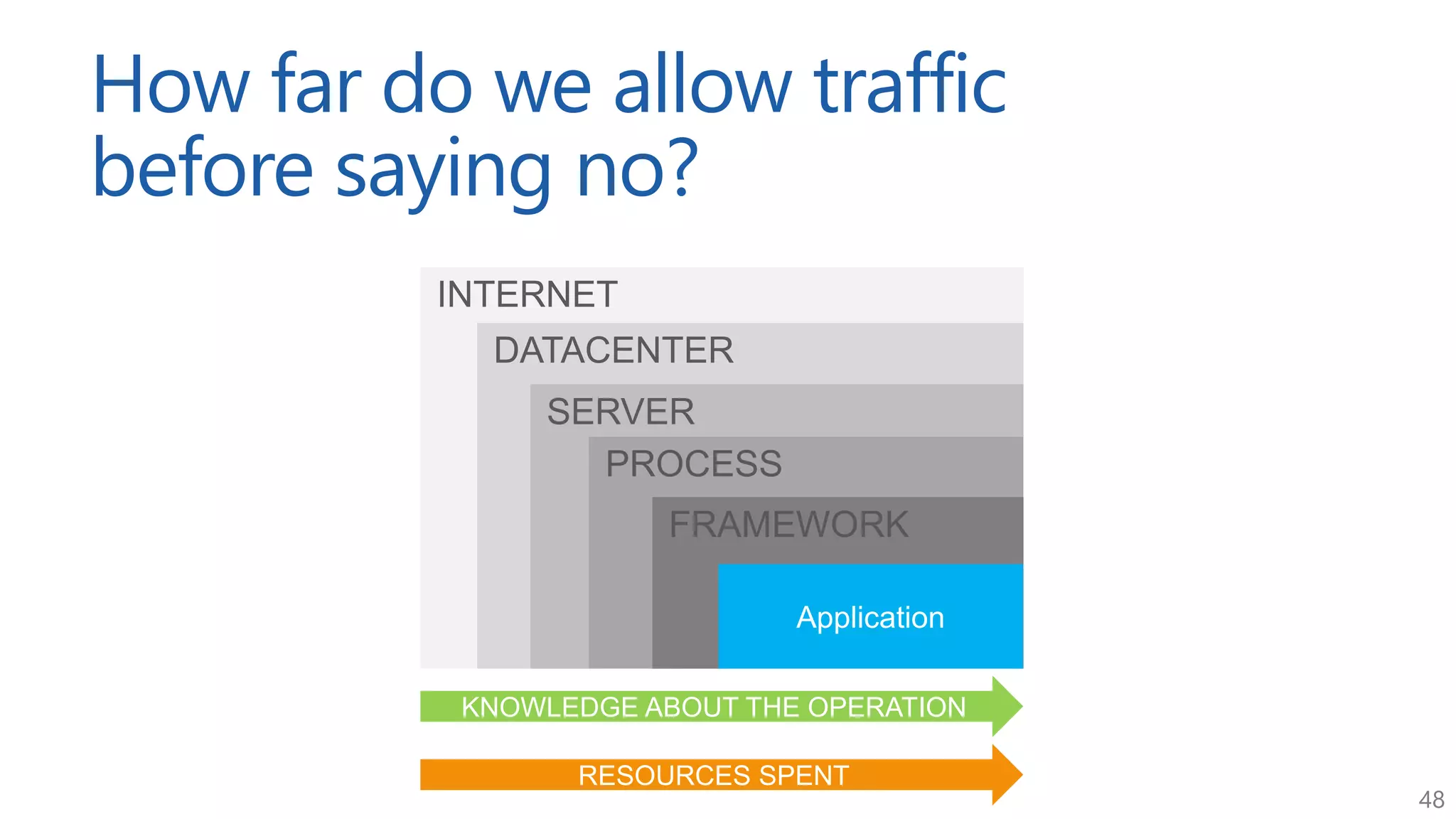

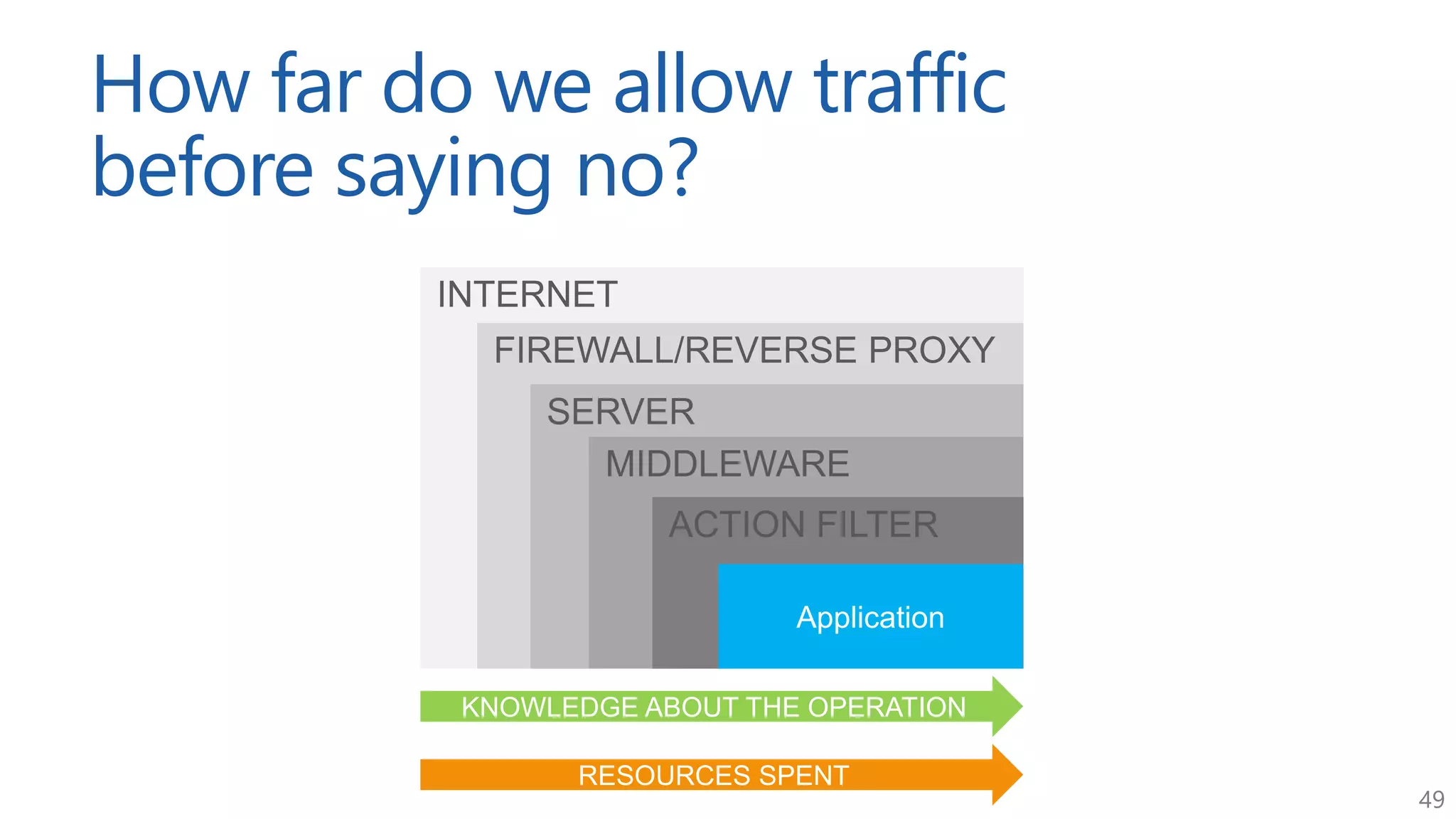

The document discusses approaches for application request throttling, highlighting the importance of rate limiting to manage traffic patterns and prevent issues such as denial of service. It outlines various strategies for implementing rate limiting, determining sensible limits, and the identifiers to use for monitoring requests. Additionally, it emphasizes the need for monitoring and responding appropriately when limits are reached to improve application performance and security.
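As a rough illustration of the ideas summarized above, the following is a minimal sketch of one common rate-limiting strategy, a token bucket keyed by a client identifier. The summary does not say which algorithm the document recommends, so the token bucket is a choice made for this example. The names `TokenBucketLimiter` and `handle_request`, the limit values, and the use of an API key or IP address as the identifier are likewise assumptions. The sketch counts rejections per identifier so they can be monitored, and answers over-limit requests with HTTP 429 and a `Retry-After` header.

```python
import math
import time


class TokenBucketLimiter:
    """Hypothetical in-memory token bucket, one bucket per identifier."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity                    # maximum burst size
        self.refill_per_second = refill_per_second  # sustained request rate
        self.clock = clock                          # injectable for testing
        self._buckets = {}                          # identifier -> (tokens, last_update)
        self.rejected = {}                          # identifier -> rejection count (monitoring)

    def _current_tokens(self, identifier, now):
        # New identifiers start with a full bucket.
        tokens, last = self._buckets.get(identifier, (float(self.capacity), now))
        # Refill in proportion to elapsed time, capped at capacity.
        return min(self.capacity, tokens + (now - last) * self.refill_per_second)

    def allow(self, identifier):
        """Return True and consume a token if the request is within the limit."""
        now = self.clock()
        tokens = self._current_tokens(identifier, now)
        if tokens >= 1:
            self._buckets[identifier] = (tokens - 1, now)
            return True
        self._buckets[identifier] = (tokens, now)
        self.rejected[identifier] = self.rejected.get(identifier, 0) + 1
        return False

    def seconds_until_token(self, identifier):
        """Estimate how long the client should wait before retrying."""
        tokens = self._current_tokens(identifier, self.clock())
        return max(0.0, (1 - tokens) / self.refill_per_second)


def handle_request(limiter, identifier):
    """Return (status, headers); 429 with Retry-After when the limit is hit."""
    if limiter.allow(identifier):
        return 200, {}
    wait = limiter.seconds_until_token(identifier)
    return 429, {"Retry-After": str(math.ceil(wait))}


if __name__ == "__main__":
    # Assumed limits: bursts of 5 requests, refilled at 1 request per second.
    limiter = TokenBucketLimiter(capacity=5, refill_per_second=1.0)
    for _ in range(7):
        print(handle_request(limiter, "api-key-123"))
```

The dictionary here lives in a single process. A deployment with several application servers would normally need a shared store for the buckets, which this sketch does not attempt.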