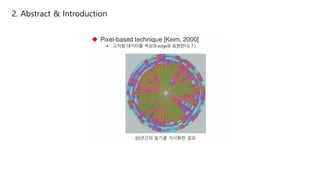

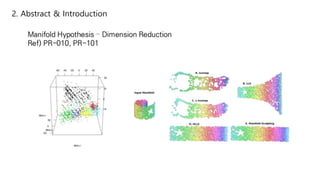

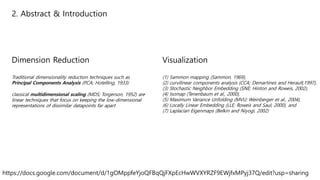

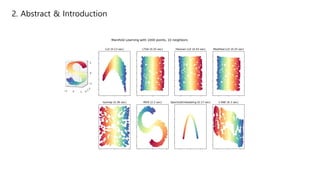

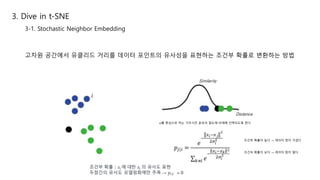

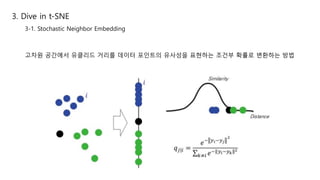

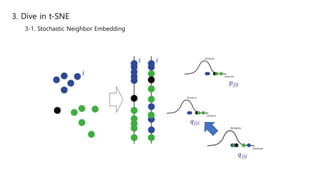

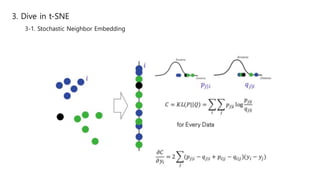

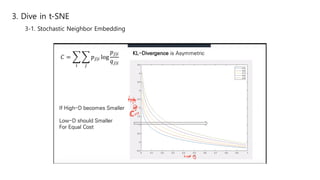

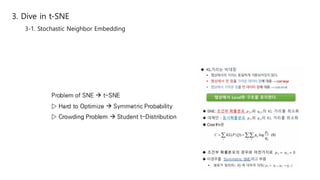

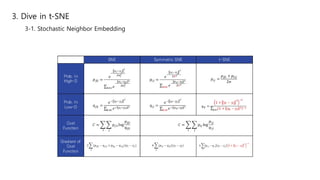

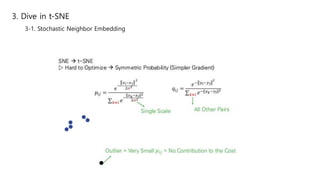

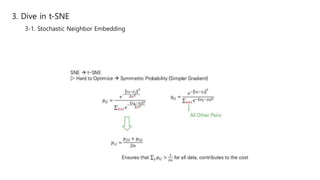

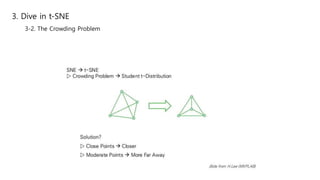

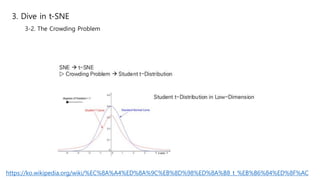

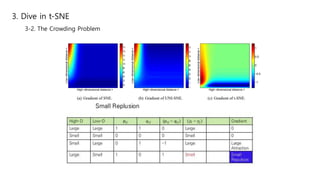

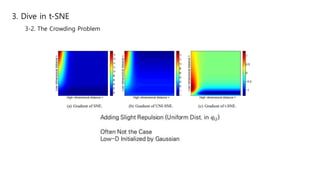

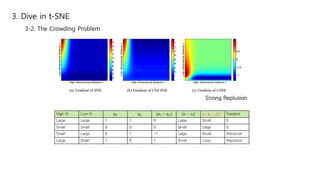

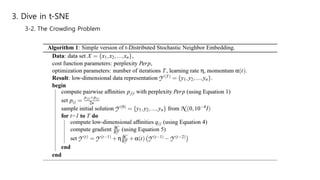

This document summarizes t-SNE (t-distributed Stochastic Neighbor Embedding), a technique for visualizing high-dimensional data in two or three dimensions. t-SNE is a variant of Stochastic Neighbor Embedding (SNE) that can preserve both local and global structure in the data better than linear dimensionality reduction methods. The document outlines how t-SNE converts Euclidean distances between data points in the high-dimensional space into conditional probabilities that represent similarity. It also discusses the "crowding problem" that arises when high-dimensional data is mapped to a low-dimensional space, and how t-SNE addresses it.
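The distance-to-probability conversion mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration, not the slides' own code: the function names `conditional_probabilities` and `low_dim_similarities` are hypothetical, and the sketch assumes a single fixed Gaussian bandwidth `sigma` for every point, whereas t-SNE as usually described tunes a separate bandwidth per point to match a chosen perplexity. The second function shows the heavy-tailed Student t kernel (one degree of freedom) commonly used for the low-dimensional map, which is the standard remedy for the crowding problem.

```python
import math


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def conditional_probabilities(points, sigma=1.0):
    """Return P with P[i][j] = p_{j|i} under a Gaussian kernel.

    Assumption: one fixed sigma for all points (a simplification;
    no perplexity search is performed here).
    """
    n = len(points)
    P = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # Unnormalized Gaussian weights; a point is never its own neighbor.
        weights = [
            0.0 if i == j
            else math.exp(-squared_distance(points[i], points[j]) / (2.0 * sigma ** 2))
            for j in range(n)
        ]
        total = sum(weights)
        for j in range(n):
            P[i][j] = weights[j] / total
    return P


def low_dim_similarities(embedding):
    """Return Q with Q[i][j] = q_{ij} under a Student t kernel (1 d.o.f.).

    The weights (1 + d^2)^-1 decay much more slowly than a Gaussian,
    so moderately distant points can sit farther apart in the map.
    """
    n = len(embedding)
    W = [
        [0.0 if i == j else 1.0 / (1.0 + squared_distance(embedding[i], embedding[j]))
         for j in range(n)]
        for i in range(n)
    ]
    total = sum(sum(row) for row in W)
    return [[w / total for w in row] for row in W]


# Toy data: two nearby points and one distant point in 3-D.
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 3.0, 3.0)]
P = conditional_probabilities(points, sigma=2.0)

# A hypothetical 2-D embedding of the same three points.
embedding = [(0.0, 0.0), (1.0, 0.0), (4.0, 4.0)]
Q = low_dim_similarities(embedding)
```

Each row of `P` sums to 1 because `p_{j|i}` is a distribution over the neighbors of point `i`, while `Q` is a single joint distribution over all pairs. A full implementation would then move the embedding points to reduce the Kullback-Leibler divergence between the two sets of similarities; that optimization step is omitted here.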