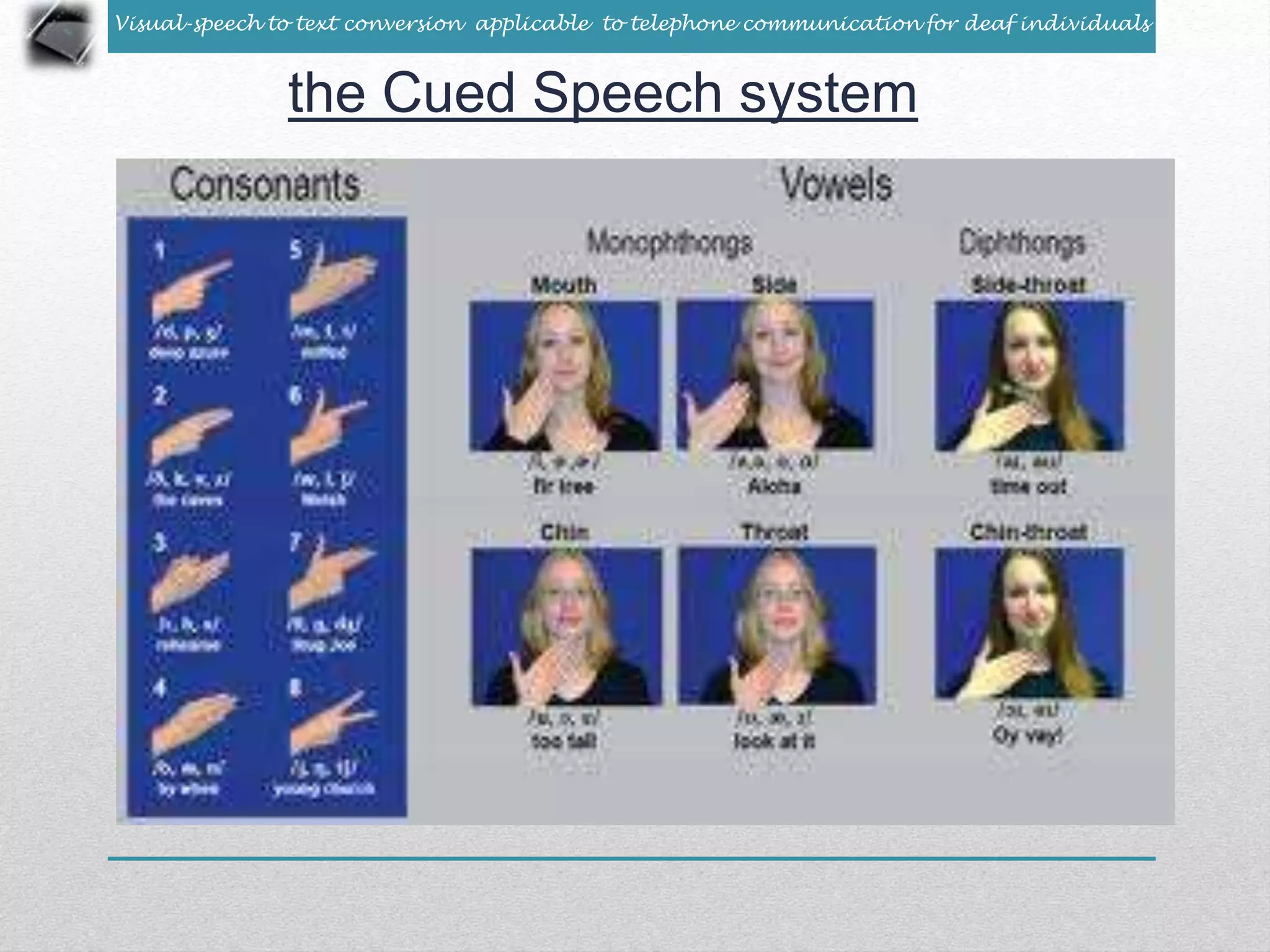

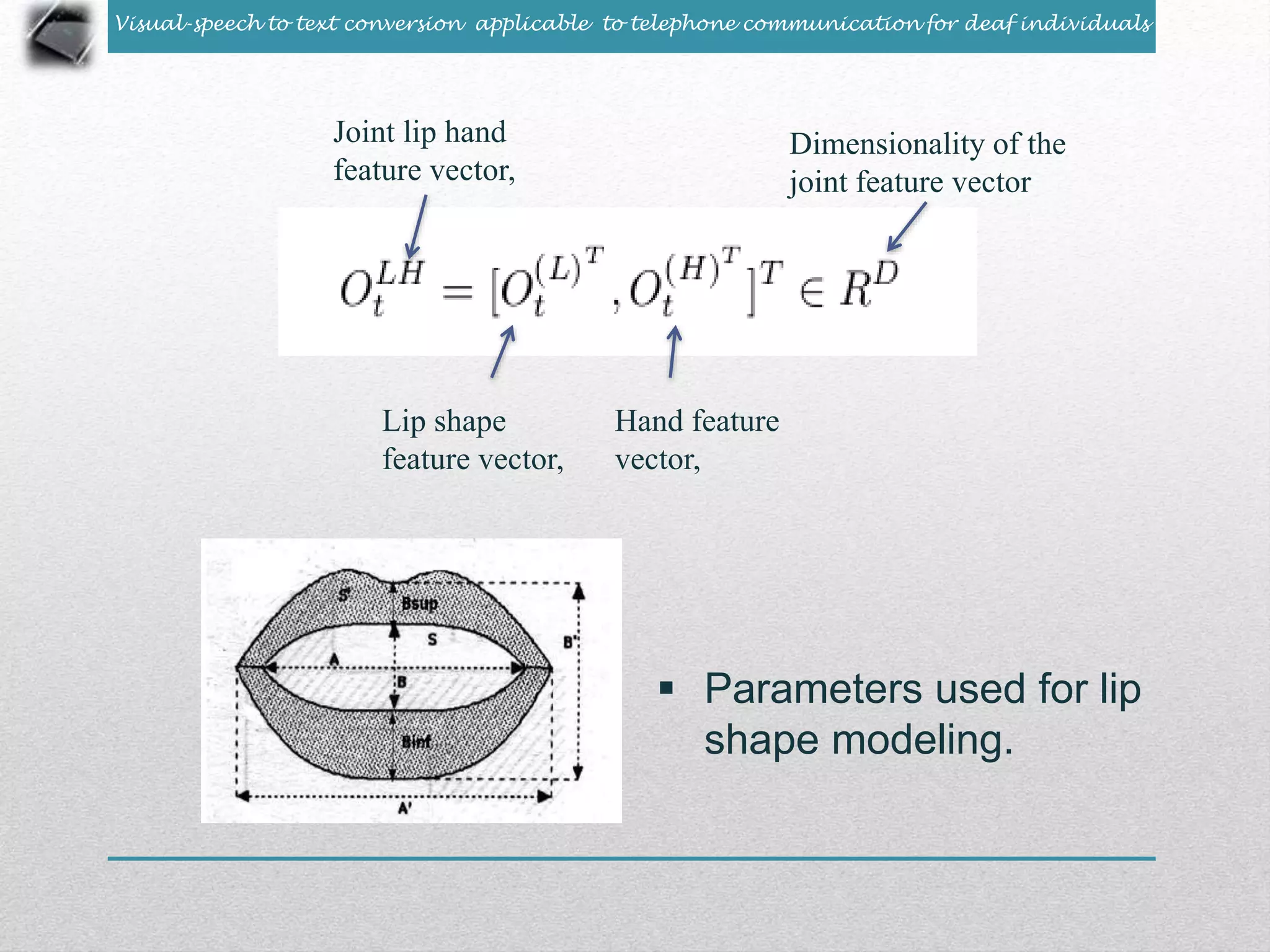

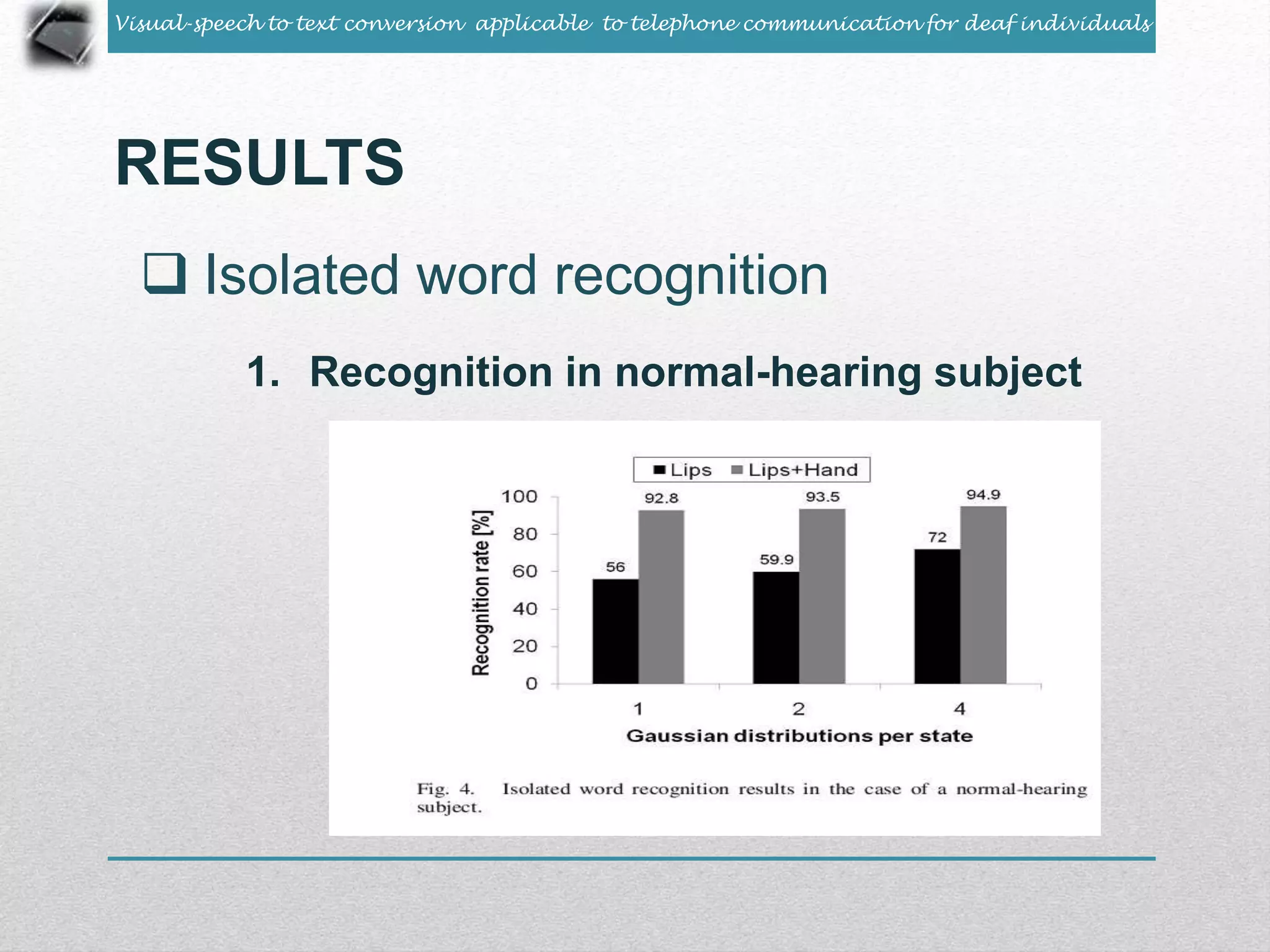

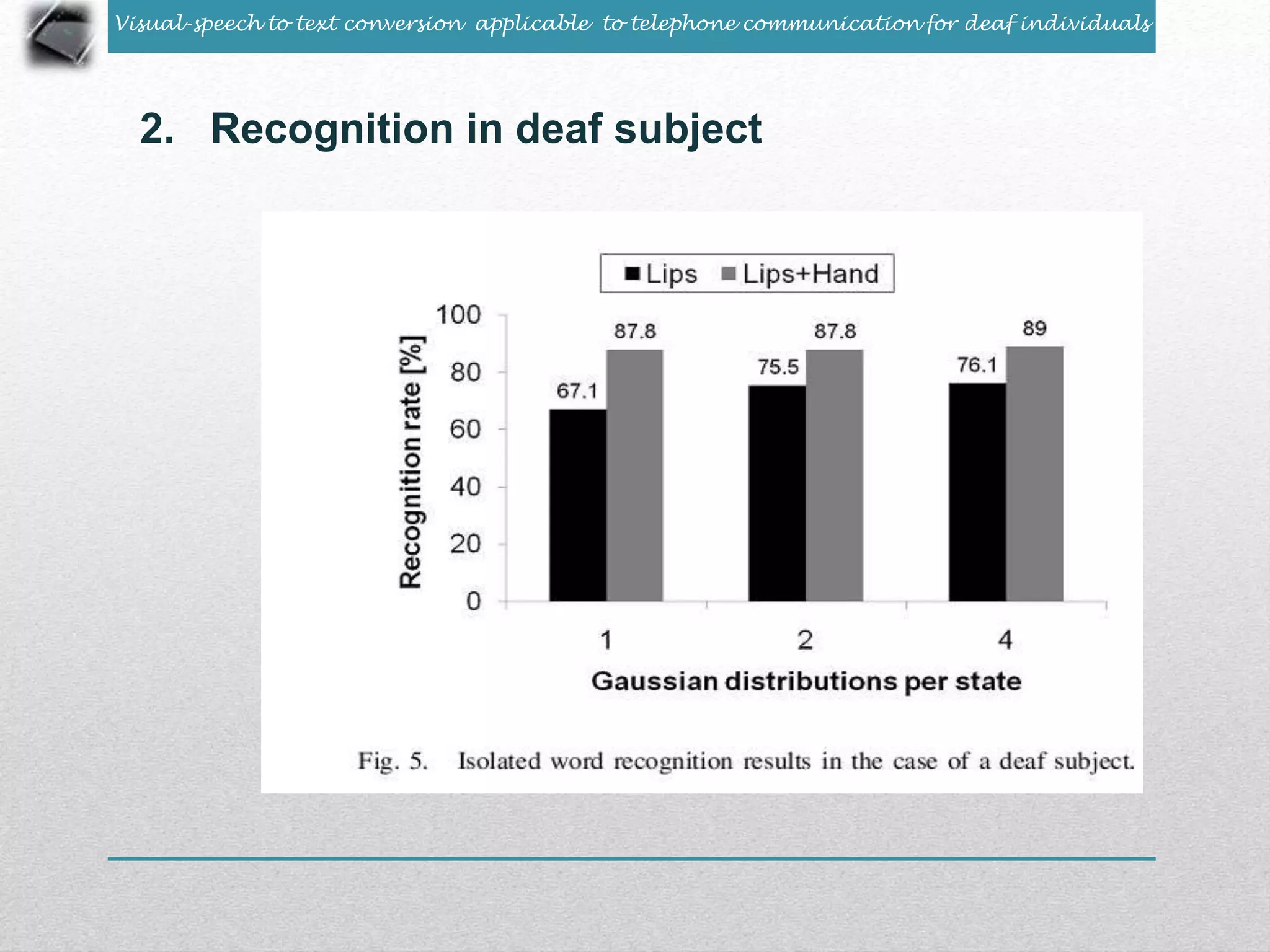

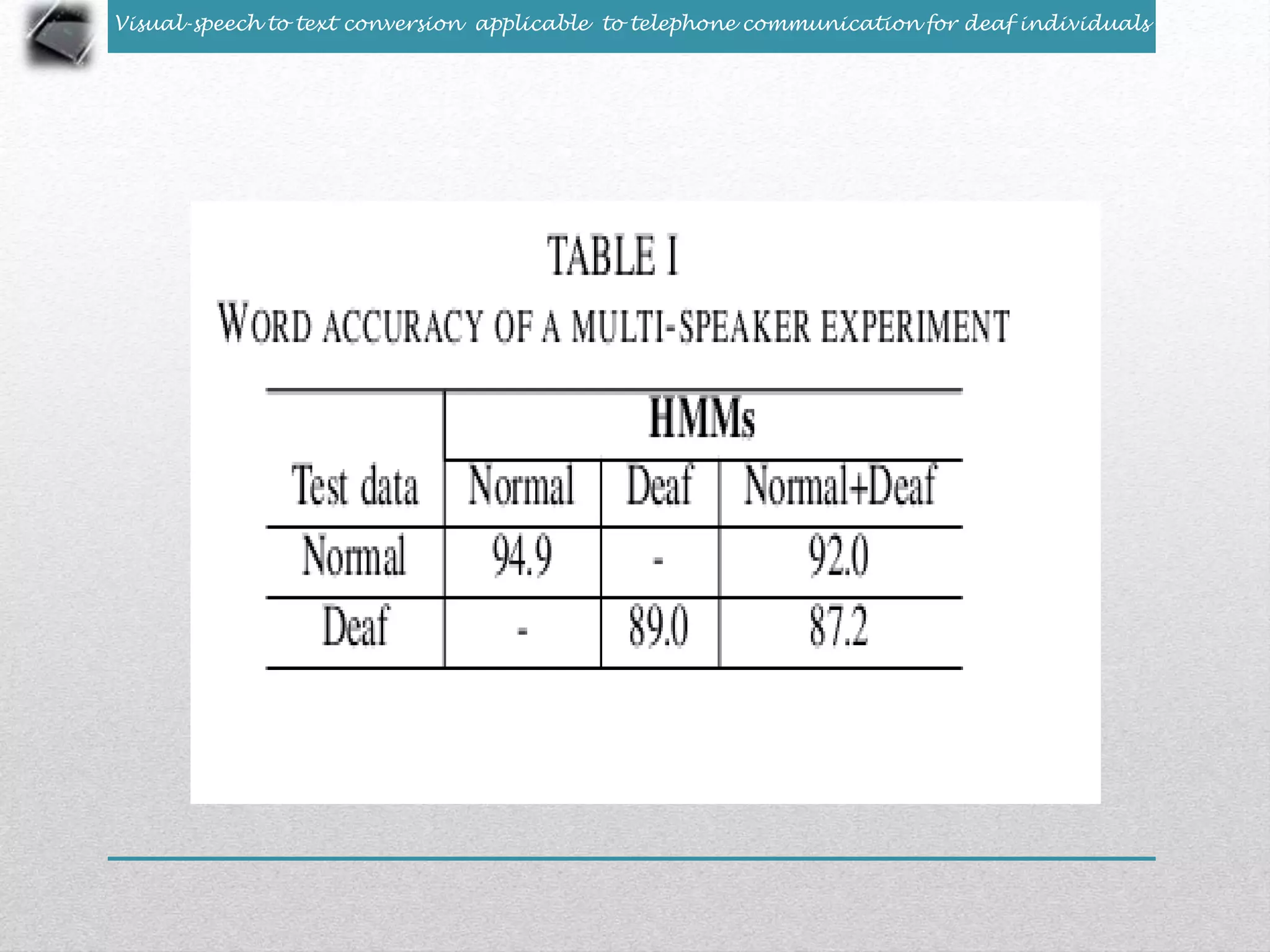

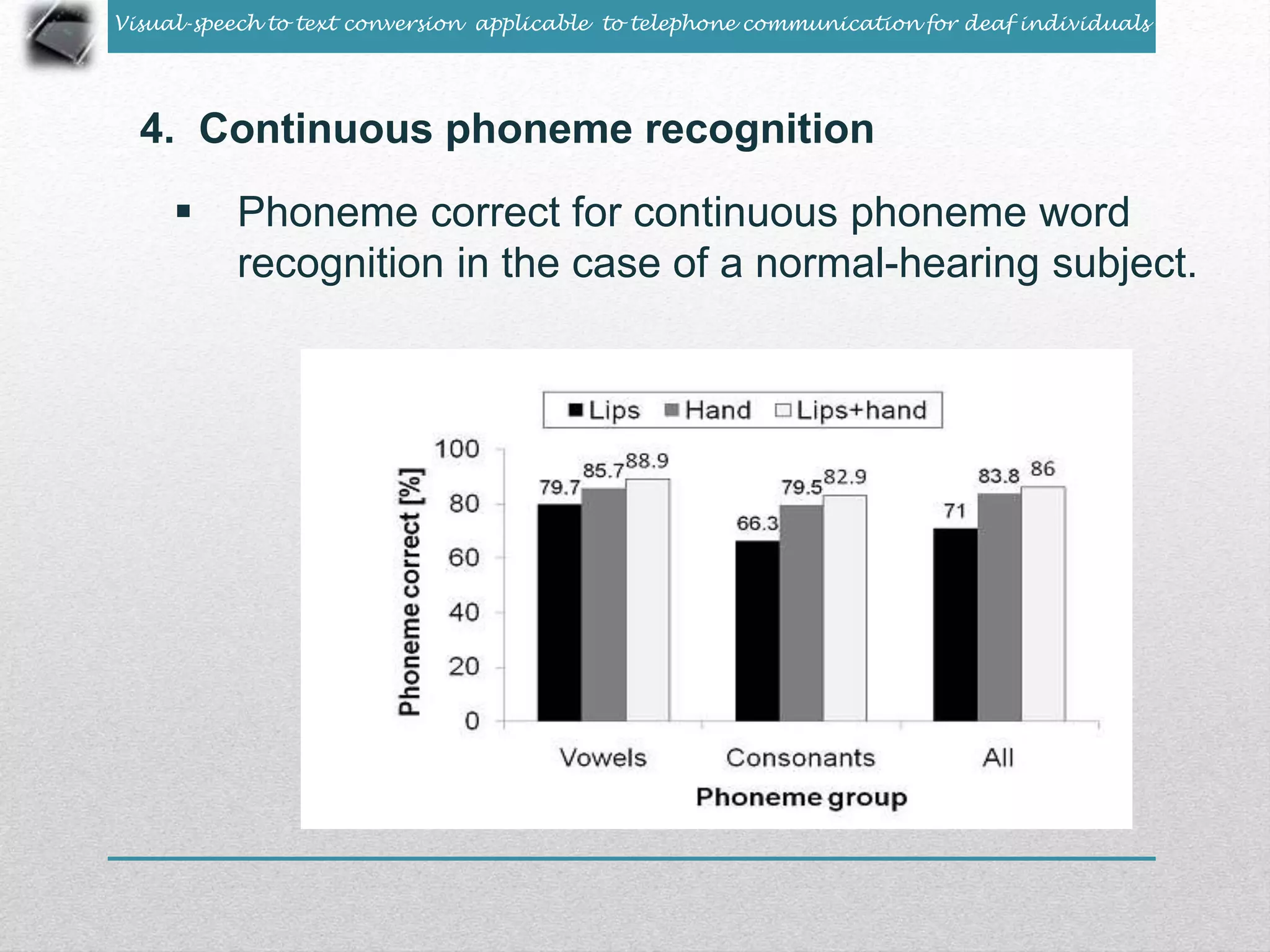

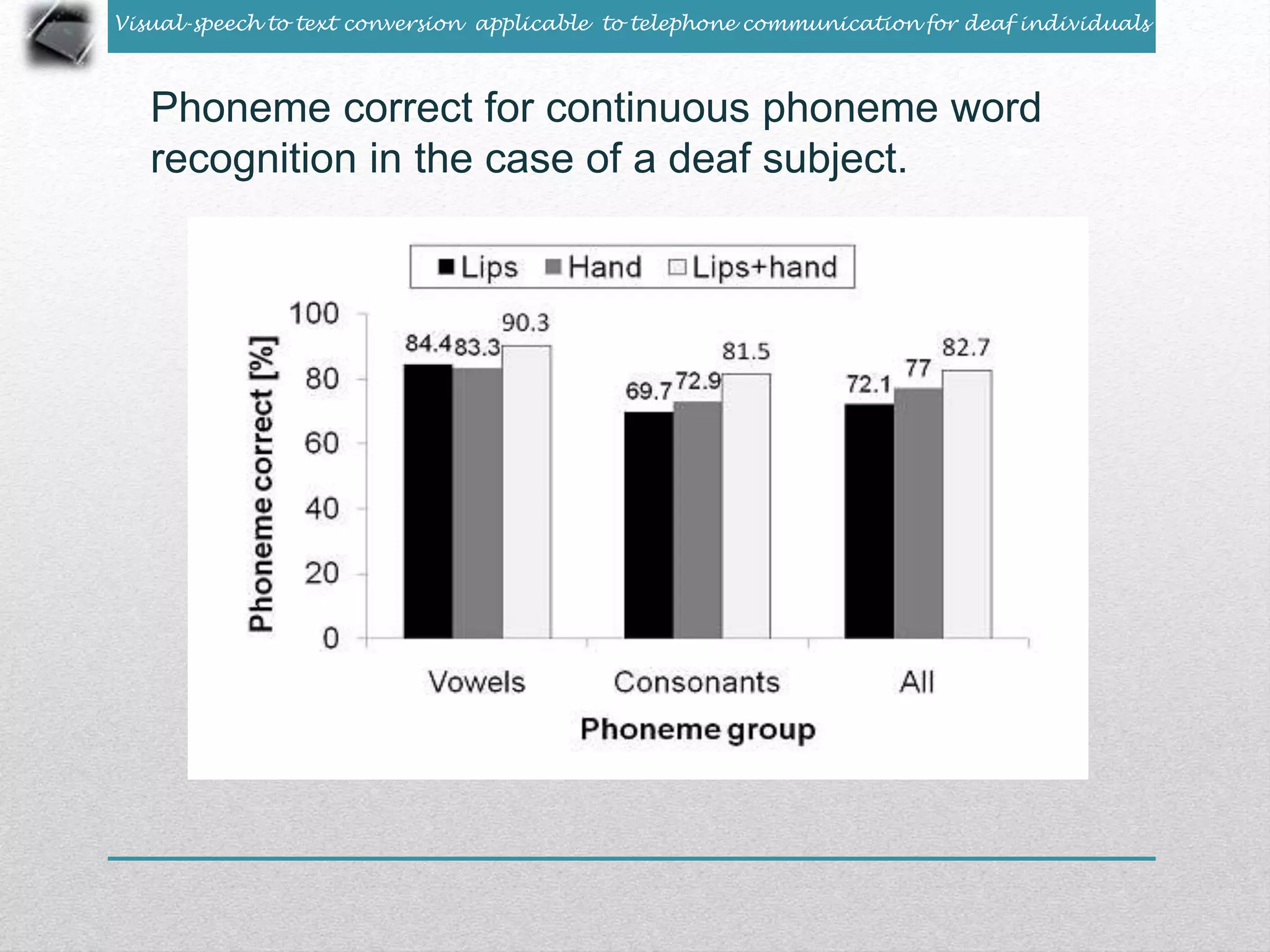

This document describes a system that converts visual speech to text so that deaf individuals can communicate by telephone. The system automatically recognizes Cued Speech gestures and transcribes them as text. The researchers extracted lip shapes and hand coordinates from video recordings of people using Cued Speech. They then used hidden Markov models (HMMs) with feature fusion to combine the lip and hand information for two tasks: isolated word recognition and continuous phoneme recognition. The system reached 86% to 94% accuracy on isolated words and 82% to 89% on continuous phonemes, which suggests that automatic conversion of Cued Speech to text is feasible.
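The idea of fusing lip and hand features and scoring them with HMMs can be illustrated with a small sketch. It makes several assumptions that the summary does not confirm. Fusion is treated as simple concatenation of the per-frame lip and hand vectors ("early" fusion), and each word model is a left-to-right HMM with one diagonal Gaussian per state. Isolated words are recognized by choosing the model with the highest forward-algorithm log-likelihood. The names `fuse_features`, `GaussianHMM`, and `recognize`, along with every parameter value, are hypothetical. This is not the authors' implementation, and training (for example, Baum-Welch) is omitted.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def fuse_features(lip: Sequence[Vector], hand: Sequence[Vector]) -> List[Vector]:
    """Concatenative (early) fusion: join lip and hand vectors frame by frame.

    Assumes both streams are already synchronised to the same frame rate.
    """
    if len(lip) != len(hand):
        raise ValueError("lip and hand streams must have the same number of frames")
    return [list(l) + list(h) for l, h in zip(lip, hand)]


def log_gaussian(x: Vector, mean: Vector, var: Vector) -> float:
    """Log-density of x under a Gaussian with diagonal covariance."""
    return sum(
        -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, mean, var)
    )


def log_sum_exp(values: List[float]) -> float:
    """Numerically stable log(sum(exp(values)))."""
    top = max(values)
    if top == -math.inf:
        return -math.inf
    return top + math.log(sum(math.exp(v - top) for v in values))


class GaussianHMM:
    """HMM with one diagonal Gaussian per state (hypothetical, untrained parameters)."""

    def __init__(self, trans: List[List[float]], means: List[Vector], variances: List[Vector]):
        self.n = len(means)
        # Zero transition probabilities become -inf in log space.
        self.log_trans = [[math.log(p) if p > 0 else -math.inf for p in row] for row in trans]
        self.means = means
        self.variances = variances

    def log_likelihood(self, obs: List[Vector]) -> float:
        """Forward algorithm in log space; the model is assumed to start in state 0."""
        alpha = [-math.inf] * self.n
        alpha[0] = log_gaussian(obs[0], self.means[0], self.variances[0])
        for x in obs[1:]:
            alpha = [
                log_sum_exp([alpha[i] + self.log_trans[i][j] for i in range(self.n)])
                + log_gaussian(x, self.means[j], self.variances[j])
                for j in range(self.n)
            ]
        return log_sum_exp(alpha)


def recognize(models: Dict[str, GaussianHMM], obs: List[Vector]) -> str:
    """Isolated-word recognition: return the word whose HMM scores obs highest."""
    return max(models, key=lambda word: models[word].log_likelihood(obs))


if __name__ == "__main__":
    # Two hypothetical two-state word models over 2-D fused features (1 lip + 1 hand).
    trans = [[0.7, 0.3], [0.0, 1.0]]
    models = {
        "word_a": GaussianHMM(trans, [[0.0, 0.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]]),
        "word_b": GaussianHMM(trans, [[5.0, 5.0], [6.0, 6.0]], [[1.0, 1.0], [1.0, 1.0]]),
    }
    lip = [[0.1], [0.0], [0.9], [1.1]]
    hand = [[-0.1], [0.2], [1.0], [0.8]]
    print(recognize(models, fuse_features(lip, hand)))  # expected: word_a
```

Concatenation is only one way to fuse the two streams. Multi-stream HMMs, which weight the lip and hand likelihoods separately, are a common alternative. Continuous phoneme recognition would usually chain phoneme HMMs and decode the sequence with the Viterbi algorithm rather than scoring whole words independently.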