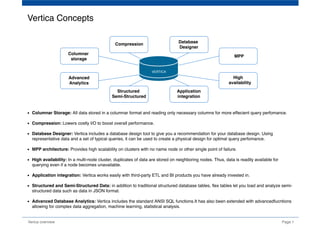

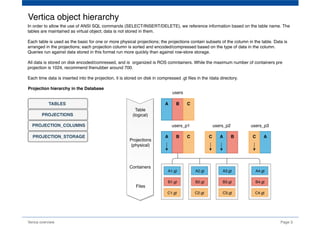

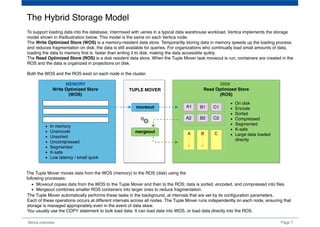

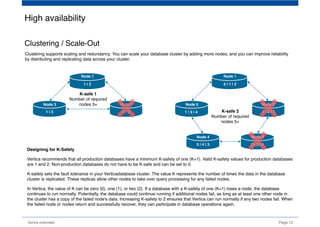

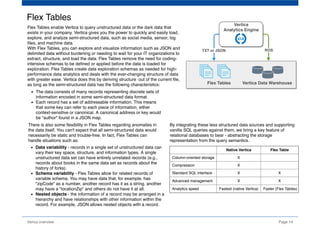

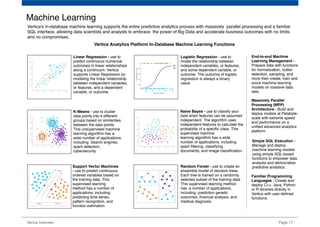

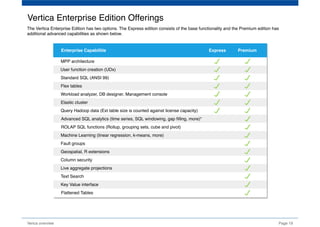

The document provides an overview of Vertica, a columnar (column-oriented) database known for fast query performance, which it achieves through compression and optimized data storage. It highlights architectural features such as MPP (massively parallel processing), high availability through redundant copies of data stored across nodes, and flex tables for handling both structured and semi-structured data. It also describes the database design tool (Database Designer), which helps users optimize their schema and physical data layout for better analytics performance and storage efficiency.
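The summary names two architectural ideas: columnar storage with compression, and MPP distribution with redundant copies of data across nodes. The following is a minimal toy sketch of both in Python. It is not Vertica's implementation. The sample table, the run-length encoding scheme, the `crc32`-based hash, and the rule that the copy lives on the "next" node are all illustrative assumptions.

```python
from collections import defaultdict
from zlib import crc32

# Hypothetical toy table: (region, year, sales). Not Vertica's storage format.
ROWS = [
    ("west", 2024, 9),
    ("east", 2023, 10),
    ("east", 2024, 7),
    ("west", 2023, 5),
    ("east", 2023, 12),
    ("west", 2024, 11),
]
NAMES = ["region", "year", "sales"]


def to_columns(rows, names):
    """Pivot rows into one list per column (column-oriented layout)."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


def rle_encode(values):
    """Run-length encode a column as a list of (value, run_length) pairs."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)
        else:
            runs.append((v, 1))
    return runs


def rle_decode(runs):
    """Expand (value, run_length) pairs back into the original column."""
    return [v for v, n in runs for _ in range(n)]


def total_sales(columns, region):
    """Answer a query by reading only the two columns it needs."""
    return sum(s for r, s in zip(columns["region"], columns["sales"]) if r == region)


def segment(rows, num_nodes):
    """Hash-distribute rows across nodes; keep a second copy on the next node."""
    primary, buddy = defaultdict(list), defaultdict(list)
    for row in rows:
        node = crc32(repr(row).encode()) % num_nodes
        primary[node].append(row)
        buddy[(node + 1) % num_nodes].append(row)
    return primary, buddy


# Sorting before encoding groups equal values into long runs.
columns = to_columns(sorted(ROWS), NAMES)
encoded = {name: rle_encode(col) for name, col in columns.items()}
primary, buddy = segment(ROWS, num_nodes=3)

print(encoded["region"])              # [('east', 3), ('west', 3)]
print(total_sales(columns, "east"))   # 29
```

Sorting the data before encoding is what makes run-length encoding effective here: the `region` column collapses from six values to two runs. The `segment` function places every row on two different nodes, so losing any single node still leaves one copy of each row.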