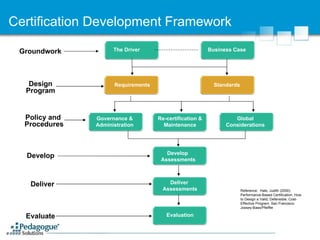

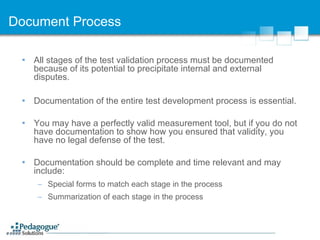

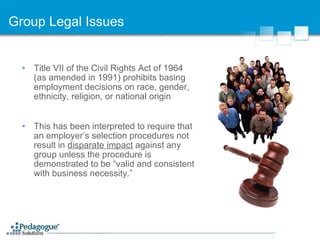

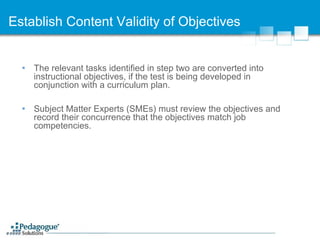

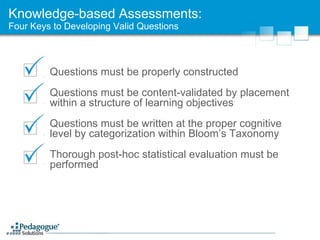

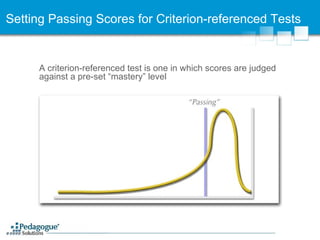

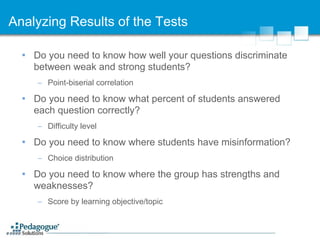

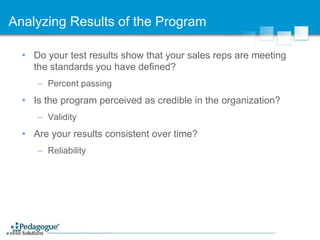

The document outlines a certification program for sales representatives, covering its design, development, and evaluation. It emphasizes valid assessments, governance, job competency analysis, and legal considerations in certification, particularly in the pharmaceutical industry. Workshop participants will learn about the types of certification, competitive advantages, and the steps needed to implement an effective certification process.

![Questions? Gregory Sapnar Bristol-Myers Squibb [email_address] 609-897-4307 Steven B. Just Ed.D. Pedagogue Solutions [email_address] 609-921-7585 x12](https://image.slidesharecdn.com/version6spbt2007prs-124468363867-phpapp02/85/Version-6-Spbt-2007-Prs-90-320.jpg)