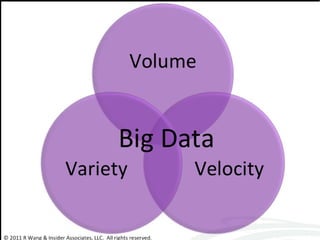

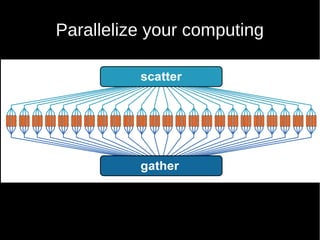

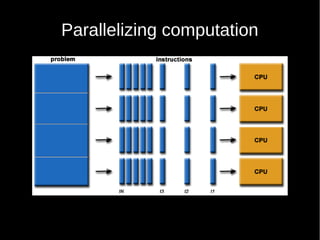

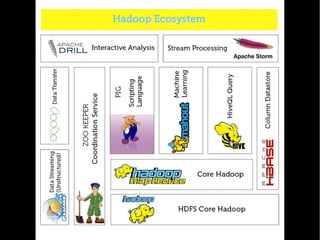

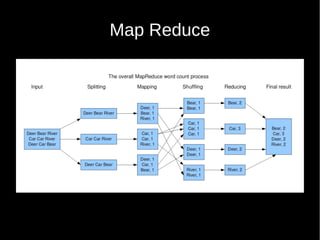

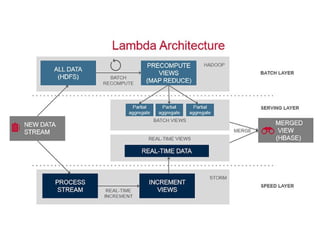

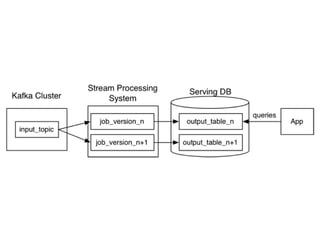

The document discusses the rapid growth of data, noting that 90% of the world's data was created in the last two years and that traditional data management methods can no longer keep up. It introduces distributed file systems and parallel computing as ways to handle large data volumes efficiently, and presents Hadoop and MapReduce as tools for handling data variety and scaling computation.
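
As a rough illustration of the MapReduce model named in the summary, the sketch below counts words across text chunks in three phases: map, shuffle, and reduce. It is a single-process toy in plain Python, not Hadoop's API. The function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) and the sample input are assumptions made for this example and do not come from the source document. In a real cluster, each chunk would typically be a block of a file stored in a distributed file system, and the map and reduce calls would run in parallel on different machines.

```python
from collections import defaultdict
from itertools import chain


def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle_phase(pairs):
    """Group emitted values by key, as a framework would between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values collected for one key into a single count."""
    return key, sum(values)


def word_count(chunks):
    """Run map, shuffle, and reduce sequentially over a list of text chunks."""
    # Each chunk is mapped independently, which is what makes the map step parallelizable.
    mapped = chain.from_iterable(map_phase(chunk) for chunk in chunks)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    # Hypothetical input: two chunks standing in for two blocks of a large file.
    sample_chunks = ["big data big", "data grows fast"]
    print(word_count(sample_chunks))
```

Because every map call touches only its own chunk and every reduce call touches only one key, neither phase needs shared state. That independence is what allows the same computation to be spread across many machines as data volume grows.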