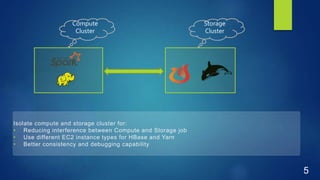

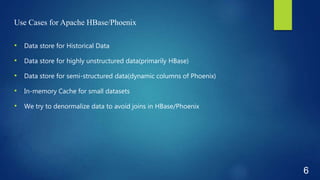

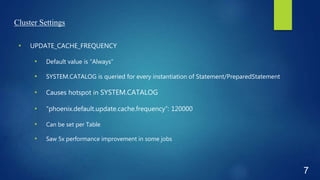

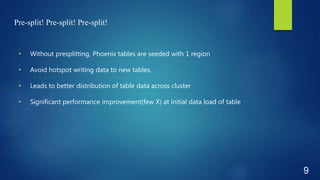

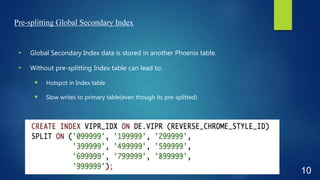

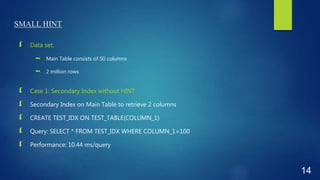

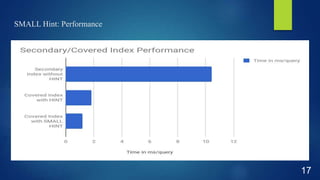

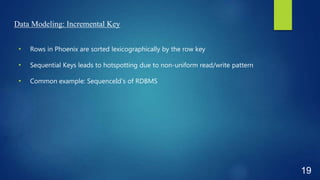

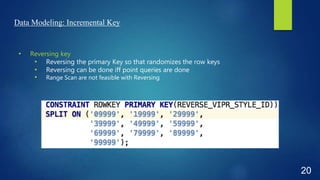

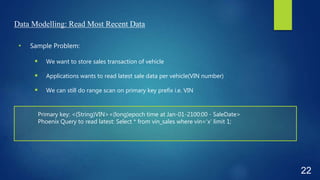

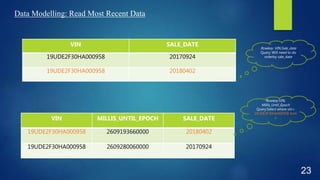

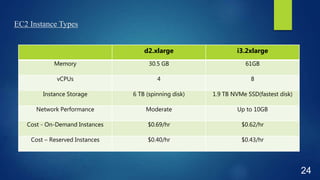

This document covers performance optimization techniques for Apache HBase and Apache Phoenix, based on TRUECar's experience running them. It opens with an agenda and an overview of TRUECar's data architecture, then describes TRUECar's HBase/Phoenix use cases and the optimizations applied at four levels: cluster settings, table settings, data modeling, and EC2 instance types. Specific techniques include pre-splitting tables, Bloom filters, the Phoenix query hints `SMALL` and `NO_CACHE`, in-memory storage, incremental keys, and moving to faster instance types such as i3.2xlarge.
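Several of the table- and query-level techniques listed above map directly onto Phoenix SQL. The snippet below is a minimal sketch under stated assumptions, not TRUECar's implementation. The table name `VEHICLE_EVENTS`, its columns, and the helper functions are hypothetical. It also assumes a `VARCHAR` row key that begins with a two-character hex hash prefix. That prefix is a salting-style technique substituted here so that sequential keys spread evenly across the pre-split regions; the slides may handle incremental keys differently. The DDL passes `BLOOMFILTER` and `IN_MEMORY` through as HBase column-family properties, so check the exact option spellings against your Phoenix version.

```python
"""Sketch: Phoenix DDL and hinted queries for a pre-split HBase table.

Hypothetical names: VEHICLE_EVENTS, ROW_KEY, PAYLOAD, and all helper
functions. Assumed row-key layout: 2 hex chars of hash prefix + natural key.
"""
from __future__ import annotations

import hashlib


def hex_split_points(num_regions: int, width: int = 2) -> list[str]:
    """Return num_regions - 1 evenly spaced split keys over a hex prefix space.

    With width=2 the prefix space is 00..ff (256 values). Lowercase hex sorts
    byte-wise in numeric order, which matches HBase's row-key ordering.
    """
    space = 16 ** width
    if not 1 <= num_regions <= space:
        raise ValueError("num_regions must be between 1 and 16**width")
    return [format(i * space // num_regions, f"0{width}x")
            for i in range(1, num_regions)]


def prefixed_key(natural_key: str, width: int = 2) -> str:
    """Prepend a stable hash prefix so monotonically increasing keys
    land in different regions instead of hotspotting the last one."""
    digest = hashlib.md5(natural_key.encode("utf-8")).hexdigest()
    return digest[:width] + natural_key


def create_table_ddl(table: str, splits: list[str]) -> str:
    """CREATE TABLE with a row-level Bloom filter, the IN_MEMORY
    column-family flag, and explicit split points (pre-splitting)."""
    split_list = ", ".join(f"'{s}'" for s in splits)
    return (
        f"CREATE TABLE IF NOT EXISTS {table} ("
        "ROW_KEY VARCHAR PRIMARY KEY, PAYLOAD VARCHAR) "
        "BLOOMFILTER='ROW', IN_MEMORY=true "
        f"SPLIT ON ({split_list})"
    )


def point_lookup_sql(table: str) -> str:
    """Small read: the SMALL hint tells Phoenix to expect little data."""
    return f"SELECT /*+ SMALL */ PAYLOAD FROM {table} WHERE ROW_KEY = ?"


def full_scan_sql(table: str) -> str:
    """Large one-off scan: NO_CACHE keeps scanned blocks out of the
    block cache so hot data for point lookups is not evicted."""
    return f"SELECT /*+ NO_CACHE */ ROW_KEY, PAYLOAD FROM {table}"


if __name__ == "__main__":
    splits = hex_split_points(4)  # ['40', '80', 'c0'] -> 4 regions
    print(create_table_ddl("VEHICLE_EVENTS", splits))
    print(point_lookup_sql("VEHICLE_EVENTS"))
    print(full_scan_sql("VEHICLE_EVENTS"))
    print(prefixed_key("1000001"))
```

The design keeps the split points and the key prefix in the same hex space, so each pre-split region receives roughly an equal share of writes from the start. Phoenix also offers a built-in `SALT_BUCKETS` table option that prefixes keys automatically. It is an alternative to the manual prefix plus `SPLIT ON` shown here, not something to combine with it.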