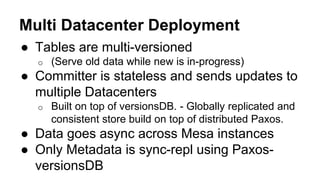

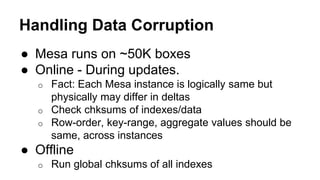

Mesa is Google's data warehousing system for the measurement data behind its advertising business. It provides an atomic, consistent, highly available, near real-time, and scalable data store. Mesa stores metadata in Bigtable and data files on Colossus. It processes millions of row updates per second and serves billions of queries per day, which together fetch trillions of rows, across tens of thousands of machines globally. Mesa uses a multi-versioned data model with pre-aggregation and supports multiple indexes.
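To make the multi-versioned, pre-aggregated model concrete, here is a minimal Python sketch. It is not Mesa's implementation. It assumes a single table whose values are combined with SUM, keeps everything in memory, and uses hypothetical names (`VersionedTable`, `apply_update`, `query`). Each update batch is pre-aggregated into one delta and given the next version number. A query at version `n` combines all deltas up to and including `n`.

```python
from collections import defaultdict


class VersionedTable:
    """Toy in-memory model of a versioned, pre-aggregated table (illustrative only)."""

    def __init__(self):
        # One pre-aggregated delta per version; the list index is the version number.
        self._deltas = []

    def apply_update(self, rows):
        """Pre-aggregate a batch of (key, value) rows and store it as a new version."""
        delta = defaultdict(int)
        for key, value in rows:
            delta[key] += value  # assumed aggregation function: SUM
        self._deltas.append(dict(delta))
        return len(self._deltas) - 1  # version number assigned to this batch

    def query(self, key, version=None):
        """Return the aggregated value for key as of the given version (default: latest)."""
        if version is None:
            version = len(self._deltas) - 1
        return sum(d.get(key, 0) for d in self._deltas[: version + 1])


table = VersionedTable()
v0 = table.apply_update([
    (("2014-01-01", "pub1"), 10),
    (("2014-01-01", "pub1"), 5),
    (("2014-01-01", "pub2"), 3),
])
v1 = table.apply_update([(("2014-01-01", "pub1"), 7)])

print(table.query(("2014-01-01", "pub1"), version=v0))  # value as of the first batch
print(table.query(("2014-01-01", "pub1")))              # value as of the latest batch
```

Because each query names a version, a reader at an older version keeps getting the same answer while newer batches are applied, which is the point of versioning in this sketch.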