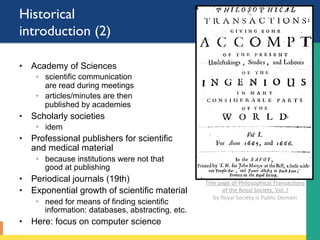

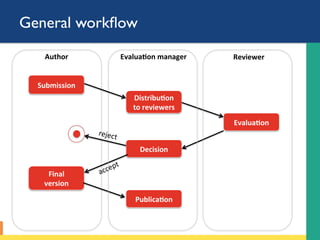

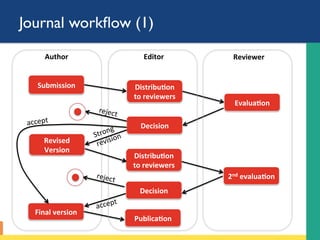

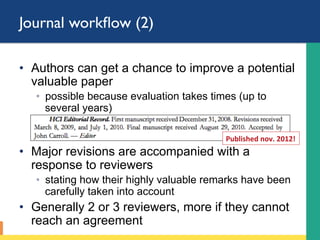

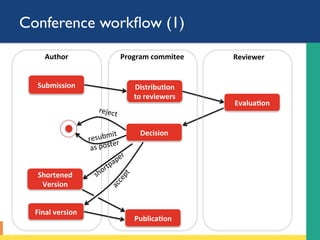

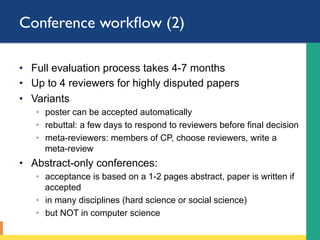

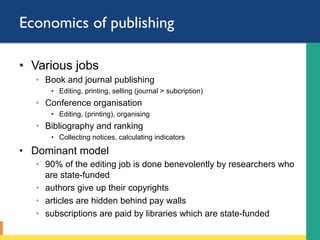

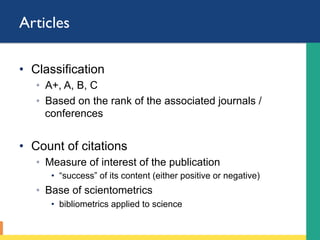

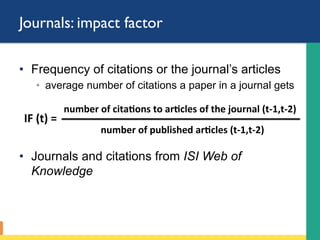

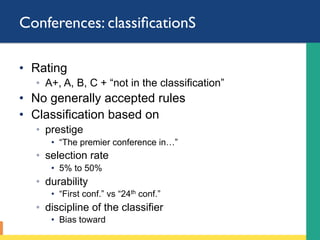

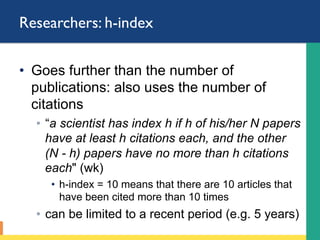

This document gives an overview of the methodology and tools of scientific publishing. The course it describes has two objectives: to understand the main facets of publishing (journals, conferences, books, and publication workflows), and to become familiar with publication-based evaluation metrics such as the impact factor and the h-index. The document also outlines the different types of scientific documents, the principles of publication, the economics of publishing, and bibliometrics.
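As a rough illustration of the two metrics named above, the sketch below computes them from their standard definitions. The function names, parameter names, and sample numbers are hypothetical and do not come from the course material. The h-index is taken as the largest h such that h papers have at least h citations each, and the impact factor is assumed to be the common two-year variant.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have >= rank citations
        else:
            break
    return h


def impact_factor(citations_this_year, citable_items_prior_two_years):
    """Two-year impact factor (assumed variant).

    citations_this_year: citations received this year by items the journal
        published in the two preceding years.
    citable_items_prior_two_years: number of citable items the journal
        published in those two years.
    """
    if citable_items_prior_two_years <= 0:
        raise ValueError("need at least one citable item")
    return citations_this_year / citable_items_prior_two_years


if __name__ == "__main__":
    # Made-up citation counts for one author's five papers.
    print(h_index([10, 8, 5, 4, 3]))
    # Made-up journal figures: 200 citations to 80 citable items.
    print(impact_factor(200, 80))
```

Sorting first keeps the h-index logic easy to check by hand: walk down the ranked list and stop at the first paper whose citation count falls below its rank.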