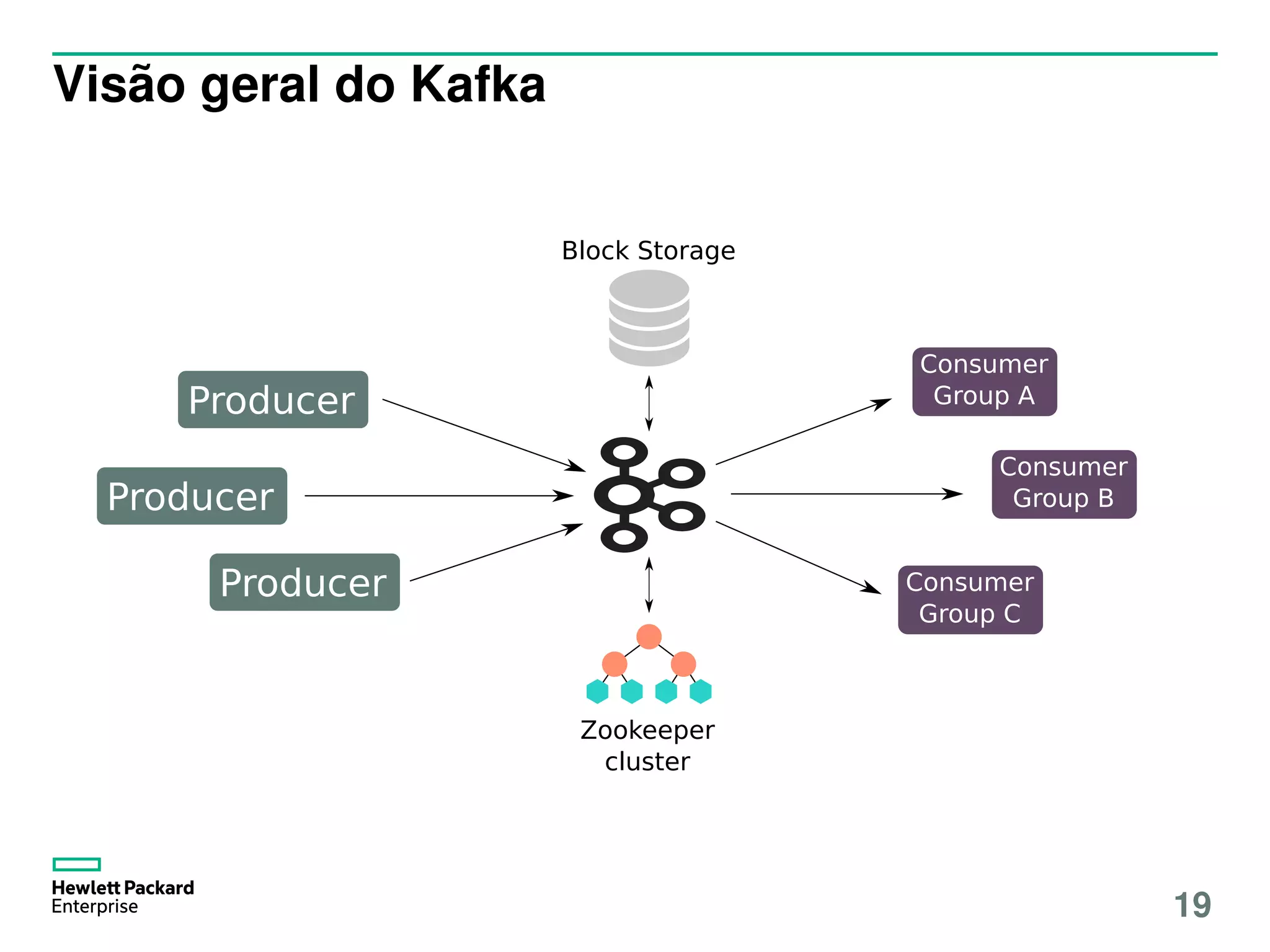

The document introduces Apache Kafka, an open-source distributed event streaming platform created by the architecture team at LinkedIn to provide high-throughput, scalable messaging infrastructure. Kafka stores streams of records in a fault-tolerant distributed commit log, and consumers read their data from that log. It also uses ZooKeeper to coordinate the nodes of a Kafka cluster.
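To make the commit-log idea concrete, here is a minimal in-memory sketch in Scala. It is not Kafka's implementation: `CommitLog`, `append`, and `read` are hypothetical names chosen for illustration, and the sketch ignores partitioning, replication, and persistence. It only shows the two properties the summary relies on: records are appended at the end and get a monotonically increasing offset, and reading never removes anything, so each consumer can track its own position.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical in-memory stand-in for a single append-only log.
final class CommitLog[A] {
  private val records = ArrayBuffer.empty[A]

  /** Appends a record at the end and returns the offset assigned to it. */
  def append(record: A): Long = {
    records += record
    records.size - 1L
  }

  /** Returns up to `max` records starting at `offset`; the log is left untouched. */
  def read(offset: Long, max: Int): Seq[A] =
    records.slice(offset.toInt, offset.toInt + max).toList
}

object CommitLogDemo {
  def main(args: Array[String]): Unit = {
    val log = new CommitLog[String]
    log.append("first")
    log.append("second")
    log.append("third")

    // Two independent readers, each holding its own offset.
    println(log.read(offset = 0L, max = 2)) // a reader starting from the beginning
    println(log.read(offset = 2L, max = 2)) // a reader that has already seen two records
  }
}
```

Because reads are non-destructive, a slow reader and a fast reader can share the same log without interfering with each other, much like several `tail -f` processes following one file.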

![Log? Log as in log files?

$ sudo tail -n 5 -f /var/log/syslog

Jun 4 19:37:07 inception systemd[1]: Time has been ch ...

Jun 4 19:37:07 inception systemd[1]: apt-daily.timer: ...

Jun 4 19:39:13 inception systemd[1]: Started Cleanup ...

Jun 4 19:40:01 inception CRON[5892]: (root) CMD (test ...

Jun 4 19:44:51 inception org.kde.kaccessibleapp[6056] ...

_

6](https://image.slidesharecdn.com/tdc-kafka-pedro-duarte-20161007174353-161011203847/75/TDC2016POA-Trilha-Arquitetura-Apache-Kafka-uma-introducao-a-logs-distribuidos-6-2048.jpg)

![Log? Log as in log files?

$ sudo tail -n 5 -f /var/log/syslog

Jun 4 19:37:07 inception systemd[1]: Time has been ch ...

Jun 4 19:37:07 inception systemd[1]: apt-daily.timer: ...

Jun 4 19:39:13 inception systemd[1]: Started Cleanup ...

Jun 4 19:40:01 inception CRON[5892]: (root) CMD (test ...

Jun 4 19:44:51 inception org.kde.kaccessibleapp[6056] ...

Jun 4 19:49:02 inception ntpd[711]: receive: Unexpect ...

_

6](https://image.slidesharecdn.com/tdc-kafka-pedro-duarte-20161007174353-161011203847/75/TDC2016POA-Trilha-Arquitetura-Apache-Kafka-uma-introducao-a-logs-distribuidos-7-2048.jpg)

![Log? Log as in log files?

$ sudo tail -n 5 -f /var/log/syslog

Jun 4 19:37:07 inception systemd[1]: Time has been ch ...

Jun 4 19:37:07 inception systemd[1]: apt-daily.timer: ...

Jun 4 19:39:13 inception systemd[1]: Started Cleanup ...

Jun 4 19:40:01 inception CRON[5892]: (root) CMD (test ...

Jun 4 19:44:51 inception org.kde.kaccessibleapp[6056] ...

Jun 4 19:49:02 inception ntpd[711]: receive: Unexpect ...

Jun 4 19:55:31 inception kernel: [11996.667253] hrtim ...

_

6](https://image.slidesharecdn.com/tdc-kafka-pedro-duarte-20161007174353-161011203847/75/TDC2016POA-Trilha-Arquitetura-Apache-Kafka-uma-introducao-a-logs-distribuidos-8-2048.jpg)

26](https://image.slidesharecdn.com/tdc-kafka-pedro-duarte-20161007174353-161011203847/75/TDC2016POA-Trilha-Arquitetura-Apache-Kafka-uma-introducao-a-logs-distribuidos-28-2048.jpg)

/* just try to send data */
val future: Future[RecordMetadata] = producer.send(message)

/* try to send data and call me back after it */
val futureAndCallback: Future[RecordMetadata] =
  producer.send(message,
    new Callback() {
      override def onCompletion(metadata: RecordMetadata, exception: Exception): Unit = {
        /* (metadata XOR exception) is non-null :( */
      }
    })

producer.close() /* release */

27](https://image.slidesharecdn.com/tdc-kafka-pedro-duarte-20161007174353-161011203847/75/TDC2016POA-Trilha-Arquitetura-Apache-Kafka-uma-introducao-a-logs-distribuidos-29-2048.jpg)

28](https://image.slidesharecdn.com/tdc-kafka-pedro-duarte-20161007174353-161011203847/75/TDC2016POA-Trilha-Arquitetura-Apache-Kafka-uma-introducao-a-logs-distribuidos-30-2048.jpg)

![Consumer in Scala (>= 0.9.0.0)

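/* explicit Java-to-Scala conversion, assuming no implicit JavaConversions import is in scope */
import scala.collection.JavaConverters._

/* subscribe to as many topics as you like */
consumer.subscribe(Arrays.asList("the_destination_topic"))

while (true) {
  /* argument is the timeout in millis */
  val records: ConsumerRecords[String, String] = consumer.poll(100)
  records.asScala foreach { record: ConsumerRecord[String, String] =>
    /* the s prefix is required for ${...} interpolation */
    log.info(s"${record.topic()} is at ${record.offset()}")
  }
}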

29](https://image.slidesharecdn.com/tdc-kafka-pedro-duarte-20161007174353-161011203847/75/TDC2016POA-Trilha-Arquitetura-Apache-Kafka-uma-introducao-a-logs-distribuidos-31-2048.jpg)