SVMs are classifiers derived from statistical learning theory that maximize the margin between decision boundaries. They work by mapping data to a higher dimensional space using kernels to allow for nonlinear decision boundaries. SVMs find the linear decision boundary that maximizes the margin between the closest data points of each class by solving a quadratic programming optimization problem.

![The solution of which gives the optimal values:

a0,1 =a0,2 =a0,3 =a0,4 =1/8

w0 = Σ a0,i di φ(xi)

= 1/8[φ(x1)- φ(x2)- φ(x3)+ φ(x4)]

1 1 1 1 0

1 1 1 1 0

1 2 − 2 − 2 2 − 1

= − + − − = 2

8 1 1 1 1 0

− 2 − 2 2

2 0

− 2 2 − 2

2 0

Where the first element of w0 gives the bias b](https://image.slidesharecdn.com/svm2-120517231712-phpapp02/85/Svm-58-320.jpg)

![Hyperplane is defined by

m1

∑w ϕ ( x ) + b = 0

j =1

j j

or

m1

∑w ϕ ( x ) = 0;

j =0

j j where ϕ0 ( x ) = 1

writing : ϕ( x ) = [ϕ0 ( x ), ϕ1 ( x ),..., ϕm1 ( x )]

w ϕ( x ) = 0

T

we get :

N

from optimality conditions : w = ∑ai d i ϕ( x i )

i =1](https://image.slidesharecdn.com/svm2-120517231712-phpapp02/85/Svm-65-320.jpg)

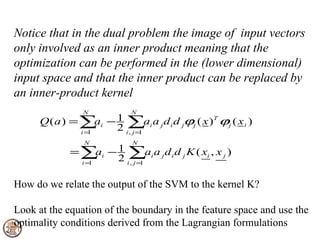

![We therefore only need a suitable choice of kernel function cf:

Mercer’s Theorem:

Let K(x,y) be a continuous symmetric kernel that defined in the

closed interval [a,b]. The kernel K can be expanded in the form

Κ (x,y) = φ(x) T φ(y)

provided it is positive definite. Some of the usual choices for K are:

Polynomial SVM (x T xi+1)p p specified by user

RBF SVM exp(-1/(2σ2) || x – xi||2) σ specified by user

MLP SVM tanh(s0 x T xi + s1)](https://image.slidesharecdn.com/svm2-120517231712-phpapp02/85/Svm-68-320.jpg)