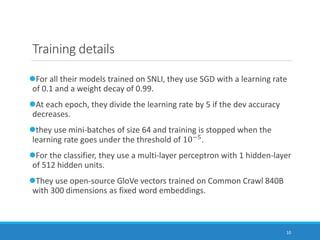

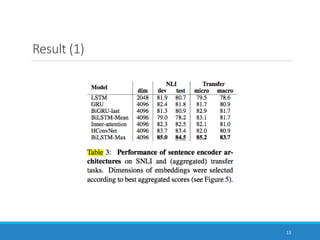

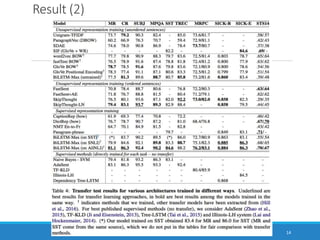

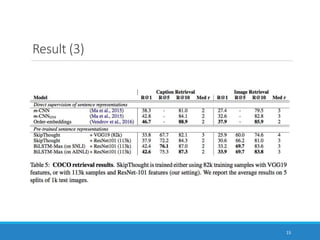

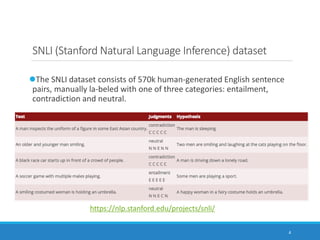

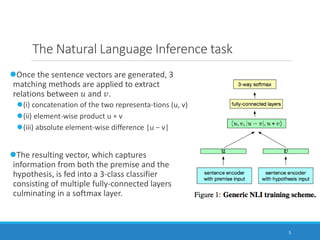

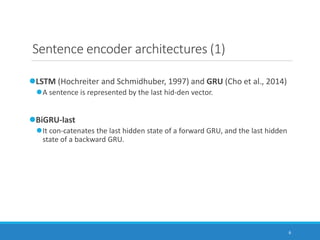

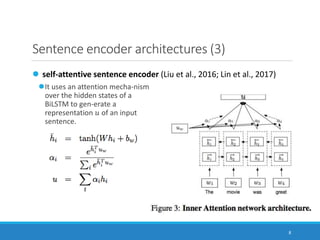

This document summarizes the paper "Supervised Learning of Universal Sentence Representations from Natural Language Inference Data". The researchers trained sentence embeddings on supervised data from the Stanford Natural Language Inference (SNLI) dataset and compared several sentence encoder architectures. A BiLSTM network with max pooling produced the best-performing universal sentence representations, outperforming prior unsupervised methods on 12 transfer tasks. The representations learned from the natural language inference data consistently achieved state-of-the-art performance across multiple downstream tasks.
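To make the "max pooling" step concrete, here is a minimal sketch of how a fixed-size sentence vector can be obtained from per-token hidden states by taking the element-wise maximum over time steps. The hidden-state values and the function name `max_pool_over_time` are hypothetical and chosen only for illustration; a real encoder would produce these states with a trained BiLSTM.

```python
from typing import List


def max_pool_over_time(hidden_states: List[List[float]]) -> List[float]:
    """Return the element-wise maximum across time steps.

    hidden_states holds one vector per token; all vectors share the same size.
    """
    if not hidden_states:
        raise ValueError("need at least one time step")
    # zip(*...) groups the values of each dimension across all time steps.
    return [max(dim_values) for dim_values in zip(*hidden_states)]


# Hypothetical BiLSTM outputs for a 3-token sentence. Each row stands in for
# the concatenation of a forward and a backward hidden state (2 + 2 dims).
states = [
    [0.1, -0.4, 0.3, 0.0],
    [0.7, 0.2, -0.1, 0.5],
    [-0.2, 0.9, 0.6, -0.3],
]

sentence_vector = max_pool_over_time(states)
print(sentence_vector)  # [0.7, 0.9, 0.6, 0.5]
```

The resulting vector has the same size regardless of sentence length, which is what allows it to be reused as a fixed-size feature for downstream tasks.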

![Sentence encoder architectures (4): Hierarchical ConvNet](https://image.slidesharecdn.com/paperreadingmt-supervisedlearningofuniversalsentencerepresentationsfromnaturallanguageinferencedata-171216153412/85/Paper-Reading-Supervised-Learning-of-Universal-Sentence-Representations-from-Natural-Language-Inference-Data-10-320.jpg)

Sentence encoder architectures (4): Hierarchical ConvNet

It is like AdaSent (Zhao et al., 2015). The final representation 𝑢 = [𝑢1, 𝑢2, 𝑢3, 𝑢4] concatenates representations at different levels of the input sentence.
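The concatenation 𝑢 = [𝑢1, 𝑢2, 𝑢3, 𝑢4] can be sketched as follows. This is a toy illustration, not the authors' implementation: it assumes each level's representation is obtained by max-pooling the output of one convolution layer over positions, and it replaces the learned convolution with a hypothetical fixed depthwise filter (`toy_conv_layer`, with made-up `taps`) so the example stays self-contained.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # one row per token position, one column per feature


def toy_conv_layer(x: Matrix, taps: List[float]) -> Matrix:
    """Depthwise 1D convolution with zero padding and ReLU.

    A toy stand-in for a learned convolution layer (assumption for illustration).
    """
    pad = len(taps) // 2
    dim = len(x[0])
    out: Matrix = []
    for t in range(len(x)):
        row = []
        for d in range(dim):
            total = 0.0
            for j, w in enumerate(taps):
                pos = t + j - pad
                if 0 <= pos < len(x):  # positions outside the sentence count as zero
                    total += w * x[pos][d]
            row.append(max(0.0, total))  # ReLU
        out.append(row)
    return out


def max_pool_over_time(x: Matrix) -> List[float]:
    """Element-wise maximum over token positions."""
    return [max(col) for col in zip(*x)]


def hierarchical_encode(
    x: Matrix, taps: List[float], num_levels: int = 4
) -> Tuple[List[float], List[List[float]]]:
    """Stack conv layers, pool each level, and concatenate the pooled vectors."""
    levels = []
    h = x
    for _ in range(num_levels):
        h = toy_conv_layer(h, taps)
        levels.append(max_pool_over_time(h))  # u_i for this level
    u = [value for level in levels for value in level]  # u = [u1, u2, u3, u4]
    return u, levels


# Hypothetical 2-dimensional word embeddings for a 3-token sentence.
embeddings = [[1.0, 0.0], [0.0, 2.0], [3.0, 1.0]]
u, levels = hierarchical_encode(embeddings, taps=[0.5, 1.0, 0.5])
print(len(levels), len(u))  # 4 8
```

Because each level sees a wider context than the one before it, concatenating the pooled vectors keeps both local and broader views of the sentence in a single representation.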