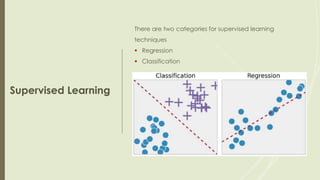

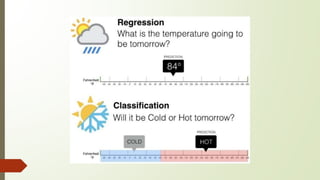

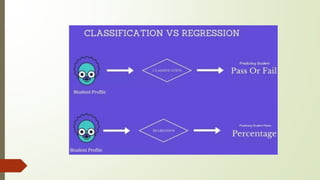

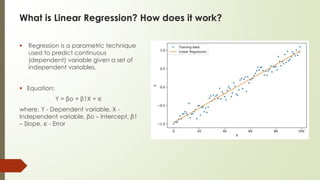

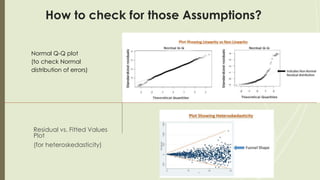

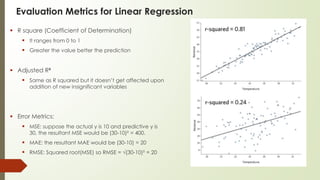

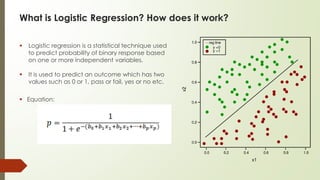

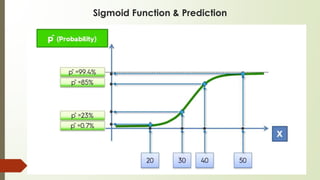

Supervised machine learning covers both regression and classification. Linear regression predicts a continuous output as a linear function of the input features; it assumes the target is linearly related to the features and that the errors are normally distributed. Logistic regression handles binary classification: it passes a linear combination of the features through the sigmoid function to produce a class probability, and is used for problems such as predicting ad clicks from user data. Linear regression is typically evaluated with R-squared, and logistic regression with metrics such as accuracy derived from a confusion matrix. Case studies demonstrate linear regression predicting ice cream revenue from temperature and logistic regression classifying whether customers click on an ad.
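As a rough illustration of the two techniques and their metrics, the sketch below fits a one-feature linear regression by least squares and a one-feature logistic regression by plain gradient descent, then computes R-squared and confusion-matrix accuracy. It assumes NumPy is available. All function names (`fit_linear`, `fit_logistic`, and so on), the learning rate, the step count, and the toy temperature, revenue, and click values are invented for this example and are not taken from the case studies.

```python
import numpy as np


def fit_linear(x, y):
    """Ordinary least squares for one feature; returns (intercept, slope)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones_like(x), x])  # column of ones for the intercept
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[0], coef[1]


def r_squared(y_true, y_pred):
    """R-squared: 1 - (residual sum of squares / total sum of squares)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


def sigmoid(z):
    """Map any real value to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic(x, y, lr=0.1, steps=500):
    """One-feature logistic regression via gradient descent on the log loss.

    lr and steps are illustrative defaults, not tuned values.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    w, b = 0.0, 0.0
    for _ in range(steps):
        p = sigmoid(w * x + b)          # predicted click probability
        w -= lr * np.mean((p - y) * x)  # gradient of mean log loss w.r.t. w
        b -= lr * np.mean(p - y)        # gradient of mean log loss w.r.t. b
    return w, b


def confusion_matrix(y_true, y_pred):
    """2x2 matrix laid out as [[TN, FP], [FN, TP]] for labels 0 and 1."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    return np.array([[tn, fp], [fn, tp]])


def accuracy(cm):
    """Fraction of correct predictions: (TN + TP) / total."""
    return np.trace(cm) / cm.sum()


# Toy data, made up for illustration only.
temperature = np.array([15.0, 20.0, 25.0, 30.0, 35.0])
revenue = np.array([310.0, 420.0, 540.0, 640.0, 760.0])
intercept, slope = fit_linear(temperature, revenue)
print("R-squared:", r_squared(revenue, intercept + slope * temperature))

feature = np.array([-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0])  # standardized
clicked = np.array([0, 0, 0, 0, 1, 1, 1, 1])
w, b = fit_logistic(feature, clicked)
predicted = (sigmoid(w * feature + b) >= 0.5).astype(int)  # threshold at 0.5
print("Accuracy:", accuracy(confusion_matrix(clicked, predicted)))
```

In practice a library such as scikit-learn would usually replace the hand-written fitting loops; they are spelled out here only to show where the sigmoid and the two metrics enter.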