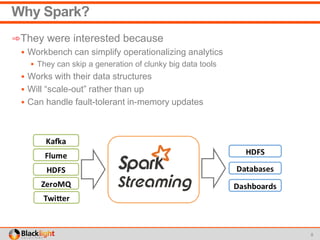

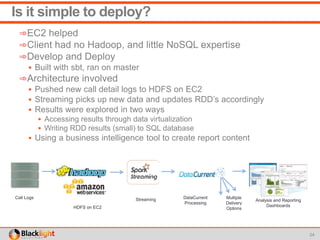

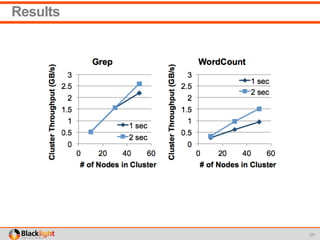

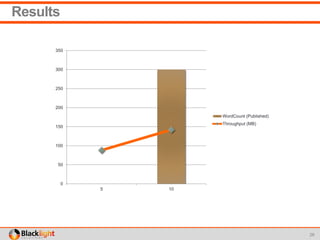

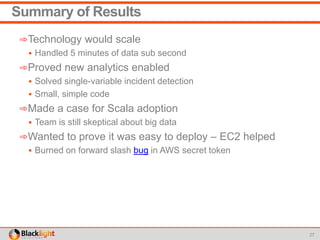

This document summarizes a proof-of-concept project that used Spark Streaming to enable new analytics for a telecommunications company processing 90 million calls per day. The company wanted to test whether Spark Streaming could scale its analytics and support techniques such as incident detection. The project showed that Spark Streaming could process five minutes of call data in under a second, successfully detected incidents using a univariate technique, and showed that deployment to AWS was relatively easy. Although the company's team remained skeptical of big data technologies, the project demonstrated Spark Streaming's capabilities.

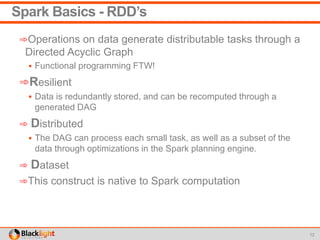

![Anatomy of a Spark Streaming Program

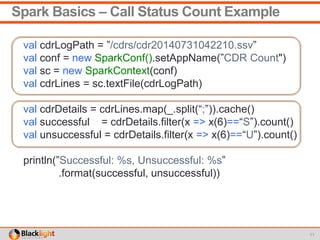

val sparkConf = new SparkConf().setAppName(“QueueStream”)

val ssc = new StreamingContext(sparkConf, Seconds(1))

val rddQueue = new SynchronizedQueue[RDD[Int]]()

val inputStream = ssc.queueStream(rddQueue)

val mappedStream = inputStream.map(x => (x % 10, 1))

val reducedStream = mappedStream.reduceByKey(_ + _)

reducedStream.print()

ssc.start()

for(i 1 to 30) {

rddQueue += ssc.sparkContext.makeRDD(1 to 1000, 10)

Thread.sleep(1000)

}

ssc.stop()

20

Utilities also available for

Twitter

Kafka

Flume

Filestream](https://image.slidesharecdn.com/sparktelcousecase-150629192740-lva1-app6891/85/Spark-Streaming-Early-Warning-Use-Case-20-320.jpg)
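To make the per-batch semantics of the QueueStream example concrete, the following plain-Scala sketch (no Spark dependency) reproduces what `map(x => (x % 10, 1))` followed by `reduceByKey(_ + _)` computes for a single micro-batch. The object and method names are hypothetical, and it assumes, as in the example, that a batch holds the integers 1 to 1000.

```scala
// Hypothetical plain-Scala model of one micro-batch of the QueueStream example.
// It runs without Spark and only mimics the per-batch map/reduceByKey logic.
object QueueStreamBatchSketch {

  // Mirrors mappedStream: pair each element with its last digit and a count of 1.
  def mapToPairs(batch: Seq[Int]): Seq[(Int, Int)] =
    batch.map(x => (x % 10, 1))

  // Mirrors reducedStream: sum the counts for each key within a single batch.
  def reduceByKey(pairs: Seq[(Int, Int)]): Map[Int, Int] =
    pairs.groupBy(_._1).map { case (k, kvs) => k -> kvs.map(_._2).sum }

  // One micro-batch: map to (digit, 1) pairs, then reduce per key.
  def processBatch(batch: Seq[Int]): Map[Int, Int] =
    reduceByKey(mapToPairs(batch))
}
```

For example, `QueueStreamBatchSketch.processBatch(1 to 1000)` yields a count of 100 for each digit 0 to 9, which corresponds to what `reducedStream.print()` would show for a batch that receives one of the pushed RDDs.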

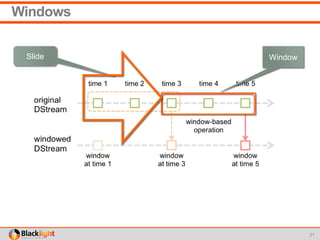

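![Streaming Call Analysis with Windows](https://image.slidesharecdn.com/sparktelcousecase-150629192740-lva1-app6891/85/Spark-Streaming-Early-Warning-Use-Case-22-320.jpg)

```scala
import org.apache.spark.SparkConf
import org.apache.spark.streaming.{Seconds, StreamingContext}

// `iteration`, `window`, and `slide` (in seconds) and the helpers
// `extractCallDetailRecord` and `detectIncidents` are not shown on the slide.
val path = "/Users/chance/Documents/cdrdrop"

val conf = new SparkConf()
  .setMaster("local[12]")
  .setAppName("CDRIncidentDetection")
  .set("spark.executor.memory", "8g")

// Batch interval of `iteration` seconds; new files dropped into `path` form each batch.
val ssc = new StreamingContext(conf, Seconds(iteration))
val callStream = ssc.textFileStream(path)

// Sliding window over the raw lines; each call detail record (CDR) is ";"-delimited.
// The window and slide durations must be multiples of the batch interval.
val cdr = callStream.window(Seconds(window), Seconds(slide)).map(_.split(";"))

// Keep only records with more than 136 fields, then parse them.
val cdrArr = cdr.filter(c => c.length > 136)
  .map(c => extractCallDetailRecord(c))

val result = detectIncidents(cdrArr)

// Print the first 10 results of each window (foreachRDD replaces the deprecated foreach).
result.foreachRDD(rdd => rdd.take(10)
  .foreach { case (x, (d, high, low, res)) =>
    println(x + "," + high + "," + d + "," + low + "," + res) })

ssc.start()
ssc.awaitTermination()
```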
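The `window(Seconds(window), Seconds(slide))` call groups the most recent batches into overlapping windows. The following plain-Scala sketch is a simplified model of those semantics, not Spark's implementation: it assumes durations in whole seconds, treats each batch as a list of records, and ignores Spark's alignment of windows to the stream's start time. The names `WindowSketch`, `validate`, and `windows` are hypothetical.

```scala
// Hypothetical, simplified model of DStream.window(windowDuration, slideDuration).
// Durations are whole seconds; each batch is a list of records, oldest first.
object WindowSketch {

  // Spark Streaming requires both durations to be multiples of the batch interval.
  def validate(batchSec: Int, windowSec: Int, slideSec: Int): Unit = {
    require(batchSec > 0 && windowSec > 0 && slideSec > 0, "durations must be positive")
    require(windowSec % batchSec == 0, "window must be a multiple of the batch interval")
    require(slideSec % batchSec == 0, "slide must be a multiple of the batch interval")
  }

  // Returns the contents of each emitted window: one window every `slideSec`
  // seconds, each covering up to the last `windowSec` seconds of batches.
  def windows[A](batches: Seq[Seq[A]], batchSec: Int, windowSec: Int, slideSec: Int): Seq[Seq[A]] = {
    validate(batchSec, windowSec, slideSec)
    val batchesPerWindow = windowSec / batchSec
    val batchesPerSlide = slideSec / batchSec
    // `end` is the number of batches received when a window is emitted.
    (batchesPerSlide to batches.length by batchesPerSlide).map { end =>
      batches.slice(math.max(0, end - batchesPerWindow), end).flatten
    }
  }
}
```

With a 1-second batch, a 3-second window, and a 2-second slide, the four batches `Seq(Seq(1), Seq(2), Seq(3), Seq(4))` produce two windows, `Seq(1, 2)` and `Seq(2, 3, 4)`. Consecutive windows overlap by one batch because the window is longer than the slide, so a record can contribute to more than one incident check.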

![Can we enable new analytics?](https://image.slidesharecdn.com/sparktelcousecase-150629192740-lva1-app6891/85/Spark-Streaming-Early-Warning-Use-Case-23-320.jpg)

- Incident detection
  - Chose a univariate technique[1] to detect behavior that is out of profile relative to recent events
  - The technique identifies:
    - out-of-profile events
    - dramatic shifts in the profile
  - Easy to understand

(Diagram labeled "Recent Window".)
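The exact univariate technique is not spelled out here. As one common example, the following hypothetical sketch flags a value as out of profile when it falls outside a mean ± k·σ band computed from the recent window for the same key (for instance, a per-cell call count). Recomputing the band on each window lets it follow gradual change, while a dramatic shift shows up as a run of flagged values. The names, the `k = 3.0` default, and the choice of this band are assumptions, not the project's method; the `Verdict` fields are chosen to resemble the `(d, high, low, res)` values printed in the windowed example, but that correspondence is a guess.

```scala
// Hypothetical univariate profile check using a mean ± k·σ band.
// This is an assumed technique, not necessarily the one used in the project.
object ProfileCheck {

  final case class Verdict(value: Double, high: Double, low: Double, outOfProfile: Boolean)

  def mean(xs: Seq[Double]): Double = xs.sum / xs.size

  // Population standard deviation of the recent window.
  def stdDev(xs: Seq[Double]): Double = {
    val m = mean(xs)
    math.sqrt(xs.map(x => (x - m) * (x - m)).sum / xs.size)
  }

  // k = 3.0 ("three sigma") is an assumed default; tune it for the data.
  def check(recent: Seq[Double], value: Double, k: Double = 3.0): Verdict = {
    require(recent.nonEmpty, "need at least one recent observation")
    val m = mean(recent)
    val s = stdDev(recent)
    val high = m + k * s
    val low = m - k * s
    Verdict(value, high, low, value > high || value < low)
  }

  // Applies the check per key (e.g. per cell), skipping keys with no recent history.
  def checkAll(history: Map[String, Seq[Double]], latest: Map[String, Double],
               k: Double = 3.0): Map[String, Verdict] =
    latest.collect { case (key, v) if history.get(key).exists(_.nonEmpty) =>
      key -> check(history(key), v, k)
    }
}
```

For a recent window of `10, 12, 11, 9, 10, 12, 11, 9` (mean 10.5, σ ≈ 1.12), the band with k = 3 runs from roughly 7.15 to 13.85, so a new value of 30 is flagged and a value of 11 is not.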

![References](https://image.slidesharecdn.com/sparktelcousecase-150629192740-lva1-app6891/85/Spark-Streaming-Early-Warning-Use-Case-29-320.jpg)

- [1] Zaharia et al.: Discretized Streams
- [2] Zaharia et al.: Discretized Streams: Fault-Tolerant Streaming
- [3] Das: Spark Streaming – Real-time Big-Data Processing
- [4] Spark Streaming Programming Guide
- [5] Running Spark on EC2
- [6] Spark on EMR
- [7] Ahelegby: Time Series Outliers