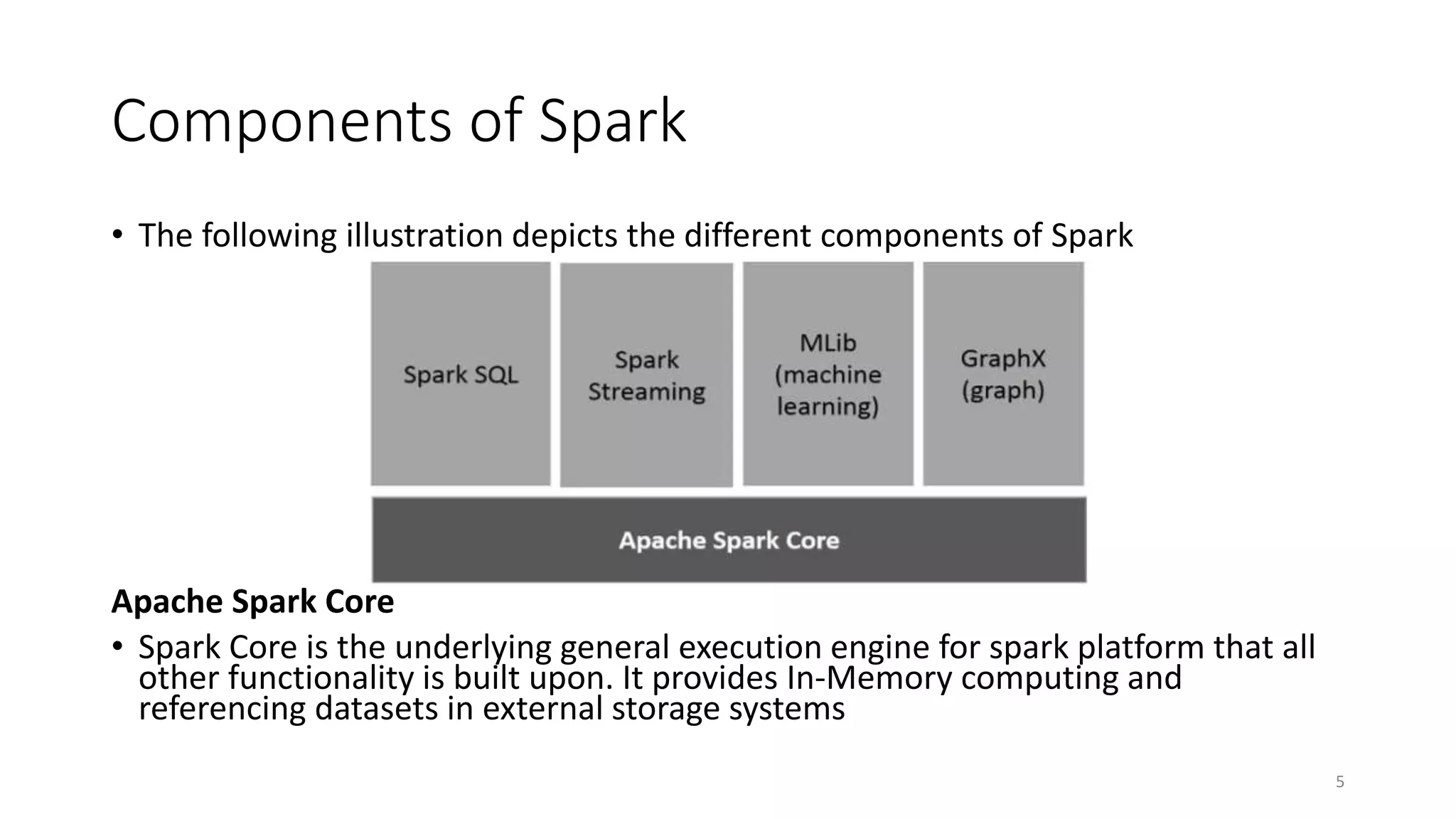

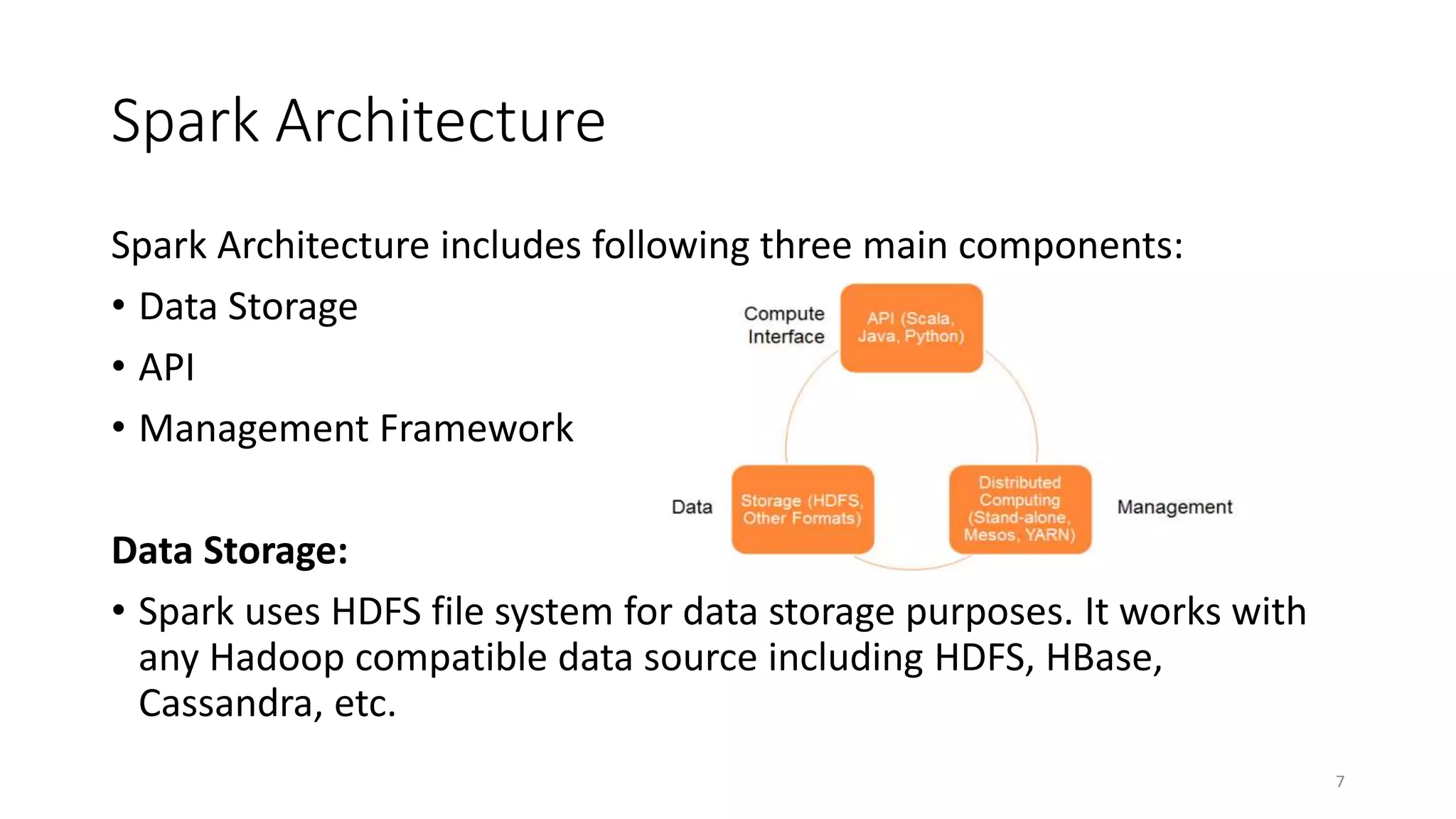

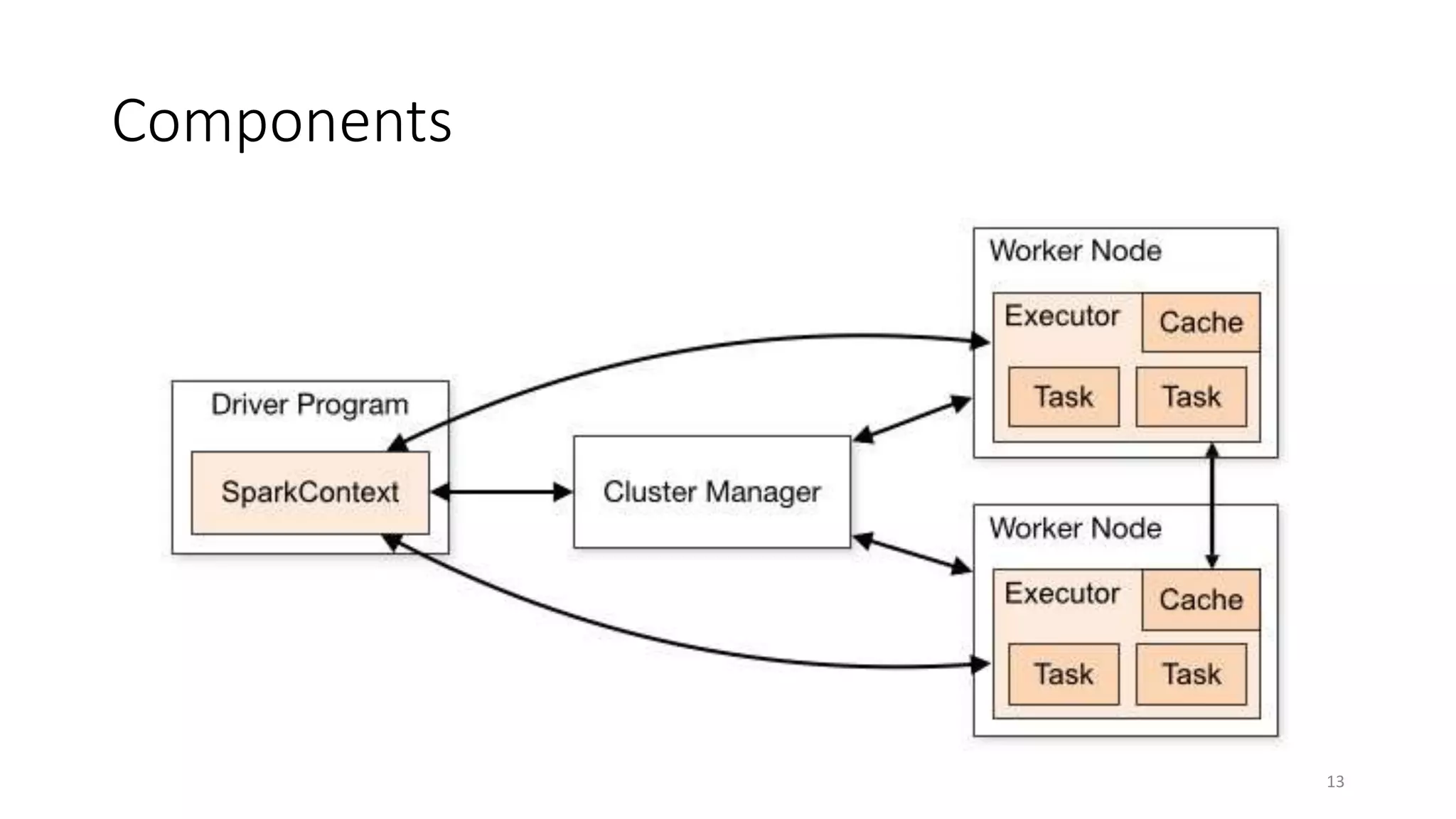

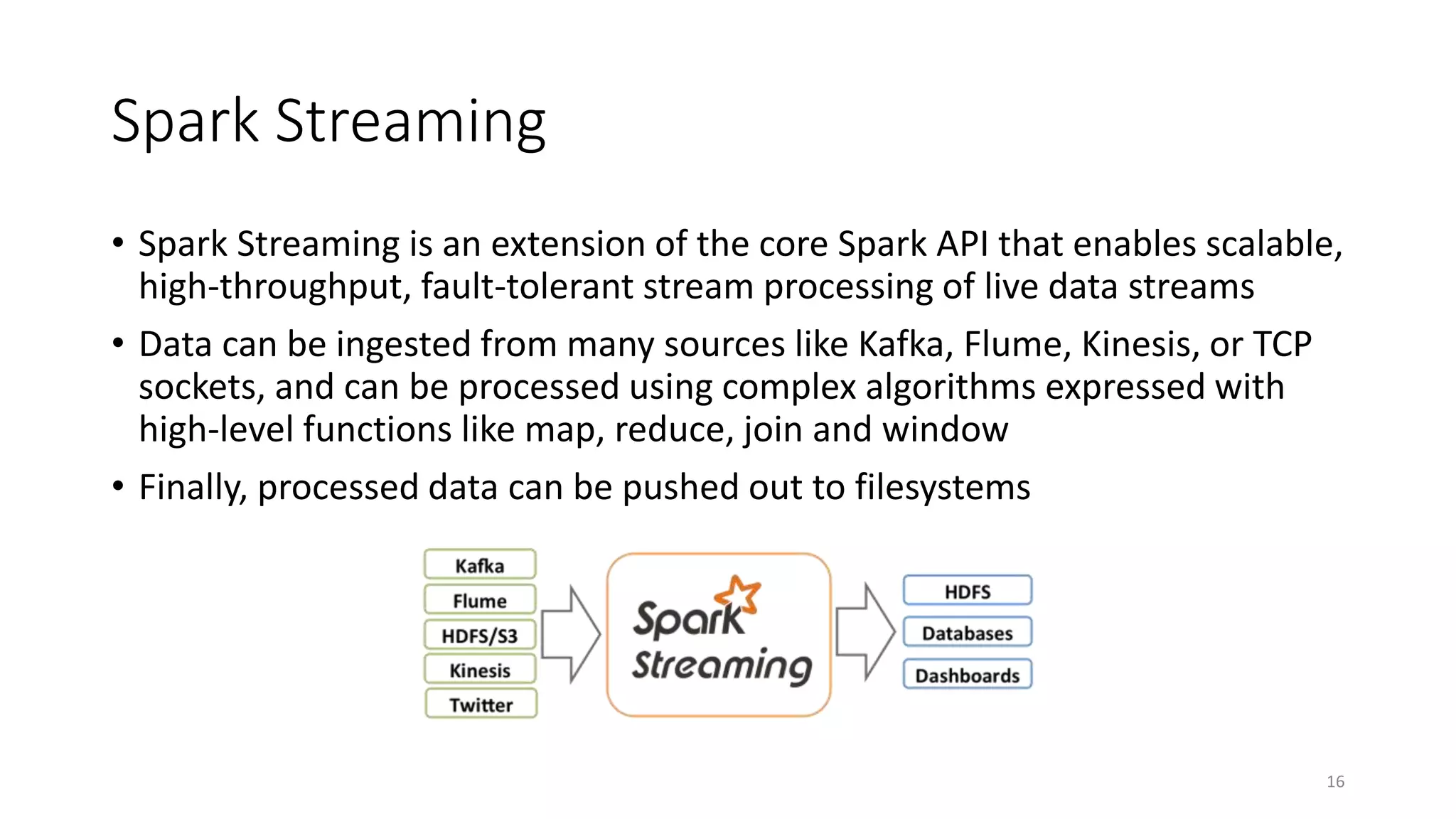

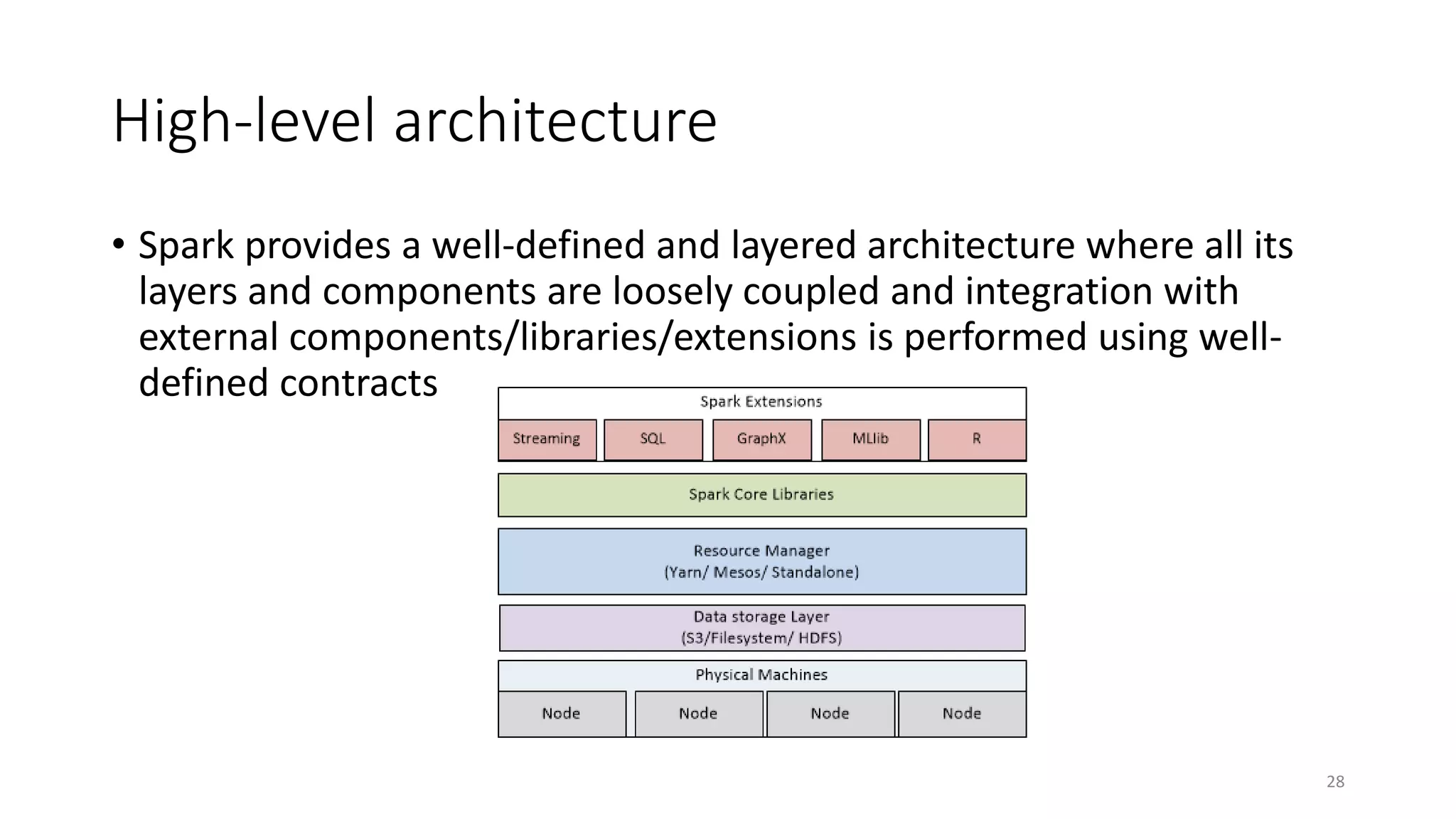

Apache Spark is a fast, general-purpose cluster computing system that processes large datasets in parallel across the machines of a cluster. It can be used for batch processing, stream processing, and interactive queries. Spark improves on Hadoop MapReduce by keeping intermediate results in memory rather than writing them to disk between steps, which makes it considerably faster for iterative and interactive workloads. It provides APIs for Java, Scala, Python, and R, along with libraries for SQL queries, streaming, machine learning, and graph processing.
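The parallel model described above can be illustrated with a small sketch in plain Python. This is not Spark's API: the function names (`split_into_partitions`, `count_words`, `parallel_word_count`) are hypothetical, and a local thread pool stands in for the cluster's workers. It only shows the general shape of the idea, assuming a word-count task: split the data into partitions, process each partition independently, keep the partial results in memory, and merge them.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(records, num_partitions):
    """Distribute records round-robin into num_partitions lists."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def count_words(partition):
    """Per-partition step: count the words in one partition only."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def parallel_word_count(lines, num_partitions=4):
    """Count words per partition concurrently, then merge the partial counts."""
    partitions = split_into_partitions(lines, num_partitions)
    # The thread pool is a stand-in for cluster workers (an assumption of
    # this sketch); each worker handles one partition independently.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    # Merge step: the partial results stay in memory and are combined here.
    total = Counter()
    for partial in partial_counts:
        total.update(partial)
    return total


if __name__ == "__main__":
    sample = [
        "spark runs in memory",
        "spark runs on clusters",
        "memory is fast",
    ]
    print(parallel_word_count(sample, num_partitions=2).most_common(3))
```

Threads in CPython do not give real CPU parallelism, so the sketch shows only the structure of the computation. In Spark, the partitions would live on different machines and the framework would handle scheduling, data movement, and recovery from failures.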