This document covers the following aspects of software testing:

- Definitions of testing and its purpose, as given by software experts.

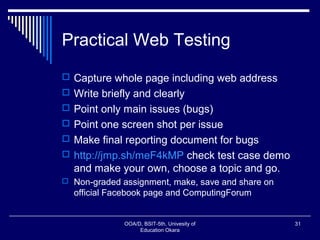

- Good testing practices, such as focusing on error detection, avoiding non-reproducible tests, and thoroughly inspecting results.

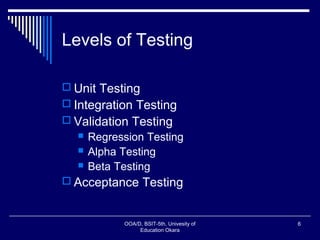

- The levels of testing, from unit testing to acceptance testing.

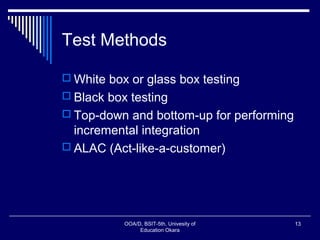

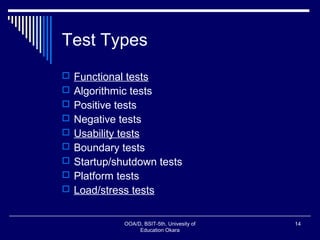

- Testing methods and types, such as white-box, black-box, functional, and load testing.

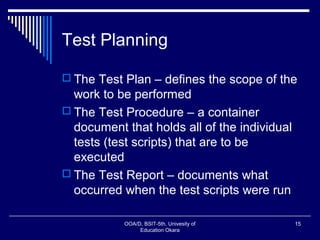

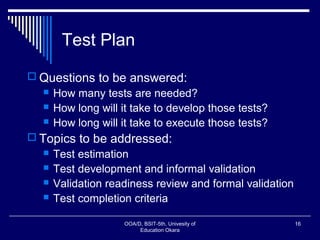

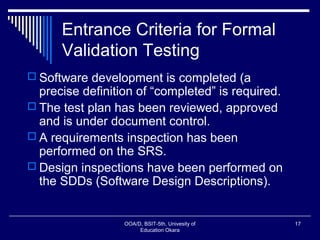

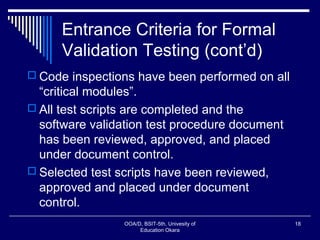

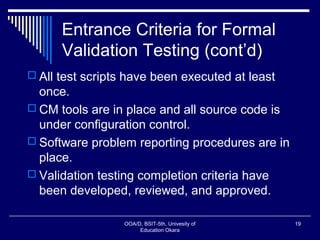

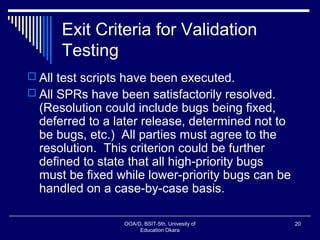

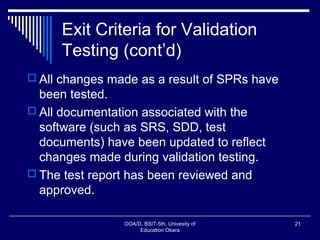

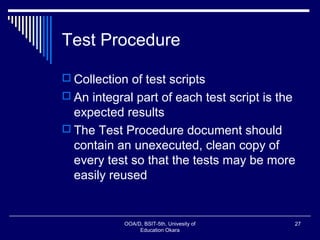

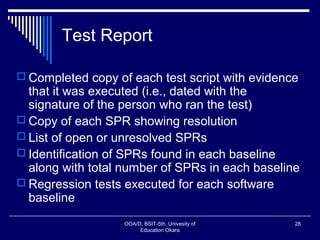

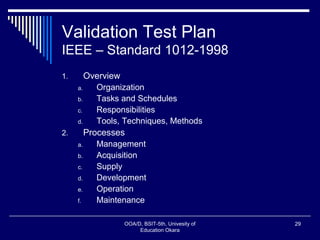

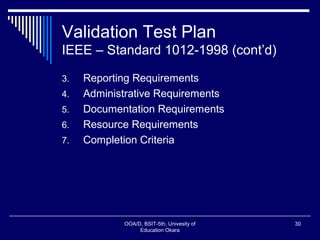

- The importance of planning tests and documenting them in test plans, test procedures, and test reports.

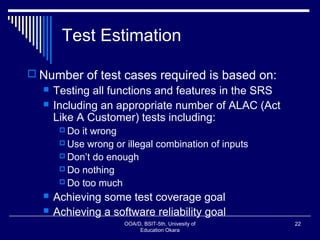

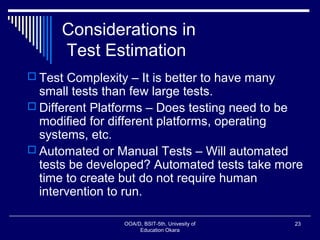

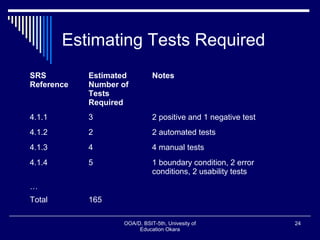

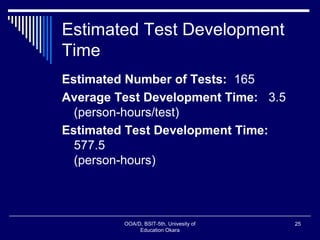

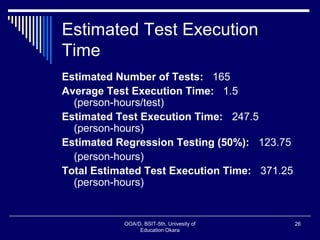

- Estimating the number of tests needed and the time required to develop and execute them.
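
The estimation named in the last item can be illustrated with simple arithmetic. The sketch below is not the method used in the document, which this summary does not spell out; it assumes that the number of tests scales with the number of requirements and that each test has a roughly constant development and execution cost. The names `EffortEstimate` and `estimate_effort`, their parameters, and all figures in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EffortEstimate:
    """Result of a rough test-effort estimate (hypothetical structure)."""
    num_tests: int
    dev_hours: float
    exec_hours: float

    @property
    def total_hours(self) -> float:
        # Total effort is development time plus execution time.
        return self.dev_hours + self.exec_hours


def estimate_effort(
    num_requirements: int,
    tests_per_requirement: int,
    dev_minutes_per_test: float,
    exec_minutes_per_test: float,
    planned_runs: int = 1,
) -> EffortEstimate:
    """Estimate test count and effort under a constant-cost assumption.

    Assumption (not from the source): every requirement needs the same
    number of tests, and every test costs the same to write and to run.
    """
    num_tests = num_requirements * tests_per_requirement
    # Each test is developed once.
    dev_hours = num_tests * dev_minutes_per_test / 60
    # Each test is executed once per planned run (e.g. per release candidate).
    exec_hours = num_tests * exec_minutes_per_test * planned_runs / 60
    return EffortEstimate(num_tests, dev_hours, exec_hours)


if __name__ == "__main__":
    # Illustrative figures only: 40 requirements, 3 tests each,
    # 30 minutes to write a test, 5 minutes to run it, 2 planned runs.
    estimate = estimate_effort(40, 3, 30, 5, planned_runs=2)
    print(estimate.num_tests, estimate.dev_hours, estimate.exec_hours)
```

In practice the per-test costs would come from records of earlier projects, and the result is only as good as those inputs; the point of the sketch is that development effort is paid once per test while execution effort is paid once per run.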