The document summarizes key principles of software testing, including:

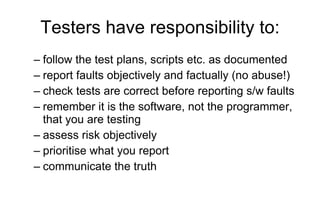

1. Testing is necessary because human error inevitably introduces faults into software, and the failures those faults cause can be costly.

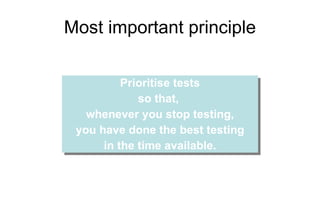

2. Exhaustive testing of all possible test cases is impractical, so risk-based prioritization is used to test the most important cases first.

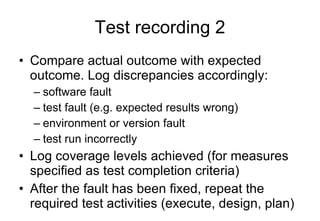

3. The test process consists of planning, specification, execution, recording of results, and checking of completion criteria. Test cases are prioritized so that the most effective ones run first and faults are found efficiently.
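
Risk-based prioritization from the list above can be illustrated with a minimal Python sketch. It is not taken from the summarized document: the `Case` class, the 1 to 5 scoring scale, the "likelihood times impact" risk formula, and the example test names are all assumptions chosen for illustration. The sketch also shows why exhaustive testing is impractical, using a hypothetical function that takes two 32-bit integer arguments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """A hypothetical test case with illustrative risk scores."""
    name: str
    likelihood: int  # assumed scale: 1 (unlikely to fail) to 5 (likely to fail)
    impact: int      # assumed scale: 1 (minor consequence) to 5 (severe)

    @property
    def risk(self) -> int:
        # One common simple scoring rule: risk = likelihood * impact.
        return self.likelihood * self.impact


def prioritize(cases):
    """Return the cases ordered from highest to lowest risk score."""
    return sorted(cases, key=lambda c: c.risk, reverse=True)


def select_within_budget(cases, budget):
    """Keep only the `budget` highest-risk cases when time is limited."""
    return prioritize(cases)[:budget]


# Exhaustive testing: a function of two 32-bit integers has 2**64
# distinct input pairs, far too many to run one by one.
exhaustive_inputs = 2 ** 32 * 2 ** 32

cases = [
    Case("login_rejects_bad_password", likelihood=2, impact=5),
    Case("report_footer_alignment", likelihood=3, impact=1),
    Case("payment_rounding", likelihood=4, impact=5),
    Case("profile_avatar_upload", likelihood=3, impact=2),
]

ordered = [c.name for c in prioritize(cases)]
top_two = [c.name for c in select_within_budget(cases, budget=2)]

if __name__ == "__main__":
    print(ordered)
    print(top_two)
```

With these made-up scores, the payment and login cases run first because a failure there would be the most costly, while the cosmetic footer check runs last. In practice the scores would come from the planning and specification steps rather than being hard-coded.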