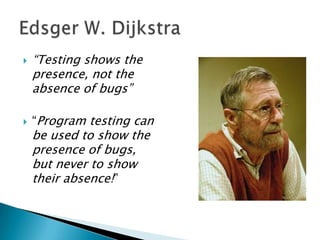

Edsger W. Dijkstra was a Dutch computer scientist, born in Rotterdam in 1930, who received the 1972 Turing Award. The software testing principles discussed alongside his work include his observation that testing shows the presence of bugs but not their absence, as well as the principles that exhaustive testing is impossible, that early testing is important, and that defects often cluster in small areas of code. The principles also stress risk analysis, clear test objectives, and regularly updating test cases so that new issues are found instead of the same cases being rerun. Finally, testing approaches must be tailored to context: a safety-critical system is tested differently from an e-commerce site.
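
The claim that exhaustive testing is impossible can be made concrete with a little arithmetic. The sketch below is only an illustration: the function name `exhaustive_test_years`, the choice of two 32-bit inputs, and the rate of one billion tests per second are all assumptions, not figures from the source.

```python
def exhaustive_test_years(bits_per_input: int, num_inputs: int,
                          tests_per_second: float) -> float:
    """Estimate the years needed to try every input combination once.

    Hypothetical helper for illustration: it assumes every input is an
    independent fixed-width integer and every test takes the same time.
    """
    # Each input has 2**bits_per_input values; combinations multiply.
    total_cases = 2 ** (bits_per_input * num_inputs)
    seconds = total_cases / tests_per_second
    seconds_per_year = 365.25 * 24 * 3600
    return seconds / seconds_per_year


# A function taking two 32-bit integers, tested at an assumed
# rate of one billion cases per second.
years = exhaustive_test_years(bits_per_input=32, num_inputs=2,
                              tests_per_second=1e9)
print(f"about {years:.0f} years")
```

Even under these generous assumptions the run would take centuries, which is why test effort is directed by risk analysis and test objectives instead of by enumerating every input.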