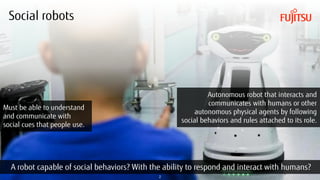

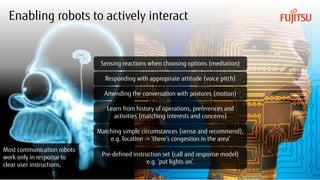

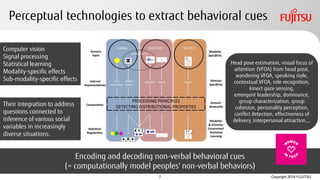

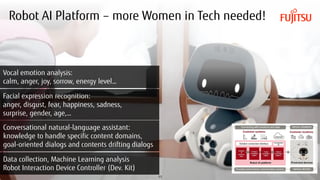

This document discusses social robots and their ability to interact with humans. It describes how social robots can recognize social cues, communicate through speech and gestures, and express emotions. It also cites experiments in which elderly residents who interacted with robots became more talkative and smiled more. Finally, it discusses the technologies social robots need in order to take an active part in conversations, imitate human behaviors, and apply machine learning to tasks such as emotion recognition.