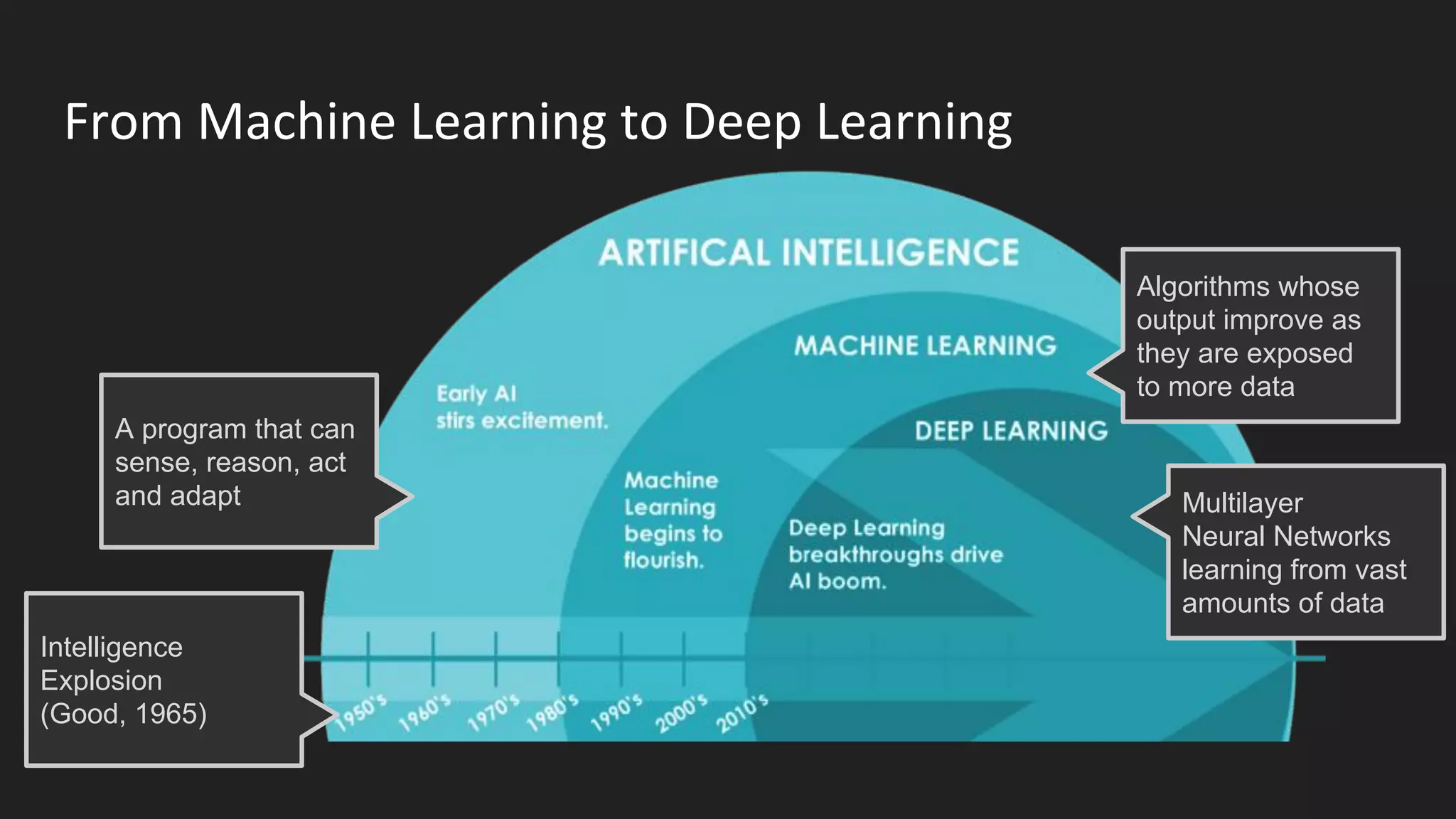

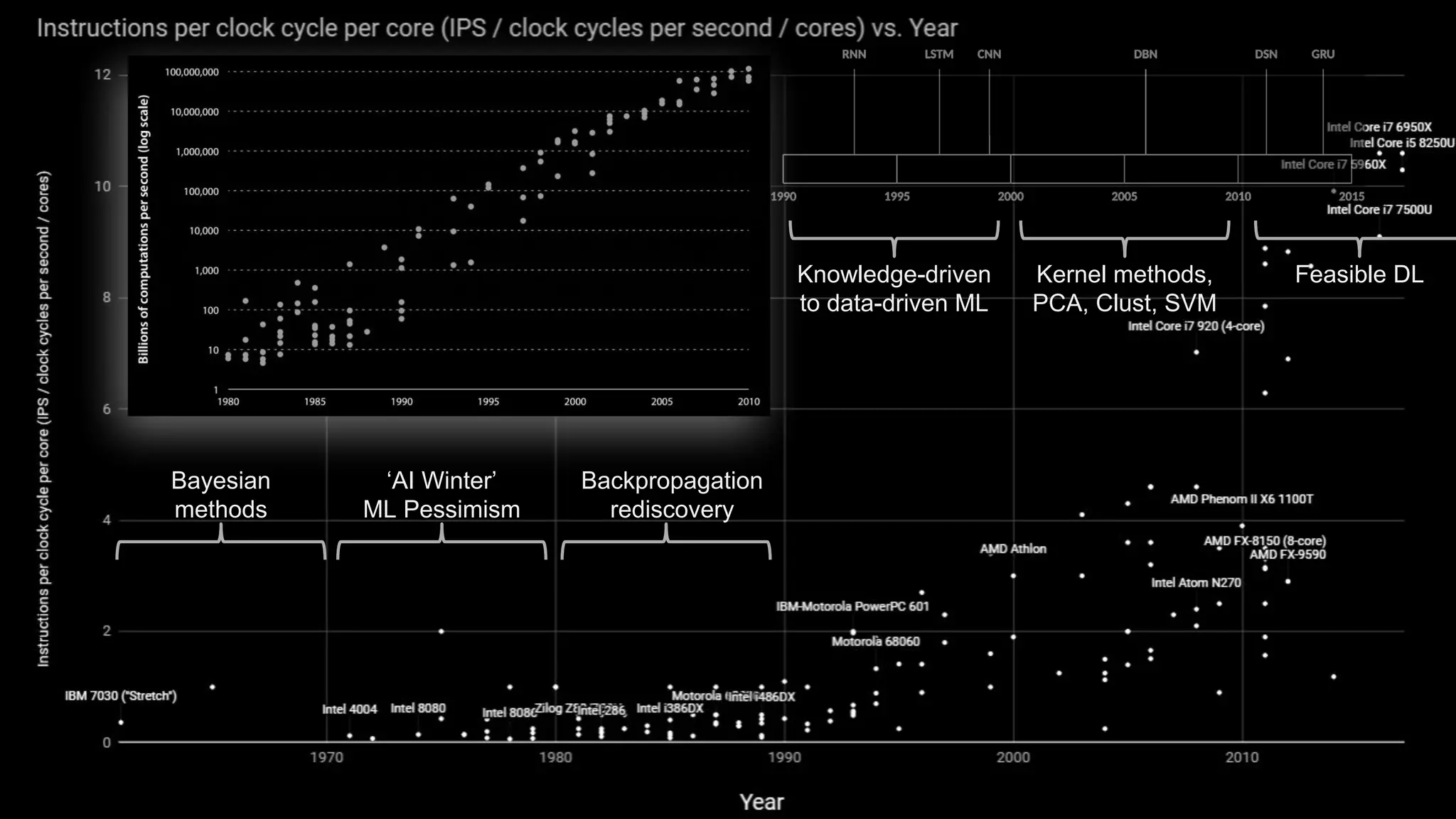

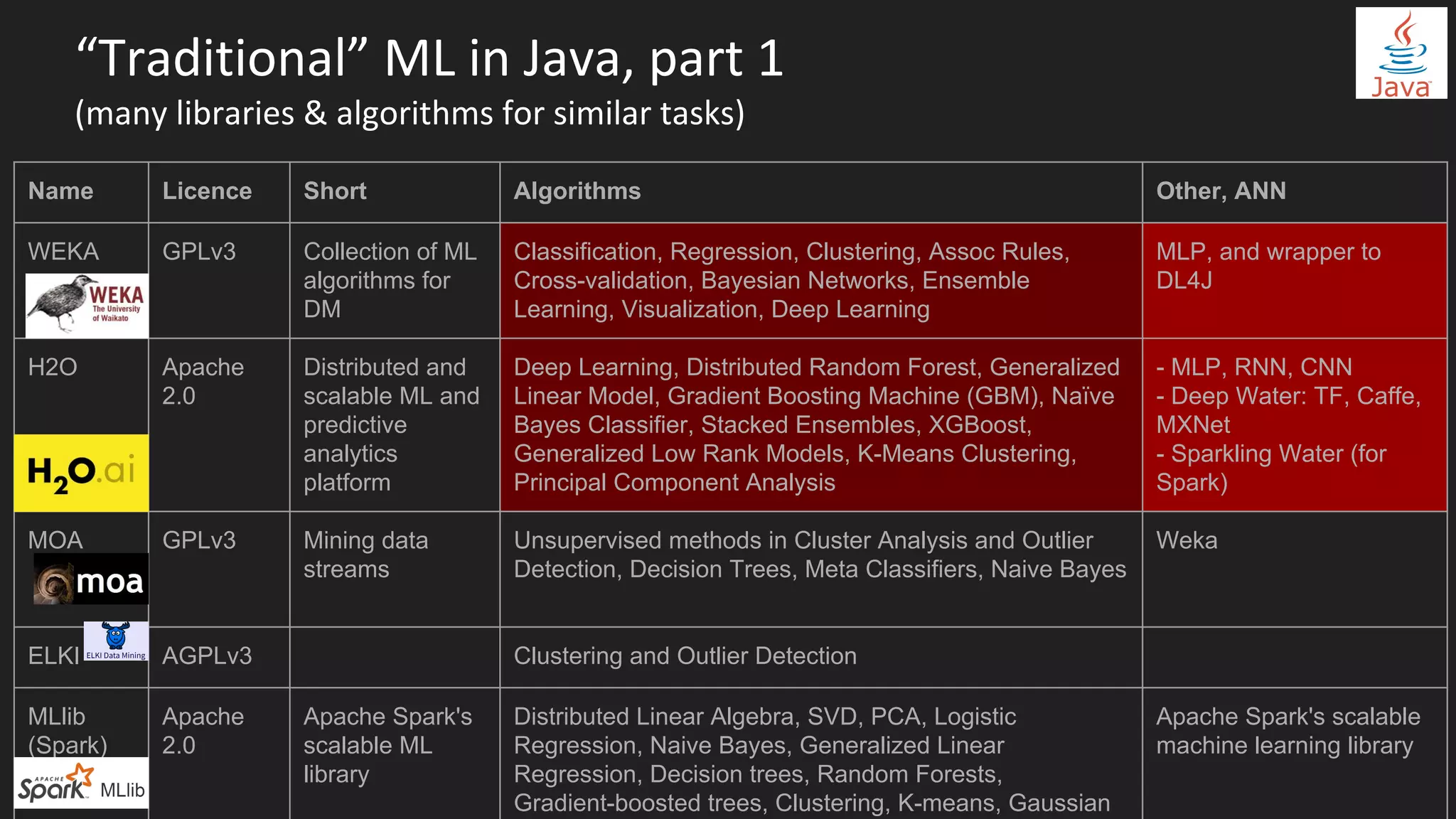

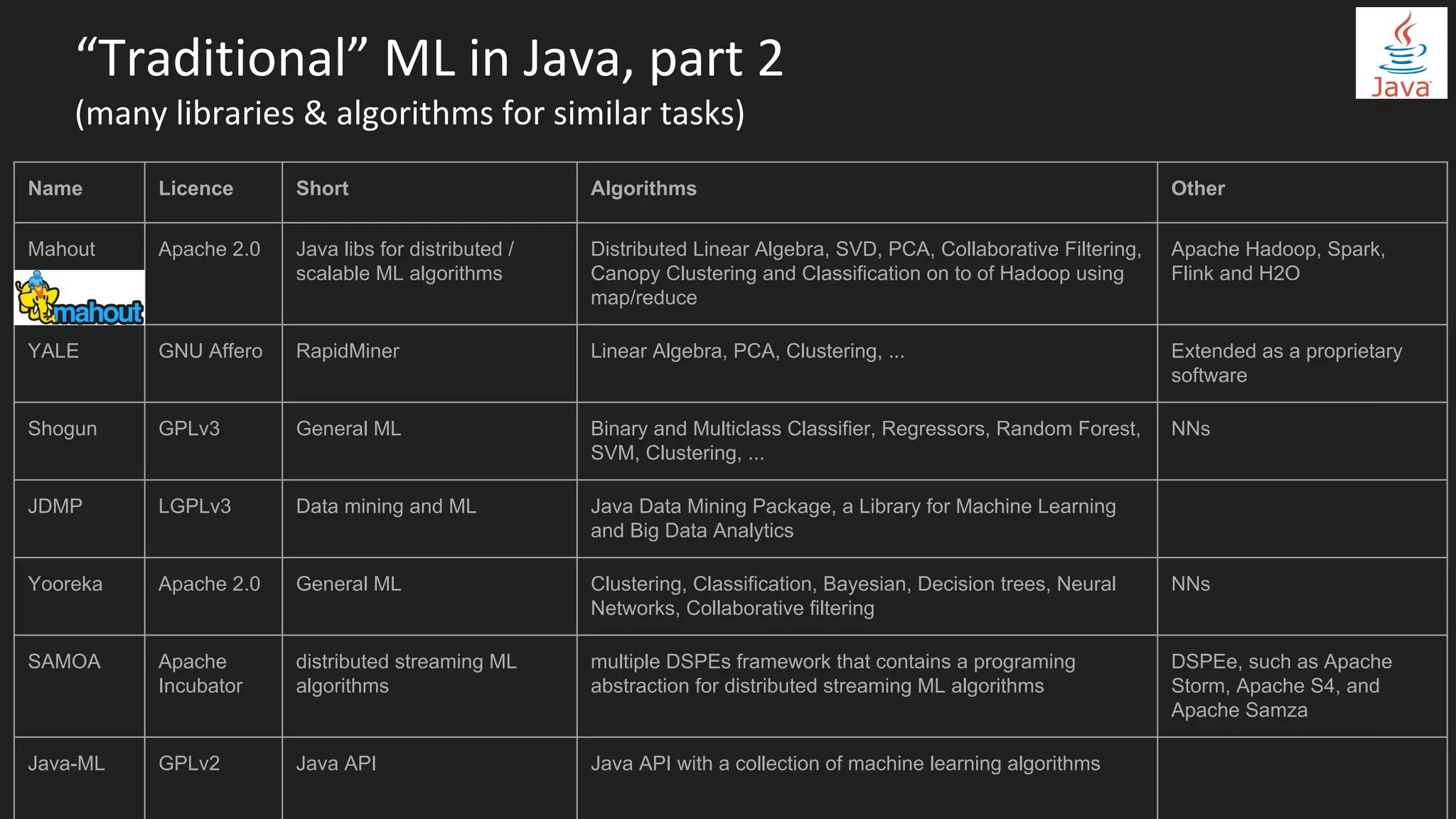

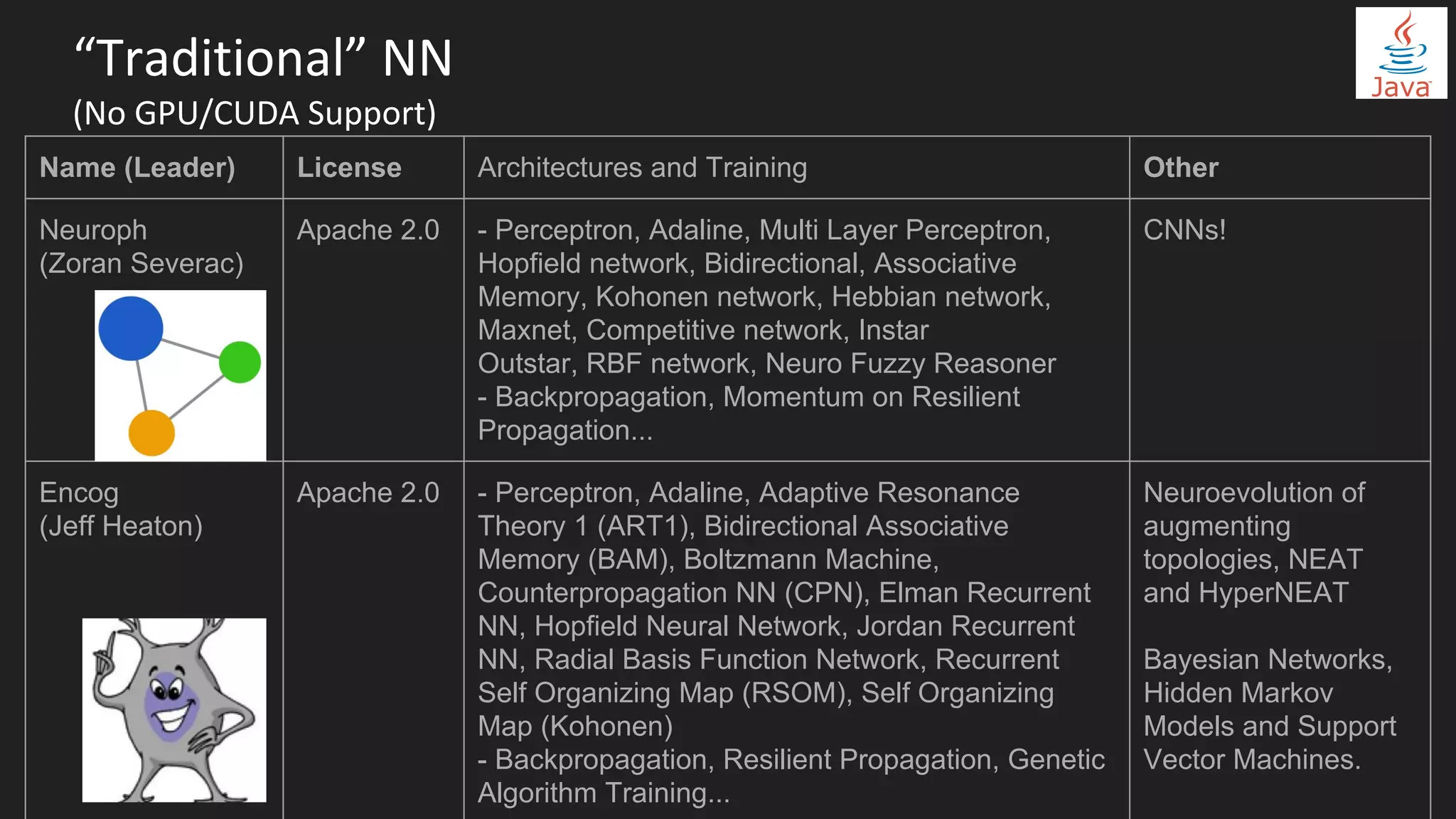

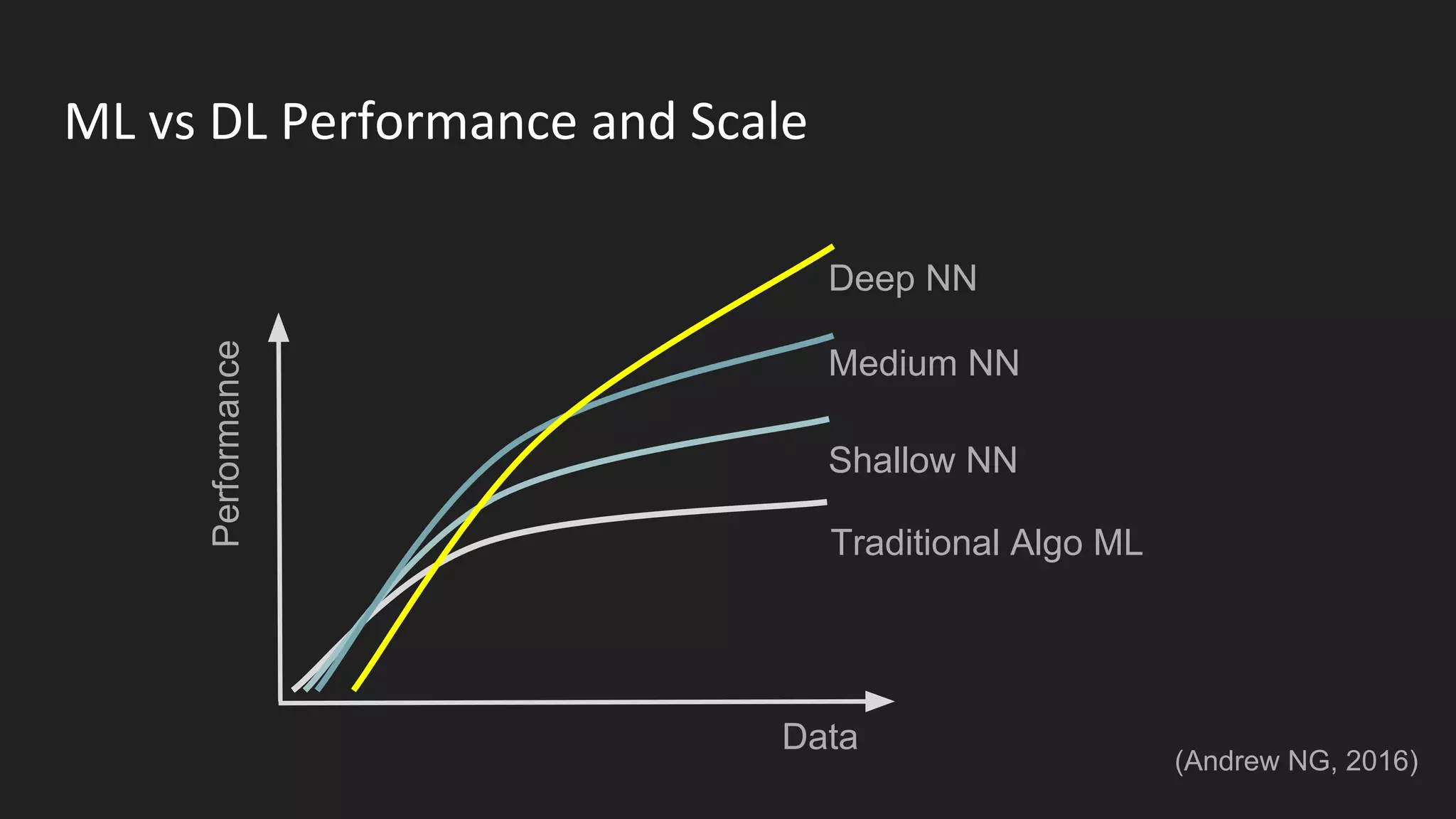

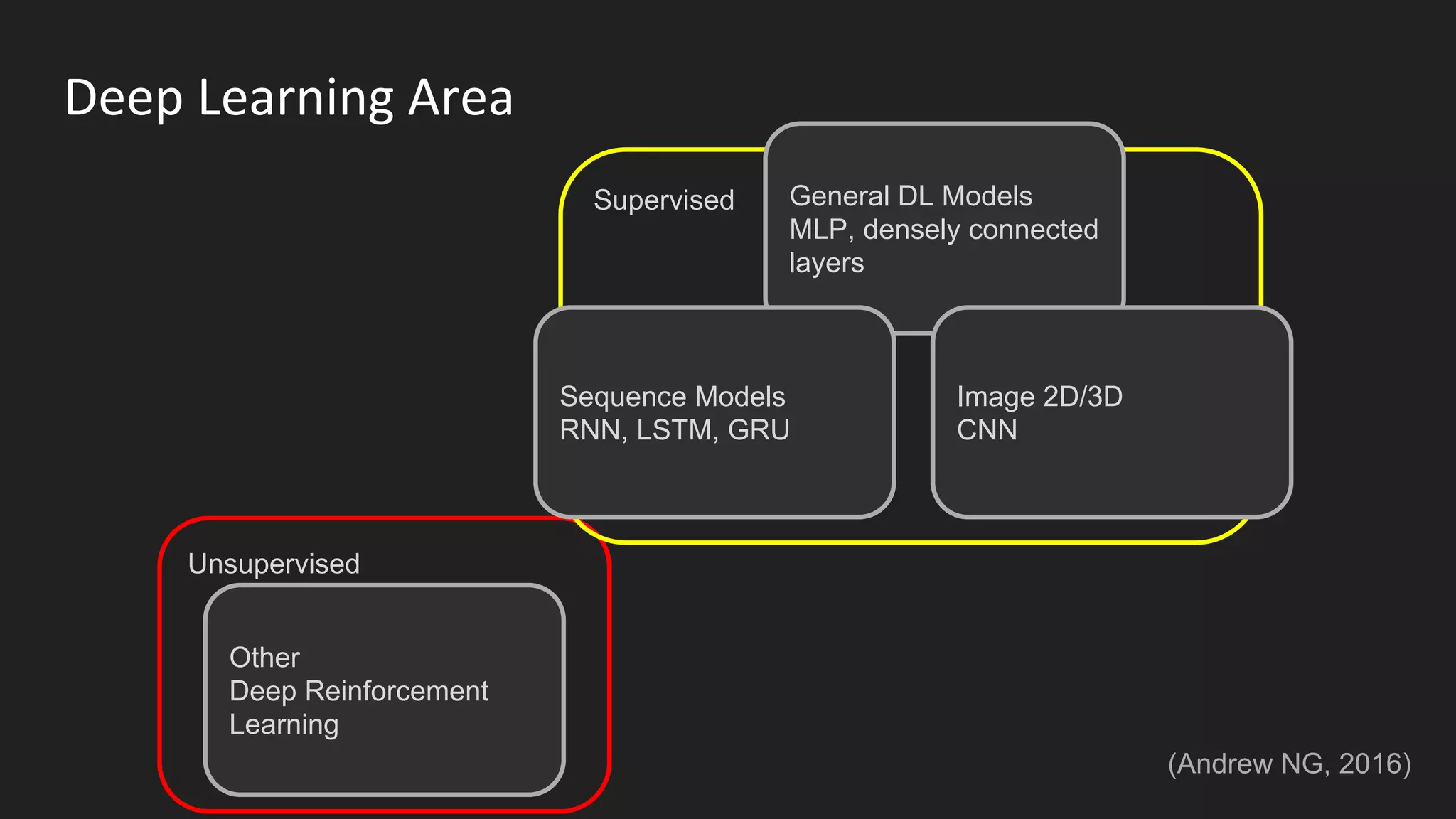

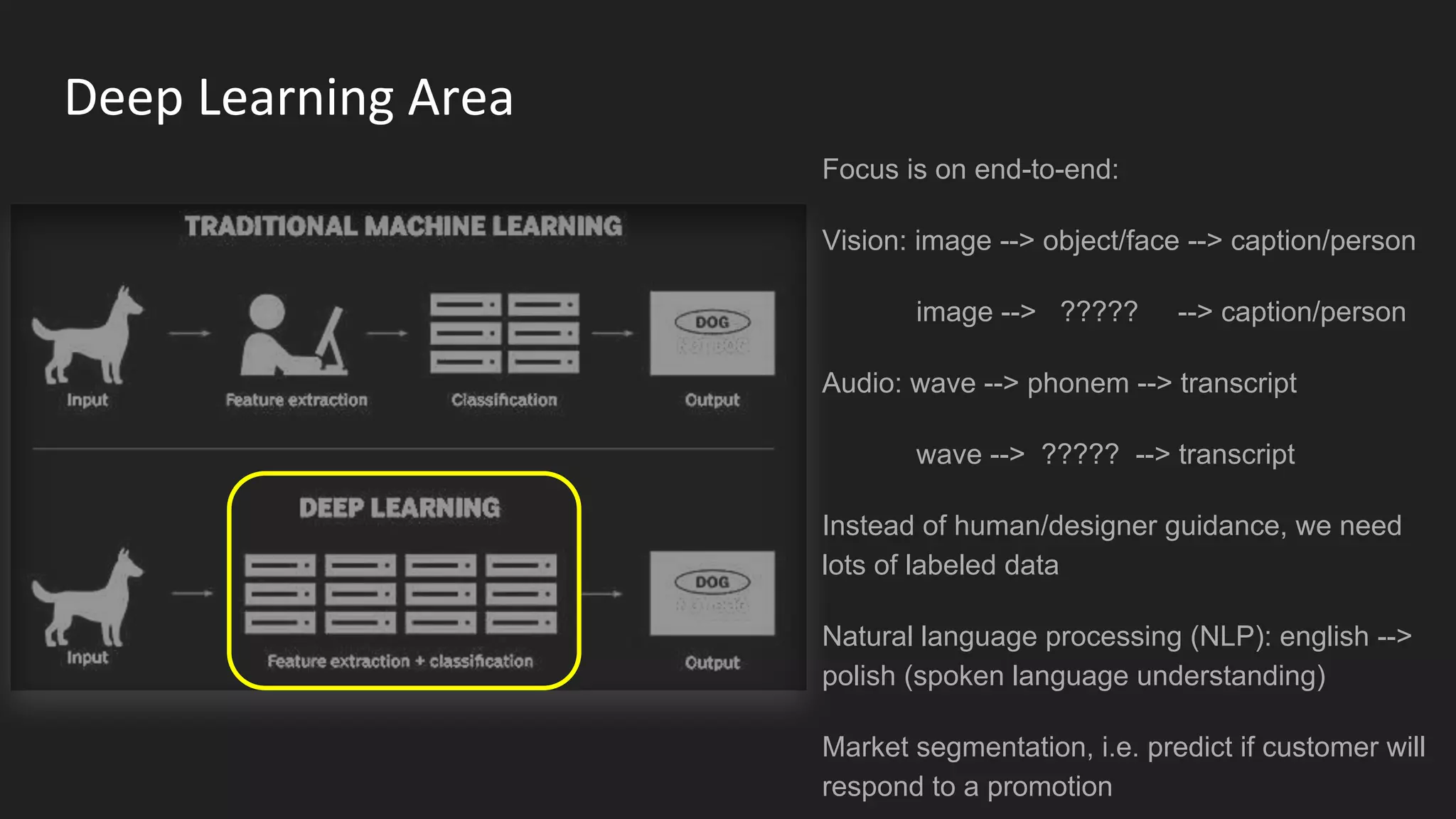

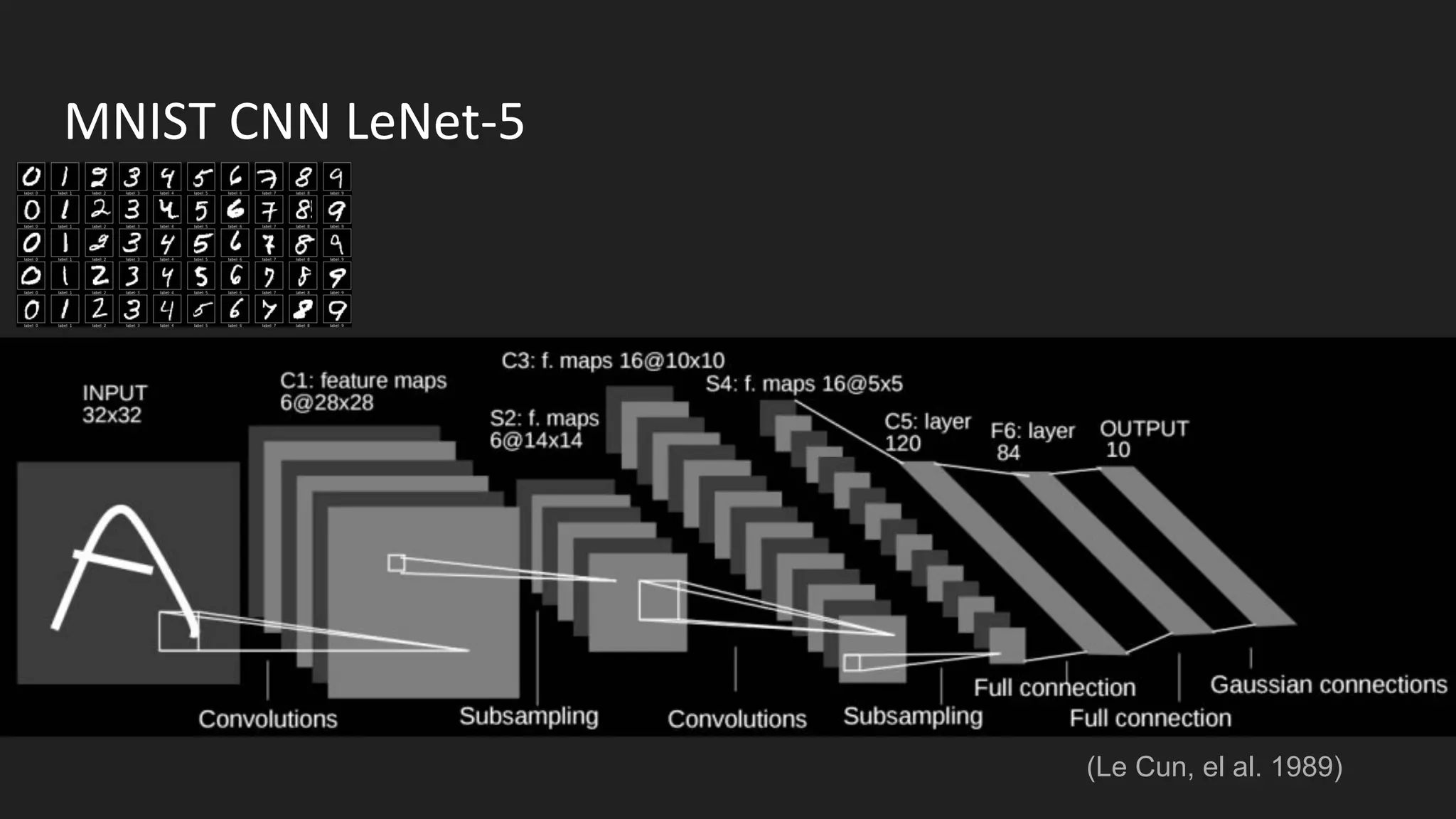

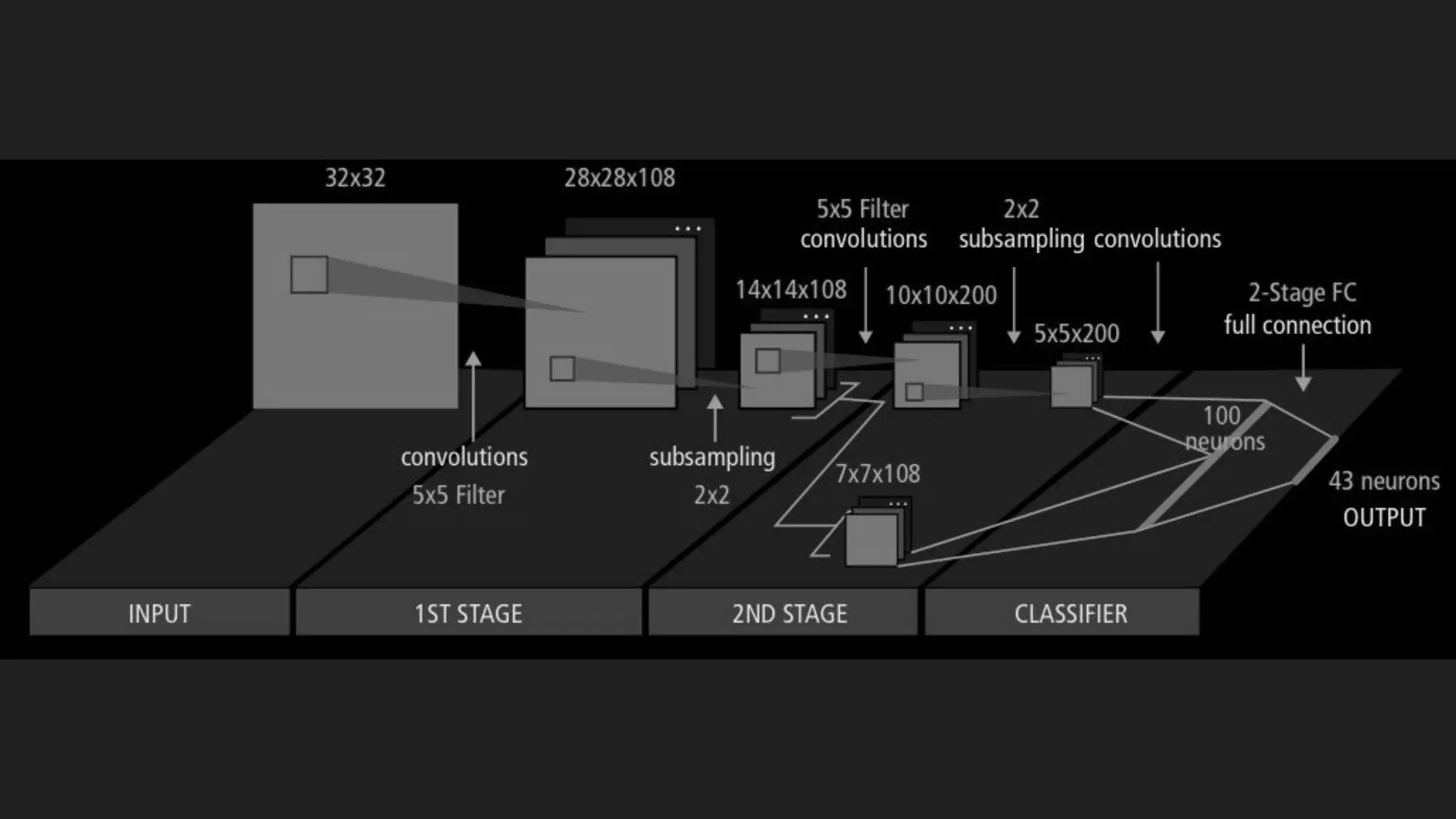

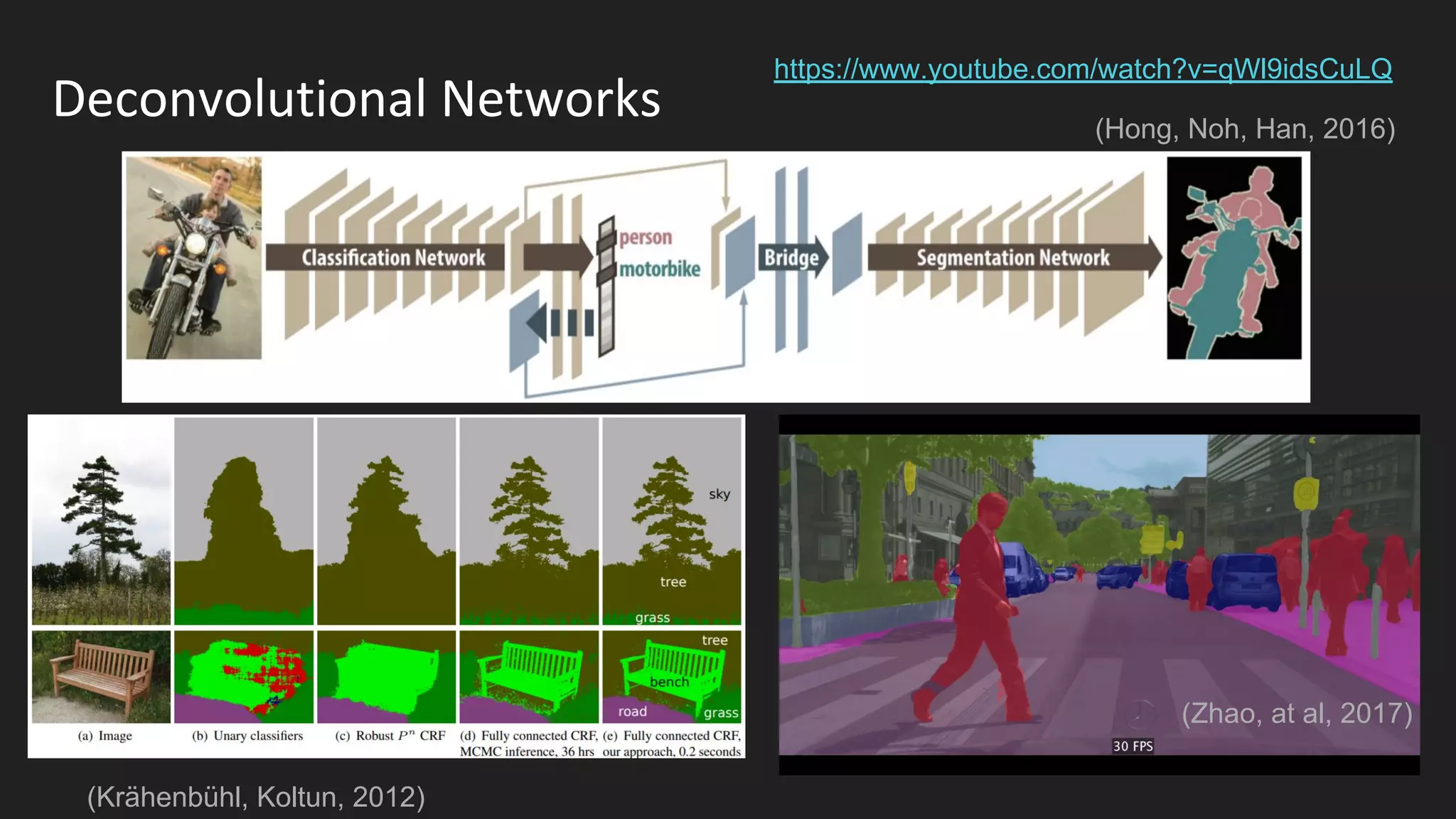

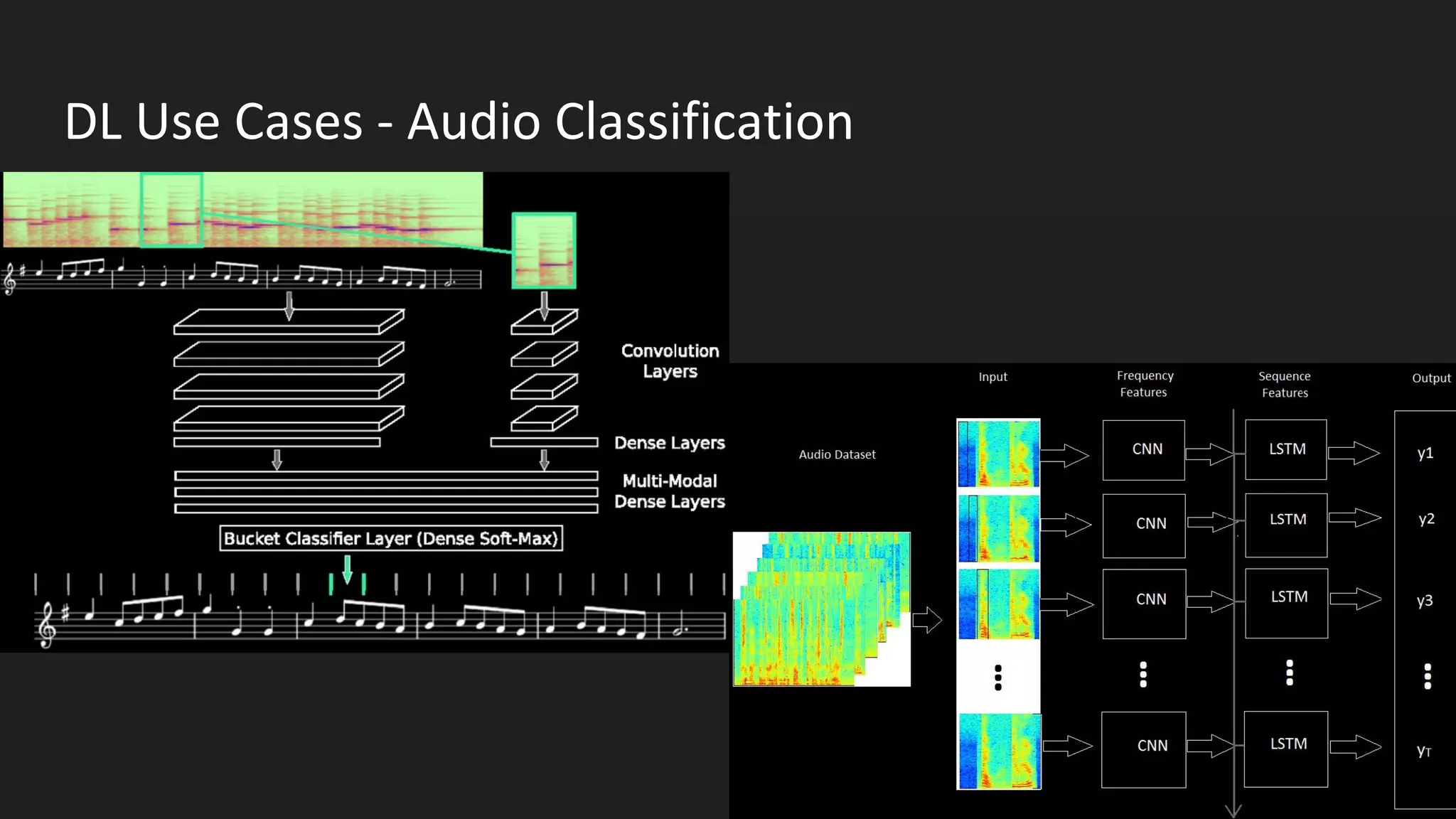

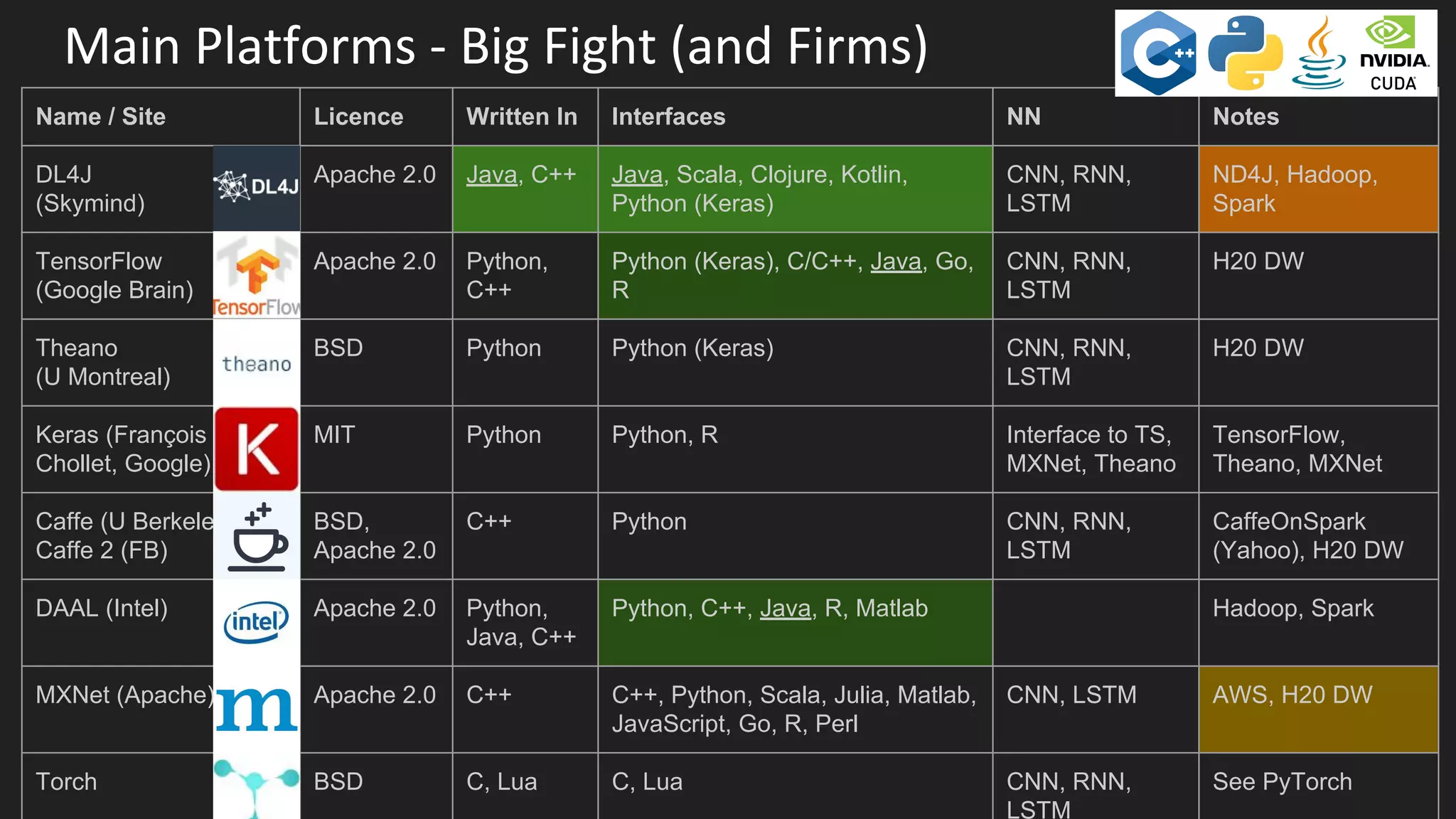

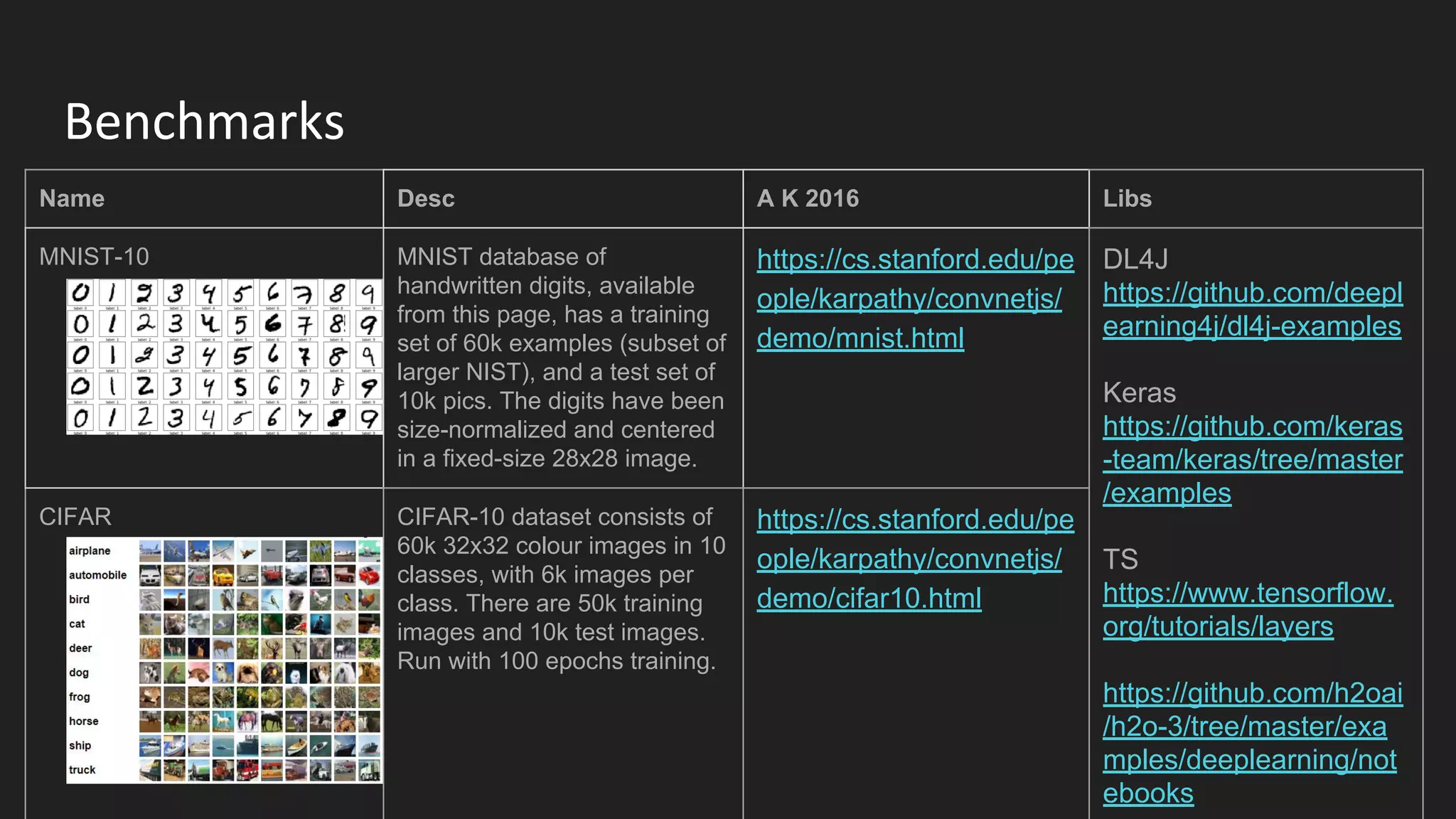

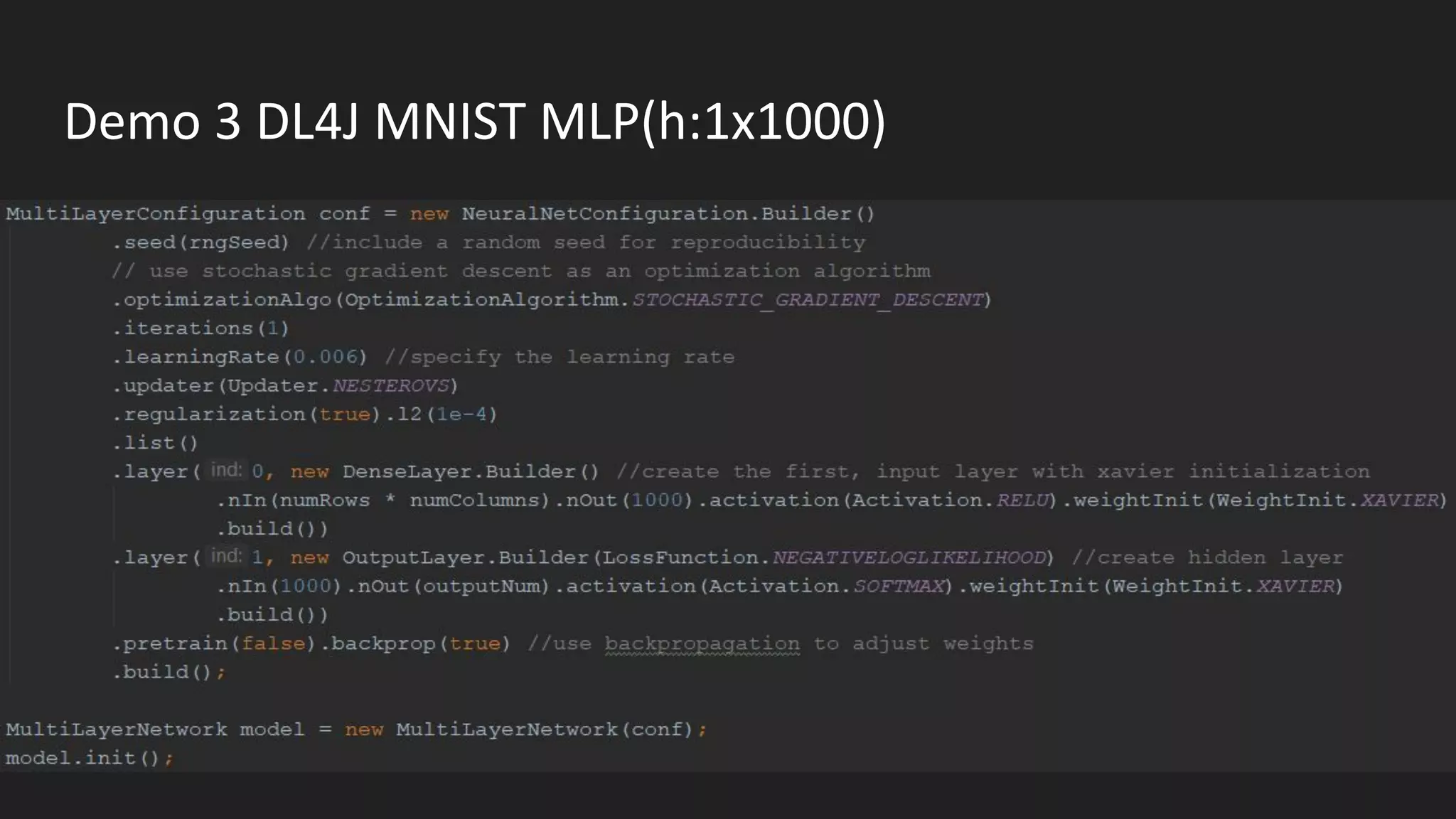

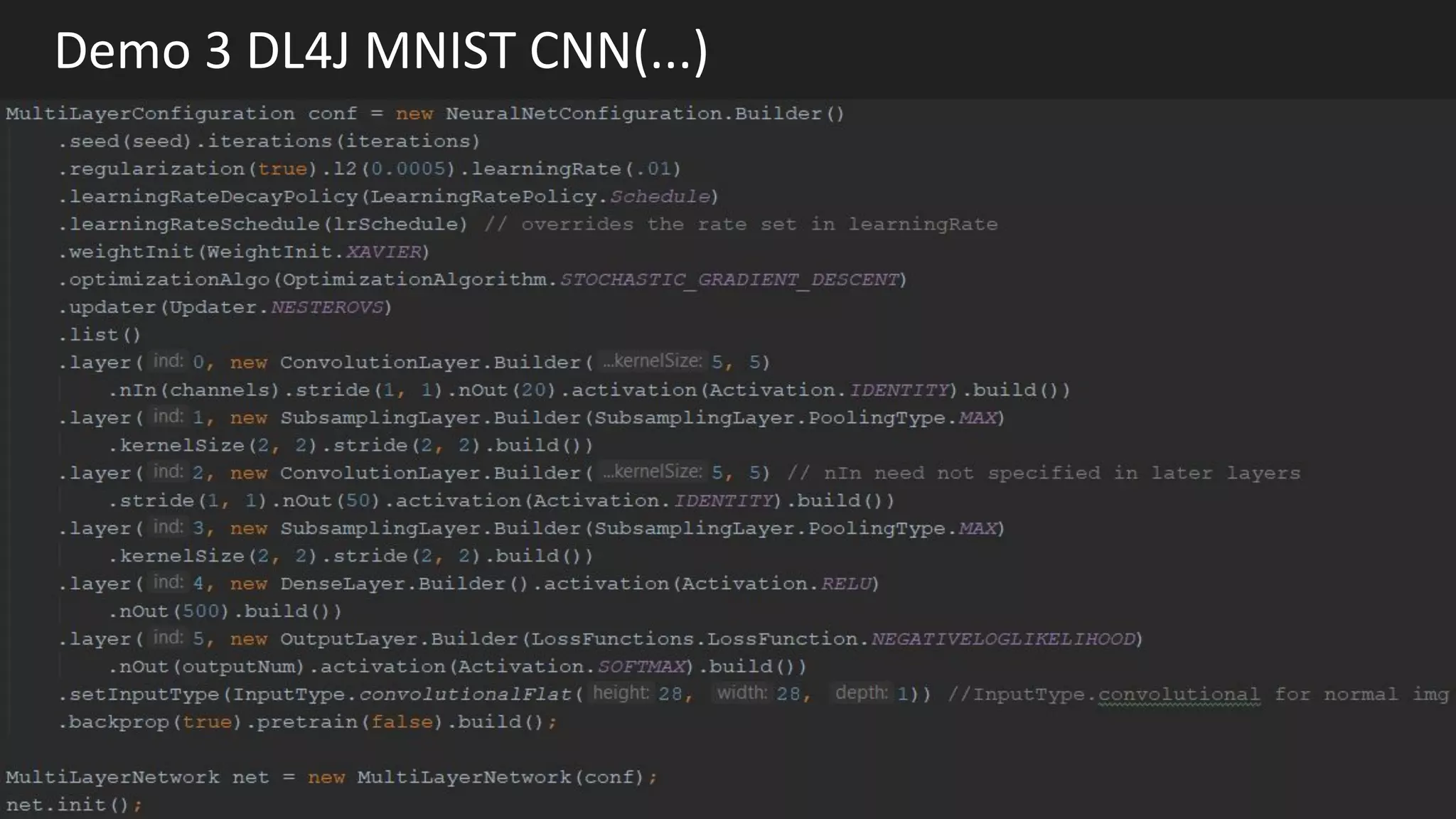

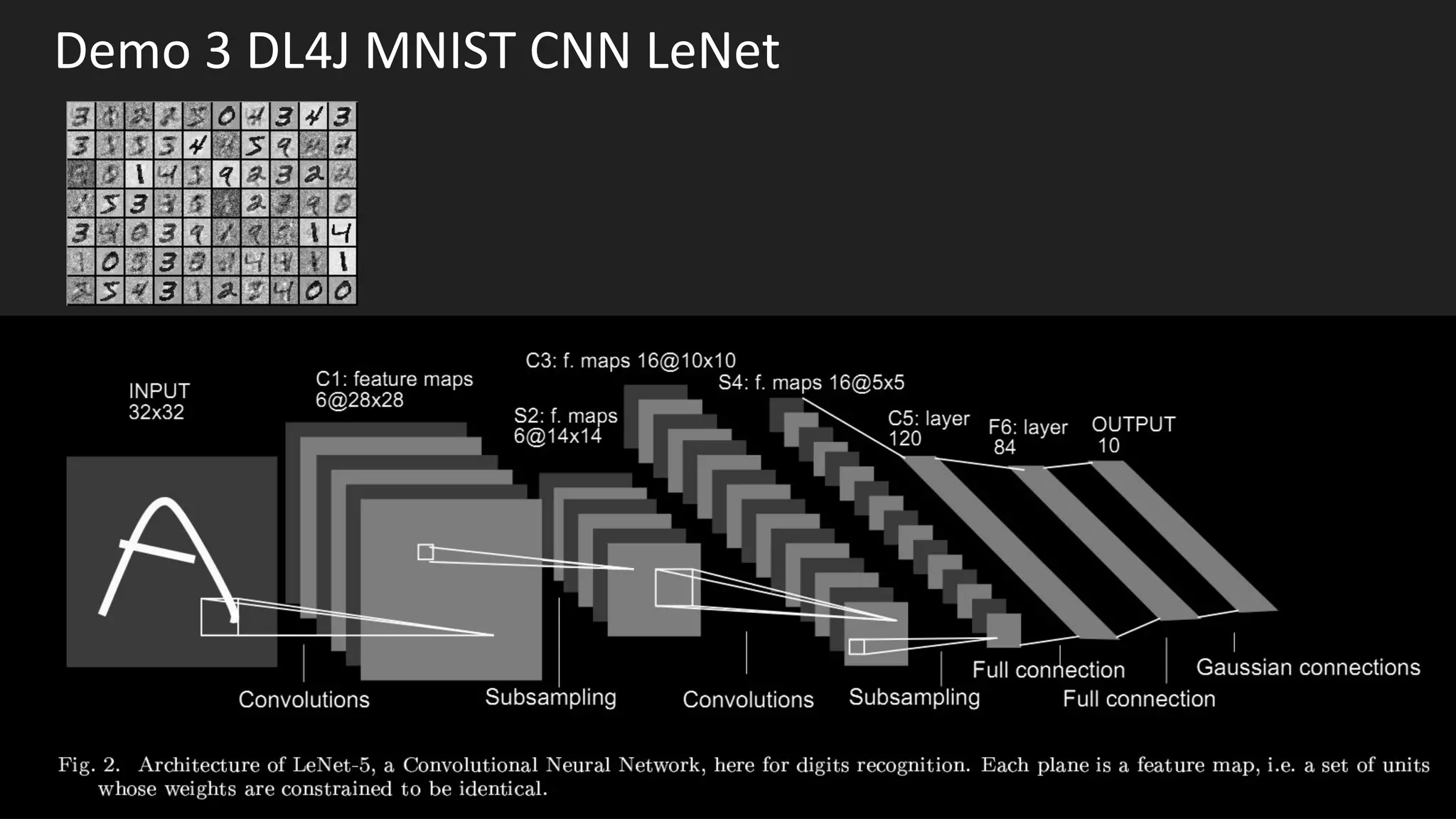

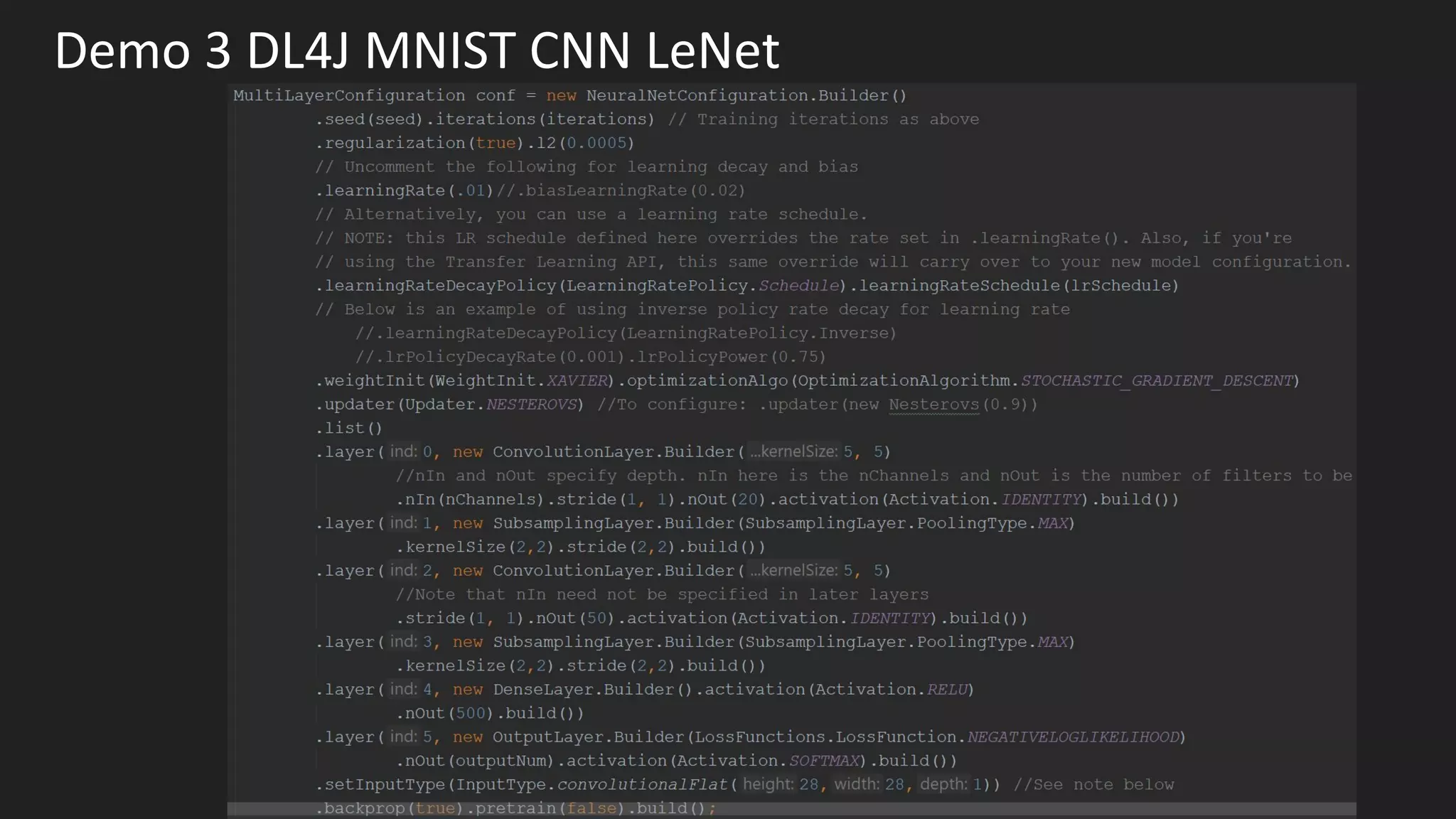

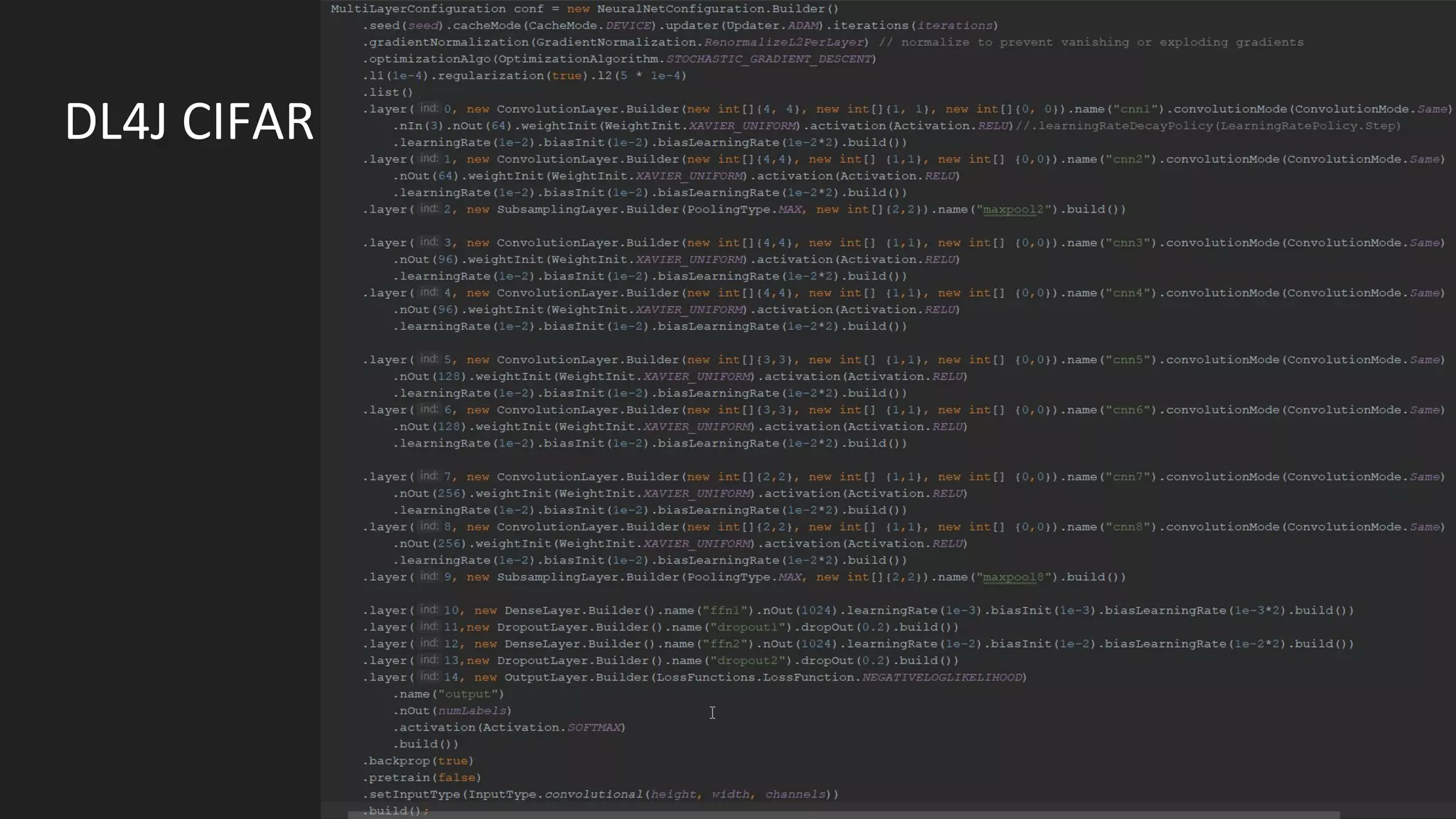

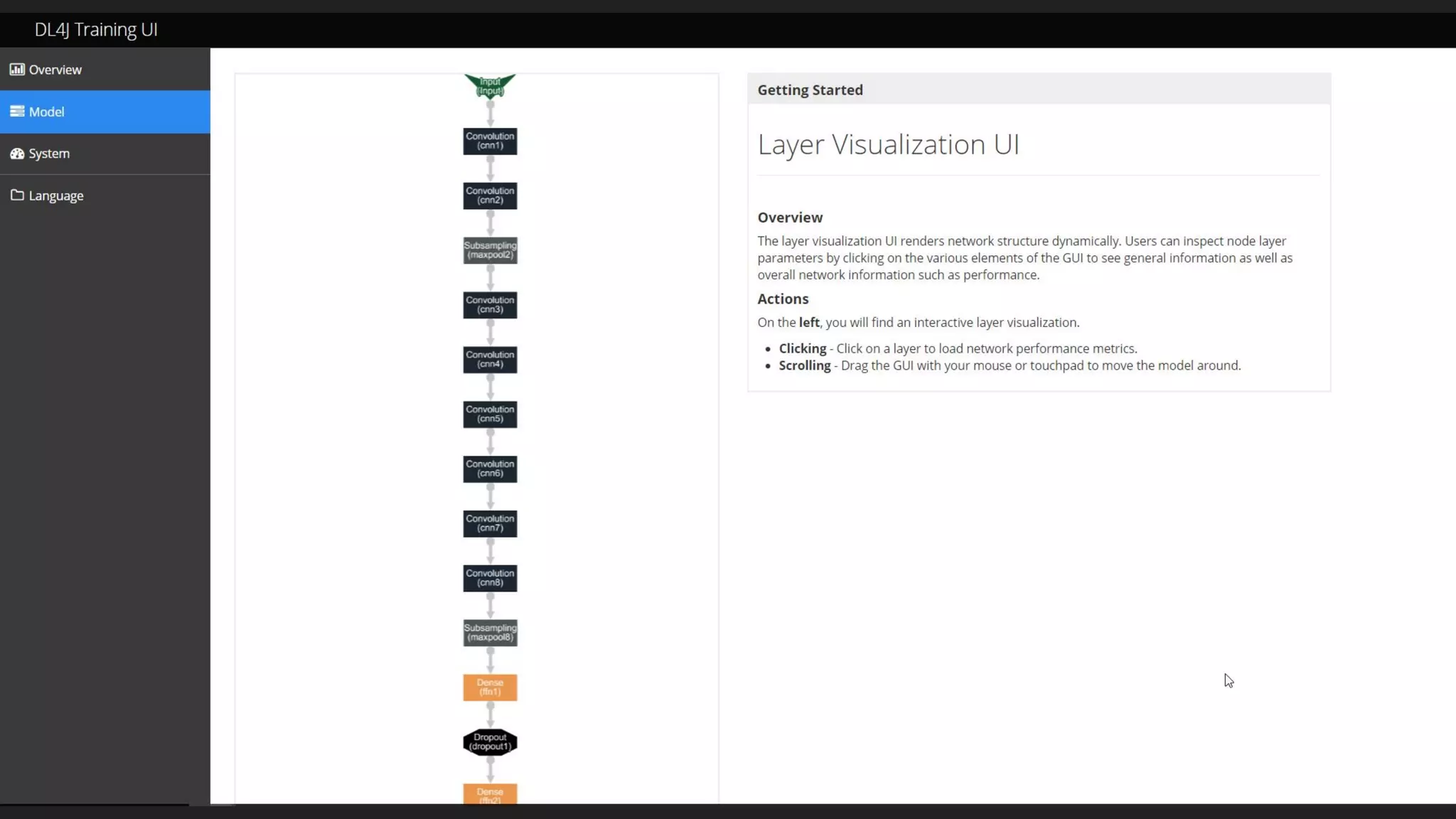

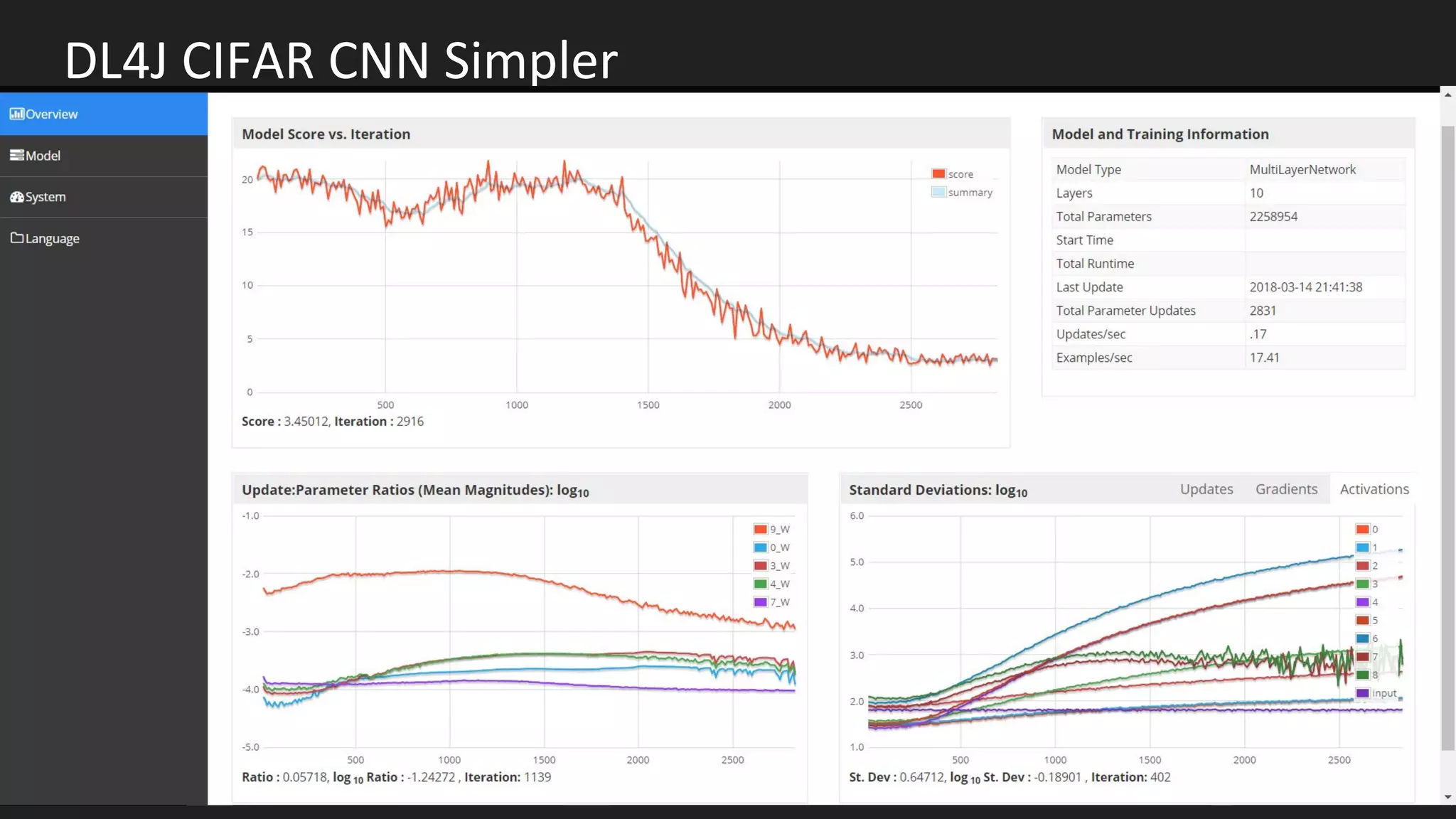

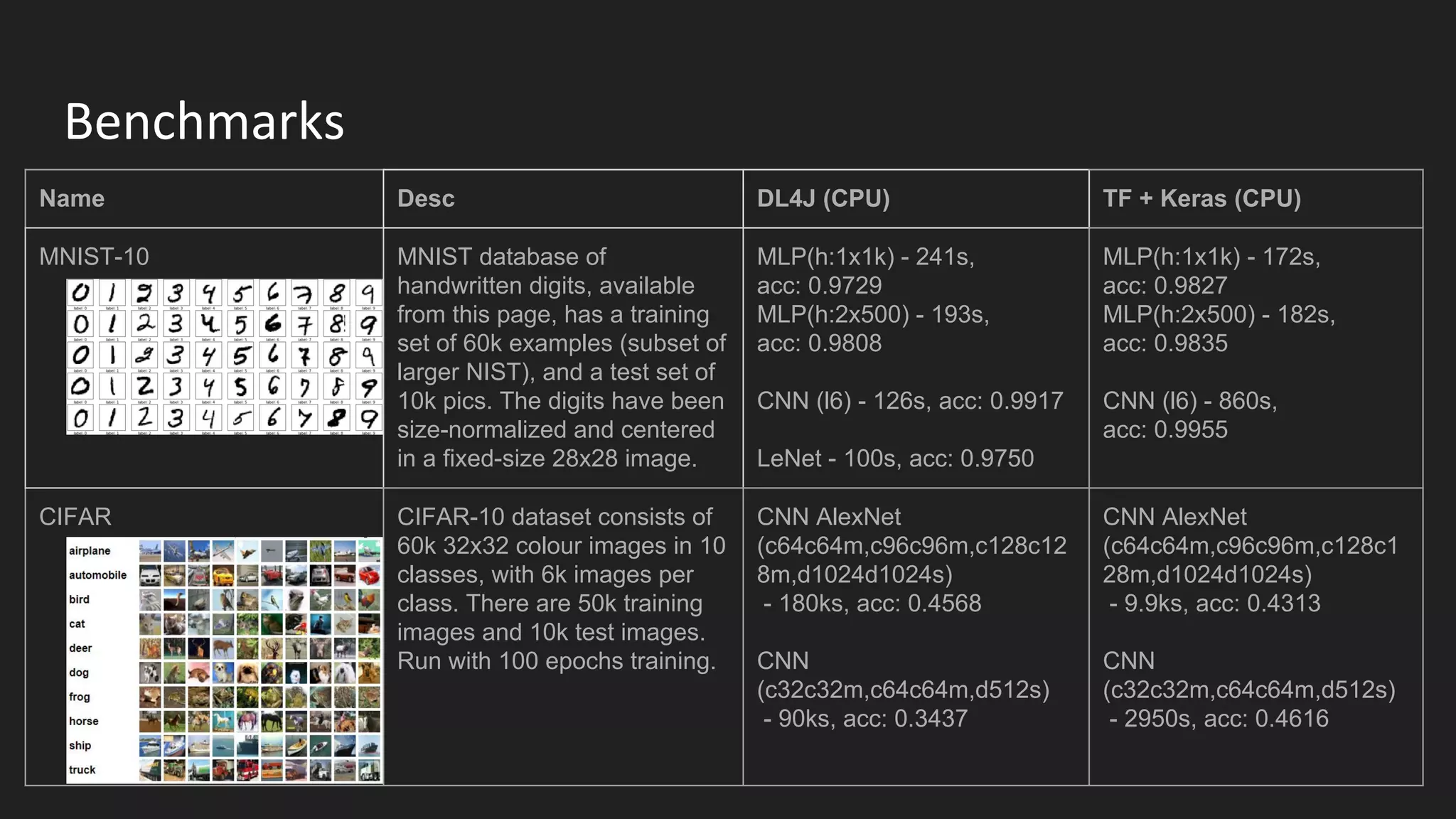

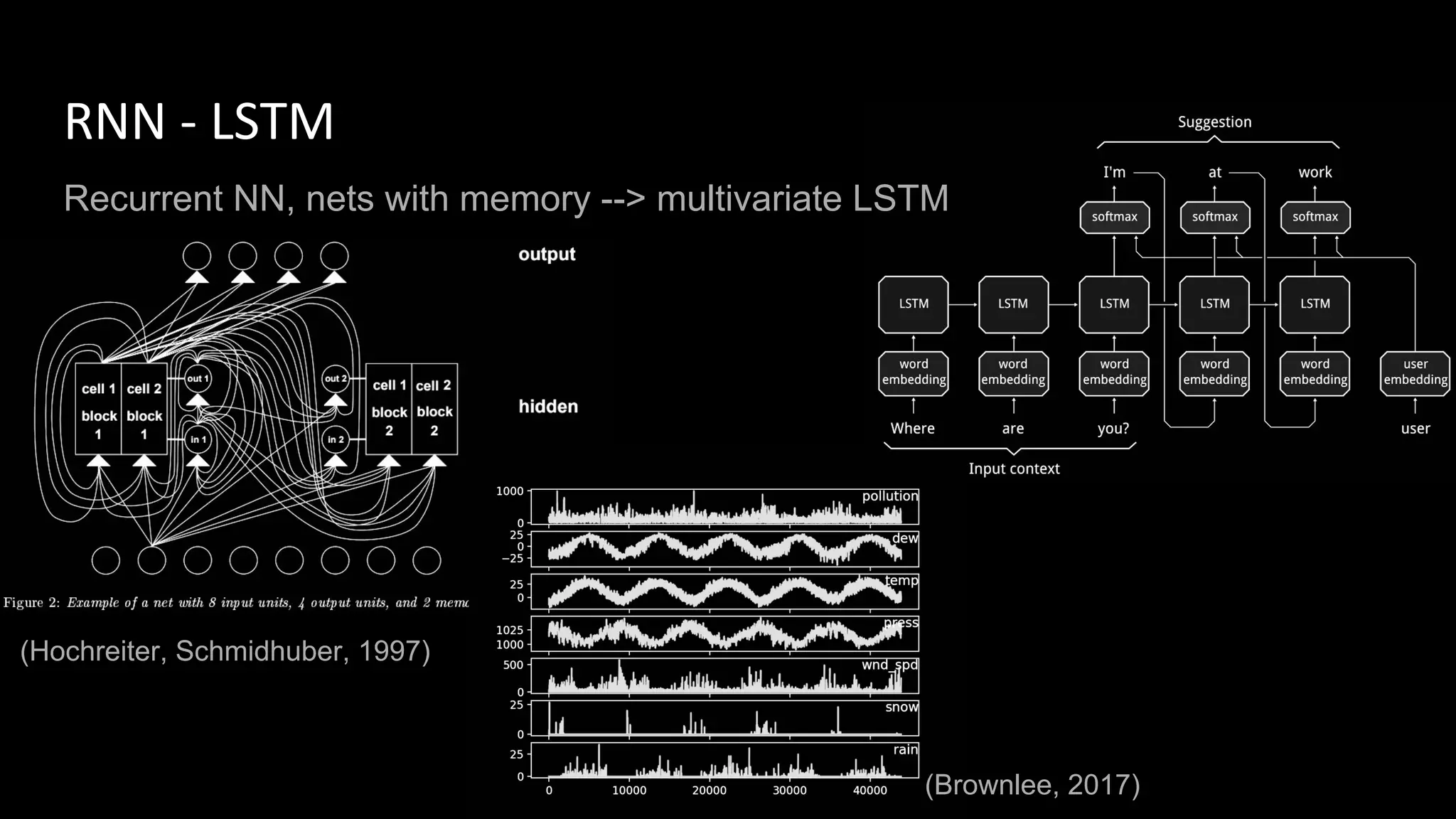

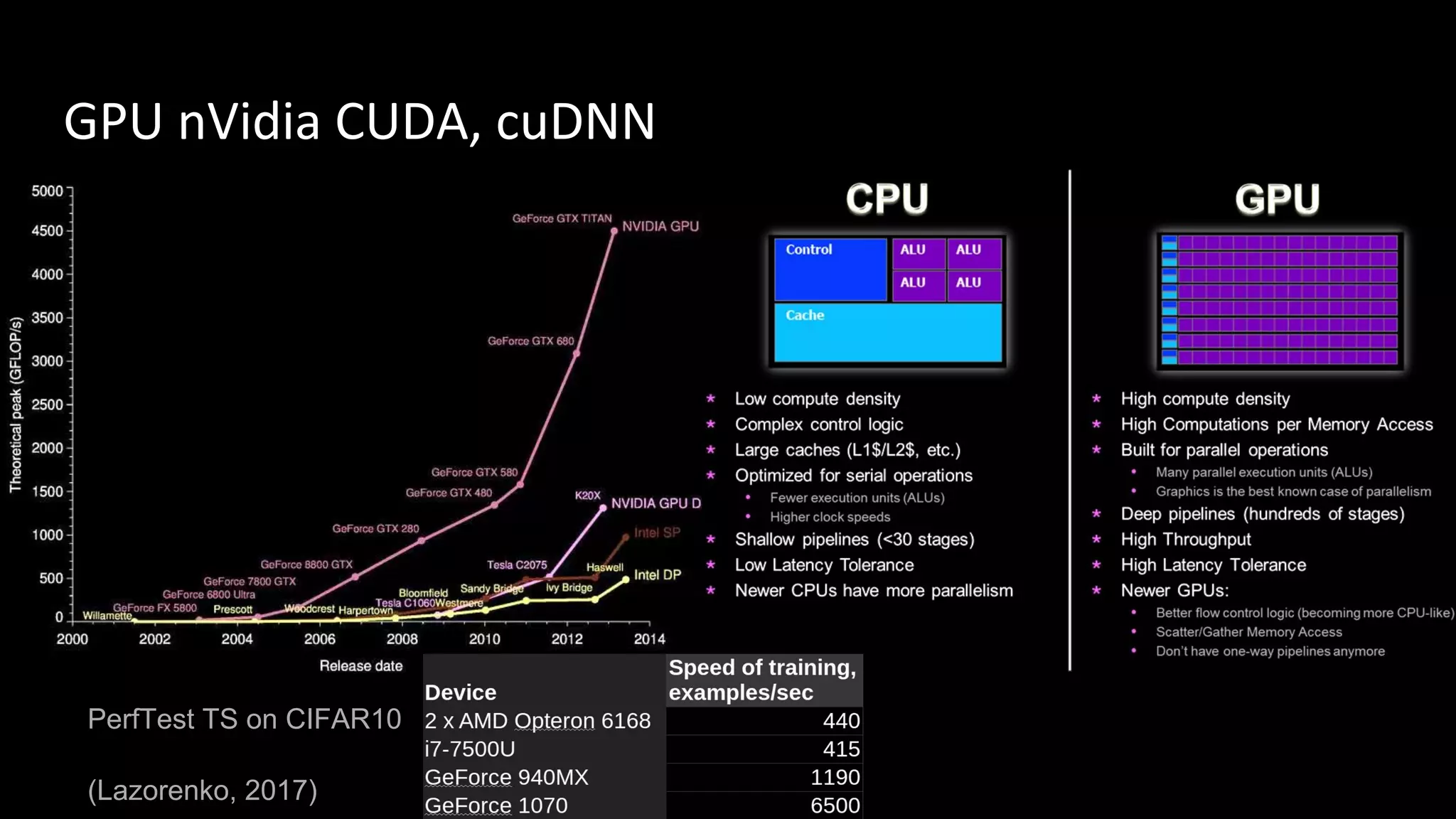

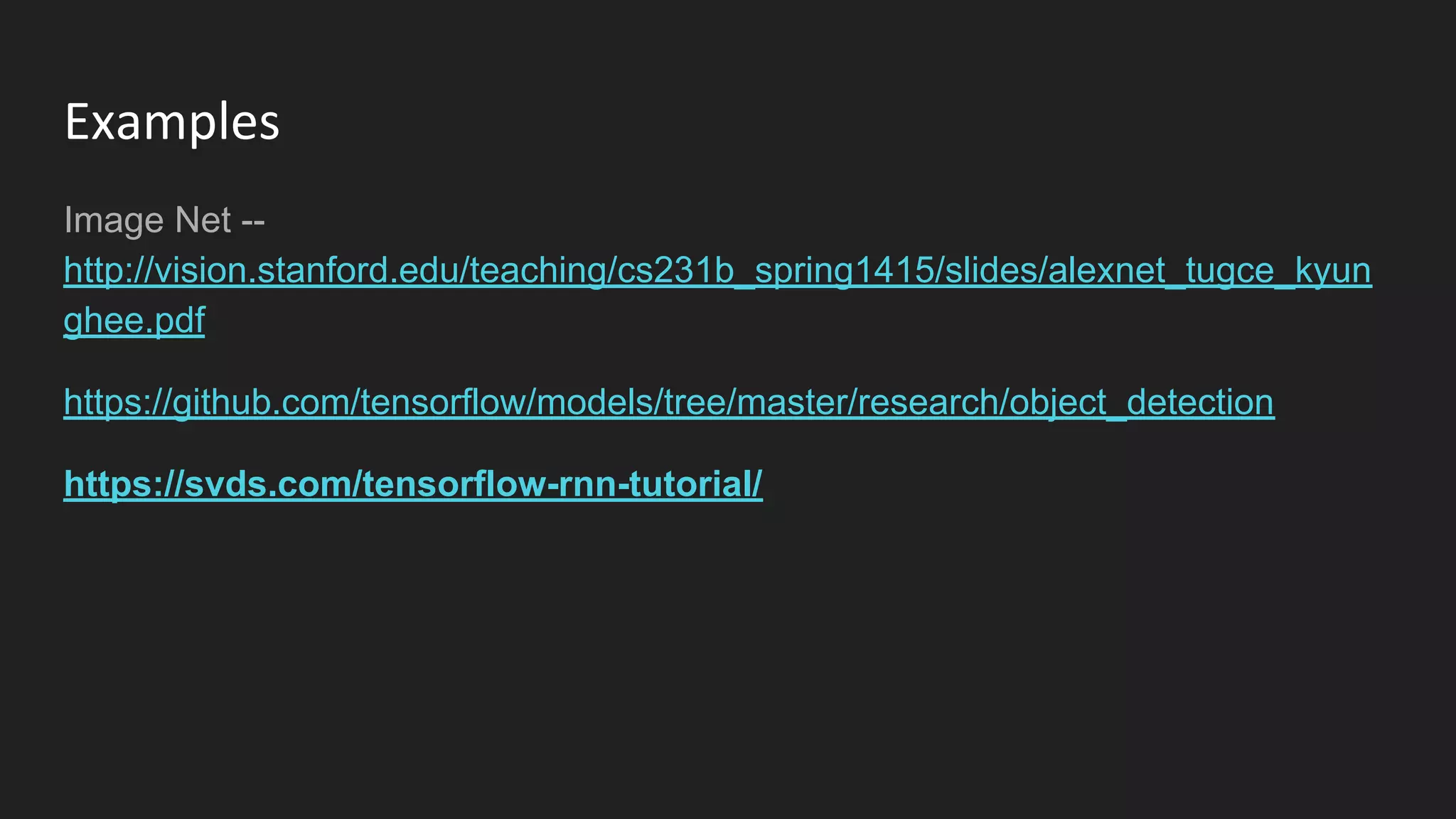

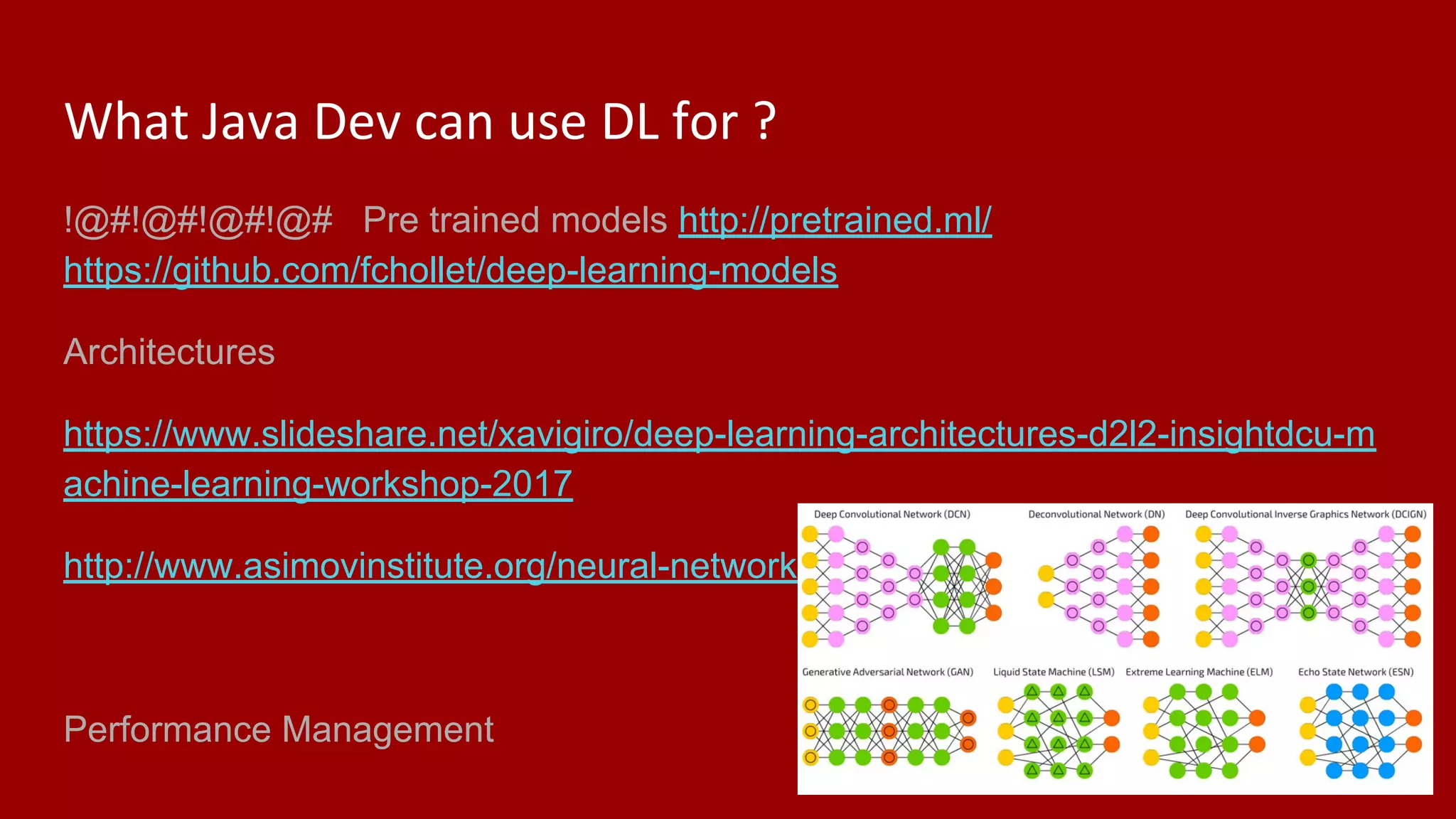

Java supports machine learning and deep learning through several libraries. Deep learning lets neural networks learn from large amounts of data using multilayer architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs). The talk discussed several popular libraries usable from Java that support both traditional machine learning algorithms and deep learning models, including DL4J, TensorFlow, Keras, and H2O. It provided examples of training deep learning models on the MNIST and CIFAR-10 datasets in DL4J and compared the performance of DL4J and TensorFlow.
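
To make the idea of a multilayer architecture concrete, here is a minimal sketch in plain Java. It is not taken from the talk and does not use DL4J or any other library mentioned above. The class name `TinyMlp`, the weights, and the step activation are all assumptions chosen for illustration: the weights are hand-picked rather than learned, so the example only shows how a hidden layer lets a network compute XOR, which a single layer cannot.

```java
public class TinyMlp {
    // Hypothetical, hand-picked weights (not learned from data).
    // Hidden unit 0 behaves like OR, hidden unit 1 behaves like AND.
    static final double[][] W1 = {{1.0, 1.0}, {1.0, 1.0}};
    static final double[] B1 = {-0.5, -1.5};
    // Output unit fires when OR is on and AND is off, which is XOR.
    static final double[] W2 = {1.0, -1.0};
    static final double B2 = -0.5;

    // Step activation: 1 if the pre-activation is positive, otherwise 0.
    static double step(double z) {
        return z > 0 ? 1.0 : 0.0;
    }

    // First layer: weighted sum of the inputs plus bias, then activation.
    static double[] hidden(double[] x) {
        double[] h = new double[W1.length];
        for (int j = 0; j < W1.length; j++) {
            double z = B1[j];
            for (int i = 0; i < x.length; i++) {
                z += W1[j][i] * x[i];
            }
            h[j] = step(z);
        }
        return h;
    }

    // Second layer: combines the hidden activations into one output.
    static int predict(double[] x) {
        double[] h = hidden(x);
        double z = B2;
        for (int j = 0; j < h.length; j++) {
            z += W2[j] * h[j];
        }
        return (int) step(z);
    }

    public static void main(String[] args) {
        double[][] inputs = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
        for (double[] x : inputs) {
            System.out.println((int) x[0] + " XOR " + (int) x[1] + " = " + predict(x));
        }
    }
}
```

A library such as DL4J would learn the weights from training data and use differentiable activations instead of a step function; the layered structure of the forward pass is the part this sketch is meant to show.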