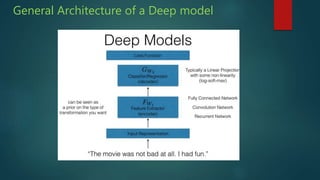

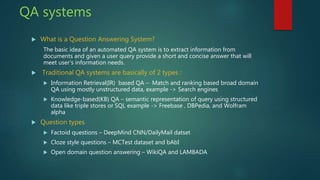

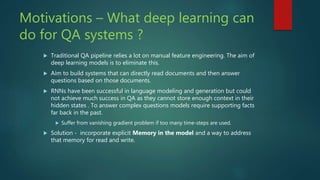

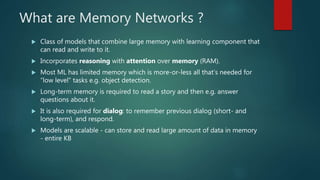

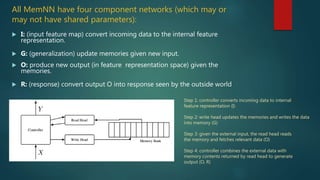

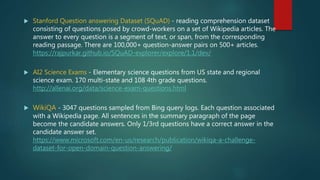

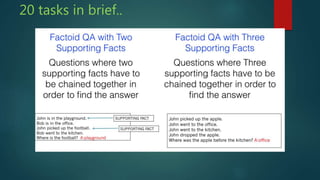

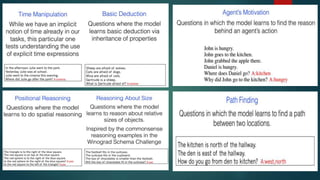

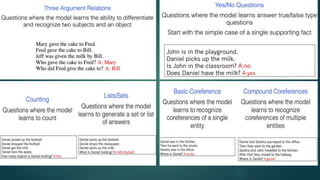

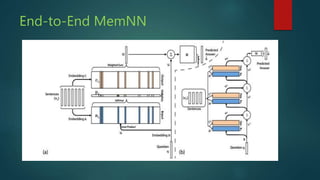

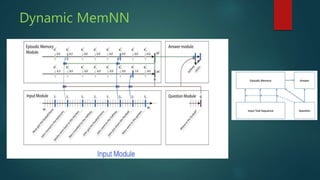

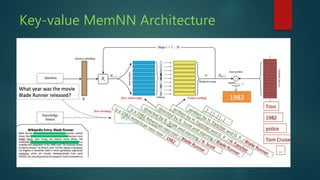

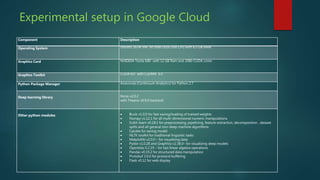

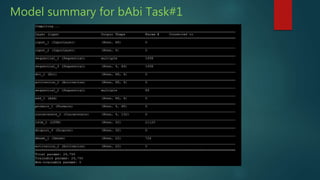

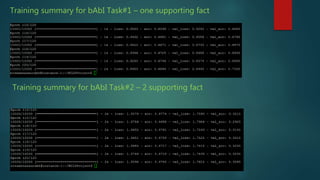

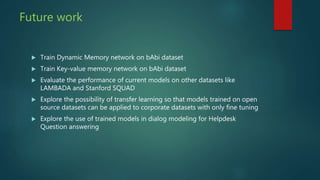

The presentation covers deep learning for question answering (QA), focusing on deep architectures such as memory networks, which combine learning with a memory component. It outlines the two main types of traditional QA systems, information retrieval-based and knowledge-based, and highlights the shift toward models that read documents and answer questions on their own. It also presents several datasets for training QA models and closes with future work on improving memory networks and applying them in real-world scenarios.