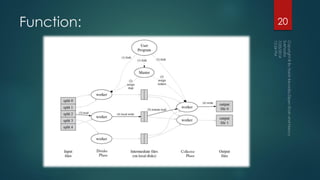

The document discusses computer clusters for processing large amounts of data and argues that distributed computing is needed to manage big data efficiently. It explains how a master node coordinates the tasks of worker nodes, and why dedicated algorithms are needed when data inputs depend on one another. It also describes the MapReduce programming model as a way to build scalable, fault-tolerant systems for data processing tasks.
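
The MapReduce model mentioned above can be illustrated with a minimal, single-process sketch. It is not taken from the document: the names `map_reduce`, `word_count_mapper`, and `word_count_reducer`, the `num_workers` parameter, and the word-count task are all assumptions chosen for illustration. The "master" here is just a function that splits the input among simulated workers; a real cluster would run the chunks on separate machines and re-run the tasks of failed workers, which this sketch does not model.

```python
from collections import defaultdict


def map_reduce(inputs, mapper, reducer, num_workers=2):
    """Toy MapReduce driver (hypothetical helper, runs in one process)."""
    # "Master" step: split the input records among the simulated workers.
    chunks = [inputs[i::num_workers] for i in range(num_workers)]

    # Map phase: each worker turns its records into (key, value) pairs.
    intermediate = []
    for chunk in chunks:
        for record in chunk:
            intermediate.extend(mapper(record))

    # Shuffle phase: group all values that share a key.
    groups = defaultdict(list)
    for key, value in intermediate:
        groups[key].append(value)

    # Reduce phase: combine the values of each key into one result.
    return {key: reducer(key, values) for key, values in groups.items()}


def word_count_mapper(line):
    # Emit (word, 1) for every word in the line.
    return [(word.lower(), 1) for word in line.split()]


def word_count_reducer(word, counts):
    # Sum the partial counts collected for one word.
    return sum(counts)


lines = ["big data needs clusters", "clusters process big data"]
counts = map_reduce(lines, word_count_mapper, word_count_reducer)
print(counts)
```

Because each record is mapped independently and each key is reduced independently, both phases can be spread over many worker nodes. That independence is what lets this kind of model scale and recover from failures by re-running only the affected tasks.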