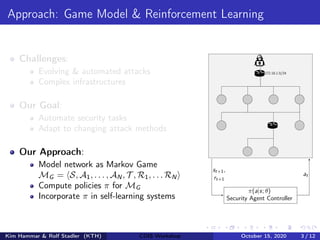

- The document describes using reinforcement learning to model network security as a Markov game and learn security strategies through self-play.

- The network infrastructure is modeled as a graph and the interactions between an attacker and defender are framed as a partially observable, zero-sum Markov game.

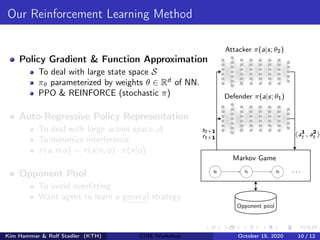

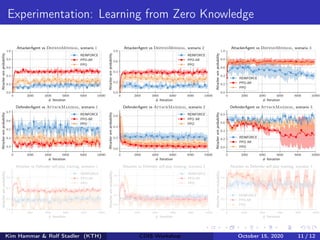

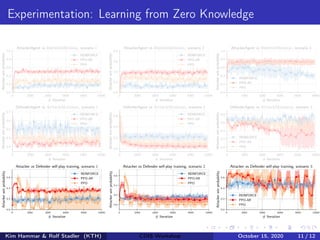

- Reinforcement learning is used to approximate optimal policies for both players by representing their policies with neural networks and optimizing rewards over many episodes of self-play without human intervention.

![Markov Game Model for Intrusion Prevention](https://image.slidesharecdn.com/cdishammarstadler15oct2020-201015122316/85/Self-Learning-Systems-for-Cyber-Security-11-320.jpg)

Markov Game Model for Intrusion Prevention

- (1) Network infrastructure

- (2) Graph $G = \langle N, E \rangle$, with nodes labeled $N_{\text{data}}$ and $N_{\text{start}}$

- (3) State space: $|S| = (w + 1)^{|N| \cdot m \cdot (m+1)}$

Node states shown on the slide:

- $S^A_0 = [0, 0, 0, 0]$, $S^D_0 = [0, 0, 0, 0, 0]$

- $S^A_1 = [0, 0, 0, 0]$, $S^D_1 = [1, 9, 9, 8, 1]$

- $S^A_2 = [0, 0, 0, 0]$, $S^D_2 = [9, 9, 9, 8, 1]$

- $S^A_3 = [0, 0, 0, 0]$, $S^D_3 = [9, 1, 5, 8, 1]$

Kim Hammar & Rolf Stadler (KTH), CDIS Workshop, October 15, 2020, slide 7 / 12
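The per-node state vectors on this slide can be held in a small data structure. The following is a minimal sketch, not the authors' implementation. It assumes that $S^A_k$ holds $m$ attacker-side values and $S^D_k$ holds $m + 1$ defender-side values for node $k$, that every value lies in $0..w$, and that $m = 4$ and $w = 9$ (inferred from the vector lengths and the largest value shown). The names `NodeState`, `validate`, and `SLIDE_STATE` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class NodeState:
    """State of one node (hypothetical container, not from the slides)."""
    attack: Tuple[int, ...]   # S^A_k, assumed to have length m
    defense: Tuple[int, ...]  # S^D_k, assumed to have length m + 1

    def validate(self, m: int, w: int) -> None:
        """Raise ValueError if the vectors do not fit the assumed shape and range."""
        if len(self.attack) != m or len(self.defense) != m + 1:
            raise ValueError("expected m attack values and m + 1 defense values")
        if any(not 0 <= v <= w for v in self.attack + self.defense):
            raise ValueError("every value must lie in 0..w")


# Node states transcribed from the slide, keyed by node index.
SLIDE_STATE: Dict[int, NodeState] = {
    0: NodeState((0, 0, 0, 0), (0, 0, 0, 0, 0)),
    1: NodeState((0, 0, 0, 0), (1, 9, 9, 8, 1)),
    2: NodeState((0, 0, 0, 0), (9, 9, 9, 8, 1)),
    3: NodeState((0, 0, 0, 0), (9, 1, 5, 8, 1)),
}

# Check every node against the assumed m = 4 and w = 9.
for node_state in SLIDE_STATE.values():
    node_state.validate(m=4, w=9)
```

Frozen dataclasses with tuples keep each state hashable, which is convenient if states are later used as dictionary keys.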

![Markov Game Model for Intrusion Prevention](https://image.slidesharecdn.com/cdishammarstadler15oct2020-201015122316/85/Self-Learning-Systems-for-Cyber-Security-14-320.jpg)

Markov Game Model for Intrusion Prevention (complete build)

- (1) Network infrastructure

- (2) Graph $G = \langle N, E \rangle$, with nodes labeled $N_{\text{data}}$ and $N_{\text{start}}$

- (3) State space: $|S| = (w + 1)^{|N| \cdot m \cdot (m+1)}$

- (4) Action space: $|A| = |N| \cdot (m + 1)$

- (5) Game dynamics: $T$, $R_1$, $R_2$, $\rho_0$

Node annotations in the figure: `?/0` on every node in step (4); `4/0`, `0/1`, `8/0`, `0/0`, `9/0`, `8/0`, and `?/0` in step (5).

The resulting model is a

- Markov game

- Zero-sum

- 2 players

- Partially observed

- Stochastic elements

- Round-based

$$MG = \langle S, A_1, A_2, T, R_1, R_2, \gamma, \rho_0 \rangle$$
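To get a feel for the two size formulas, they can be evaluated for the example shown on the slide. This is a sketch under stated assumptions: the state-space formula is read as an exponent, $(w + 1)^{|N| \cdot m \cdot (m+1)}$, and the example uses $|N| = 4$, $m = 4$, and $w = 9$, which are inferred from the slide's node vectors rather than stated in the text. The function names are hypothetical.

```python
def state_space_size(w: int, num_nodes: int, m: int) -> int:
    """|S| = (w + 1)^(|N| * m * (m + 1)), reading the slide formula as a power."""
    return (w + 1) ** (num_nodes * m * (m + 1))


def action_space_size(num_nodes: int, m: int) -> int:
    """|A| = |N| * (m + 1)."""
    return num_nodes * (m + 1)


# Assumed example values: 4 nodes, m = 4, values in 0..9.
num_actions = action_space_size(num_nodes=4, m=4)        # 20
num_states = state_space_size(w=9, num_nodes=4, m=4)     # 10 ** 80
print(num_actions, len(str(num_states)) - 1)             # prints "20 80"
```

Even for this four-node example the state count is $10^{80}$ under these assumptions, while the action count stays at 20, which is why the state space is the harder of the two to handle.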

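![The Reinforcement Learning Problem](https://image.slidesharecdn.com/cdishammarstadler15oct2020-201015122316/85/Self-Learning-Systems-for-Cyber-Security-16-320.jpg)

The Reinforcement Learning Problem

Goal:

- Approximate $\pi^* = \arg\max_{\pi} \mathbb{E}\left[\sum_{t=0}^{T} \gamma^t r_{t+1}\right]$

Learning algorithm:

- Represent $\pi$ by $\pi_\theta$

- Define the objective $J(\theta) = \mathbb{E}_{o \sim \rho^{\pi_\theta},\, a \sim \pi_\theta}[R]$

- Maximize $J(\theta)$ by stochastic gradient ascent with gradient $\nabla_\theta J(\theta) = \mathbb{E}_{o \sim \rho^{\pi_\theta},\, a \sim \pi_\theta}\left[\nabla_\theta \log \pi_\theta(a \mid o)\, A^{\pi_\theta}(o, a)\right]$

Domain-specific challenges:

- Partial observability: captured in the model

- Large state space: $|S| = (w + 1)^{|N| \cdot m \cdot (m+1)}$

- Large action space: $|A| = |N| \cdot (m + 1)$

- Non-stationary environment due to the presence of an adversary

Figure: agent-environment loop. The agent sends action $a_t$ to the environment, which returns the next state $s_{t+1}$ and reward $r_{t+1}$.

Kim Hammar & Rolf Stadler (KTH), CDIS Workshop, October 15, 2020, slide 9 / 12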
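The gradient on this slide can be illustrated on a toy problem. The sketch below is a deliberate simplification, not the method from the talk: it uses a single agent, one-step episodes, no observations, a softmax policy whose parameters are the action logits, and an advantage estimated as the reward minus a running-average baseline. All function names, the reward table, and the hyperparameters are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def grad_log_softmax(logits, action):
    """Gradient of log pi_theta(action) w.r.t. the logits: one_hot(action) - pi."""
    probs = softmax(logits)
    return [(1.0 if i == action else 0.0) - p for i, p in enumerate(probs)]


def discounted_return(rewards, gamma):
    """Sum over t of gamma^t * r_{t+1}, for rewards listed in time order."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def train(rewards_per_action, steps=5000, lr=0.1, seed=0):
    """Stochastic gradient ascent on J(theta) for a one-step toy problem."""
    rng = random.Random(seed)
    theta = [0.0] * len(rewards_per_action)
    baseline = 0.0  # running average of rewards, used to estimate the advantage
    for _ in range(steps):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]
        reward = rewards_per_action[action]
        advantage = reward - baseline
        grad = grad_log_softmax(theta, action)
        # Ascent step along the sampled estimate grad(log pi) * advantage.
        theta = [t + lr * advantage * g for t, g in zip(theta, grad)]
        baseline += 0.05 * (reward - baseline)
    return softmax(theta)


final_policy = train([0.0, 1.0, 0.2])
```

With the hypothetical reward table above, the learned policy should place most of its probability on the action with reward 1.0. In the setting of the talk, the policy would instead be conditioned on observations and the opponent would change over time, which this toy version does not capture.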
