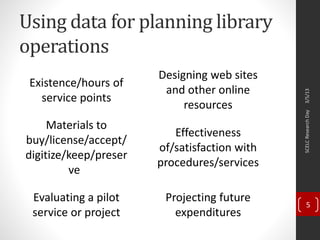

The presentation discusses using data to make evidence-driven decisions in library operations, covering areas such as service evaluation, materials management, and website design. It emphasizes analyzing metrics such as cost-effectiveness, user satisfaction, and future projections to improve library services, and it offers strategies for collecting and analyzing data to inform decisions and improve service delivery.

![Evaluating a pilot service or project

• Is the cost/benefit ratio appropriate?

• What is the raw cost?

• But it’s not all about cost/benefit:

• Is the pilot achieving its aims?

• Does this [whatever] do what we thought it would?

• What collateral effects will it have?

• Were the assumptions we made correct?

• Data collection will be varied for this task

3/5/13 SCELC Research Day

12](https://image.slidesharecdn.com/scelc-140316203326-phpapp01/85/Analyzing-Data-Getting-Results-Making-it-All-Make-Sense-12-320.jpg)
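The "raw cost" and "cost/benefit ratio" questions on this slide come down to simple arithmetic once costs and benefits are recorded. The sketch below is a minimal Python illustration, not the presenter's method: the `PilotBudget` class, its fields, and all figures are hypothetical, and it assumes the benefit has already been estimated in the same currency unit as the costs. Because benefits are often hard to price, it also computes cost per use as a common proxy.

```python
from dataclasses import dataclass, field


@dataclass
class PilotBudget:
    """Hypothetical cost record for a pilot service; all fields are illustrative."""
    # One-off and recurring cost items, in a single currency unit.
    cost_items: dict = field(default_factory=dict)
    # Estimated monetary value of the benefit delivered (an assumption to justify).
    estimated_benefit: float = 0.0
    # Number of times the service was used during the pilot.
    uses: int = 0

    def raw_cost(self) -> float:
        """Sum of all cost items: the pilot's raw cost."""
        return sum(self.cost_items.values())

    def cost_benefit_ratio(self) -> float:
        """Cost divided by estimated benefit; below 1.0 means benefit exceeds cost."""
        if self.estimated_benefit <= 0:
            raise ValueError("estimated_benefit must be positive")
        return self.raw_cost() / self.estimated_benefit

    def cost_per_use(self) -> float:
        """Raw cost spread over recorded uses, a proxy when benefit is hard to price."""
        if self.uses <= 0:
            raise ValueError("uses must be positive")
        return self.raw_cost() / self.uses


# Made-up figures for illustration only.
pilot = PilotBudget(
    cost_items={"software licence": 1200.0, "staff hours": 800.0},
    estimated_benefit=2500.0,
    uses=400,
)
print(pilot.raw_cost())            # 2000.0
print(pilot.cost_benefit_ratio())  # 0.8
print(pilot.cost_per_use())        # 5.0
```

As the slide notes, these numbers answer only the cost questions; whether the pilot meets its aims and whether the assumptions held still need other evidence.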

![Effectiveness of/satisfaction with procedures/services

• What parts of our current service are users most and least happy about?

• What are the inefficiencies in our procedure for [whatever]?

• Some data collection ideas:

• User surveys

• Ratio of potential to actual users

• Ratio of returning to non-returning users

• Error/failure rates

• Time from request to delivery

• Time tracking during staff activity


14](https://image.slidesharecdn.com/scelc-140316203326-phpapp01/85/Analyzing-Data-Getting-Results-Making-it-All-Make-Sense-14-320.jpg)
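Several of the data collection ideas on this slide (potential vs. actual users, returning vs. non-returning users, error/failure rates, time from request to delivery) reduce to counts, ratios, and durations over a request log. The following is a minimal Python sketch under stated assumptions: the `Request` record and its fields are hypothetical stand-ins for whatever your system actually logs, the number of potential users must come from elsewhere (for example, enrollment figures), and the sample data are invented. User surveys and staff time tracking are not covered here.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import List, Optional


@dataclass
class Request:
    """One hypothetical service request (e.g. an interlibrary loan); fields are illustrative."""
    user_id: str
    requested: datetime
    delivered: Optional[datetime]  # None if the request failed or is still open
    failed: bool = False


def potential_to_actual_ratio(potential_users: int, requests: List[Request]) -> float:
    """Potential users divided by distinct users who actually made a request."""
    actual = len({r.user_id for r in requests})
    if actual == 0:
        raise ValueError("no actual users in the log")
    return potential_users / actual


def returning_to_non_returning_ratio(requests: List[Request]) -> float:
    """Users with more than one request divided by users with exactly one."""
    counts = {}
    for r in requests:
        counts[r.user_id] = counts.get(r.user_id, 0) + 1
    returning = sum(1 for n in counts.values() if n > 1)
    non_returning = sum(1 for n in counts.values() if n == 1)
    if non_returning == 0:
        raise ValueError("no non-returning users in the log")
    return returning / non_returning


def failure_rate(requests: List[Request]) -> float:
    """Share of requests flagged as failed."""
    return sum(r.failed for r in requests) / len(requests)


def median_delivery_hours(requests: List[Request]) -> float:
    """Median hours from request to delivery, over delivered requests only."""
    hours = [
        (r.delivered - r.requested).total_seconds() / 3600
        for r in requests
        if r.delivered is not None
    ]
    return median(hours)


# Invented sample log for illustration.
t0 = datetime(2013, 3, 1, 9, 0)
log = [
    Request("a", t0, datetime(2013, 3, 2, 9, 0)),   # delivered after 24 h
    Request("a", t0, datetime(2013, 3, 1, 21, 0)),  # delivered after 12 h
    Request("b", t0, None, failed=True),
    Request("c", t0, datetime(2013, 3, 3, 9, 0)),   # delivered after 48 h
]
print(potential_to_actual_ratio(30, log))     # 10.0
print(returning_to_non_returning_ratio(log))  # 0.5
print(failure_rate(log))                      # 0.25
print(median_delivery_hours(log))             # 24.0
```

The median is used for delivery time because a few very slow requests can distort a mean; inverting the first ratio (actual divided by potential) gives the share of potential users the service actually reaches.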