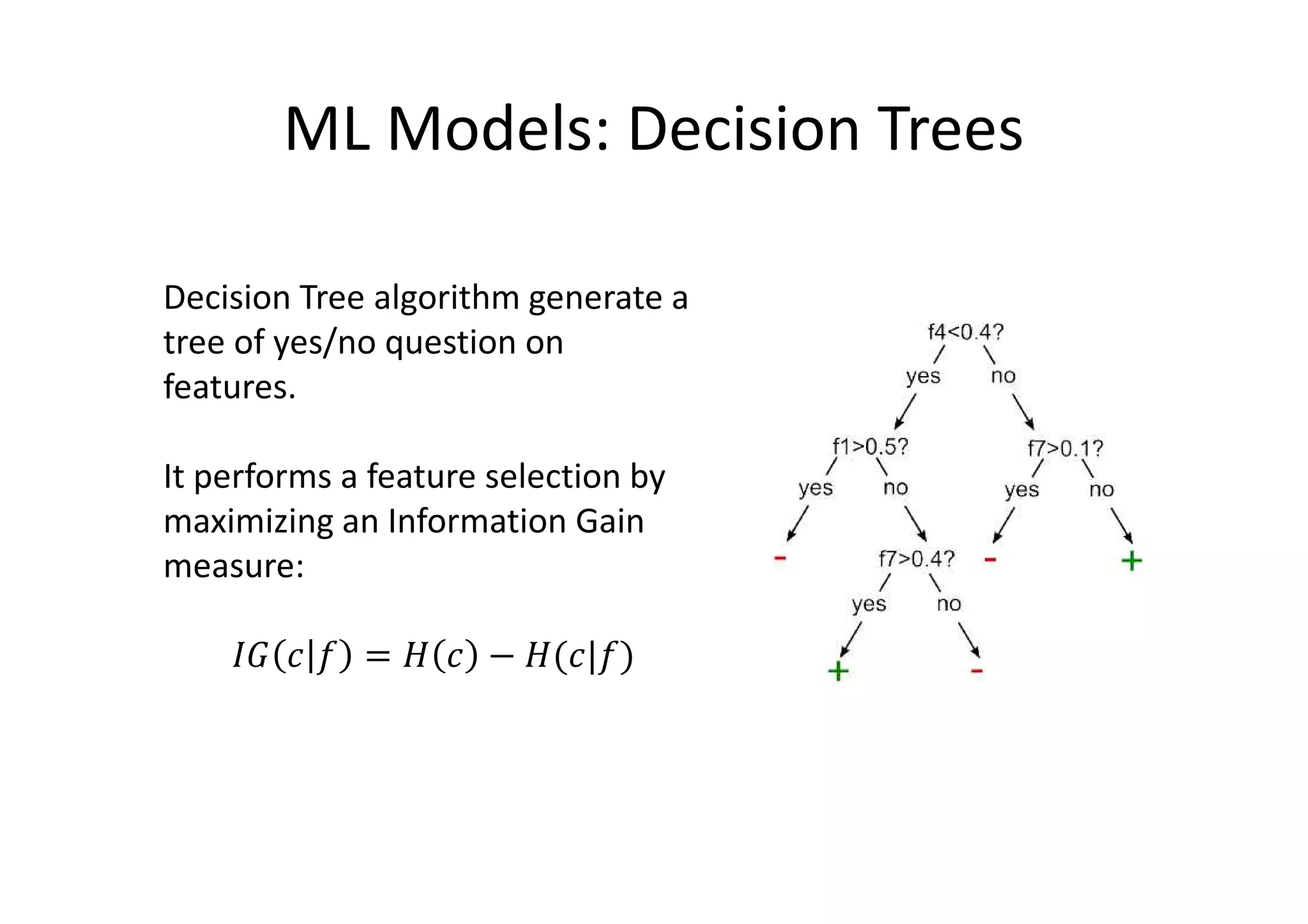

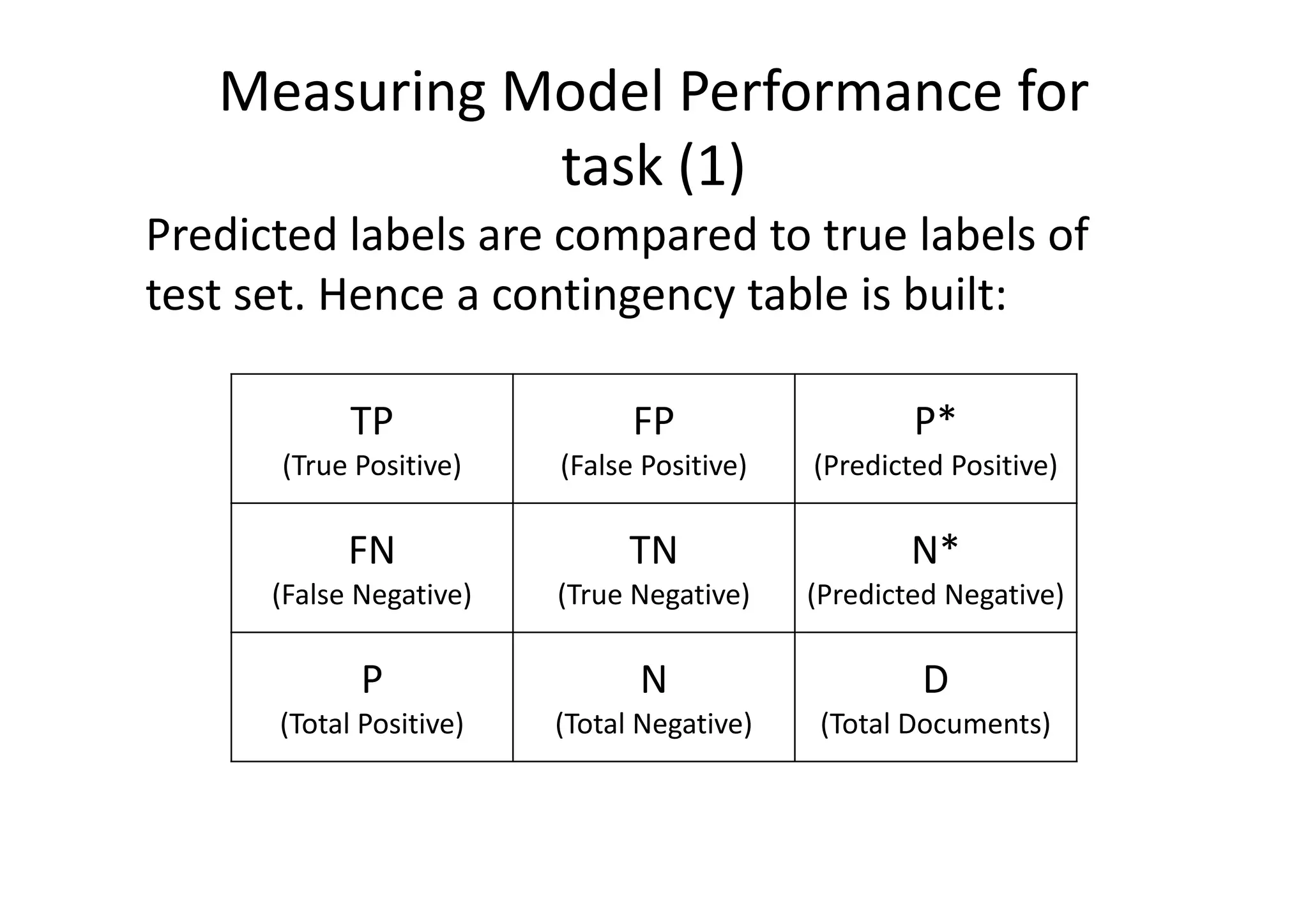

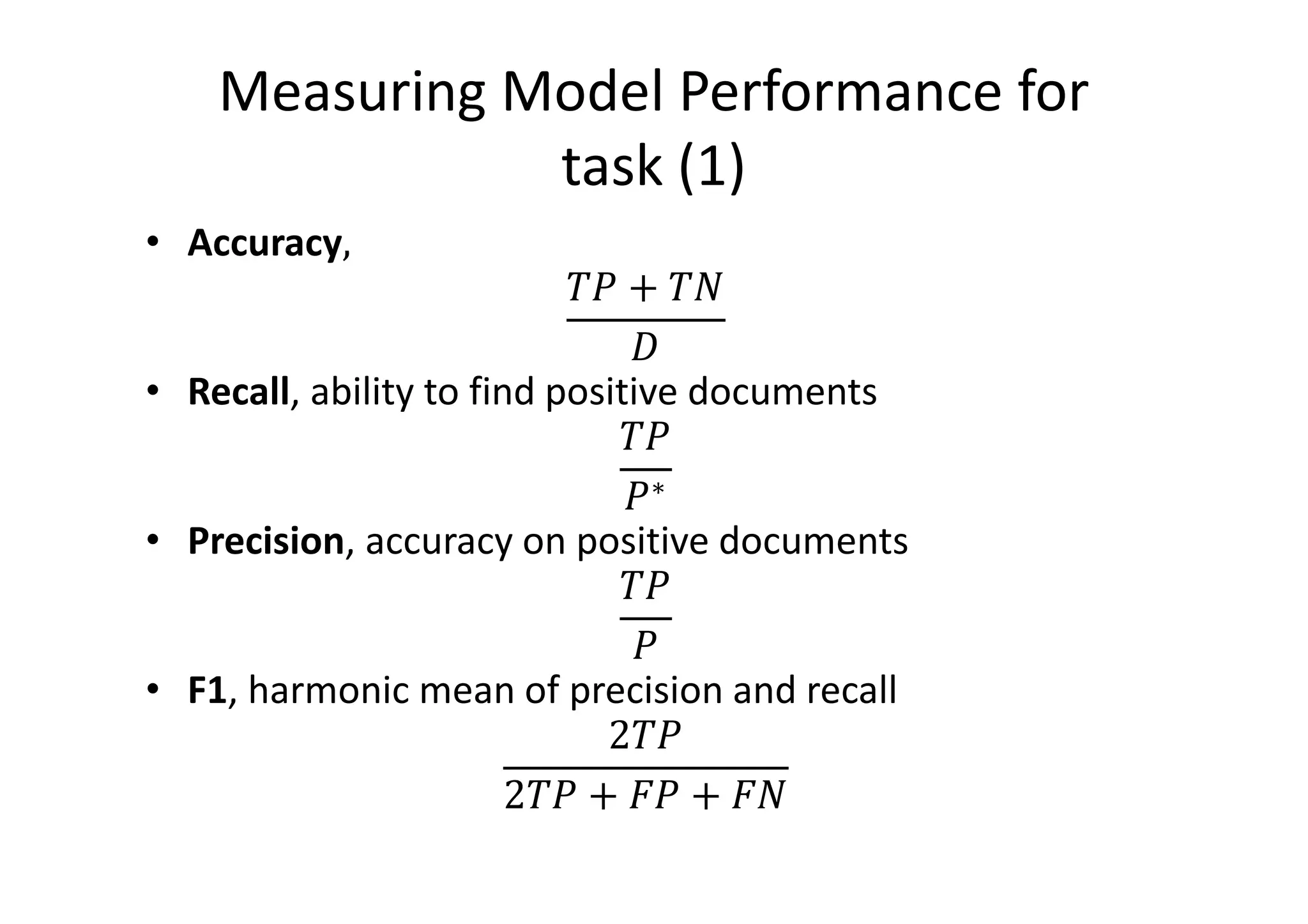

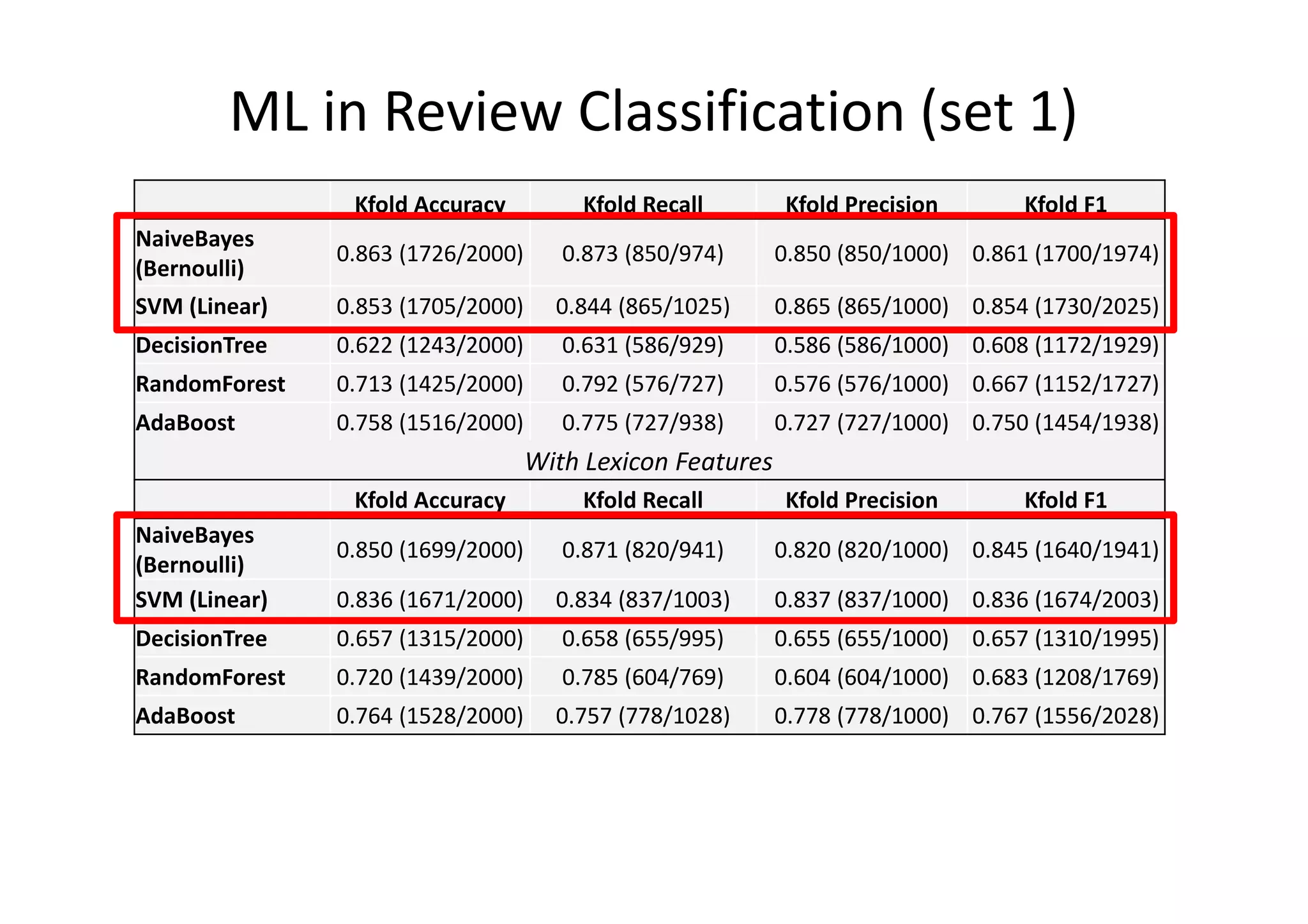

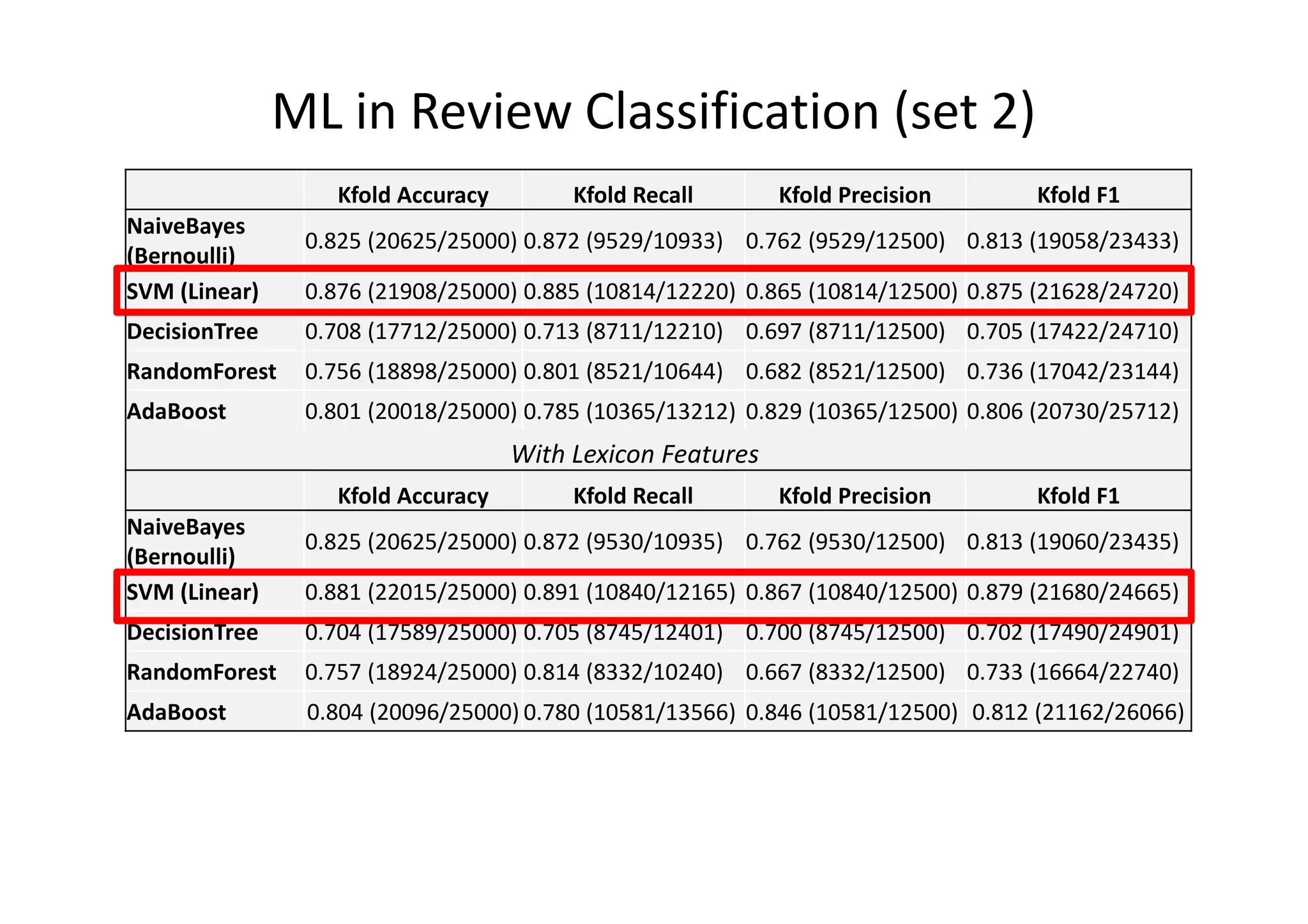

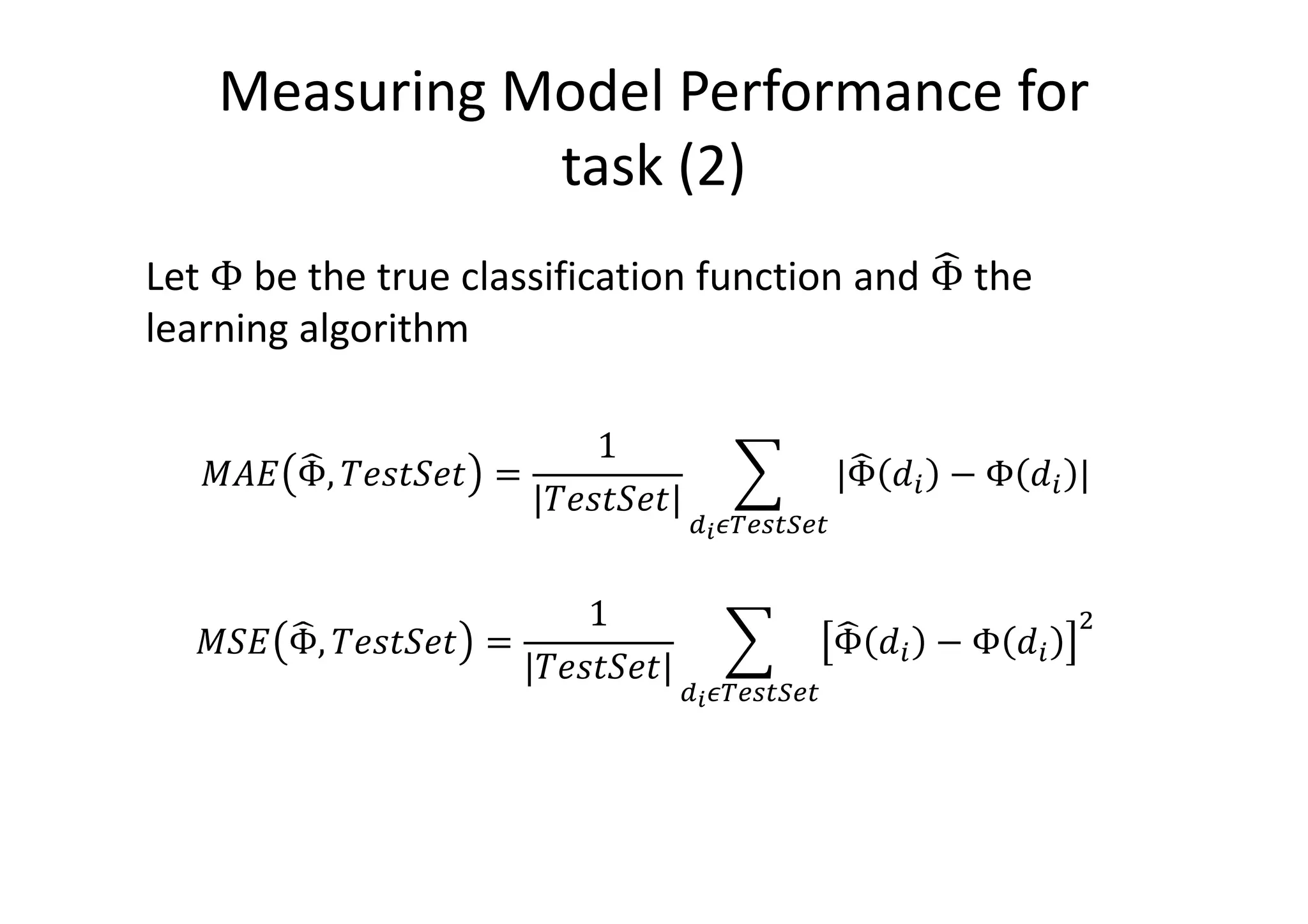

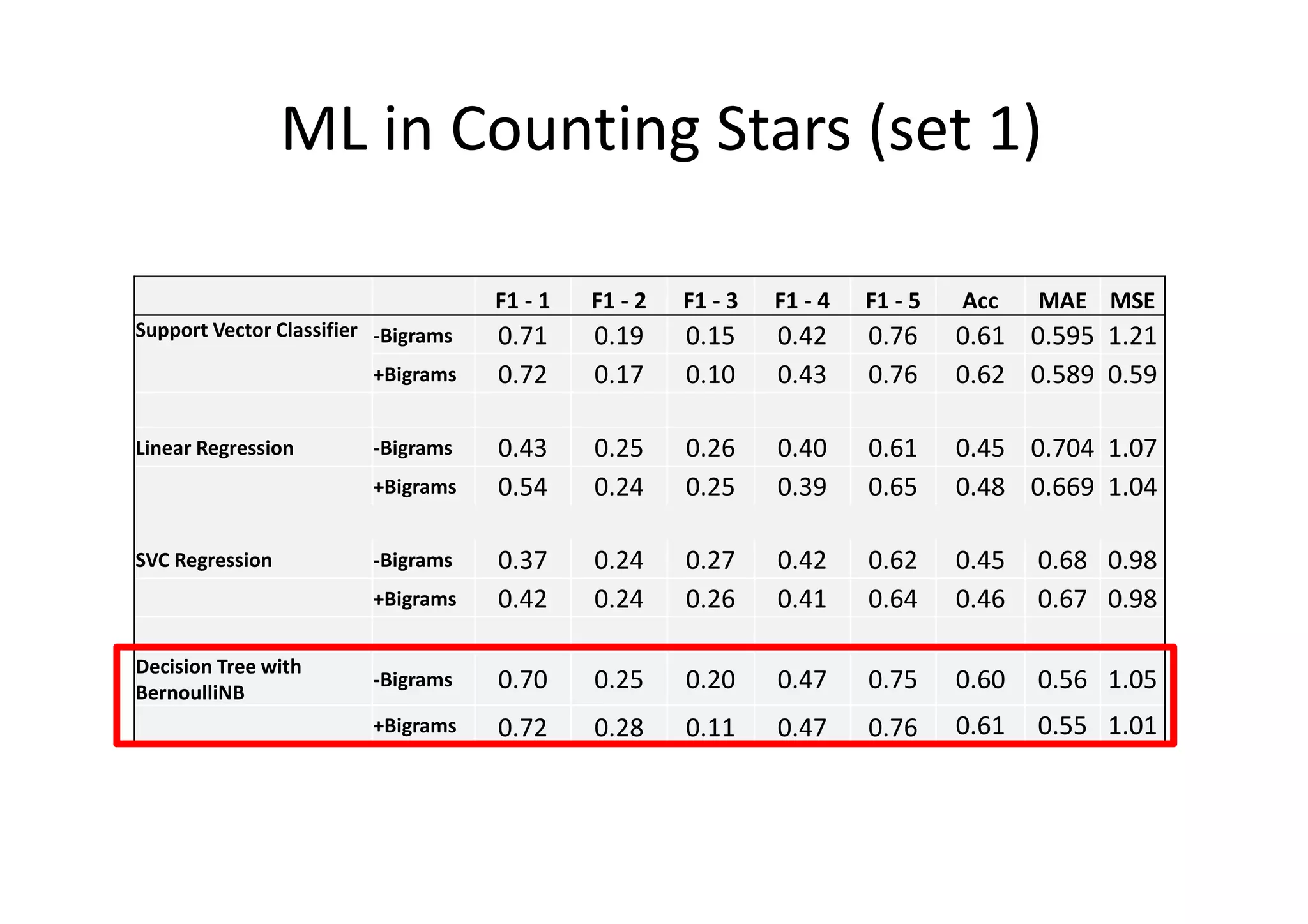

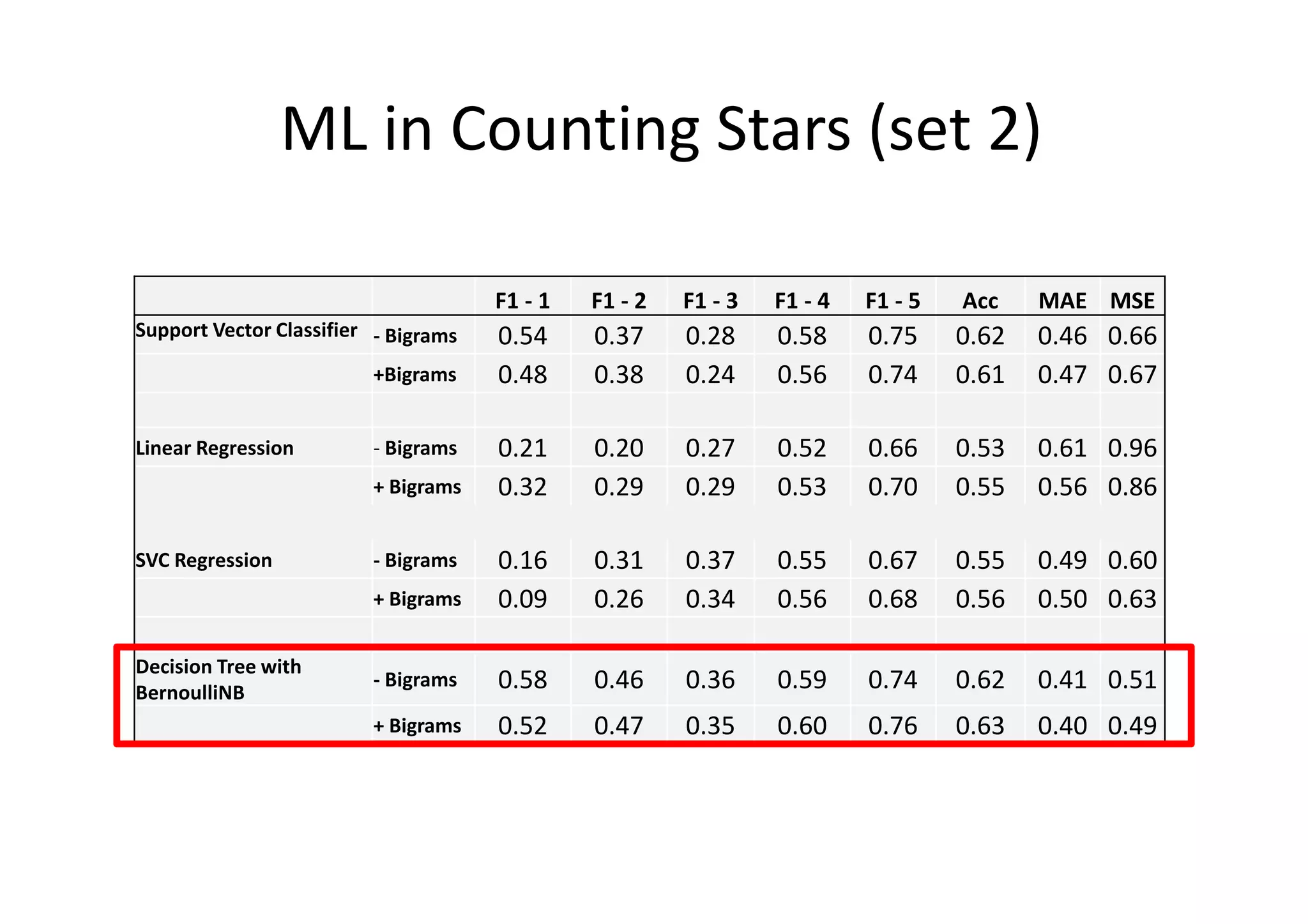

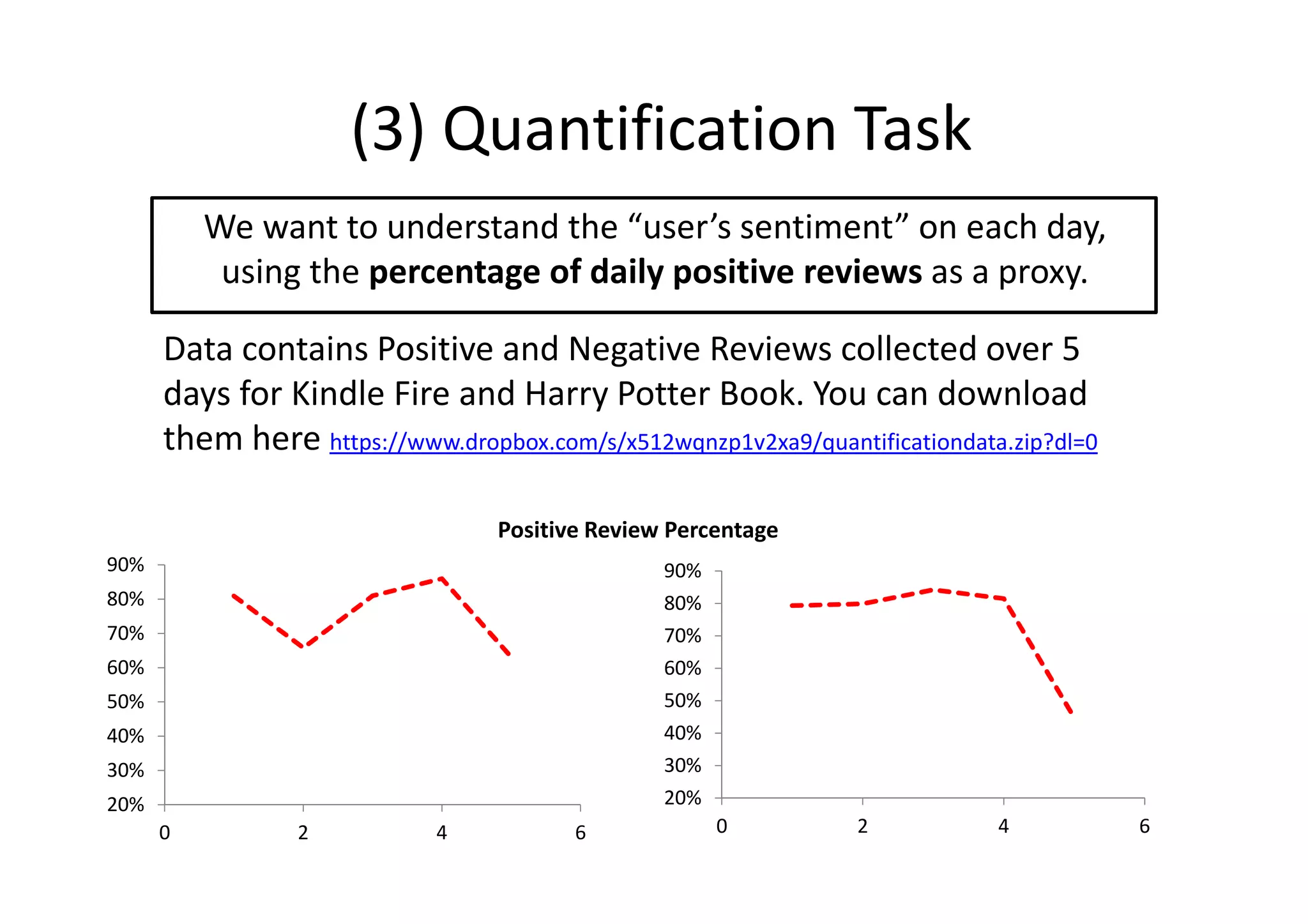

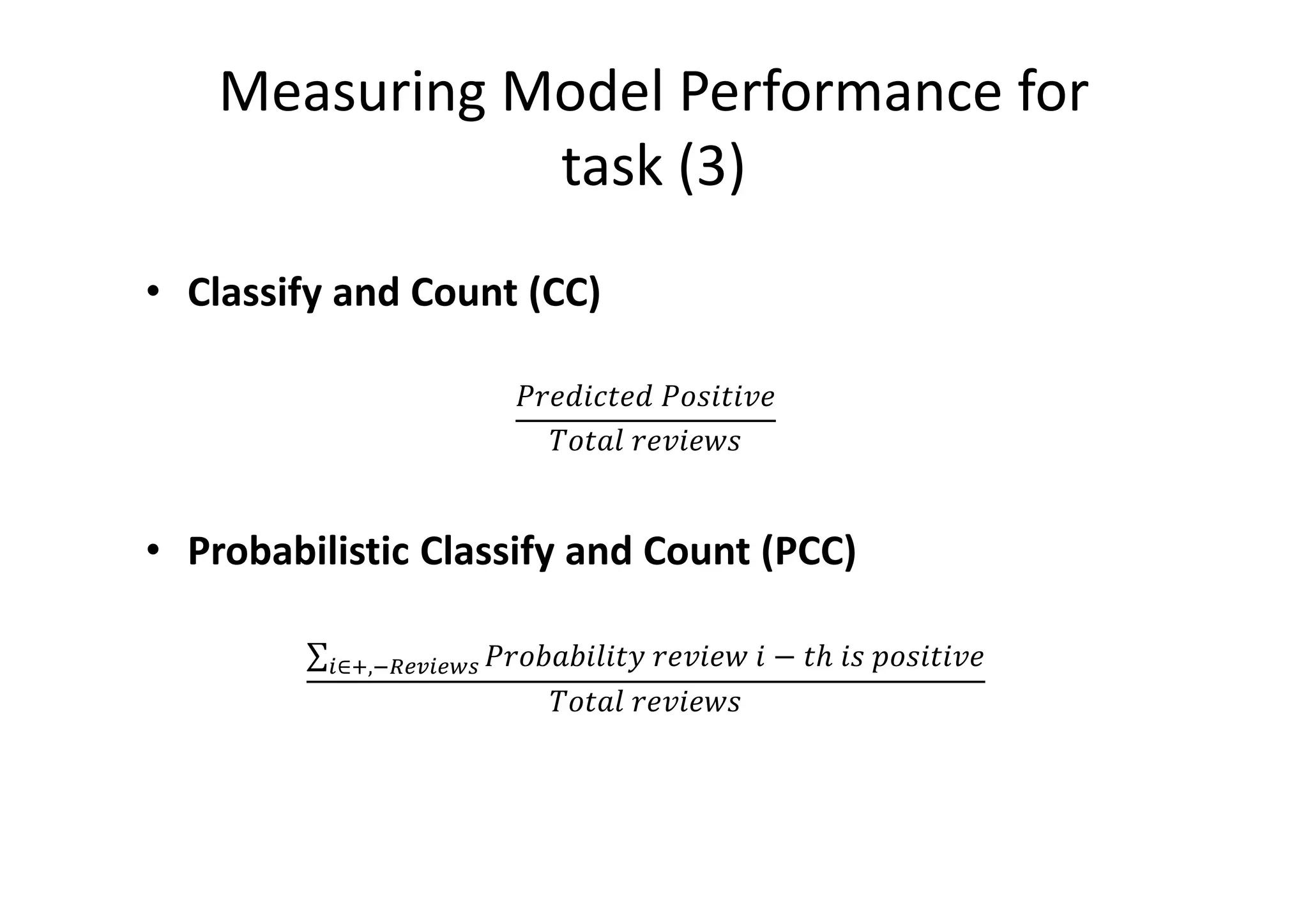

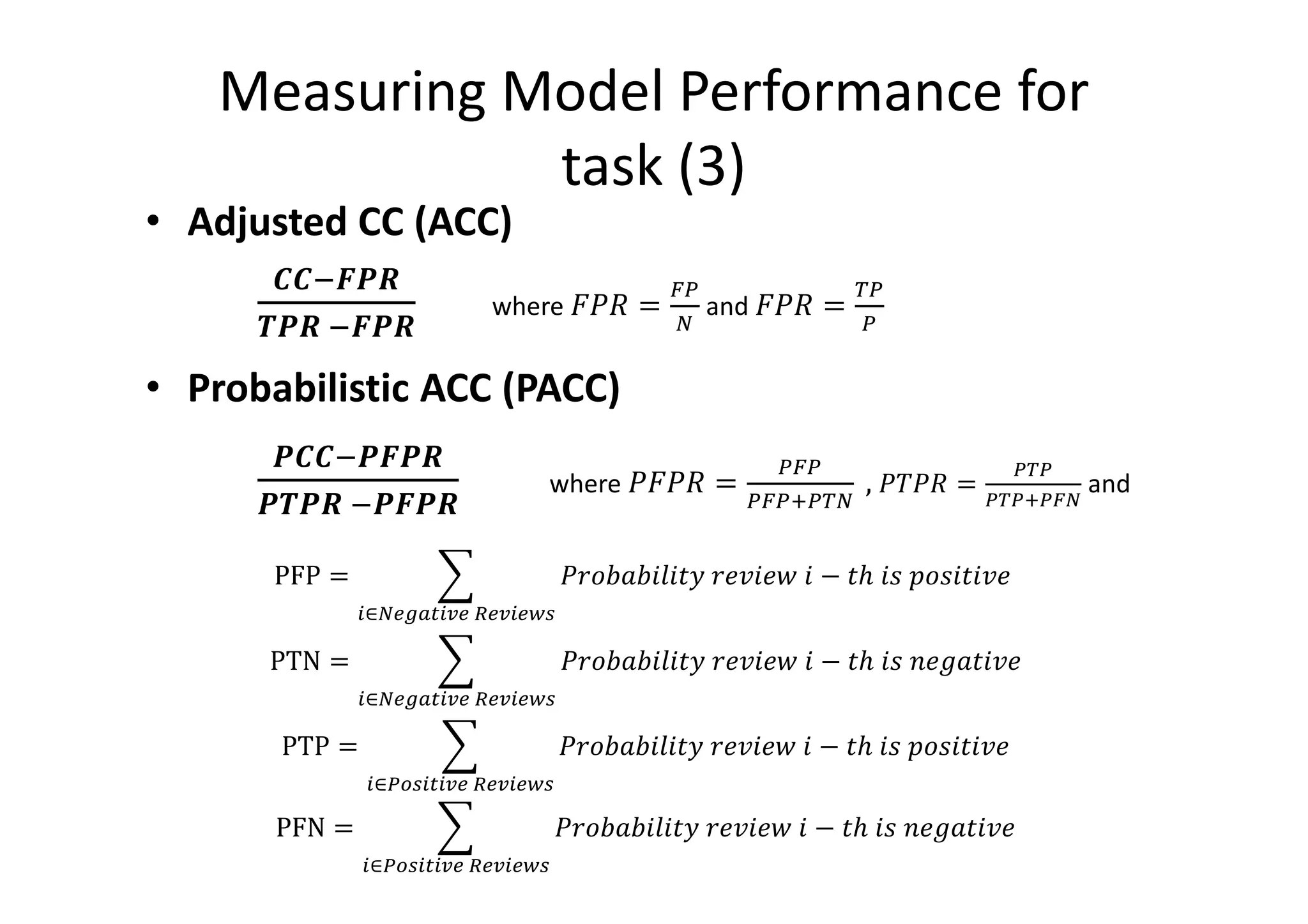

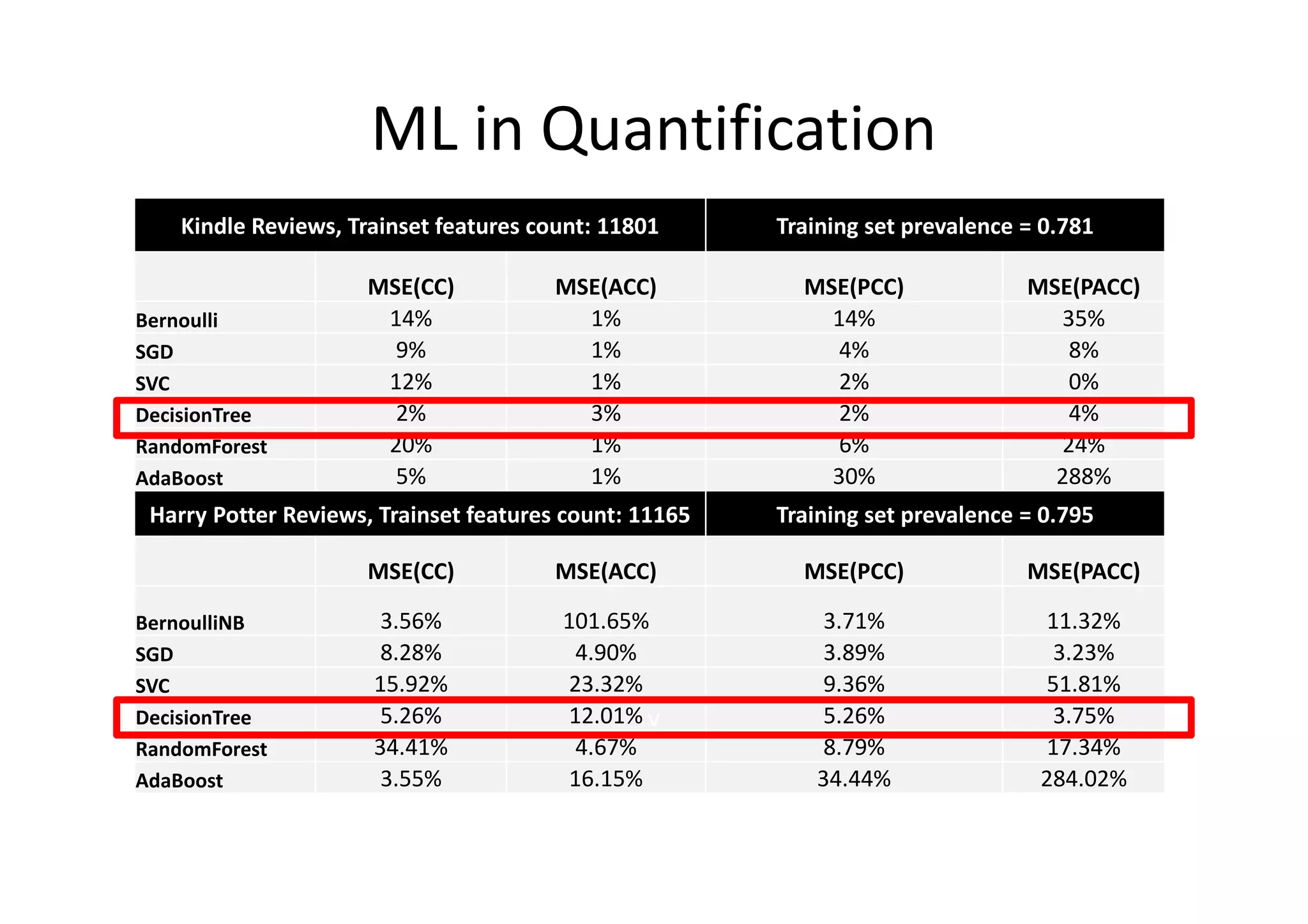

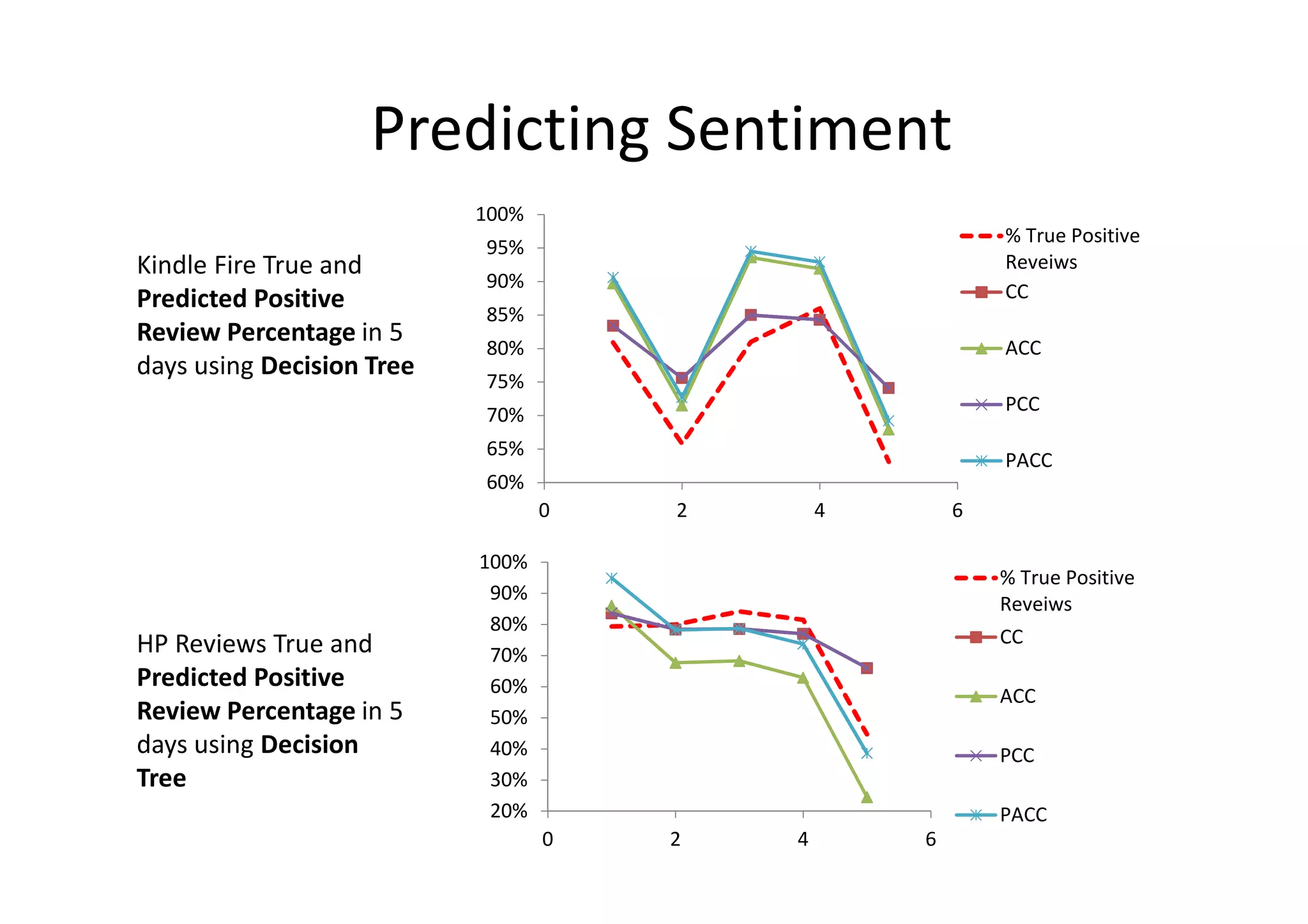

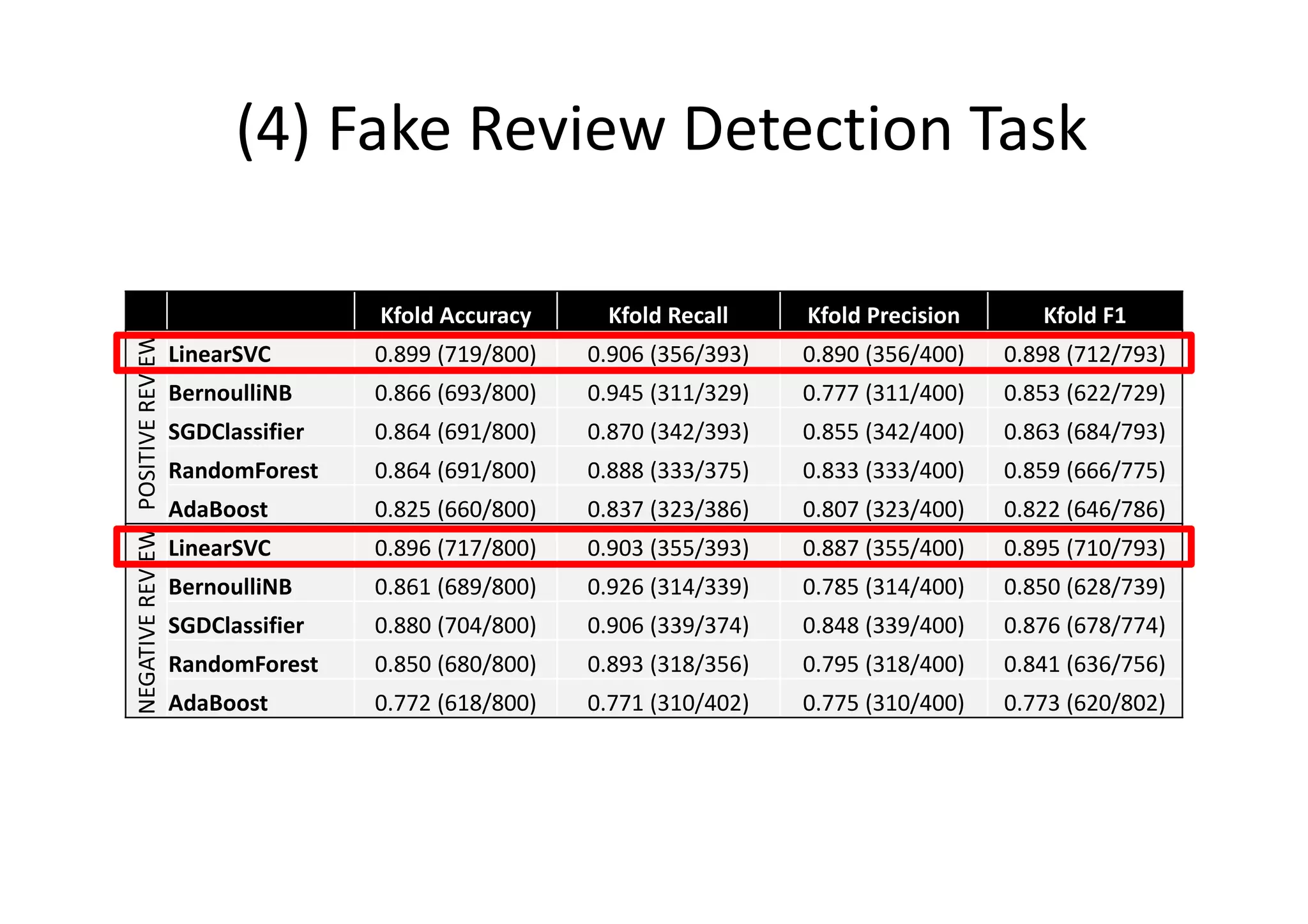

The document details a sentiment analysis and opinion mining project comparing various machine learning algorithms for tasks such as review classification, star prediction, sentiment quantification, and fake review detection. It employs models like Naïve Bayes, SVM, Decision Trees, and Random Forest, analyzing their performance through metrics such as accuracy, precision, and recall across different datasets. Additionally, it provides data sources for movie and product reviews used in the analyses.
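As a rough illustration of the metrics named above, the sketch below computes accuracy, precision, and recall for a binary review classifier (1 = positive review, 0 = negative). It is not taken from the project itself: the function name `binary_metrics` and the label lists are hypothetical and do not reflect any reported result.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                   positive: int = 1) -> Dict[str, float]:
    """Compute accuracy, precision, and recall for binary labels.

    Hypothetical helper for illustration; a ratio with a zero
    denominator is reported as 0.0 rather than raising an error.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    total = len(pairs)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Made-up labels for eight reviews (assumption, not project data).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Running the same function on the predictions of each model (for example Naïve Bayes, SVM, Decision Trees, and Random Forest) over a shared test set would give directly comparable numbers, which is the kind of comparison the project describes.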