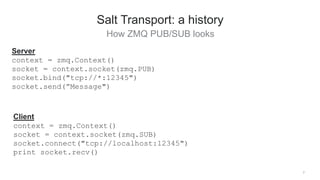

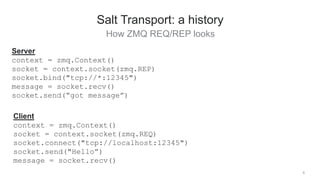

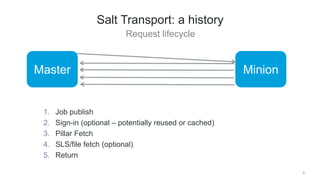

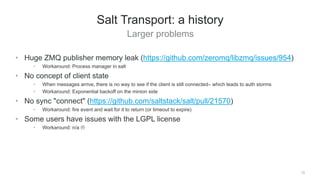

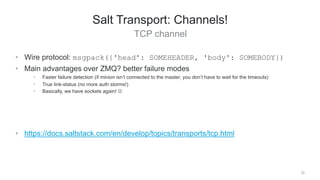

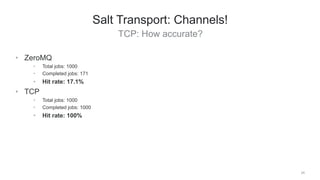

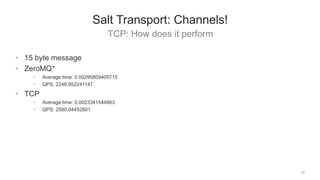

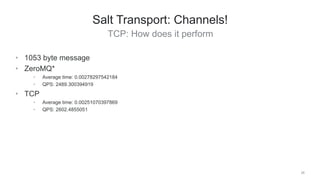

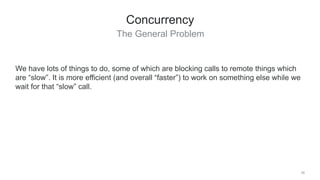

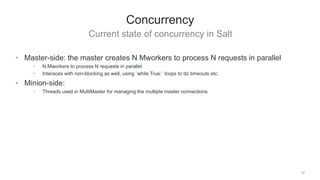

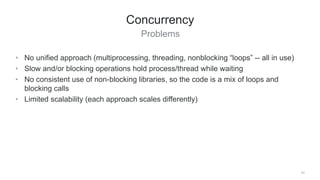

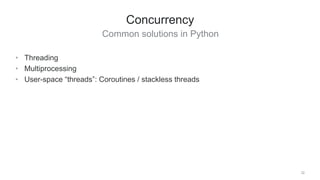

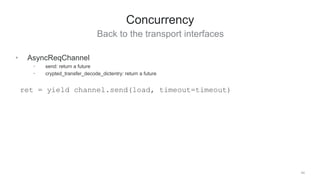

This talk covers improvements to Salt's transport layer, including the move from a ZeroMQ-only design to pluggable transports such as RAET and a newer TCP-based transport, aimed at better performance and scalability. It addresses historical problems with message loss and memory leaks and the need for modular transports, then turns to concurrency: how requests are handled within the system. It argues that coroutines and a sound concurrency model are needed to extend Salt's capabilities, and suggests future enhancements to transport and concurrency management.

![33

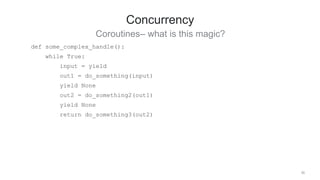

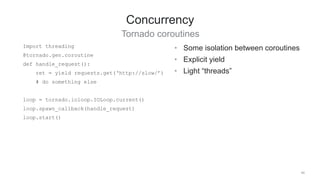

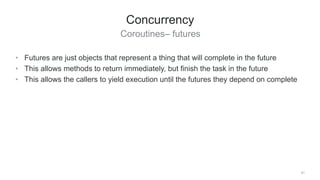

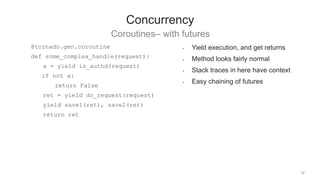

Concurrency

Threading

• Some isolation between threads

• Pre-emptive scheduling

Import threading

def handle_request():

ret = requests.get(‘http://slowthing/’)

# do something else

threads = []

for x in xrange(0, NUM)REQUESTS):

t = threading.Thread(target=handle_request)

t.start()

threads.append(t)

for t in threads:

t.join()](https://image.slidesharecdn.com/saltstacktransportandconcurrency-160422201527/85/Saltconf-2016-Salt-stack-transport-and-concurrency-33-320.jpg)
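The threading pattern on this slide can be tried without a network. The following is a minimal sketch, not the speaker's code: `slow_call` is a hypothetical stand-in for `requests.get('http://slowthing/')` that only sleeps, and `NUM_REQUESTS` and `DELAY_SECONDS` are assumed values. Because the waits overlap, the total time should be close to one delay rather than the sum of all of them.

```python
import threading
import time

NUM_REQUESTS = 5      # assumed value; the slide leaves it undefined
DELAY_SECONDS = 0.1   # assumed latency of the slow service

results = []
results_lock = threading.Lock()


def slow_call(request_id):
    """Hypothetical stand-in for requests.get('http://slowthing/')."""
    time.sleep(DELAY_SECONDS)
    return "response-%d" % request_id


def handle_request(request_id):
    ret = slow_call(request_id)
    # Threads share memory, so guard the shared list with a lock.
    with results_lock:
        results.append(ret)


def run_threads():
    threads = []
    for i in range(NUM_REQUESTS):
        t = threading.Thread(target=handle_request, args=(i,))
        t.start()
        threads.append(t)
    # Wait for every thread to finish.
    for t in threads:
        t.join()


start = time.perf_counter()
run_threads()
elapsed = time.perf_counter() - start
print("%d responses in %.2fs" % (len(results), elapsed))
```

The lock is there because "some isolation" is not full isolation: every thread appends to the same list object.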

![Slide 34: Concurrency (Multiprocessing)](https://image.slidesharecdn.com/saltstacktransportandconcurrency-160422201527/85/Saltconf-2016-Salt-stack-transport-and-concurrency-34-320.jpg)

Concurrency

Multiprocessing

• Complete isolation

• Pre-emptive scheduling

    import multiprocessing

    import requests

    def handle():
        ret = requests.get('http://slowthing/')
        # do something else

    # start one process per request
    processes = []
    for x in range(NUM_REQUESTS):
        p = multiprocessing.Process(target=handle)
        p.start()
        processes.append(p)

    # wait for every process to finish
    for p in processes:
        p.join()
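"Complete isolation" can be made visible with a small experiment. This is a sketch under stated assumptions, not code from the talk: `counter`, `run_processes`, and `NUM_REQUESTS` are hypothetical names and values, and the `fork` start method is chosen so the example runs as a plain script on Linux (on Windows, `spawn` with an `if __name__ == "__main__":` guard would be needed instead). Each child increments its own copy of `counter`; the parent's copy never changes, and results come back only through an explicit queue.

```python
import multiprocessing
import os

NUM_REQUESTS = 4   # assumed value; the slide leaves it undefined
counter = 0        # module-level state used to demonstrate isolation


def handle(request_id, queue):
    global counter
    counter += 1   # modifies this child's private copy only
    queue.put((request_id, os.getpid(), counter))


def run_processes():
    # Assumption: a POSIX system where the "fork" start method exists.
    ctx = multiprocessing.get_context("fork")
    queue = ctx.Queue()
    processes = []
    for i in range(NUM_REQUESTS):
        p = ctx.Process(target=handle, args=(i, queue))
        p.start()
        processes.append(p)
    # Drain the queue before joining so no child blocks on a full pipe.
    results = [queue.get(timeout=10) for _ in processes]
    for p in processes:
        p.join()
    return sorted(results)


if __name__ == "__main__":
    for request_id, pid, child_counter in run_processes():
        print(request_id, pid, child_counter)
    print("parent counter:", counter)
```

The price of that isolation is that anything the parent needs back has to be sent explicitly, here through the queue.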

![Slide 49: ContextDict](https://image.slidesharecdn.com/saltstacktransportandconcurrency-160422201527/85/Saltconf-2016-Salt-stack-transport-and-concurrency-49-320.jpg)

ContextDict

Copy-on-write thread/coroutine specific dict

• Works just like a dict

• Exposes a clone() method, which creates a `ChildContextDict`, a thread/coroutine-local copy

• With tornado's StackContext, we switch the backing dict of the parent with the child using a context manager

    import tornado.stack_context
    from salt.utils.context import ContextDict

    cd = ContextDict(foo='bar')
    print(cd['foo'])  # will be bar
    with tornado.stack_context.StackContext(cd.clone):
        print(cd['foo'])  # will be bar
        cd['foo'] = 'baz'
        print(cd['foo'])  # will be baz
    print(cd['foo'])  # will be bar

More examples: https://github.com/saltstack/salt/blob/develop/tests/unit/context_test.py
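To show the behaviour described on this slide without Salt or Tornado installed, here is a minimal sketch of the same idea. It is not Salt's implementation: `SketchContextDict` is a hypothetical class, it swaps in Python 3's `contextvars` where Salt uses Tornado's `StackContext`, and it copies the data eagerly when `clone()` is entered rather than lazily on first write.

```python
import contextlib
import contextvars
from collections.abc import MutableMapping


class SketchContextDict(MutableMapping):
    """Hypothetical stand-in for Salt's ContextDict (not its real code)."""

    def __init__(self, **data):
        self._global_data = dict(data)
        # Holds the child copy for the current context, or None.
        self._local = contextvars.ContextVar("local_data", default=None)

    def _active(self):
        """Return the child copy if one is active, else the shared dict."""
        local = self._local.get()
        return self._global_data if local is None else local

    @contextlib.contextmanager
    def clone(self, **overrides):
        child = dict(self._active())   # eager copy of the current data
        child.update(overrides)
        token = self._local.set(child)
        try:
            yield self
        finally:
            self._local.reset(token)   # switch back to the parent data

    def __getitem__(self, key):
        return self._active()[key]

    def __setitem__(self, key, value):
        self._active()[key] = value

    def __delitem__(self, key):
        del self._active()[key]

    def __iter__(self):
        return iter(self._active())

    def __len__(self):
        return len(self._active())


cd = SketchContextDict(foo="bar")
seen = [cd["foo"]]
with cd.clone():
    seen.append(cd["foo"])
    cd["foo"] = "baz"      # written to the child copy only
    seen.append(cd["foo"])
seen.append(cd["foo"])     # parent value is untouched
print(seen)                # ['bar', 'bar', 'baz', 'bar']
```

Callers keep using the same `cd` object throughout; only the backing data is switched, which is what lets existing code that reads a shared dict become context-local without changes.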

![Slide 52: zk_concurrency](https://image.slidesharecdn.com/saltstacktransportandconcurrency-160422201527/85/Saltconf-2016-Salt-stack-transport-and-concurrency-52-320.jpg)

zk_concurrency

Concurrency controls for state execution

    acquire_lock:
      zk_concurrency.lock:
        - name: /trafficserver
        - zk_hosts: 'zookeeper:2181'
        - max_concurrency: 4
        - prereq:
          - service: trafficserver

    trafficserver:
      service.running: []

    release_lock:
      zk_concurrency.unlock:
        - name: /trafficserver
        - require:
          - service: trafficserver
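The effect of `max_concurrency: 4` can be illustrated with a local analogy. This is only a sketch: it uses an in-process `threading.BoundedSemaphore` where the real state coordinates separate minions through ZooKeeper, and `restart_service`, `NUM_MINIONS`, and the sleep duration are hypothetical. Entering the semaphore plays the role of `acquire_lock`, and leaving it plays the role of `release_lock`.

```python
import threading
import time

MAX_CONCURRENCY = 4   # mirrors max_concurrency in the state above
NUM_MINIONS = 12      # assumed number of minions running the state

semaphore = threading.BoundedSemaphore(MAX_CONCURRENCY)
state_lock = threading.Lock()
active = 0            # restarts currently in progress
peak_active = 0       # highest number of simultaneous restarts seen


def restart_service(minion_id):
    global active, peak_active
    with semaphore:                  # analogous to acquire_lock
        with state_lock:
            active += 1
            peak_active = max(peak_active, active)
        time.sleep(0.05)             # stand-in for the service restart
        with state_lock:
            active -= 1
    # Leaving the with-block is analogous to release_lock.


threads = [
    threading.Thread(target=restart_service, args=(i,))
    for i in range(NUM_MINIONS)
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print("peak concurrent restarts:", peak_active)
```

However many workers are started, the peak never exceeds `MAX_CONCURRENCY`, which is the guarantee the state provides for rolling restarts across minions.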