This document reviews various linear Gaussian models, emphasizing their relationships and applications, including factor analysis, PCA, Gaussian mixtures, and hidden Markov models. It discusses the basic inference and learning problems associated with these models, along with the expectation-maximization algorithm for training. The paper concludes by critiquing the models' efficiency and suggesting areas for future research.

![The Basic (Generative) Model

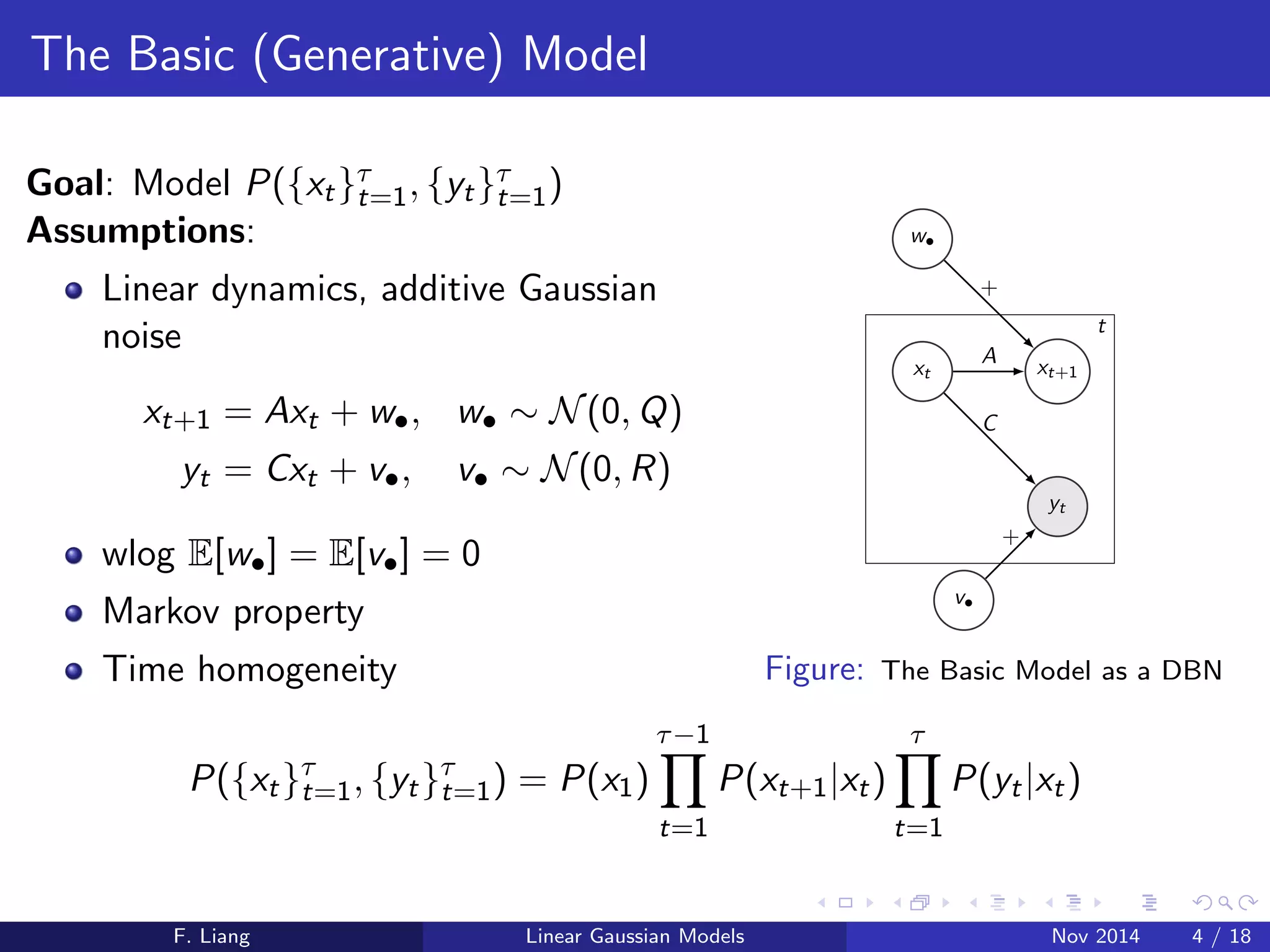

Goal: Model P(fxtgt

=1; fytgt

=1)

Assumptions:

Linear dynamics, additive Gaussian

noise

xt+1 = Axt + w; w N(0;Q)

yt = Cxt + v; v N(0; R)

wlog E[w] = E[v] = 0

Markov property

Time homogeneity

w

+

xt xt+1

yt

v

A

C

+

t

Figure: The Basic Model as a DBN

P(fxtgt

=1; fytgt

=1) = P(x1)

Y1

t=1

P(xt+1jxt )

Y

t=1

P(yt jxt )

F. Liang Linear Gaussian Models Nov 2014 4 / 18](https://image.slidesharecdn.com/roweis-1999-141111235734-conversion-gate02/75/A-Unifying-Review-of-Gaussian-Linear-Models-Roweis-1999-5-2048.jpg)

![Discrete-State Modeling: Winner-Takes-All (WTA)

Non-linearity

Assume: x discretely supported,

R

7!

P

Winner-Takes-All Non-Linearity: WTA[x] = ei where i = arg maxj xj

xt+1 = WTA[Axt + w] w N(;Q)

yt = Cxt + v v N(0; R)

x WTA[N(; )] de](https://image.slidesharecdn.com/roweis-1999-141111235734-conversion-gate02/75/A-Unifying-Review-of-Gaussian-Linear-Models-Roweis-1999-18-2048.jpg)