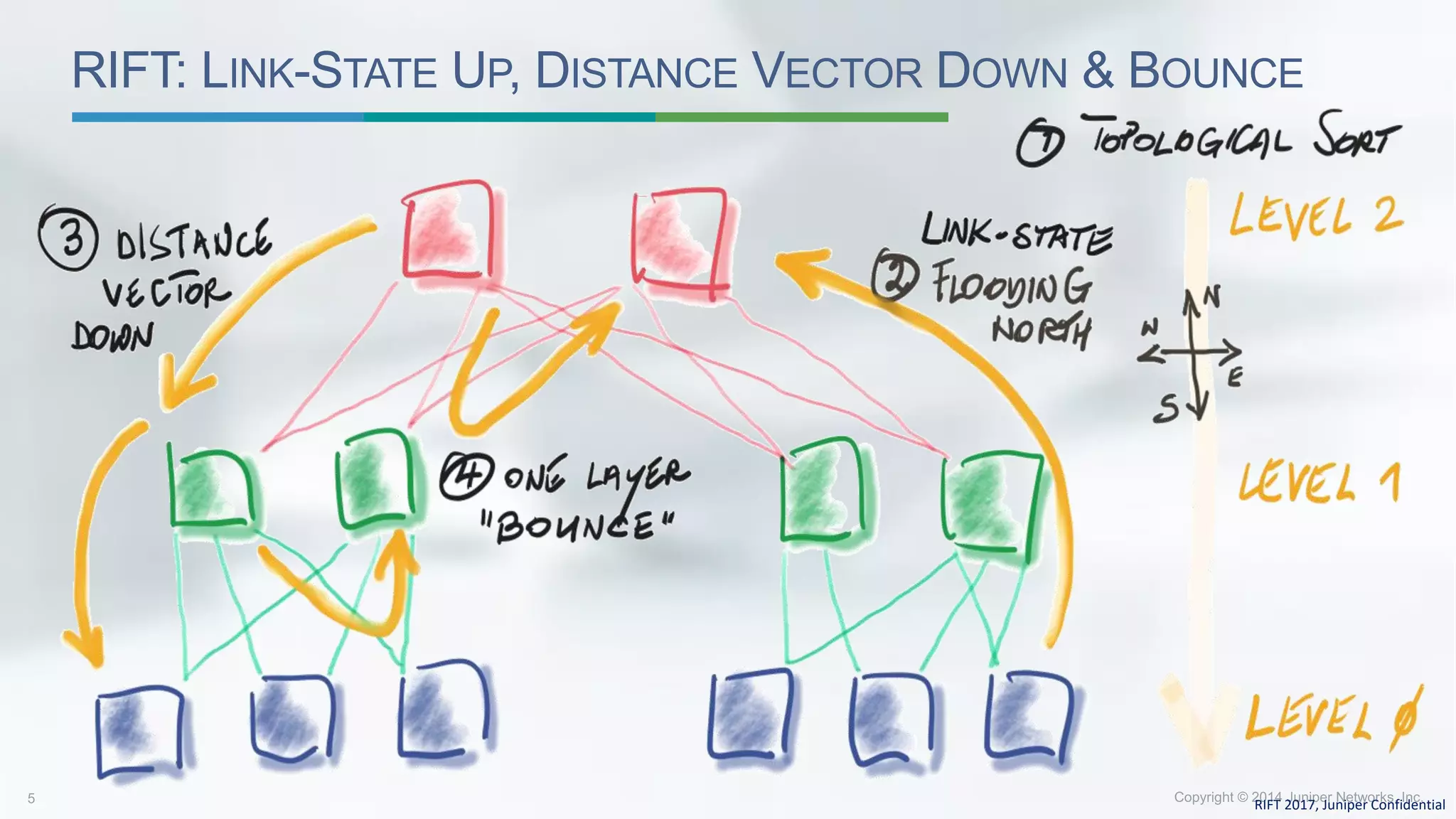

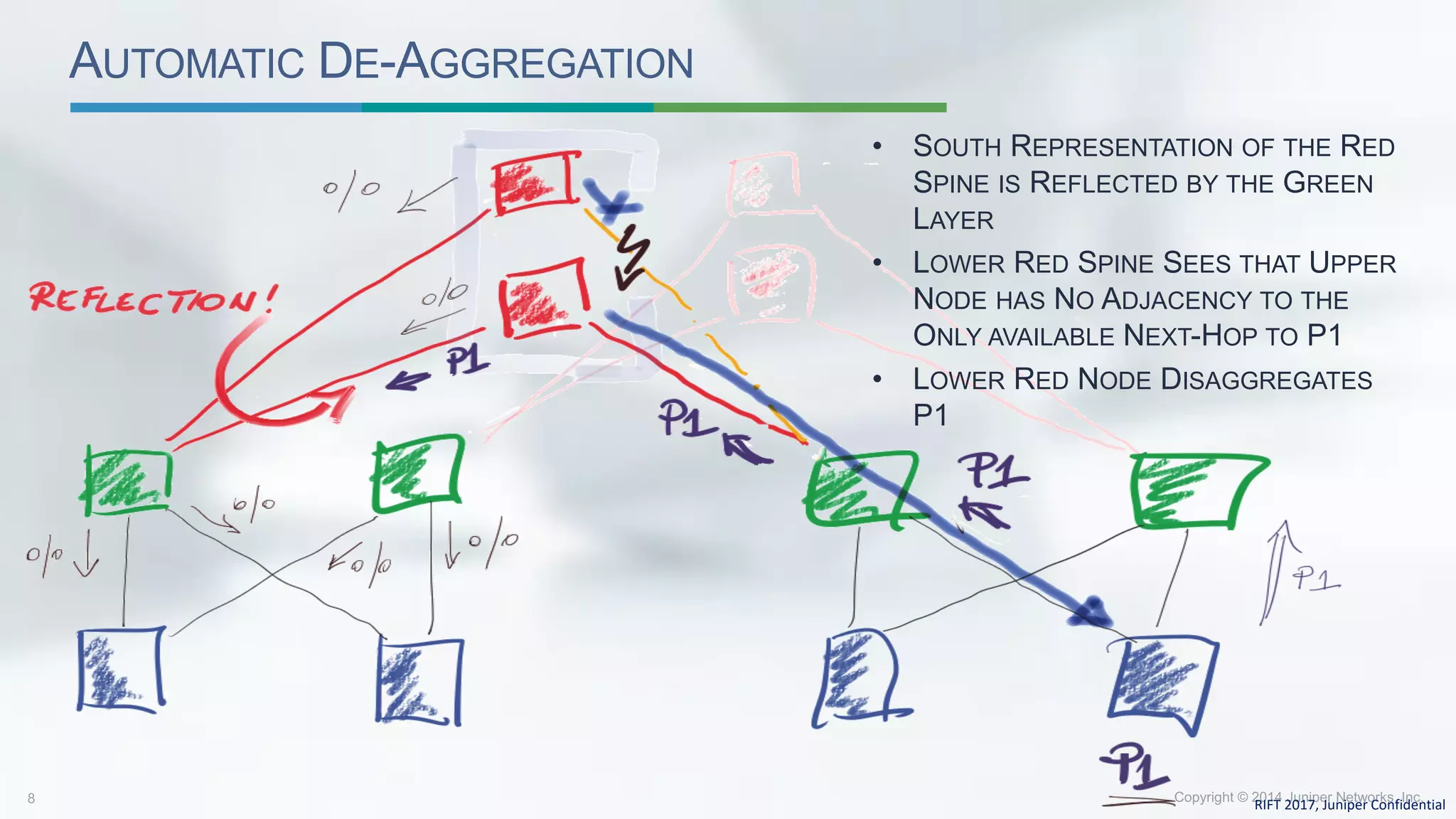

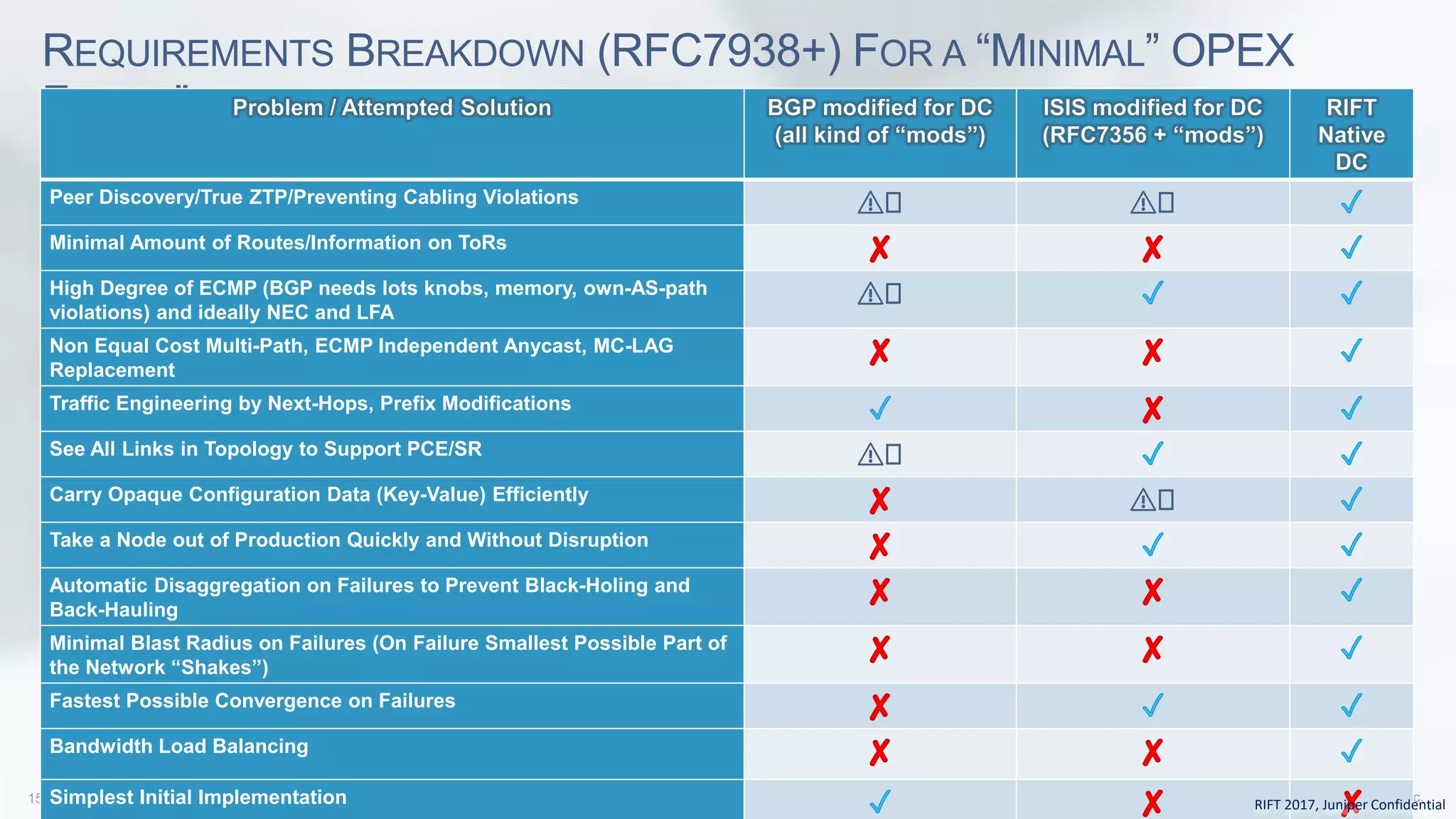

RIFT (Routing in Fat Trees) is a data center routing protocol designed to address the problems of running link-state IGPs or eBGP as the underlay in large-scale data center fabrics. It uses link-state routing northbound, so that upper-tier nodes have full topology information and converge quickly, and distance-vector routing southbound, so that top-of-rack switches typically store and advertise little more than a default route. RIFT also supports automatic route disaggregation to prevent traffic blackholing after link or node failures, fast decommissioning of nodes, and zero-touch provisioning. It has been standardized through the IETF and implemented by Juniper and in open-source software.
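
The north/south split and automatic disaggregation can be illustrated with a toy model. The sketch below is not RIFT's actual algorithm or wire format: it assumes a two-tier fabric, treats "link-state northbound" as each spine simply knowing which leaves it is adjacent to, and uses hypothetical names (`southbound_adverts`, `leaf_table`, the `S1`/`L1` node labels, and the example prefixes) chosen only for illustration.

```python
# Toy model of RIFT-style routing in a two-tier fabric (illustrative only).
SPINES = ["S1", "S2"]
LEAF_PREFIXES = {  # hypothetical prefixes attached to each leaf
    "L1": "10.0.1.0/24",
    "L2": "10.0.2.0/24",
    "L3": "10.0.3.0/24",
}
DEFAULT = "0.0.0.0/0"


def southbound_adverts(spine, links):
    """Prefixes a spine advertises down to its leaves.

    Normally only a default route. If some other spine has lost its
    adjacency to a leaf this spine can still reach, the more specific
    prefix is advertised as well (a simplified positive disaggregation).
    """
    reach = {s: {leaf for (sp, leaf) in links if sp == s} for s in SPINES}
    adverts = {DEFAULT}
    for leaf in reach[spine]:
        if any(leaf not in reach[peer] for peer in SPINES if peer != spine):
            adverts.add(LEAF_PREFIXES[leaf])
    return adverts


def leaf_table(leaf, links):
    """Routing table a leaf builds from its spines' southbound adverts."""
    table = {}
    for spine in sorted(SPINES):
        if (spine, leaf) not in links:
            continue  # no adjacency, so nothing is learned from this spine
        for prefix in southbound_adverts(spine, links):
            if prefix == LEAF_PREFIXES[leaf]:
                continue  # the leaf's own prefix is locally attached
            table.setdefault(prefix, []).append(spine)
    return table


# Every spine connected to every leaf.
full_mesh = {(s, l) for s in SPINES for l in LEAF_PREFIXES}
# Same fabric after the S1-L3 link fails.
degraded = full_mesh - {("S1", "L3")}

healthy_l1 = leaf_table("L1", full_mesh)
degraded_l1 = leaf_table("L1", degraded)
```

In the healthy fabric, `L1` holds only a default route load-balanced across both spines. Once the `S1`-`L3` link fails, `S2` additionally advertises `L3`'s prefix, so `L1` stops sending that traffic toward `S1`, where it would otherwise be blackholed.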